More on Steemify development progress

Since I started working on Steemify somewhere in September 2017, the project has grown significantly in complexity. Some things I was able to get away with by using a simple implementation no longer suffice. One of the things I'm currently working on is the list of notifications.

As there may be many hundreds or even thousands of notifications for a user, it would not be wise to load those into the app all at once. Not only would this have a serious impact on performance, it might also use a significant amount of data even when you only want to view, let's say, the 10 most recent notifications. A commonly used technique to tackle this problem is called pagination. Basically, you split up the dataset into smaller chunks, called pages. The current version of Steemify also does this, but in a basic way.
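To make the idea concrete, here is a minimal sketch of this basic, page-number-based pagination. It is not the actual Steemify code; the `get_page` helper, the page size and the notification ids are all made up for illustration.

```python
def get_page(items, page, page_size=5):
    """Return one page of `items`, which is assumed to be sorted newest first."""
    start = page * page_size
    return items[start:start + page_size]

# Hypothetical notification ids, where a higher number means a newer item.
notifications = list(range(12, 0, -1))  # [12, 11, ..., 1]

first_page = get_page(notifications, 0)   # the 5 newest items
second_page = get_page(notifications, 1)  # the next 5
last_page = get_page(notifications, 2)    # whatever is left over
```

The client only ever asks for one page at a time, so viewing the most recent notifications never requires downloading the whole list.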

When working with constantly changing data, this simple approach is not going to work well. You might get new notifications while loading more pages, which would cause duplicates to appear in the list. On the other hand, some notifications might get deleted while you are browsing the list, causing some to not appear at all. Luckily for me, smart minds at companies like Twitter and Facebook have already tackled this problem.

Pagination problem explained

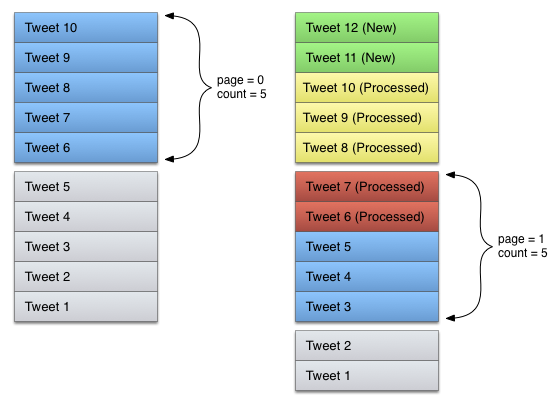

If we take Twitter as an example, consider a list of reverse-chronologically ordered (higher number = newer) tweets. We can load the pages, consisting of 5 items each, one by one:

Now, if new items are added after we have already loaded page 0, and we then request page 1 from the server, we'll get into trouble:

As you can see, items 7 and 6 are now loaded twice. We could of course filter these out of the list inside our app, and that would work pretty well as long as the number of duplicates is low compared to the page size. Consider what would happen if there were 5 or more new items: the server would return all the items from the previous page again. If the rate of adding new items is high, we might even get into a situation where we are unable to load older items!
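The duplicate problem is easy to reproduce in a few lines. This is only a simulation under assumed numbers (a feed of ids 10 down to 1, a page size of 5, and two new items arriving between requests); `get_page` is a hypothetical stand-in for the server.

```python
PAGE_SIZE = 5

def get_page(feed, page):
    """Page-number based lookup on a feed that is sorted newest first."""
    start = page * PAGE_SIZE
    return feed[start:start + PAGE_SIZE]

feed = list(range(10, 0, -1))  # [10, 9, ..., 1]
page0 = get_page(feed, 0)      # the client loads the first page

# Two new items arrive on the server before the client asks for page 1.
feed = [12, 11] + feed

page1 = get_page(feed, 1)      # everything has shifted down by two places
duplicates = [item for item in page1 if item in page0]
```

Here `duplicates` ends up holding items 7 and 6, because the two new items pushed them from page 0 onto page 1.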

The/a solution

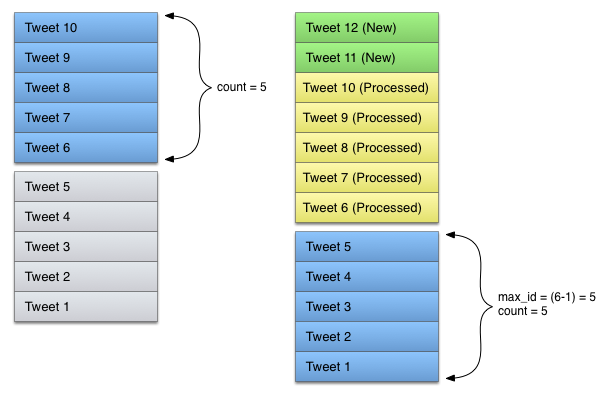

A good solution to this problem is to use a so-called cursor. When fetching a page from the server, we tell it to only return items that are older than our last processed item:

In the above image, the max_id parameter is used to specify at what item we want our next page to begin. This is great, because now the client does not have to worry about getting duplicates. It also solves the problem of items getting deleted (although the client might still show items no longer existing on the server, until it reloads from the start again).
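A rough sketch of the cursor approach could look like this. It is not how the Steemify server is implemented. The `get_page` function is hypothetical, and it assumes that `max_id` is inclusive, so the client passes the id of its oldest loaded item minus one; a real API may define the parameter differently.

```python
PAGE_SIZE = 5

def get_page(feed, max_id=None):
    """Return up to PAGE_SIZE items with id <= max_id, newest first.

    Assumption: max_id is inclusive. With max_id=None we start at the top.
    """
    if max_id is not None:
        feed = [item for item in feed if item <= max_id]
    return feed[:PAGE_SIZE]

feed = list(range(10, 0, -1))  # [10, 9, ..., 1]
page0 = get_page(feed)         # the client loads the first page

# Meanwhile, two new items arrive and item 8 gets deleted on the server.
feed = [12, 11] + feed
feed.remove(8)

# Ask only for items older than the oldest one we already have.
page1 = get_page(feed, max_id=page0[-1] - 1)
loaded = page0 + page1
```

Neither the new items nor the deletion affect `page1`. Item 8 is still in `loaded`, though, which is the stale-item caveat mentioned above: the client keeps showing it until it reloads from the start.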

Final thoughts

Using a cursor shifts most of the complex logic to the server, so clients do not have to worry about duplicate or missing items. Also, there are a few other tricks to make this even more efficient, which will be implemented on the Steemify servers as well.

My fellow blockbrothers and I run a witness as @blockbrothers. If you want to support us, we would appreciate your vote here. Or you could choose to set us as your proxy.

Vote for @blockbrothers via SteemConnect

Set blockbrothers as your proxy via SteemConnect

As blockbrothers, we build Steemify, a notification app for your Steemit account for iOS.

Get it Here:

@bennierex If there is anyone that can make it Work Right it would be You. Thank You for making STEEM Better Everyday.......

Thanks!

Sometimes it is difficult to explain why some stuff takes a long time to make, and even more difficult when the changes are not immediately obvious to the users. In this example of the notifications list, most users will not notice anything different, although a lot of work has gone into creating it. I believe this is also a problem the Steemit Inc. team sometimes faces.