Finding Software Vulnerabilities by Fuzzing with American Fuzzy Lop

Finding Software Vulnerabilities by Fuzzing with American Fuzzy Lop

Fuzzing is a software quality technique that aims to find flaws through running an application passing as input a lot of diverse values, possibly random, exploring the handling of unexpected or invalid data. It’s a technique that frequently finds security flaws, by its nature, but is able to find any kind of bugs. Fuzzing is like brute force testing: if you pass all possible input values to an application, you’ll run through all code flows and find all the problems.

In practice, obviously, you can’t run with all possible input combinations. Random values or blind variations rarely will exercise all the possible code flows, so we’ll have to apply some smarts to it. I’ll explain some techniques American Fuzzy Lop, or AFL, uses, as its one of the fuzzers most well-known for its efficiency.

The bunny that gives American Fuzzy Lop its name

AFL is an open source fuzzer, released in November of 2014. Even though traditionally fuzzers treat the binary under test as a black box, not analyzing the code that builds it, AFL has an interesting approach: it uses compile-time instrumentation in order to keep track of code coverage and expose interesting execution paths.

The status screen of AFL

This instrumentation is made through modified GCC or clang compilers, generating binaries that can be analyzed with AFL. This first step is having the source code of the application that is going to be tested, ready to be built by one of those compilers. Then it’s usually as simple as setting an environment variable to point to the instrumenting compiler and run the build as usual. The impact on the runtime performance is minimal.

If you don’t have the source code or your application is built in a language not supported by these compilers, there’s experimental support for runtime instrumentation using QEMU. It works mostly the same, but with a significative impact on performance.

To start fuzzing, you need an application that takes an input file and processes it. AFL will run it a huge amount of times with different input files, with a very low timeout and low memory limits. Any application crash is recorded in an output queue, and hangs are also categorized. Variations considered as interesting by AFL feed back the input queue.

How does it generate variations, and what are considered the interesting ones?

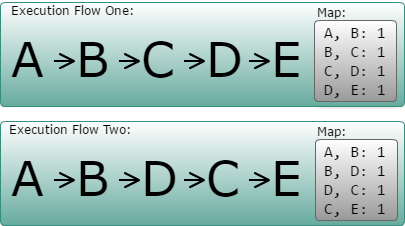

Well, as the application runs, AFL uses the instrumentation to keep a map of previous and current execution, address, with the count of passes on this pair. So it can recognize, for example, an execution that passes through A, B, C, D and E from another that went through A, B, D, C and E. The input file that generate new pairs on this execution map, or a significative change in the quantity of passes, are marked as interesting, and kept for further future exploration.

A representation of the maps generated by different execution flows

Inputs that generate maps equivalent to previous executions are considered having passed through the same flow as previously runs, and are discarded.

Execution starts with a set of initial test cases, ideally unique and as simple as possible, and the input queue is fed with the variation of those cases considered as interesting. For each test case of this input queue, the binary is executed with a large number of variations of the original file.

These variations are chosen for their effectivity on finding new interesting paths: It starts flipping each bit of the original file, then each byte. The third step breaks the file into 8, 16 and 32-bit chunks, and run preset arithmetic operations on these values.

AFL flipping bits and bytes

The last deterministic step is replacing each byte of the current input file with about a couple dozen of interesting values: -1, 256, max int, and so on. It’s possible to create a dictionary of values that would be interesting for your particular application, accelerating this process.

After the run of deterministic mutations, AFL runs a step with random mutations: how many and each kind is randomly determined and executed, this time also adding new data or removing parts of the file.

Finally, it picks two test cases that differ in only two places and generates a new one splicing both changes.

Right now you can realize two important points: the number of times the application is going to be executed is proportional to the length of the input file, since the deterministic processing makes changes in every bit looking for new execution paths; and that a very large number of runs for each test case is going to be necessary to find new paths.

AFL helps with both points. For the first one, besides the recommendation of starting with a single, simplest test case possible, it offers a test case minimization tool, called afl-tmin. It has a simple idea: it keeps removing bits of the file as long as the coverage maps maintain the same. In the end, you have the smallest file that covers that execution flow in the application.

If you have a large number of potential input files, it also provides a tool called afl-cmin, that runs the input files and identifies the smallest distinct subset that exercises the largest number of execution paths in the binary under test.

For the second point, it implements a feature called forkserver. Basically, it injects some code in the binary that pauses the processes after the application loads with all the dependencies, but before it reads any inputs, and then forks the processes for each execution required. The operating system isolates each child processes and prevents all the startup costs to be applied again, allowing running many more times per second. There are ways to customize the time of the fork, allowing to pre-load even more resources accelerating the execution even more. There’s also a persistent mode, where you change your source code in a way that it can run multiple scenarios in a loop, saving even the fork cost.

Still, even though sometimes results pop up quickly, you can expect to wait hours (or days, or weeks) and still see the fuzzer finding new paths and possibly new crashes. To speed this up even more, its simple to run multiple instances in parallel in a single machine working together and sharing the input queue. With a little more setup, you can do the same with a cluster of machines.

And it’s not just crashes that you can find with this technique. You can write applications that call the code under test and perform additional checks. For example, when fuzzing a compression library, you can compress the input data and then uncompress it, and check if it still matches. You could create an application that runs two independent implementations of an algorithm and checks if the results match. And so on.

With a little more creativity, you can also test code that doesn’t usually processes input files. You can write an application that transforms the input file and the equivalent of an in-process HTTP call, and fuzz a webserver.

Not everything is easy, though. Code that has many checks against magic values, or calculates hashes or checksums, will have a hard time finding new paths after those checks. In these cases, you can write post-processors of the generated test cases, if you know the file format and where the checksums are, and set the right values so the input can be processed.

Alternatively, you can change the code under test to disable the checks, and assume they are always correct.

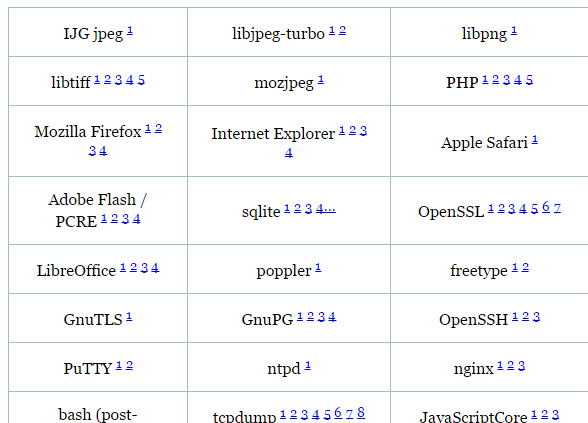

With these features, the efficiency of fuzzing with AFL is huge. Considering only the public reports, people found countless bugs in over a hundred projects with it, a lot of them with security implications.

Sample of AFL’s trophy case

Have you tried AFL or a different fuzzer? Do you contribute to an open source project that could have bugs exposed by it? Let us know in the comments!

Follow @burnin for more content. Missed one of my previous posts? Check them out:

Steemit Unofficial FAQ

Ten Cover Songs That You Should Listen To

Steemit's NFL Highlights: Preseason Week 2

Steemit's Free Online Sci Fi Compendium - Vol #1