Six Concepts from the Edge.Org Annual Question for 2017:

2017 : WHAT SCIENTIFIC TERM OR CONCEPT OUGHT TO BE MORE WIDELY KNOWN?

[Image source: pixabay.com, licensed under CC0, Public Domain]

Introduction

As I noted in [Retrospective] Five Dangerous Ideas from Edge.Org, one of the things I enjoy reading every year on the Internet is the Annual Question from edge.org. This year's question, "What scientific term or concept ought to be more widely known?" is no exception. I haven't made it through all 206 entries yet, but I am enjoying myself as I work my way through. I thought I would share six of them to get you started. You'll probably want to click through and maybe even bookmark it for later.

In no particular order, the six that I picked out to write about are: Reciprocal Altruism, submitted by Margaret Levi; Confirmation Bias, submitted by Brian Eno; Epsilon, submitted by Victoria Stodden; Regression to the Mean, submitted by James J. O'Donnell; Type I and Type II Errors, submitted by Phil Rosenzweig; and Power Law, submitted by Luca De Biase.

Reciprocal Altruism: Margaret Levi

Sara Miller McCune Director, Center For Advanced Study in Behavioral Sciences, professor, Stanford University; Jere L. Bacharach Professor Emerita of International Studies, University of Washington

In her submission, Levi discusses the behavioral concept of altruism. She notes that many species develop altruistic behavior in order to improve survivability, fitness, and welfare for a group. Reciprocity was originally studied as a form of tit for tat, where altruism was exhibited with the expectation of repayment, in an iterative fashion. More recent studies, however, have observed more of a pay-it-forward approach to reciprocal altruism, where the repayment is expected to go to some future beneficiary who may not be the same person, or even in the same family, as the person who initially behaved altruistically. She terms this long-sighted reciprocal altruism, and notes:

The return to such long-sighted reciprocal altruists is the establishment of norms of cooperation that endure beyond the lifetime of any particular altruist.

Of course, the mention of "tit for tat" reminded me of the recent post by @bitcoindoom, Why Down Votes and Flags are an Unavoidable consequence of Game Theory, and the notion of long-sighted reciprocal altruism makes me wonder if steemit can make use of a different sort of reciprocal altruism than tit for tat in order to cultivate the quality of our content.

Confirmation Bias: Brian Eno

Artist; Composer; Recording Producer: U2, Coldplay, Talking Heads, Paul Simon; Recording Artist

In this short submission, Eno points out that having more information often serves to reinforce our existing beliefs instead of improving the quality of our decision-making.

Epsilon: Victoria Stodden

Associate Professor of Information Sciences, University of Illinois at Urbana-Champaign

Of course, I can't see the University of Illinois at Urbana-Champaign without thinking back nostalgically to the days when the World Wide Web was new and I fought my way through compiling the NCSA Mosaic browser on a Sun workstation for the first time, but that's not today's topic.

In this submission, Stodden tells us about the statistical parameter, shown as the Greek letter epsilon, which represents uncertainty. Because statistics involves sampling, our knowledge is necessarily incomplete. There is an underlying physical process which generates data, and there are the data and measurements that we observe. Epsilon tells us that even with perfect measurements, we still don't have a complete picture, because there is other data that hasn't been observed. Therefore, we should never expect perfect predictions, regardless of the precision of our measurements. In conclusion, Stodden notes:

The 21st century is surely the century of data, and correctly understanding its use has high stakes.
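To make the idea concrete, here is a minimal sketch in Python. It is my own illustration, not something from Stodden's essay: the linear process `y = 3x + 1`, the noise level `noise_sd`, and the function name are all assumptions chosen for the example. Even when the prediction uses the exact underlying process, the error never drops to zero, because epsilon stands in for everything that was not observed.

```python
import random
import statistics

def simulate_irreducible_error(n=10_000, noise_sd=2.0, seed=0):
    """Return the RMS prediction error when the true process is known exactly.

    The process y = 3x + 1 + epsilon is a made-up example, not taken
    from the essay.
    """
    rng = random.Random(seed)
    squared_errors = []
    for _ in range(n):
        x = rng.uniform(0, 10)            # a perfectly measured input
        epsilon = rng.gauss(0, noise_sd)  # unobserved influences
        y = 3.0 * x + 1.0 + epsilon       # what we actually observe
        prediction = 3.0 * x + 1.0        # best possible prediction given x
        squared_errors.append((y - prediction) ** 2)
    return statistics.fmean(squared_errors) ** 0.5

rmse = simulate_irreducible_error()
print(f"RMS error with a perfect model: {rmse:.2f}")
```

The printed error should land close to `noise_sd`, no matter how precisely `x` is measured.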

Regression to the Mean: James J. O'Donnell

Classics Scholar, University Librarian, ASU; Author, Pagans; Webmaster, St. Augustine's Website

In this submission, O'Donnell talks about the law of regression to the mean. There are so many possible coincidences, so many conceivable unlikely scenarios, that some of them are likely to happen at almost any moment. When we see an amazing coincidence, it's most likely that the next thing to happen will be far more ordinary.

O'Donnell concludes by saying:

Heeding the law of regression to the mean would help us slow down, calm down, pay attention to the long term and the big picture, and react with a more strategic patience to crises large and small.
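A small simulation can show the effect. This is a sketch under my own assumptions, not O'Donnell's example: each person has a fixed underlying "skill", and each observed score is that skill plus independent luck. The names `skill`, `first`, and `second` and the 5% cutoff are hypothetical.

```python
import random
import statistics

def regression_to_mean_demo(n=10_000, seed=1):
    """Compare the top first-round scorers with their own second-round scores."""
    rng = random.Random(seed)
    skill = [rng.gauss(100, 10) for _ in range(n)]    # stable underlying ability
    first = [s + rng.gauss(0, 10) for s in skill]     # ability plus luck, round 1
    second = [s + rng.gauss(0, 10) for s in skill]    # same ability, fresh luck
    cutoff = sorted(first)[int(0.95 * n)]             # top 5% on the first round
    top = [i for i in range(n) if first[i] >= cutoff]
    first_avg = statistics.fmean([first[i] for i in top])
    second_avg = statistics.fmean([second[i] for i in top])
    return first_avg, second_avg

first_avg, second_avg = regression_to_mean_demo()
print(f"Top scorers, round 1 average: {first_avg:.1f}")
print(f"Same people, round 2 average: {second_avg:.1f}")
```

The extreme group is still above the overall average of 100 on the second round, but noticeably less extreme than before, because part of what put them at the top the first time was luck that does not repeat.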

Type I and Type II Errors: Phil Rosenzweig

Professor of Strategy and International Business at IMD, Lausanne, Switzerland

Of course I was happy to see this topic, after incorporating it into my own article: On SteemBots and Voting Errors (see Confirmation Bias ;-). In general, we want to pursue a course of action when it will succeed and decline to pursue it when it will fail; accept a conclusion when it is correct and reject it when it is false; vote for a steemit post when it is valuable and decline to vote when it is not.

The problem is, as we learned from Stodden's Epsilon, decisions are made under uncertainty. If we act when we should not have, that's a Type I error. If we don't act when we should have, that's a Type II error. There is often a tradeoff between the two, and different people often have different preferences about which type of error they would rather risk.

Rosenzweig captures the dynamics in these excerpts:

To summarize, a first point is that we should not only consider the outcome we desire, but also the errors we wish to avoid. A corollary is that different kinds of decisions favor one or the other. A next point is that for some kinds of decision, our preference may shift over time...

... There is a final point, as well: Discussion of Type I and Type II errors can reveal different preferences among parties involved in a decision.
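The tradeoff can be sketched with a toy voting rule. Everything here is an assumption for illustration, not something from Rosenzweig's essay: a post is either valuable or not, we only see a noisy `signal` of its quality, and we vote when the signal exceeds a `threshold`.

```python
import random

def count_errors(threshold, n=10_000, seed=2):
    """Count Type I and Type II errors for a simple 'act if signal > threshold' rule."""
    rng = random.Random(seed)  # same seed, so every threshold sees the same posts
    type_1 = 0  # acted (voted) when we should not have
    type_2 = 0  # did not act when we should have
    for _ in range(n):
        valuable = rng.random() < 0.5
        signal = (1.0 if valuable else 0.0) + rng.gauss(0, 1)  # noisy view of quality
        act = signal > threshold
        if act and not valuable:
            type_1 += 1
        elif not act and valuable:
            type_2 += 1
    return type_1, type_2

for threshold in (0.0, 0.5, 1.0):
    type_1, type_2 = count_errors(threshold)
    print(f"threshold={threshold:.1f}  Type I={type_1}  Type II={type_2}")
```

Raising the threshold makes the rule more cautious, so Type I errors fall while Type II errors rise. Where to set it depends on which error the decision-maker finds more costly, which is exactly the kind of preference that can differ between parties.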

Power Law: Luca De Biase

Journalist; Editor, Nova 24, of Il Sole 24 Ore

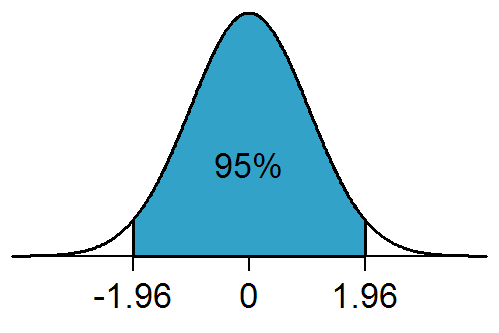

We are accustomed to thinking about data that is distributed along the so-called bell curve, or Normal curve. If you graph this data, most data is near the center, and the tails are thin on both sides.

[Image By Qwfp (talk) - self-made, CC BY-SA 3.0, https://en.wikipedia.org/w/index.php?curid=15541054]

However, De Biase points out that we frequently find data organized more like a ski jump than a bell. If one parameter causes a proportional increase in another, then the average is unimportant and polarization is inevitable. This "power law" distribution is observed frequently throughout much of nature, and it crops up in human artefacts when network effects are in play. In general, people's intuitions are not well suited for thinking about data that follows a power law distribution.
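One way to see why the average matters so little under a power law is to ask how much of the total is held by the top 20% of values. The sketch below is my own illustration with assumed parameters: a Normal distribution with mean 100 and standard deviation 15, and a Pareto distribution (one common power law) with shape 1.16, a value often associated with the 80/20 rule. The helper `top_share` is hypothetical.

```python
import random

def top_share(values, fraction=0.2):
    """Return the share of the total held by the largest `fraction` of values."""
    ordered = sorted(values, reverse=True)
    k = int(len(ordered) * fraction)
    return sum(ordered[:k]) / sum(ordered)

rng = random.Random(3)
n = 10_000
normal_values = [rng.gauss(100, 15) for _ in range(n)]        # bell curve
pareto_values = [rng.paretovariate(1.16) for _ in range(n)]   # power law (assumed shape)

normal_share = top_share(normal_values)
pareto_share = top_share(pareto_values)
print(f"Top 20% share, Normal: {normal_share:.0%}")
print(f"Top 20% share, Pareto: {pareto_share:.0%}")
```

Under the bell curve the top fifth holds only slightly more than a fifth of the total. Under the power law a small number of very large values holds most of it, and the exact share swings from sample to sample because the tail is so heavy.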

For a good book on making use of power laws in business, I recommend The 80/20 Principle and 92 Other Power Laws of Nature: The Science of Success.

Conclusion

With 206 articles to choose from, this sampling doesn't even qualify as cursory coverage, but it should whet your appetite. I highly recommend clicking through and reading these 6 full articles as well as the other 200.

There's a ton of good stuff there. You can use this post's comments to let people know about other submissions that you enjoy.

@remlaps is an IT professional with three decades of professional experience in data communications and information systems. He holds a bachelor's degree in mathematics, a master's degree in computer science, and a master's degree in information systems and technology management. He has also been awarded 3 US patents.

The data that is being collected is massively skewed. How do you factor in for that? Like humans' natural competition mechanism that regulates their drive to ensure their popular view is directly foreseen as correct among lesser uncompetitive view points. The amount of data we are collecting is indicative of society as a whole, is it not? But in social media, news platforms, and other media the voices of outliers are denounced as liars! Like mainstream outed the liars, not themselves of course, but have excluded some views of realistic scenarios as a result. What is the source of this in human behavior other than the drive to compete to be on top? Humans like to be the top on every chain, even one made upon human data. Natural predatory mode. Idk... I think it would be an interesting topic to see what actions behind data collection are skewed by competition.

Thanks for the feedback. On one hand, putting data on public block chains may help, since everyone can access it and decide what to believe for themselves. The trade-off is that we may have even less privacy than we do now.

Privacy isn't something I value given the schizophrenia and intermittent psychosis. There are no secrets in psychosis so I find it strange how private normal people are or have become... when you think you're constantly being watched or filmed or viewed, privacy seems trivial. A right that is elusive. I am on board for blockchain technology! Your post was very interesting and I enjoyed reading the highly educated opinions of the points you zoned in on.