Using R Programming & Text Analysis On A Few Rap Lyrics

Hi. On this page, I share some experimental work in the R programming language where I analyze the rap lyrics of two tracks: Fort Minor - Remember The Name and Eminem - Lose Yourself. For each song, I look at word counts and perform sentiment analysis.

The full version of this post can be found on my website.

Sections

- Introduction

- Fort Minor - Remember The Name Lyrics Analysis

- Eminem - Lose Yourself Lyrics Analysis [Output Only]

- References

Introduction

Rap lyrics generally contain more words than lyrics in other musical genres, so I decided to look at a few rap songs.

The R packages that I use are dplyr for data wrangling and data manipulation, ggplot2 for plotting results, tidytext for text analysis, and tidyr for data cleaning and data formatting.

```r
# Load libraries into R:
library(dplyr)
library(ggplot2)
library(tidytext)
library(tidyr)
```

Fort Minor - Remember The Name Lyrics Analysis

Fort Minor was a side project of Linkin Park member Mike Shinoda, who raps on a few Linkin Park tracks. Fort Minor's single Remember The Name was released in 2005 and was featured in the video game NBA Live 06, the 2006 and 2007 NBA Playoffs, and the 2008 NBA Draft.

```r
### 1) Fort Minor - Remember The Name

# Read Fort Minor - Remember The Name lyrics into R:
remember_lyrics <- readLines("fortMinor_rememberTheName_lyrics.txt")

# Store the lyrics in a tibble (a neater data frame), one lyric line per row:
remember_lyrics_df <- tibble(Text = remember_lyrics)

# Preview the lyrics:
head(remember_lyrics_df, n = 10)
```

```
# A tibble: 10 x 1
   Text
   <chr>
 1 You ready?! Let's go!
 2 Yeah, for those of you that want to know what we're all about
 3 It's like this y'all (c'mon!)
 4
 5
 6 This is ten percent luck, twenty percent skill
 7 Fifteen percent concentrated power of will
 8 Five percent pleasure, fifty percent pain
 9 And a hundred percent reason to remember the name!
10
```

The key function for obtaining word counts is unnest_tokens() from tidytext. It splits each line of lyrics into words, giving one word per row. By default it also converts the words to lowercase and strips punctuation.

```r
# Unnest tokens: one word from the lyrics per row:
remember_words <- remember_lyrics_df %>%
  unnest_tokens(output = word, input = Text)

# Preview with the head() function:
head(remember_words, n = 10)
```

```
# A tibble: 10 x 1
   word
   <chr>
 1 you
 2 ready
 3 let's
 4 go
 5 yeah
 6 for
 7 those
 8 of
 9 you
10 that
```
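To see the tokenizing step in isolation, the sketch below runs unnest_tokens() on the first lyric line and the start of the second, stored in a hypothetical toy_lines tibble instead of being read from the lyrics file. It shows the defaults at work: words are lowercased, punctuation such as ?! is dropped, and in-word apostrophes like the one in let's are kept.

```r
library(dplyr)
library(tidytext)

# Hypothetical toy input: one line of text per row, like remember_lyrics_df
toy_lines <- tibble(Text = c("You ready?! Let's go!", "Yeah, for those of you"))

# One lowercase word per row; the Text column is dropped by default
toy_words <- toy_lines %>%
  unnest_tokens(output = word, input = Text)

toy_words$word
```

The resulting words match the first nine rows of the remember_words preview.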

Word Counts In Fort Minor - Remember The Name

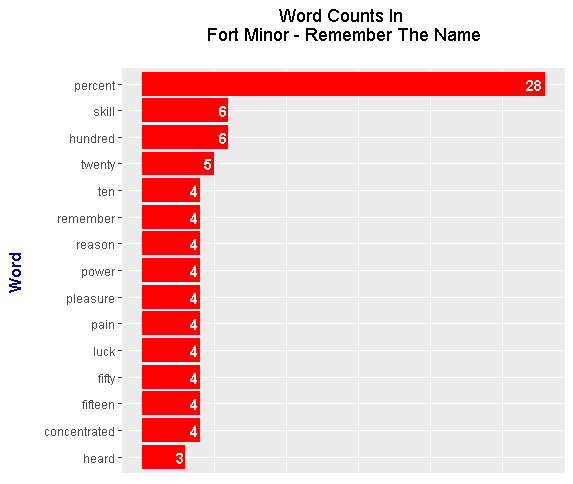

Some English words carry little meaning on their own but help sentences flow. These are called stop words. To remove them from the Remember The Name lyrics, an anti_join() from the dplyr package is applied against the stop_words data set that comes with tidytext.

```r
### 1a) Word Counts in Remember The Name:

# Remove English stop words from Remember The Name.
# Stop words include the, and, me, you, myself, of, etc.
remember_words <- remember_words %>%
  anti_join(stop_words, by = "word")
```
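The anti_join() step keeps every row of the left table that has no match in the right table. The sketch below makes that visible with a hypothetical six-word tibble and a tiny hand-made stop word list standing in for tidytext's much larger stop_words.

```r
library(dplyr)

# Hypothetical words, one per row (like remember_words)
words <- tibble(word = c("you", "ready", "let's", "go", "the", "skill"))

# Hypothetical mini stop word list standing in for tidytext's stop_words
my_stop_words <- tibble(word = c("you", "the", "let's"))

# Keep only the rows of `words` whose word is NOT in my_stop_words;
# the original row order of `words` is preserved
kept <- words %>% anti_join(my_stop_words, by = "word")

kept$word
```

Passing by = "word" explicitly avoids the message dplyr prints when it has to guess the join column.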

The count() function from the dplyr package gives the word counts. These results are plotted as a horizontal bar graph with ggplot2 functions.

```r
# Word Counts:
remember_wordcounts <- remember_words %>% count(word, sort = TRUE)

head(remember_wordcounts, n = 15)
```

```
# A tibble: 15 x 2
   word             n
   <chr>        <int>
 1 percent         28
 2 hundred          6
 3 skill            6
 4 twenty           5
 5 concentrated     4
 6 fifteen          4
 7 fifty            4
 8 luck             4
 9 pain             4
10 pleasure         4
11 power            4
12 reason           4
13 remember         4
14 ten              4
15 heard            3
```
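count(word, sort = TRUE) is shorthand for grouping by word, counting rows per group and sorting by that count in descending order. The sketch below checks this on a hypothetical six-word tibble by computing the counts both ways.

```r
library(dplyr)

# Hypothetical tokens (after stop word removal)
words <- tibble(word = c("percent", "skill", "percent", "luck", "percent", "skill"))

# Shorthand: one row per word with its frequency n, most frequent first
counts <- words %>% count(word, sort = TRUE)

# The same computation written out step by step
counts_long <- words %>%
  group_by(word) %>%
  summarise(n = n()) %>%
  arrange(desc(n))

counts
```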

Once the word counts are obtained, they can be plotted with ggplot2.

```r
# Plot of Word Counts (Top 15 Words):
remember_wordcounts[1:15, ] %>%
  mutate(word = reorder(word, n)) %>%
  ggplot(aes(word, n)) +
  geom_col(fill = "red") +
  coord_flip() +
  labs(x = "Word \n", y = "\n Count ", title = "Word Counts In \n Fort Minor - Remember The Name \n") +
  geom_text(aes(label = n), hjust = 1.2, colour = "white", fontface = "bold") +
  theme(plot.title = element_text(hjust = 0.5),
        axis.title.x = element_blank(),
        axis.ticks.x = element_blank(),
        axis.text.x = element_blank(),
        axis.title.y = element_text(face = "bold", colour = "darkblue", size = 12))
```

In Fort Minor - Remember The Name, percent is by far the most common word (28 occurrences), followed by hundred and skill (6 each). Other common words include the number words, reason, power, pleasure and pain. These common words come mostly from the chorus:

This is ten percent luck, twenty percent skill,

Fifteen percent concentrated power of will

Five percent pleasure, fifty percent pain

And a hundred percent reason to remember the name!

Bigrams: Two-Word Phrases

```r
### 1b) Bigrams (Two-Word Phrases) in Remember The Name:
remember_bigrams <- remember_lyrics_df %>%
  unnest_tokens(bigram, input = Text, token = "ngrams", n = 2)

# Look at the bigrams:
remember_bigrams
```

```
# A tibble: 667 x 1
   bigram
   <chr>
 1 you ready
 2 ready let's
 3 let's go
 4 go yeah
 5 yeah for
 6 for those
 7 those of
 8 of you
 9 you that
10 that want
# ... with 657 more rows
```

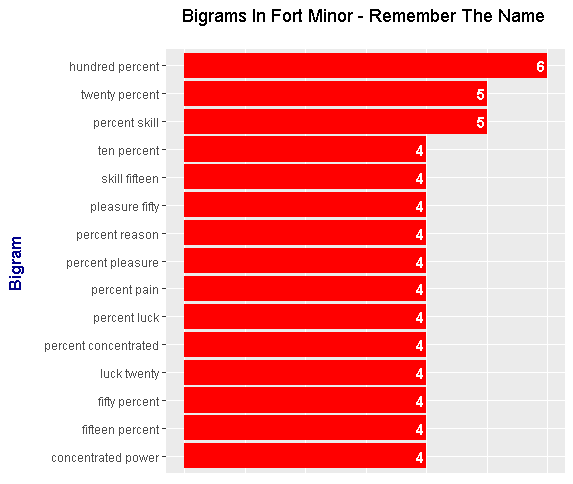

Just as with single words, we want to remove stop words from the bigrams. Because each two-word phrase sits in a single column, the stop words cannot be filtered out directly. The separate() function from R's tidyr package splits each bigram into its two words, and the filter() function from dplyr then drops any bigram in which either word is a stop word.

```r
# Remove stop words from bigrams with tidyr's separate() function
# along with dplyr's filter() function:
bigrams_remember_sep <- remember_bigrams %>%
  separate(bigram, c("word1", "word2"), sep = " ")

bigrams_remember_filt <- bigrams_remember_sep %>%
  filter(!word1 %in% stop_words$word) %>%
  filter(!word2 %in% stop_words$word)

# Filtered bigram counts:
bigrams_remember_counts <- bigrams_remember_filt %>%
  count(word1, word2, sort = TRUE)

head(bigrams_remember_counts, n = 15)
```

```
# A tibble: 15 x 3
   word1        word2            n
   <chr>        <chr>        <int>
 1 hundred      percent          6
 2 percent      skill            5
 3 twenty       percent          5
 4 concentrated power            4
 5 fifteen      percent          4
 6 fifty        percent          4
 7 luck         twenty           4
 8 percent      concentrated     4
 9 percent      luck             4
10 percent      pain             4
11 percent      pleasure         4
12 percent      reason           4
13 pleasure     fifty            4
14 skill        fifteen          4
15 ten          percent          4
```
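The separate-then-filter idea can be seen on a few hand-picked bigrams. This is a sketch: the bigrams tibble and the short my_stop_words vector are hypothetical stand-ins for remember_bigrams and stop_words$word. Listing two conditions in one filter() call keeps only rows where both hold, which matches the two chained filter() calls above. Newer tidyr releases (1.3.0 and later) mark separate() as superseded in favor of separate_wider_delim(), but separate() still works.

```r
library(dplyr)
library(tidyr)

# Hypothetical bigrams and stop words
bigrams <- tibble(bigram = c("you ready", "ten percent", "percent luck", "of you"))
my_stop_words <- c("you", "of")

bigrams_filtered <- bigrams %>%
  # Split "ten percent" into word1 = "ten", word2 = "percent"
  separate(bigram, into = c("word1", "word2"), sep = " ") %>%
  # Drop a bigram if either of its words is a stop word
  filter(!word1 %in% my_stop_words, !word2 %in% my_stop_words)

bigrams_filtered
```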

Next, the two words of each bigram are joined back into a single column with the unite() function from tidyr, keeping their counts. A bar graph is then generated.

```r
# Unite the words with the unite() function:
remember_bigrams_counts <- bigrams_remember_counts %>%
  unite(bigram, word1, word2, sep = " ")

remember_bigrams_counts
```

```
# A tibble: 52 x 2
   bigram                   n
 * <chr>                <int>
 1 hundred percent          6
 2 percent skill            5
 3 twenty percent           5
 4 concentrated power       4
 5 fifteen percent          4
 6 fifty percent            4
 7 luck twenty              4
 8 percent concentrated     4
 9 percent luck             4
10 percent pain             4
# ... with 42 more rows
```
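unite() is the inverse of separate(): it pastes the listed columns together with the given separator and, by default, drops the original columns. The sketch below applies it to the first two rows of the filtered bigram counts, re-entered by hand in a hypothetical bigram_counts tibble.

```r
library(dplyr)
library(tidyr)

# First two rows of bigrams_remember_counts, re-entered by hand
bigram_counts <- tibble(word1 = c("hundred", "percent"),
                        word2 = c("percent", "skill"),
                        n = c(6L, 5L))

# Paste word1 and word2 into a single `bigram` column; word1/word2 are removed
united <- bigram_counts %>% unite(bigram, word1, word2, sep = " ")

united
```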

```r
# We can now make a plot of the bigram counts (top 15 bigrams).
# The bottom axis is removed with element_blank(),
# and the counts are printed inside the bars with geom_text().
remember_bigrams_counts[1:15, ] %>%
  ggplot(aes(reorder(bigram, n), n)) +
  geom_col(fill = "red") +
  coord_flip() +
  labs(x = "Bigram \n", y = "\n Count ", title = "Bigrams In Fort Minor - Remember The Name \n") +
  geom_text(aes(label = n), hjust = 1.2, colour = "white", fontface = "bold") +
  theme(plot.title = element_text(hjust = 0.5),
        axis.title.x = element_blank(),
        axis.ticks.x = element_blank(),
        axis.text.x = element_blank(),
        axis.title.y = element_text(face = "bold", colour = "darkblue", size = 12))
```

The bigram results turn out to be less interesting: the most common bigrams come almost entirely from the chorus.

Sentiment Analysis Of Fort Minor - Remember The Name

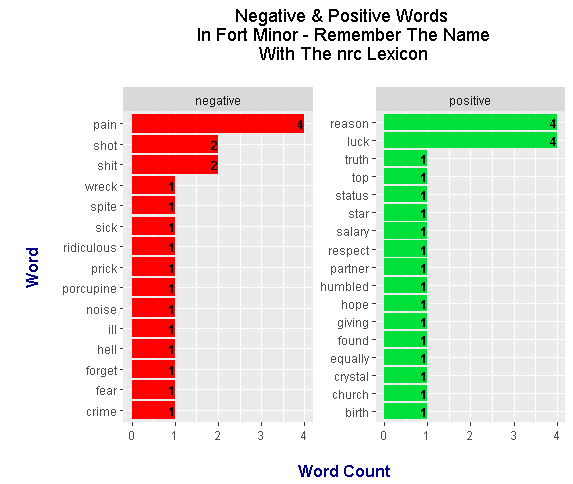

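The sentiment analysis here checks whether each word in the lyrics is positive or negative. The tidytext package gives access to three main lexicons: nrc, AFINN and bing. Results for the nrc and bing lexicons are presented here. Because the nrc lexicon also tags words with emotions such as anger, fear and joy, its results are filtered down to the positive and negative categories.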

nrc Lexicon

```r
### 1d) Sentiment Analysis:
# There are three main lexicons available through the tidytext R package:
# bing, AFINN and nrc. The nrc and bing lexicons are used here.

### nrc lexicon:
# get_sentiments("nrc")
# (recent tidytext versions fetch nrc through the textdata package on first use)
remember_words_nrc <- remember_wordcounts %>%
  inner_join(get_sentiments("nrc"), by = "word") %>%
  filter(sentiment %in% c("positive", "negative"))

head(remember_words_nrc)
```

```
# A tibble: 6 x 3
  word       n sentiment
  <chr>  <int> <chr>
1 luck       4 positive
2 pain       4 negative
3 reason     4 positive
4 shit       2 negative
5 shot       2 negative
6 birth      1 positive
```
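The inner_join() does two jobs at once: words missing from the lexicon are dropped, and a word tagged with several categories is repeated once per category, which is why the filter() on positive and negative is needed for nrc. The sketch below uses a few word counts from the table above and a made-up, nrc-style toy_lexicon; its tags are hypothetical, not the real nrc entries.

```r
library(dplyr)

# A few word counts taken from remember_wordcounts
word_counts <- tibble(word = c("percent", "luck", "pain", "skill"),
                      n = c(28L, 4L, 4L, 6L))

# Hypothetical nrc-style lexicon: one row per (word, category) pair
toy_lexicon <- tibble(
  word      = c("luck", "luck", "pain", "pain"),
  sentiment = c("joy", "positive", "sadness", "negative")
)

toy_sentiment <- word_counts %>%
  # "percent" and "skill" are not in the toy lexicon, so they drop out
  inner_join(toy_lexicon, by = "word") %>%
  # Keep only the positive/negative tags, discarding emotion tags
  filter(sentiment %in% c("positive", "negative"))

toy_sentiment
```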

```r
# Sentiment Plot with nrc Lexicon
remember_words_nrc %>%
  ggplot(aes(x = reorder(word, n), y = n, fill = sentiment)) +
  geom_bar(stat = "identity", position = "identity") +
  geom_text(aes(label = n), colour = "black", hjust = 1, fontface = "bold", size = 3.2) +
  facet_wrap(~sentiment, scales = "free_y") +
  labs(x = "\n Word \n", y = "\n Word Count ", title = "Negative & Positive Words \n In Fort Minor - Remember The Name \n With The nrc Lexicon \n") +
  theme(plot.title = element_text(hjust = 0.5),
        axis.title.x = element_text(face = "bold", colour = "darkblue", size = 12),
        axis.title.y = element_text(face = "bold", colour = "darkblue", size = 12)) +
  scale_fill_manual(values = c("#FF0000", "#01DF3A"), guide = "none") +
  coord_flip()
```

With the nrc lexicon, the sentiment analysis results show a near 50-50 balance of negative to positive words. This is somewhat misleading, since the rapping in the track is aggressive in tone and contains quite a few swear words.
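The 50-50 balance can be checked numerically by grouping the matched words by sentiment. As a sketch, the tibble below re-enters only the six rows printed by head(remember_words_nrc), so the numbers describe that preview rather than the whole song; with the real data, remember_words_nrc would be piped in instead.

```r
library(dplyr)

# The six preview rows of remember_words_nrc (not the full table)
nrc_preview <- tibble(
  word      = c("luck", "pain", "reason", "shit", "shot", "birth"),
  n         = c(4L, 4L, 4L, 2L, 2L, 1L),
  sentiment = c("positive", "negative", "positive", "negative", "negative", "positive")
)

balance <- nrc_preview %>%
  group_by(sentiment) %>%
  # Distinct words per sentiment, and how often those words occur in total
  summarise(distinct_words = n_distinct(word), total_count = sum(n)) %>%
  # summarise() drops the grouping, so this share is taken over both rows
  mutate(share_of_words = distinct_words / sum(distinct_words))

balance
```

In this preview each sentiment has three distinct words, and the totals are 8 negative and 9 positive occurrences.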

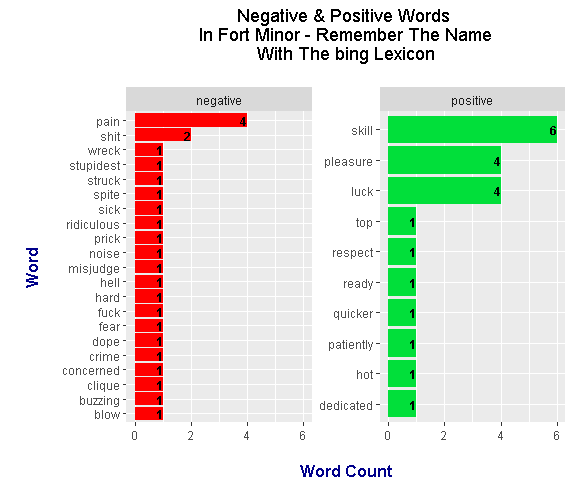

Bing Lexicon

```r
### bing lexicon:
# get_sentiments("bing")
remember_words_bing <- remember_wordcounts %>%
  inner_join(get_sentiments("bing"), by = "word")

head(remember_words_bing)
```

```
# A tibble: 6 x 3
  word         n sentiment
  <chr>    <int> <chr>
1 skill        6 positive
2 luck         4 positive
3 pain         4 negative
4 pleasure     4 positive
5 shit         2 negative
6 blow         1 negative
```

```r
# Sentiment Plot with bing Lexicon
remember_words_bing %>%
  ggplot(aes(x = reorder(word, n), y = n, fill = sentiment)) +
  geom_bar(stat = "identity", position = "identity") +
  geom_text(aes(label = n), colour = "black", hjust = 1, fontface = "bold", size = 3.2) +
  facet_wrap(~sentiment, scales = "free_y") +
  labs(x = "\n Word \n", y = "\n Word Count ", title = "Negative & Positive Words \n In Fort Minor - Remember The Name \n With The bing Lexicon \n") +
  theme(plot.title = element_text(hjust = 0.5),
        axis.title.x = element_text(face = "bold", colour = "darkblue", size = 12),
        axis.title.y = element_text(face = "bold", colour = "darkblue", size = 12)) +
  scale_fill_manual(values = c("#FF0000", "#01DF3A"), guide = "none") +
  coord_flip()
```

The results under the bing lexicon are quite different from those under the nrc lexicon. Under bing, there are more negative words, while the top positive word (skill, 6 occurrences) has a higher count than the top negative word (pain, 4 occurrences).
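One way to put the two lexicons side by side is to stack their results with a lexicon label, total the counts per sentiment, and spread the sentiments into columns. The sketch below uses only the six preview rows printed for each lexicon above, so it compares the top words rather than the full results.

```r
library(dplyr)
library(tidyr)

# Six preview rows from each lexicon's head() output
nrc_preview <- tibble(
  word      = c("luck", "pain", "reason", "shit", "shot", "birth"),
  n         = c(4L, 4L, 4L, 2L, 2L, 1L),
  sentiment = c("positive", "negative", "positive", "negative", "negative", "positive")
)
bing_preview <- tibble(
  word      = c("skill", "luck", "pain", "pleasure", "shit", "blow"),
  n         = c(6L, 4L, 4L, 4L, 2L, 1L),
  sentiment = c("positive", "positive", "negative", "positive", "negative", "negative")
)

comparison <- bind_rows(nrc = nrc_preview, bing = bing_preview, .id = "lexicon") %>%
  # Total word occurrences per lexicon and sentiment (wt = n sums the counts)
  count(lexicon, sentiment, wt = n) %>%
  # One row per lexicon, one column per sentiment
  pivot_wider(names_from = sentiment, values_from = n) %>%
  mutate(net = positive - negative)

comparison
```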

Eminem - Lose Yourself Lyrics Analysis

For the second rap song, I chose Eminem's Lose Yourself, which was featured in the movie 8 Mile (2002).

For the most part, the R code is the same as the code for Fort Minor - Remember The Name. To save time and space, only the results are shown here.
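Since the same steps are reused for both songs, they can be wrapped in a function. The sketch below is a hypothetical helper, count_lyrics_words(), that takes a character vector of lyric lines (for example from readLines()) and returns stop-word-filtered word counts. It is not the code used for the results in this post, and the Lose Yourself file name in the comment is also hypothetical.

```r
library(dplyr)
library(tidytext)

# Hypothetical helper: lines of lyrics -> word counts without stop words
count_lyrics_words <- function(lines) {
  tibble(Text = lines) %>%
    unnest_tokens(output = word, input = Text) %>%   # one word per row
    anti_join(stop_words, by = "word") %>%           # drop stop words
    count(word, sort = TRUE)                         # most frequent first
}

# Usage on the Remember The Name chorus:
chorus <- c("This is ten percent luck, twenty percent skill",
            "Fifteen percent concentrated power of will",
            "Five percent pleasure, fifty percent pain",
            "And a hundred percent reason to remember the name!")
chorus_counts <- count_lyrics_words(chorus)

# For a whole song (hypothetical file name):
# lose_counts <- count_lyrics_words(readLines("eminem_loseYourself_lyrics.txt"))
```

Taking the lines rather than a file path keeps the function easy to test without a lyrics file on disk.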

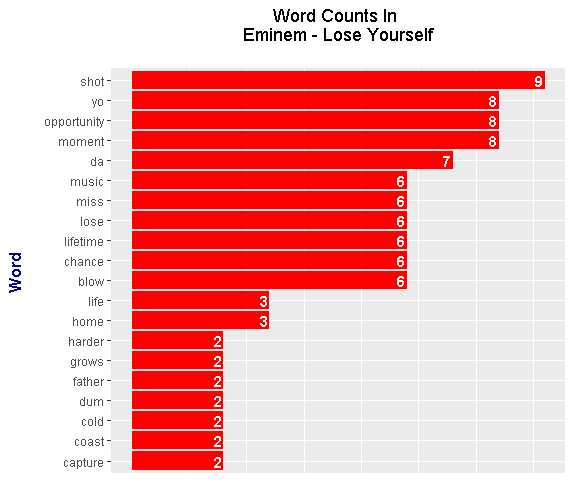

Word Counts In Eminem - Lose Yourself

The most frequent word in Lose Yourself is shot. Words such as yo and da also appear. Other high-frequency words in the track include miss, lose, opportunity, moment, lifetime and chance.

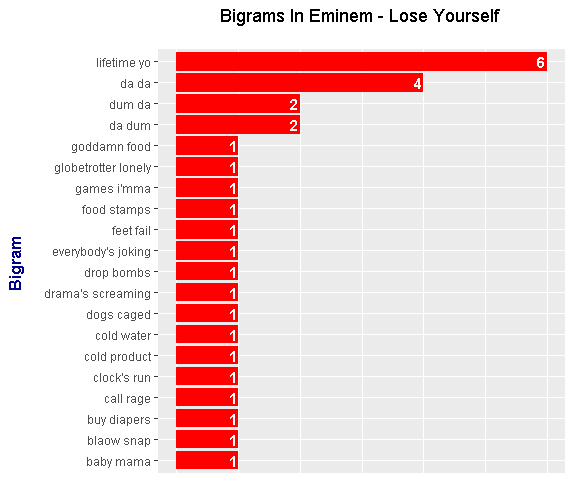

Bigrams In Lose Yourself

The most frequent bigram is lifetime yo, followed by da da. These results are not very informative, but the bigram analysis was worth a try.

Sentiment Analysis On Eminem - Lose Yourself

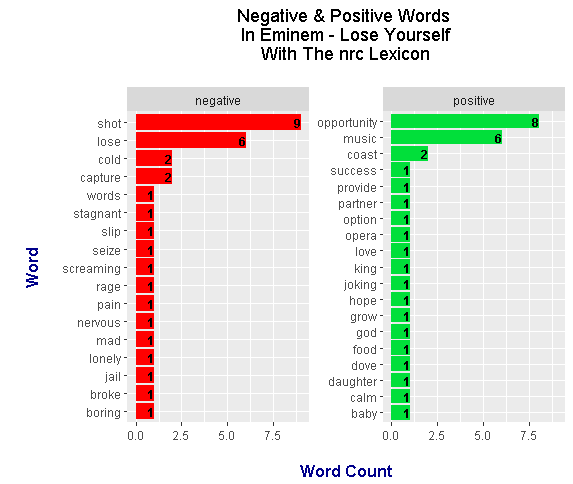

nrc Lexicon

Under the nrc lexicon, there is a near 50-50 split between positive and negative words in Eminem's Lose Yourself. The top positive word is opportunity and the top negative word is shot. I am skeptical that music should count as a positive word.

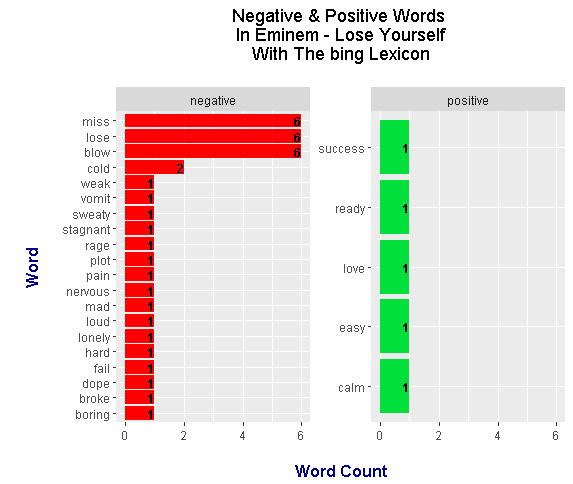

bing Lexicon

With the bing lexicon, the results are more skewed toward the negative side than with the nrc lexicon. This makes sense, as there is a lot of emotion and aggression in the rapping.

References

- R Graphics Cookbook by Winston Chang

- Text Mining with R: A Tidy Approach by Julia Silge and David Robinson

- Rap lyrics from a lyrics website (most likely azlyrics.com)
