Putting curie on Auto-Pilot

I was looking over the guidelines for suggesting posts to curie in the chat room yesterday and I wondered whether I could automate part of the curation process.

It didn't take a lot of mulling over the idea. I knew I had to try and see what I could do. @furion's steemtools library would come in handy once again. So, thank you for that!

Here's the rationale behind what I did:

- parse the blockchain for the timeframe between the last 6 and the last 20 hours

- look for comment operations (blogposts and comments), restricted to blogposts

(1). The link would be retrieved if all the following conditions are met:

- author has reputation < 60

- author has < 100 followers

- author has < 3000 steem power

(2). Then I would look into these authors' blogs for their last 4 blogposts (assuming that 4 is a decent number of higher quality posts one can have in the course of the last two days).

(3). If a post has earned < 10 SBD and is in the desired timeframe (between the last 6 and 20 hours), give me the link.

(4). The links would appear on screen as they are retrieved, but they would also be saved to file (txt).
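The criteria above can be sketched as a plain predicate, independent of any blockchain library. This is only an illustration: the `Candidate` record and the function names are hypothetical, and in the actual script the values come from steemtools rather than being passed in by hand.

```python
from collections import namedtuple

# Thresholds taken from the criteria listed above.
MAX_REPUTATION = 60
MAX_FOLLOWERS = 100
MAX_STEEM_POWER = 3000
MAX_PAYOUT_SBD = 10
MIN_AGE_HOURS = 6
MAX_AGE_HOURS = 20

# Hypothetical record holding everything the filter needs for one post.
Candidate = namedtuple(
    "Candidate",
    ["reputation", "followers", "steem_power", "payout_sbd", "age_hours"],
)


def author_qualifies(c):
    """Step (1): the author is below all three thresholds."""
    return (
        c.reputation < MAX_REPUTATION
        and c.followers < MAX_FOLLOWERS
        and c.steem_power < MAX_STEEM_POWER
    )


def post_qualifies(c):
    """Step (3): low payout and posted between 6 and 20 hours ago."""
    return (
        c.payout_sbd < MAX_PAYOUT_SBD
        and MIN_AGE_HOURS < c.age_hours < MAX_AGE_HOURS
    )


def qualifies(c):
    """A link is kept only if both the author and the post qualify."""
    return author_qualifies(c) and post_qualifies(c)
```

Keeping the thresholds as named constants makes it easy to adjust them if the curie guidelines change.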

The output on the screen contains duplicate links (some links appearing more than once) but the final text file is clean, containing only unique links that meet all the criteria specified.
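Duplicates show up because the same author can be encountered several times during the replay, and each time their last 4 blogposts are checked again. One possible way to drop the repeats while keeping the order in which links were found is sketched below; the helper name `unique_in_order` is made up for this example, and a set is used for the membership check, which may be faster on long lists than scanning a list.

```python
def unique_in_order(links):
    """Return the links with duplicates removed, keeping first-seen order."""
    seen = set()
    unique = []
    for link in links:
        if link not in seen:
            seen.add(link)
            unique.append(link)
    return unique


def save_links(links, path):
    """Write the unique links to a text file, one per line."""
    with open(path, "w") as f:
        for link in unique_in_order(links):
            f.write(link + "\n")
```

For example, `unique_in_order(["a", "b", "a"])` keeps the first `"a"` and the `"b"` and drops the repeated `"a"`.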

The Code

It takes up to an hour to run the code to completion on a public node, and much less on a local node.

To run it, you need Python (I use 3.4) and steemtools. Make sure to have successfully installed all the dependencies. A guide to steemtools is provided by @furion in his release post.

And here's my code:

    from steemtools.blockchain import Blockchain
    from steemtools.base import Account, Post

    b = Blockchain()
    ac = Account

    curr_block = b.get_current_block()
    # 24000 blocks is ~20 hours at one block every 3 seconds
    st_block = curr_block - 24000

    postlist1 = []
    postlist2 = []

    # 'replaying' the blockchain, starting 20 hours ago and ending ~6 hours ago
    # (7200 blocks is ~6 hours)
    # filtering by comments (blogposts and comments)
    for event in b.replay(start_block=st_block, end_block=curr_block - 7200, filter_by=['comment']):
        post = event['op']['permlink']
        author = event['op']['author']
        reputation = ac(author).reputation()
        steem = ac(author).get_sp()
        followers = ac(author).get_followers()

        # permlinks of replies start with 're-', so this keeps blogposts only
        if post[:3] != 're-' and reputation < 60 and steem < 3000 and len(followers) < 100:
            # looking into their blogs and retrieving the last 4 blogposts
            # if the post has a payout of less than 10 SBD and is posted in the desired timeframe
            # give me the link
            blog = ac(author).get_blog()
            for blog_post in blog[:4]:
                payout = Post.payout(blog_post)
                # elapsed time in minutes: 360 = 6 hours, 1200 = 20 hours
                timeEl = Post.time_elapsed(blog_post)/60
                if payout < 10 and timeEl > 360 and timeEl < 1200:
                    try:
                        print(Post.get_url(blog_post))
                        postlist1.append(Post.get_url(blog_post))
                    except Exception:
                        continue

    # removing duplicates
    for item in postlist1:
        if item not in postlist2:
            postlist2.append(item)

    # writing the final results cleanly to file
    # (the with block closes the file on exit)
    with open('curie.txt', 'wt') as f:
        for line in postlist2:
            f.write(line+'\n')
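The offsets 24000 and 7200 in the code follow from Steem producing one block every 3 seconds. A small sketch of that arithmetic is shown below, in case a different time window is wanted; the helper names `hours_to_blocks` and `replay_window` are invented for this example and are not part of steemtools.

```python
BLOCK_INTERVAL_SECONDS = 3  # Steem produces one block every 3 seconds


def hours_to_blocks(hours):
    """Convert a number of hours into the equivalent number of blocks."""
    return int(hours * 3600 // BLOCK_INTERVAL_SECONDS)


def replay_window(current_block, oldest_hours=20, newest_hours=6):
    """Return (start_block, end_block) for the 6 to 20 hour window."""
    start_block = current_block - hours_to_blocks(oldest_hours)
    end_block = current_block - hours_to_blocks(newest_hours)
    return start_block, end_block
```

With these helpers, `hours_to_blocks(20)` gives 24000 and `hours_to_blocks(6)` gives 7200, matching the hard-coded values in the script.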

You can get this code from my GitHub as well. Use it at your discretion. If you need help with modifying or adapting it, let me know through a message here or on the chat.

I ran the program earlier today and I got ~650 unique links in the output file. Given that these links meet the criteria (<60 rep, <3000 sp, <100 followers, <10 SBD, 6-20 hours timeframe), I can only imagine how many good posts may be left behind.

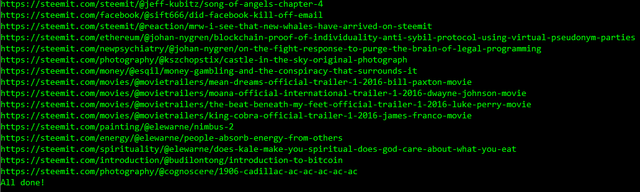

Automation is most definitely needed in the curation process. I'm going to end by pasting the last ~50 links from my output file of earlier today. Some of these links may still meet the criteria for curie:

https://steemit.com/proposal/@cryptofunk/my-marriage-proposal-at-the-highest-point-in-hong-kong-victoria-peak

https://steemit.com/steemit/@steemrocket/13-ways-to-promote-your-next-steemit-post-infographic

https://steemit.com/mars/@fathermayhem/is-mars-really-a-red-planet

https://steemit.com/easy/@cashni89/easy-cash-machines-review

https://steemit.com/steemit/@digital-wisdom/posting-our-first-bounty

https://steemit.com/graffiti/@jahvascrypt/year-round-metal-enjoyment-nor-eastour-2016

https://steemit.com/steemit/@stepka/may-it-be-in-steemit-s-plans-to-build-special-rooms-for-every-native-language-in-the-future-a-little-question-suggestion

https://steemit.com/engineering/@engineercampus/product-lists-and-the-avtomat-kalashnikova

https://steemit.com/story/@franks/a-short-story-brighton-city-of-gold-by-frank-sonderborg-republic-of-b-chapter-3

https://steemit.com/mourning/@roguesoldier407/real-talk-papi-s-sonnet

https://steemit.com/poetry/@roguesoldier407/real-talk-the-weird-lonely-drunk-at-the-pub-with-the-notepad

https://steemit.com/blog/@uceph/lucid-dreaming-journal-day-5-chaos

https://steemit.com/poetry/@timbot606/the-man-in-the-moon-an-original-poem

https://steemit.com/art/@gavicrane/detailed-watercolor-guide

https://steemit.com/howto/@ejaredallen/how-to-write-a-blogpost-2016926t182111633z

https://steemit.com/spanish/@bluechoochoo/como-y-donde-ver-el-debate-presidencial-en-linea

https://steemit.com/cord-cutting/@bluechoochoo/how-to-watch-the-us-presidential-debate-livestreams-online-without-cable-authentication

https://steemit.com/life/@jg02/6-steps-to-conquer-fear

https://steemit.com/life/@jonjon1/unraveling-my-truth

https://steemit.com/uni/@ellehendery/induction

https://steemit.com/outcast/@dogtanian/makes-no-difference

https://steemit.com/money/@scan0017/100-trillion-reasons

https://steemit.com/news/@lyndsaybowes/the-ethnic-cleansing-of-palestine

https://steemit.com/food/@chef.cook/chocolate-biscuits

https://steemit.com/news/@steemtalk/steem-talk-your-daily-best-of-steemit-digital-newspaper-evening-edition-20

https://steemit.com/history/@matthew.raymer/strangers-in-a-strange-land

https://steemit.com/business/@matthew.raymer/deadlines-and-blind-dates

https://steemit.com/bitcoin/@spanzy/bitcoin-mining-the-world-s-first-decentralized-currency

https://steemit.com/politics/@the.dudermensch/the-top-5-factors-that-changed-my-political-awareness-forever

https://steemit.com/blockchain/@ttm/ubs-guides-4-banks-on-blockchain-settlement-system

https://steemit.com/photography/@unhorsepower777/photography-people-in-black-and-white-part-2-original-photography-by-unhorsepower777

https://steemit.com/beyondbitcoin/@jennane/why-i-m-grateful-to-steemit-useful-links

https://steemit.com/night/@tskeene/malt-liquor-stories-night-train

https://steemit.com/anarchism/@dsayers/the-case-against-political-voting

https://steemit.com/writing/@thefinalgirl/original-work-you-ll-always-find-your-way-back-home-chapter-3-part-1

https://steemit.com/relationship/@decimus/cut-the-arrogance-replace-with-confidence

https://steemit.com/facebook/@sift666/did-facebook-kill-off-email

https://steemit.com/fallout4/@scottii/nuka-world-mad-mulligan-s-mine

https://steemit.com/heroesofthestorm/@scottii/how-to-with-the-lost-vikings-heroes-of-the-storm

https://steemit.com/neuquen/@matiasm/neuquen-argentina-un-dia-de-primavera

https://steemit.com/movies/@movietrailers/mean-dreams-official-trailer-1-2016-bill-paxton-movie

https://steemit.com/movies/@movietrailers/moana-official-international-trailer-1-2016-dwayne-johnson-movie

https://steemit.com/movies/@movietrailers/the-beat-beneath-my-feet-official-trailer-1-2016-luke-perry-movie

https://steemit.com/movies/@movietrailers/king-cobra-official-trailer-1-2016-james-franco-movie

https://steemit.com/mtg/@collin-stevens/magic-the-gathering-top-kaladesh-cards-minus-gearhulks-planeswalkers-and-the-rare-land-cycle-let-me-know-if-i-missed-any

https://steemit.com/debates/@atosales/my-coverage-of-the-reality-show-known-as-the-debates

https://steemit.com/steemit/@jeff-kubitz/song-of-angels-chapter-4

https://steemit.com/steemit/@reaction/mrw-i-see-that-new-whales-have-arrived-on-steemit

https://steemit.com/photography/@kszchopstix/castle-in-the-sky-original-photograph

https://steemit.com/introduction/@budilontong/introduction-to-bitcoin

https://steemit.com/photography/@cognoscere/1906-cadillac-ac-ac-ac-ac-ac

If you have suggestions for other useful scripts, please let me know!

To stay in touch with me, follow @cristi

Cristi Vlad, Self-Experimenter and Author

This is a good idea, to generate a list of users, which can then be manually curated further to eliminate plagiarism etc.

that could be handled in part too by automation. anyhow, I think the last touch should be human, while most of the filtering (removing the noise) should be done through automation.

Nice, and thanks for sharing the source code! Resteemed :)

thank you!

Auto-curie!! I like the name ^^

lack of inspiration @lemouth :)

Are those the criteria currently being used by the manual curators? If so, then it definitely makes sense to automate it. If the human curators are doing something different, then I would not, because they are doing a really good job, and I'd hate for automation to make them start missing good posts that their current process catches.

Another thought would be to eventually create a portal where the Curie curators could view all the filtered posts, and even have a way to flag/categorize posts and communicate/share information with each other to minimize repeated/duplicate reviewing of the same posts by multiple members.

yes, those are a few of the criteria. it's kind of inhumane to manually curate so many posts every day - at least 600 met the criteria when I ran the code...

Yeah, it's actually kind of insane how they manage to sift through all the posts and find as many good ones as they do!

Good ideas. Thank you!

Automating processes - it's the only way to really scale out something. Good stuff.

in the world of today, yes...especially if it involves technology

So this automates the ability of finding posts that meet the Curie criteria?

What would be nice is a website that links to qualifying posts and is broken down by time (i.e. hourly time sections). This would help with the manual curation.

https://steemdb.com/comment/curie

Does this work? :)

wow. what a quick response! yes, I think it works great. it's much more convenient to have everything on a page than having to run the code!

good job!

Curie is an awesome project. I'm surprised they made the criteria to where the rep has to be less than 60 and you have to have less than 100 followers. There are a lot of people at our reputation level and follower count that badly need help. It is an awesome project!

I think one of the most urgent priorities is to retain new users. I know some of us with higher rep are being frustrated with the rewards, but still, retention of new users is more important. IMHO.

Yeah I agree. I can see a big need for that for sure. I have got 4 people onto this platform in real life and they have already quit. For people like us it makes it a tough thing, because there are suddenly going to be very few people who can actually make a sustainable income off this right now. (Average $100 / day in SBD) We are pushing hard but I fear that if things continue the way they have been, the only ones that will be able to sustain themselves will be someone like Charlie Shrem. It is putting out good stuff for sure, but if at least 100 content creators can't be sustainable it sort of worries me.

I'm here because of a bot announcement about the sharing of my link in this place, and this has been a great chance to know this project! Thanks!

good job, dude!