Human eye has a resolution of 576 megapixels. Can a DSLR camera having 576 megapixels resolution ever get developed? How? Why not?

The Human Eye as a Camera

The human eye has a hardware capacity of about 120 megapixels (see: Dave Haynie's answer to What is the resolution of the human eye in megapixels?), which seems great. But only about 6 million of those are color sensors, and of those, only about 400,000 detect the blue end of the visible spectrum. And in daylight, the sun oversaturates the monochrome receptors, so they shut off and only the color sensors, which mostly do not saturate (the blue-sensitive S-cones can), are active. So your eye is basically a 6 megapixel camera, most of the time.

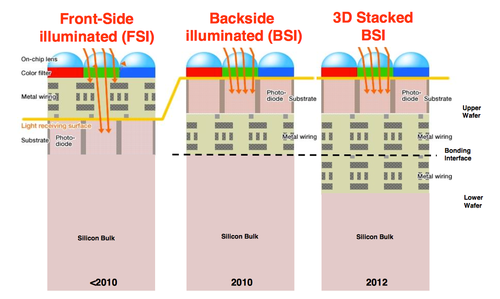

And I have even worse news for you… your eye has a pretty lousy sensor, as 6 or even 120 megapixel sensors go. In camera terms, it’s a front-side illuminated sensor… the 2000s called, and they want your eye back. The image sensor in your eye is located behind all the “wiring”… blood supply in this case. Modern cameras, and the eye of the octopus, have their sensors in the front, their wiring and other stuff in the back. That’s called a backside-illuminated sensor because the sensors are built at the bottom of a chip, but in use the chip is flipped around so the sensors are in the front.

And worse still, you have this big bundle of wiring (well, nerves) punching right through the sensor, a little off-center. So each eye has a blind spot, roughly 15 degrees off to the side of where you’re looking, where it can’t see anything at all. Never noticed? Well, we have really good software driving this hardware. Really good software. Your brain is more powerful at this stuff than a room-sized supercomputer.

So I have a few Olympus Micro Four Thirds cameras, a Pen F and an OM-D E-M5 Mark II, which have a thing called “hi-res” mode. They shoot 8 shots, moving the sensor in 1/2 pixel increments, to deliver two interstitially overlapping 20 or 16 megapixel images with full R,G,B color on every pixel. The result is an image that’s roughly 50 or 40 megapixels in effective resolution.

Your eye does that same kind of thing, only better. Microtremors move your eye constantly, about 70–110 times per second, while your brain (that software) is constantly fusing the latest image with a bunch of previous images. The result is that the best we can see is about 4x the resolution of the actual eye, close to 500 megapixels in the dark, or maybe 24–26 megapixels in the bright sun (and again, not exactly an equivalent pixel, but that’s the best equivalence I can come up with). And of course, we’re actually seeing a fused stereo image from two of these cameras, so that boosts the resolution to maybe around 50 megapixels, at least in a sense.
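If you want to check that arithmetic, here is a minimal sketch in Python. The inputs are just the figures quoted above. The 4x fusion gain is the rough estimate from this answer, and treating the stereo pair as a simple doubling is an assumption made for illustration, not a measured fact.

```python
# Back-of-the-envelope numbers only; "megapixels" here is a loose equivalence.
TOTAL_RECEPTORS_MP = 120   # rods plus cones, as quoted above
COLOR_RECEPTORS_MP = 6     # cones only, the ones active in bright light
FUSION_GAIN = 4            # rough gain from microtremor fusion (estimate from the text)
STEREO_FACTOR = 2          # assumption: two eyes simply double the count


def effective_megapixels(receptors_mp, gain=FUSION_GAIN):
    """Scale the raw receptor count by the assumed fusion gain."""
    return receptors_mp * gain


dark_mp = effective_megapixels(TOTAL_RECEPTORS_MP)
bright_mp = effective_megapixels(COLOR_RECEPTORS_MP)
stereo_bright_mp = STEREO_FACTOR * bright_mp

print(f"dark: ~{dark_mp} MP, bright: ~{bright_mp} MP, stereo bright: ~{stereo_bright_mp} MP")
```

That works out to 480, 24, and 48, which is where the “close to 500”, “24–26”, and “around 50” figures land.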

How about the lens — a camera is only as good as the lens, after all? We have a very, very simple lens. A single element, in fact, while I have camera lenses with 24 or more lens elements. And the lens is focused by changing its shape… so as we get older, it becomes less flexible, and the focus range shrinks. I have camera lenses decades older than I am that still focus well. Did nature cheap out on the lens?

Well, compared to a camera, a bit. But nature made up for it in parts of the sensor design. The average eye is about 22mm across, just a bit larger than the diagonal of a Micro Four Thirds sensor... but the spherical nature means the surface area is around 1100mm^2, a bit larger than a full-frame 35mm camera sensor. The highest pixel resolution on a 35mm sensor is on the Canon 5Ds, which stuffs 50.6Mpixels into about 860mm^2. Being spherical rather than flat, there’s no need for all those camera lens elements. Many of the elements in a typical lens are there to project an image clearly on a flat field, your camera sensor. A simple lens naturally projects onto a curved surface. Like… oh, I dunno, your eye! In fact, some camera companies have been looking into curved field sensors to deliver a better image in a smaller area with simpler optics. We got there millions of years earlier.
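To put the size comparison in numbers, here is a rough sketch. The sensor dimensions are the standard 36 x 24 mm for full frame and 17.3 x 13 mm for Micro Four Thirds; the eye figures are the ones quoted in this answer. Spreading all 120 million receptors evenly over 1100mm^2 is a simplifying assumption, since the real retina is nowhere near uniform.

```python
import math

# Standard sensor dimensions in millimetres (width, height).
FULL_FRAME_MM = (36.0, 24.0)
MICRO_FOUR_THIRDS_MM = (17.3, 13.0)

EYE_SURFACE_MM2 = 1100.0   # figure quoted in the text
EYE_RECEPTORS_MP = 120.0   # figure quoted in the text
CANON_5DS_MP = 50.6        # figure quoted in the text


def area_mm2(size_mm):
    """Area of a flat rectangular sensor."""
    width, height = size_mm
    return width * height


def diagonal_mm(size_mm):
    """Diagonal of a flat rectangular sensor."""
    width, height = size_mm
    return math.hypot(width, height)


def density_mp_per_mm2(megapixels, area):
    """Average megapixels per square millimetre (assumes uniform spacing)."""
    return megapixels / area


full_frame_area = area_mm2(FULL_FRAME_MM)
mft_diagonal = diagonal_mm(MICRO_FOUR_THIRDS_MM)
camera_density = density_mp_per_mm2(CANON_5DS_MP, full_frame_area)
eye_density = density_mp_per_mm2(EYE_RECEPTORS_MP, EYE_SURFACE_MM2)

print(f"full frame: {full_frame_area:.0f} mm^2, MFT diagonal: {mft_diagonal:.1f} mm")
print(f"5Ds: {camera_density:.3f} MP/mm^2, eye: {eye_density:.3f} MP/mm^2")
```

Under those assumptions the full-frame sensor comes to 864mm^2, the Micro Four Thirds diagonal to about 21.6mm, and the eye packs roughly twice as many receptors per square millimetre as the 5Ds does pixels.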

Real cameras have an advantage on shutter speed. Anything too fast going by we’ll see as a blur, as again, we need to integrate a number of images to really understand what we’re seeing. Some cameras can shoot at 1/32,000th of a second, and shoot full resolution images at 60fps or so. Special-purpose high-speed cameras go even faster. Naturally, we have made cameras specifically to photograph things beyond the reach of our eyes.

Cameras and Resolution

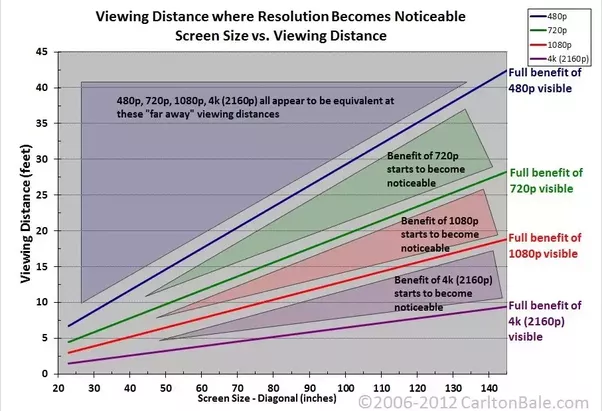

But we also drop off fast in practical resolution. Think about HDTV, only around 2 megapixels per image. If you’re watching my 70″ HDTV from more than 15 feet away and you have normal vision, you can’t really see the high-definition part. If you’re five feet away, you can probably tell it’s not a 4K television (8 megapixels). So that 16–24 megapixel mirrorless or DSLR? Pretty much all you need for most purposes.
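Here is a quick sketch of why those distances work out the way they do. It assumes “normal vision” means resolving detail down to about 1 arcminute (the usual 20/20 rule of thumb) and a 16:9 screen; the function name and the threshold constant are just for illustration.

```python
import math

ACUITY_ARCMIN = 1.0  # assumption: ~1 arcminute of detail for 20/20 vision


def pixel_arcmin(diagonal_in, horizontal_px, distance_in, aspect=(16, 9)):
    """Angle one pixel subtends at the viewer's eye, in arcminutes."""
    w, h = aspect
    width_in = diagonal_in * w / math.hypot(w, h)   # screen width from its diagonal
    pitch_in = width_in / horizontal_px             # width of a single pixel
    angle_rad = 2 * math.atan(pitch_in / (2 * distance_in))
    return math.degrees(angle_rad) * 60


hd_at_15ft = pixel_arcmin(70, 1920, 15 * 12)
hd_at_5ft = pixel_arcmin(70, 1920, 5 * 12)
uhd_at_5ft = pixel_arcmin(70, 3840, 5 * 12)

for label, value in [("HD at 15 ft", hd_at_15ft),
                     ("HD at 5 ft", hd_at_5ft),
                     ("4K at 5 ft", uhd_at_5ft)]:
    verdict = "pixels resolvable" if value > ACUITY_ARCMIN else "finer than the eye resolves"
    print(f"{label}: {value:.2f} arcmin ({verdict})")
```

At 15 feet an HD pixel on a 70″ screen comes out well under 1 arcminute, so extra resolution would be wasted. At five feet the HD pixel is nearly 2 arcminutes while a 4K pixel is just under 1, which is why the difference becomes visible up close.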

But you’re still demanding higher pixel counts, eh? We see APS-C with a sweet spot at about 24 megapixels, Micro Four Thirds at 20 megapixels, and full frame all over the place, from 12 megapixels up to 50 megapixels. That’s because professional cameras are built to solve professional photo problems, and that’s not necessarily a one-size-fits-all situation.