Blueprints for Spaceship Earth - A Tokenized Resource Economy based on Ecological Economic Models

Mathematical and computational models of physical phenomena are necessarily incomplete by construction, in the same sense that we say "the map is not the territory". Perhaps they must also be falsifiable, in the same way a scientific theory must be falsifiable.

If this is the case, then, intuitively speaking, there may be a deep correspondence between scientific models and scientific theories, both of which are necessarily falsifiable, much like the isomorphism between types and proofs (see the Curry–Howard isomorphism).
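For readers who haven't met the Curry–Howard correspondence, here is a minimal Lean 4 sketch of it. A proposition is read as a type, and a proof is a program of that type: proving "from p and p → q, conclude q" is literally function application. The theorem and definition names are made up for illustration, and nothing here models a physical system yet.

```lean
-- Read as logic: from evidence for p and for p → q, we obtain q.
-- Read as a program: apply the function hpq to the argument hp.
theorem modus_ponens {p q : Prop} (hp : p) (hpq : p → q) : q :=
  hpq hp

-- The same term over ordinary data types rather than propositions.
def apply' {α β : Type} (a : α) (f : α → β) : β :=
  f a
```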

Scientific theories are founded upon mathematical and epistemological proofs. Scientific models can be constructed much like certain verified computer programs are constructed: by interconnecting dependent types. Real-world software is a bit hairier, but let’s assume for a second that humans can write formally verified software and connect these pieces together.

This kind of hypothetical software sits at roughly the same level as a mini scientific theory or framework. If you can build it, it works somewhat like a scientific theorem, and it can actually do stuff! I’d imagine this is something like the holy grail of software engineering that we are striving towards.

Although it’s common to think of physical models as a directed graph of connected black boxes that take various inputs and produce outputs (think of a Simulink diagram), the data going into and out of the models is less important than how the data types interrelate.

The inputs to the black boxes have types, and so do the outputs. Each box constrains which types can come in and go out; if you connect the wrong type, it will break and the model will not run. The way these types relate and interconnect must make sense according to a scientific model based on some theory; otherwise the code should not be compiled (turned into executable code), since it’s not ‘proven’.
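As a rough sketch of "the wrong type breaks the model", here is a small Python example in which every block port carries a unit label and wiring is refused when the labels disagree. Python can't refuse to compile here, so the check runs while the graph is being assembled, before any data flows; that is only a stand-in for the compile-time check a dependently typed language could give. The `Port`, `Block` and `connect` names, the unit strings and the toy climate blocks are all hypothetical.

```python
from dataclasses import dataclass


class TypeMismatch(Exception):
    """Raised when two ports with incompatible types are wired together."""


@dataclass(frozen=True)
class Port:
    name: str
    unit: str  # hypothetical unit label, e.g. "K" or "W/m^2"


@dataclass
class Block:
    name: str
    inputs: dict   # port name -> Port
    outputs: dict  # port name -> Port


def connect(src: Block, out_port: str, dst: Block, in_port: str) -> tuple:
    """Wire src.out_port into dst.in_port, refusing the edge if the types differ.

    The check happens while the model graph is assembled, before any data
    flows: the runtime analogue of refusing to compile an unproven model.
    """
    produced = src.outputs[out_port]
    expected = dst.inputs[in_port]
    if produced.unit != expected.unit:
        raise TypeMismatch(
            f"{src.name}.{out_port} produces {produced.unit}, "
            f"but {dst.name}.{in_port} expects {expected.unit}"
        )
    return (src.name, out_port, dst.name, in_port)


# Hypothetical blocks for a toy climate model.
radiation = Block("radiation", inputs={}, outputs={"flux": Port("flux", "W/m^2")})
surface = Block(
    "surface",
    inputs={"flux": Port("flux", "W/m^2")},
    outputs={"temperature": Port("temperature", "K")},
)
ocean = Block("ocean", inputs={"heat_flux": Port("heat_flux", "W/m^2")}, outputs={})

edge = connect(radiation, "flux", surface, "flux")  # accepted
try:
    connect(surface, "temperature", ocean, "heat_flux")  # K into W/m^2
except TypeMismatch as err:
    print(err)
```

A unit string is the crudest possible "type"; a real framework would compare structured dimensions rather than labels, but the shape of the check is the same.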

For example, imagine a software system for climatology that simulates and predicts weather patterns. Though the data is the true test of falsifiability at the end of the day, the first step is to ensure that the types and interfaces used to design the software (to connect the functions and components, which I refer to as black boxes) make sense physically. That is, the model must make sense before you can hope the data makes sense.

If improper types, say improper physical units of measure, are used in some function somewhere, you have a fallacy in your proof or a bug in your computational model. Then we are doing worse than fudging the numbers, and we don’t only piss off the compiler; we could also unleash the wrath of politically motivated climate change deniers. We end up being wrong without knowing it and must psychologically prepare for the effects of the bullshit asymmetry principle (Brandolini’s law).
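To make the "improper units" failure concrete, here is a minimal dimensional-analysis sketch in Python. Each quantity carries exponents over four base dimensions; multiplying and dividing combine the exponents, while adding quantities of different dimensions is rejected. The `Quantity` class and the helper constructors are hypothetical, and the check happens at runtime, whereas a dependently typed language could reject the bad addition before the program ever runs.

```python
from dataclasses import dataclass

# Base dimensions, in a fixed order: length, mass, time, temperature.
DIMS = ("m", "kg", "s", "K")


@dataclass(frozen=True)
class Quantity:
    value: float
    dims: tuple  # exponent of each base dimension, in DIMS order

    def __add__(self, other: "Quantity") -> "Quantity":
        # Addition is only meaningful when the dimensions agree.
        if self.dims != other.dims:
            raise TypeError(f"cannot add {self.unit()} and {other.unit()}")
        return Quantity(self.value + other.value, self.dims)

    def __mul__(self, other: "Quantity") -> "Quantity":
        # Multiplication adds the dimension exponents.
        return Quantity(self.value * other.value,
                        tuple(a + b for a, b in zip(self.dims, other.dims)))

    def __truediv__(self, other: "Quantity") -> "Quantity":
        # Division subtracts the dimension exponents.
        return Quantity(self.value / other.value,
                        tuple(a - b for a, b in zip(self.dims, other.dims)))

    def unit(self) -> str:
        parts = [d if e == 1 else f"{d}^{e}"
                 for d, e in zip(DIMS, self.dims) if e != 0]
        return " ".join(parts) or "1"


def metres(x: float) -> Quantity:
    return Quantity(x, (1, 0, 0, 0))


def seconds(x: float) -> Quantity:
    return Quantity(x, (0, 0, 1, 0))


speed = metres(100.0) / seconds(20.0)
print(speed.value, speed.unit())  # 5.0 m s^-1

try:
    metres(1.0) + seconds(1.0)  # a "fallacy in the proof"
except TypeError as err:
    print(err)  # cannot add m and s
```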

That’s all very theoretical and remains to be proven sound. Practically speaking, we’ve built many of these physical models and simulators before: climatology, geology, and oceanography simulators all exist. Some were written in Fortran in the ’80s by our fathers, while more modern ones are used to upset the expectations of the everyday media consumer naively expecting a sunny day tomorrow. Certainly, some are more accurate than others. It’s also worth mentioning that there are many prior integrated systems built for Earth-scale simulation (from World3 through CAM) with fairly sophisticated models based on continuum mechanics, but I believe these could be greatly improved. More are likely to come out in the future.

In the same light, I’d imagine it’s possible to create a software ‘ecosystem’ that lets people openly and collaboratively connect their various physical and computational models of the Earth to build a big simulator that becomes incrementally more accurate as models are refined. It certainly wouldn’t be written in Fortran, though it could reference a lot of the work already done in various projects. Perhaps it would be written in something like Idris or dependently typed Haskell (I personally really like this Idris library and imagine it would be based on something like it: https://github.com/timjb/quantities).

The point is that types (which can be thought of as ‘that which allows different functions to compose and modules to interrelate’) could be designed to let implementations of the models plug together and interconnect these physical simulators (I know… easier said than done). Assuming this were possible, what’s so great about that?

Well, if we had this big (open and collaborative) Earth simulator with its various systems interconnected, it’s interesting to entertain the notion of following critical ecological economic indicators of value through it. Imagine these core indicators represented by the flow of crypto-tokens. By tokens I mean tokens in the sense used in distributed ledger technologies or blockchains (as clumsy as current implementations are).

Yes, with simulators like this the amount of data is enormous, but bear with me: much of this computation would likely be delegated to off-chain solutions (think state channels, or maybe something like Golem or Enigma), and only critical parts would be persisted on a blockchain, perhaps on a weekly basis. Trustless computation may be more important than frequent persistence in this case. The practicality of this could be a few decades away, but it’s quite an interesting line of thought to follow.
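One common way to keep heavy computation off-chain while persisting only the critical part is to publish a cryptographic commitment to the computed state. Here is a minimal Python sketch of that idea, assuming a weekly snapshot is a plain dictionary: it is serialized canonically and hashed with SHA-256, so any node that computed the same state arrives at the same digest. The snapshot fields are hypothetical, and which chain or contract would store the digest is deliberately left open.

```python
import hashlib
import json


def commit_state(snapshot: dict) -> str:
    """Return a SHA-256 hex digest committing to a model-state snapshot.

    Canonical JSON (sorted keys at every level, no extra whitespace) makes the
    digest independent of dict insertion order, so independent off-chain
    nodes that computed the same state produce the same commitment.
    """
    canonical = json.dumps(snapshot, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical weekly snapshot, built in two different key orders.
week_a = {"week": 1, "supplies": {"tree_biomass_t": 1.2e9, "co2_t": 3.1e12}}
week_b = {"supplies": {"co2_t": 3.1e12, "tree_biomass_t": 1.2e9}, "week": 1}

digest = commit_state(week_a)
print(digest == commit_state(week_b))  # True: same state, same commitment
```

Only the 64-character digest would need to go on-chain; anyone holding the full snapshot can recompute it to check that it matches.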

Why am I bringing crypto-tokens into this big Earth simulator idea? Why are they important, or even relevant? Well, a token is like a unit of something that flows, much like data flows through a model or a black box. From the reference frame of the network, tokens flow one way only and can’t be ‘double spent’ or split as they flow. Currently, token transactions are generally simple financial instruments that are irreversible because of a blockchain protocol’s immutability and single history (i.e. a common prefix).
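The "no double spending, single history" property can be illustrated with a toy in-memory ledger in Python. Balances change only through transfers appended to one ordered history, spending more than you hold is rejected, and total supply is conserved by transfers. This is a deliberately simplified stand-in: it has no signatures, networking or consensus, which are exactly what make the property hold between untrusting parties on a real blockchain. The `Ledger` class and account names are hypothetical.

```python
class InsufficientBalance(Exception):
    """Raised when a sender tries to spend tokens it no longer holds."""


class Ledger:
    """Append-only toy ledger: balances change only via recorded transfers."""

    def __init__(self, genesis: dict):
        self.balances = dict(genesis)
        self.history = []  # one ordered history of applied transfers

    def transfer(self, sender: str, receiver: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balances.get(sender, 0) < amount:
            # Spending the same tokens twice fails: they are already gone.
            raise InsufficientBalance(f"{sender} cannot spend {amount}")
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + amount
        self.history.append((sender, receiver, amount))

    def total_supply(self) -> int:
        # Transfers move tokens around but never create or destroy them.
        return sum(self.balances.values())


ledger = Ledger({"alice": 10})
ledger.transfer("alice", "bob", 10)
try:
    ledger.transfer("alice", "carol", 10)  # the same 10 tokens again
except InsufficientBalance as err:
    print(err)
print(ledger.balances, ledger.total_supply())
```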

That is, between untrusting parties or autonomous computational agents (think fast smart contracts) interacting with the network, the tokens flow in exactly the same way from the frame of reference of every participant. It’s objective in the same way we imagine matter and motion to be objective or, perhaps more accurately, it can be made objective whenever there is a disagreement.

If you have trouble understanding what I’m referring to, check out data-flow programming or try something like LabVIEW.

In data-flow languages, instead of writing a function that takes variables as inputs and returns some output, you create a block (a visual node instead of an everyday function) with data flowing in and data flowing out. It can be visualized like water flowing through a pipe or current through a circuit, except that it’s not only water and electrons but other types of things as well, and transformations occur inside the box.
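For readers without LabVIEW handy, here is a small Python sketch of the data-flow idea. Each node is a block that fires once all of its upstream inputs are available, and a topological ordering of the graph guarantees that. The toy rainfall-to-reservoir pipeline, its runoff coefficient and its starting volume are hypothetical numbers chosen only for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_dataflow(nodes: dict, edges: dict, sources: dict) -> dict:
    """Evaluate a data-flow graph.

    nodes:   name -> function taking its upstream values, in edge order
    edges:   name -> list of upstream node names feeding that node
    sources: name -> constant value for nodes with no upstream inputs
    """
    values = dict(sources)
    # TopologicalSorter takes node -> predecessors and yields every node only
    # after all of its predecessors, so each block fires when its data is ready.
    for name in TopologicalSorter(edges).static_order():
        if name in values:
            continue  # source values are already known
        upstream = [values[u] for u in edges.get(name, [])]
        values[name] = nodes[name](*upstream)
    return values


# Hypothetical toy pipeline: rainfall and catchment area -> runoff -> reservoir.
nodes = {
    "runoff_m3": lambda rain_m, area_m2: rain_m * area_m2 * 0.3,  # assumed runoff coefficient
    "reservoir_m3": lambda inflow_m3: inflow_m3 + 1000.0,        # assumed starting volume
}
edges = {"runoff_m3": ["rain_m", "area_m2"], "reservoir_m3": ["runoff_m3"]}
sources = {"rain_m": 0.02, "area_m2": 50_000.0}

result = run_dataflow(nodes, edges, sources)
print(result["reservoir_m3"])
```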

Blockchain tokens can also have different types, which could even correspond to different physical quantities with units of measure, like energy or mass. These tokens can flow as virtual representations of real-world flows. There is a synergy between tokens that represent some key ecological economic value and the energy flows in physical processes.

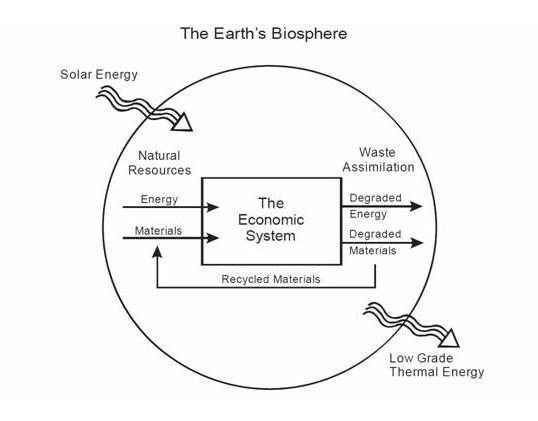

In a more abstract sense we can look at the earth as a thermodynamically open system that receives energy input from the Sun and dissipates some energy out back to the solar system. A good visual is the diagram seen in ecological economic models:

Figure 1 — The Earth as a open system of resource flow

Now, given this diagram and the concept outlined earlier of a token that can be used in physical models, can you begin to picture how these tokens could flow and what types of tokens there would be? In a naive sense it’s not so hard to do (ignoring the vast complexity of the big Earth simulator for now). As the diagram shows, the economic system is like a black box in which people create economic value relevant to the sphere of human activity (see Noosphere).

This type of economic value has no direct correspondence to the key ecological economic value indicators that we want to discover. However, note that the boundary or interface around the economic system (the actual rectangle around the economy in the diagram) is the connection between the economy and the biosphere / ecology.

This interface is what I think needs to be built out with tokens representing measurable physical quantities of ecological economic value that flow into and out of the economy. The economic systems that interface or interact with the external environment or ecological systems (i.e. produce or consume) draw on natural resource stocks and ecosystem services. In this context, I’m suggesting that natural resource stocks and ecosystem services be software representations that generate token supplies and flows (amounts and rates) based on a pluggable model framework.
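One way to picture the economy's boundary as a typed interface is a small Python sketch in which every crossing, whether extraction into the economy or waste out of it, is recorded against a token type with its own unit, and a crossing stated in the wrong unit is refused. The token types, units and amounts below are hypothetical placeholders, not a proposal for which quantities should actually be tokenized.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenType:
    name: str
    unit: str


# Hypothetical token types for flows across the economy/ecosystem boundary.
TIMBER = TokenType("timber", "t")
CO2 = TokenType("co2_emissions", "t CO2")
WATER = TokenType("freshwater", "m^3")


class Boundary:
    """Records typed flows into the economy (extraction) and out of it (waste)."""

    def __init__(self):
        self.inflow = defaultdict(float)   # drawn from natural stocks
        self.outflow = defaultdict(float)  # returned to ecosystem sinks

    @staticmethod
    def _check(token: TokenType, unit: str) -> None:
        # Every crossing must be stated in the token's own unit of measure.
        if unit != token.unit:
            raise TypeError(f"{token.name} is measured in {token.unit}, not {unit}")

    def extract(self, token: TokenType, amount: float, unit: str) -> None:
        self._check(token, unit)
        self.inflow[token] += amount

    def emit(self, token: TokenType, amount: float, unit: str) -> None:
        self._check(token, unit)
        self.outflow[token] += amount

    def net(self, token: TokenType) -> float:
        """Amount of this token that entered the economy minus the amount that left."""
        return self.inflow[token] - self.outflow[token]


boundary = Boundary()
boundary.extract(TIMBER, 120.0, "t")
boundary.extract(WATER, 5_000.0, "m^3")
boundary.emit(CO2, 35.0, "t CO2")
print(boundary.net(TIMBER), boundary.net(CO2))  # 120.0 -35.0

try:
    boundary.extract(WATER, 5.0, "t")  # wrong unit for freshwater
except TypeError as err:
    print(err)
```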

Whether through economic actions consuming raw resources or producing waste, I tend to think of ecological economic activity as accruing a potential ecological debt, and the key indicators of instability are the indicators of this debt. Also, somewhat separately, this interface to the natural world can be thought of as the source of provenance for supply chains that start with raw materials (think of the stage before raw materials).

If such tokens were constructed to represent these physical matter and energy flows (and perhaps other variants), they would need dynamic, changing supplies, as well as interdependence between their supplies and their supply rates of change. Not everything would make sense to model, but there are mathematical ways to calculate how these dynamic systems are coupled, and there should be a way to frame them around dependent types.

In modeling ecological systems, one can imagine a stock of natural resources that grows and decays at certain rates given certain conditions (or forcing functions). Ecological models typically use differential equations, such as predator-prey systems (see the Lotka–Volterra equations), and are constructed with stock and flow diagrams to model these supply and demand dynamics. If we allow modelers to construct these stock and flow models with the right data types (basically a type-based modeling framework for tokens), then we can connect these systems. The type system in this case, or some domain-specific language with primitives for these types, allows these models to be plugged together.
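To show what a stock and flow model looks like in code, here is a minimal Python sketch of the Lotka–Volterra predator-prey equations integrated with forward Euler steps. Each named term is a flow that fills or drains one of the two stocks:

d(prey)/dt = α·prey − β·prey·predator, d(predator)/dt = δ·prey·predator − γ·predator

Forward Euler is the simplest possible integrator and drifts over long runs; a real model would use a better scheme. The parameter values in the usage line are arbitrary.

```python
def lotka_volterra(prey0: float, predator0: float, alpha: float, beta: float,
                   delta: float, gamma: float, dt: float, steps: int) -> list:
    """Integrate the Lotka–Volterra equations with forward Euler steps.

    Returns the list of (prey, predator) stock levels, including the start.
    """
    prey, predator = prey0, predator0
    trajectory = [(prey, predator)]
    for _ in range(steps):
        # Flows into and out of each stock during this time step.
        births = alpha * prey
        predation = beta * prey * predator
        predator_growth = delta * prey * predator
        predator_deaths = gamma * predator
        prey += (births - predation) * dt
        predator += (predator_growth - predator_deaths) * dt
        trajectory.append((prey, predator))
    return trajectory


# Arbitrary parameters, starting away from equilibrium so the stocks oscillate.
traj = lotka_volterra(prey0=30.0, predator0=5.0, alpha=1.0, beta=0.1,
                      delta=0.075, gamma=1.5, dt=0.01, steps=1000)
print(traj[-1])
```

At the equilibrium prey = γ/δ and predator = α/β every flow balances and the stocks stay put, which makes a handy sanity check for the implementation.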

So, as a practical example, imagine the stock of biomass of living trees in the Amazon bioregion. This stock might be estimated through computer vision analysis of satellite imagery sampled at a certain rate, or even using a complementary set of sensors such as drone networks coupled with other statistical estimations from a trusted source of data.

This data can be fed into a model that runs one of these equations to adjust the token supply as the trees grow and die. Between samples the model predicts the stock; when a new sample arrives, the estimate is corrected toward the actual measured state instead of the simulated one. The supply levels are thus continuously regulated, somewhat like a feedback-control system driven by real-world data (perhaps an adaptive Kalman filter).
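Here is a minimal Python sketch of the predict/correct loop described above, using a plain (non-adaptive) scalar Kalman filter. The stock model is assumed to be simple proportional growth, x_next = a·x, and the growth factor, noise variances and biomass samples are hypothetical numbers. Between samples only the prediction step runs; when a sample arrives, the update step pulls the estimate toward the measurement.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """Plain scalar Kalman filter tracking one natural-resource stock.

    x: current estimate of the stock (e.g. tonnes of living biomass)
    p: variance of that estimate
    a: assumed per-step growth factor of the stock model (x_next = a * x)
    q: process-noise variance (distrust of the model between samples)
    r: measurement-noise variance (distrust of each sensor sample)
    """
    x: float
    p: float
    a: float
    q: float
    r: float

    def predict(self) -> float:
        # Run the model forward one step; uncertainty grows.
        self.x = self.a * self.x
        self.p = self.a * self.a * self.p + self.q
        return self.x

    def update(self, z: float) -> float:
        # Blend in measurement z using the Kalman gain k
        # (k near 0: trust the model; k near 1: trust the sensor).
        k = self.p / (self.p + self.r)
        self.x = self.x + k * (z - self.x)
        self.p = (1.0 - k) * self.p
        return self.x


# Hypothetical biomass samples; None means no satellite/drone sample that step.
samples = [1012.0, None, 1018.0, None, 1031.0]
kf = ScalarKalman(x=1000.0, p=100.0, a=1.01, q=4.0, r=25.0)
for z in samples:
    kf.predict()
    if z is not None:
        kf.update(z)
    print(round(kf.x, 1), round(kf.p, 2))
```

An adaptive variant would additionally re-estimate `q` and `r` from the observed residuals; that is omitted here to keep the core loop visible.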

Basically, by using a sensor network to receive data about the state of natural resources, and models of that data to predict changes of state between samples, we can create token types and token flow rates, each with a supply that is minted and regulated according to the model simulation. Supply increases and decreases accordingly.
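The last step, turning a model estimate into minting or burning, can be sketched in a few lines of Python. This assumes a hypothetical 1:1 peg (one token per whole unit of the estimated stock) and whole-token supply; in practice the peg, rounding rule and any rate limits would be design decisions for the model framework.

```python
def reconcile_supply(current_supply: int, estimated_stock: float) -> tuple:
    """Return (action, amount) that brings token supply in line with the model.

    Assumes a hypothetical 1:1 peg: one token per whole unit of estimated stock.
    """
    target = round(estimated_stock)
    delta = target - current_supply
    if delta > 0:
        return ("mint", delta)
    if delta < 0:
        return ("burn", -delta)
    return ("none", 0)


supply = 1_000
for estimate in [1_004.6, 998.2, 998.4]:  # hypothetical filtered estimates
    action, amount = reconcile_supply(supply, estimate)
    if action == "mint":
        supply += amount
    elif action == "burn":
        supply -= amount
    print(action, amount, supply)
```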

A good example of a modeling context like this is the carbon sequestration models built by the folks at the Natural Capital Project in a project called InVEST. I mention it to show that this is indeed feasible: people are already modeling carbon sequestration (matter flows) for local community forestry resource management, using a cost-benefit analysis based on natural capital. If it were possible to implement this in a scalable way, using collaborative modeling and blockchain tokens (or similar systems that can act as an autonomous computation engine to regulate token supplies), it could be quite powerful as a representation of real-world value and could work well with the future free market as a form of constraint.

When tokens are consumed or transformed by human economic processes, some stocks go down and others go up. Also, since these tokens are interconnected and form an interdependent chain of value, externalities and coupling behavior can be calculated before consumption, and ecological economic value (think of it as a basis for estimating ecological debt) can be estimated beforehand to comply with constraints like carrying capacity or the critical factors that lead to an ecosystem’s collapse or failure. If one token type is consumed or transformed at a high rate, it may likewise consume or produce other linked tokens at corresponding rates based on the model.
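Here is a minimal Python sketch of checking coupled effects before consumption. It assumes the coupling is linear: consuming one unit of a key token changes each linked stock by a fixed coefficient. It then projects the stocks and reports any that would fall below a floor standing in for carrying capacity or a collapse threshold. All coefficients, stock levels and floors are hypothetical; in the scheme described above they would come from the plugged-in models rather than a hard-coded table.

```python
# Hypothetical linear coupling: consuming one unit of the key token changes
# each linked stock by the given amount (negative = drawn down).
COUPLING = {
    "timber_t": {"timber_t": -1.0, "forest_carbon_t": -0.5, "habitat_ha": -0.01},
}

# Hypothetical current stocks and minimum safe levels.
STOCKS = {"timber_t": 10_000.0, "forest_carbon_t": 6_000.0, "habitat_ha": 120.0}
FLOORS = {"timber_t": 2_000.0, "forest_carbon_t": 5_000.0, "habitat_ha": 100.0}


def projected_stocks(token: str, amount: float, stocks: dict, coupling: dict) -> dict:
    """Apply the linked changes of consuming `amount` of `token` to a copy of the stocks."""
    projected = dict(stocks)
    for linked, per_unit in coupling[token].items():
        projected[linked] += per_unit * amount
    return projected


def check_consumption(token: str, amount: float, stocks: dict = STOCKS,
                      floors: dict = FLOORS, coupling: dict = COUPLING) -> list:
    """Before consuming, list the stocks that would fall below their floor."""
    projected = projected_stocks(token, amount, stocks, coupling)
    return [name for name, level in projected.items() if level < floors[name]]


print(check_consumption("timber_t", 1_000.0))  # []
print(check_consumption("timber_t", 3_000.0))  # ['forest_carbon_t', 'habitat_ha']
```

Note that the timber stock itself stays above its floor in the second case; it is the linked carbon and habitat stocks that would breach their limits, which is exactly the kind of externality the coupling is meant to surface.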

Given the data and the models, the natural capital stocks and ecosystem services of a bioregion, or perhaps the entire Earth, can be simulated as a collection of different types of tokenized stocks and flows. Such a system might be the basis for constructing local or global resource economies.

Another way to think about all this is through a metaphor. Take the Gaia hypothesis, which views the Earth as a whole system: a large network of coordinating organisms. Now, with such a digital interface modeling the relationship between the ecology and the economy, the Earth can also be thought of as having a digital self-representation, based on a large model of how it behaved in the past and where it is now (like a state machine), and a set of externality channels can be connected to our current global economy.

It’s like an Earth that is more ‘self-aware’ in a sense: it now has a digital memory and can make predictions using a model and simulation of itself. This is a basic feedback control system that the Earth can use to regulate itself. The better this simulation, the more likely we are to come up with better constraints for a resource economy, and the better we can represent ecological economic value and avoid ecological debt.

Though I realize this technology seems very futuristic, or simply impossible, I think it’s feasible in principle. I wrote this because ideas like this are blueprints that can act as seeds for building such technology. I imagine there are many variants of these blueprints. I’d be interested to know: what would your blueprints look like?
