Should we be getting worried?

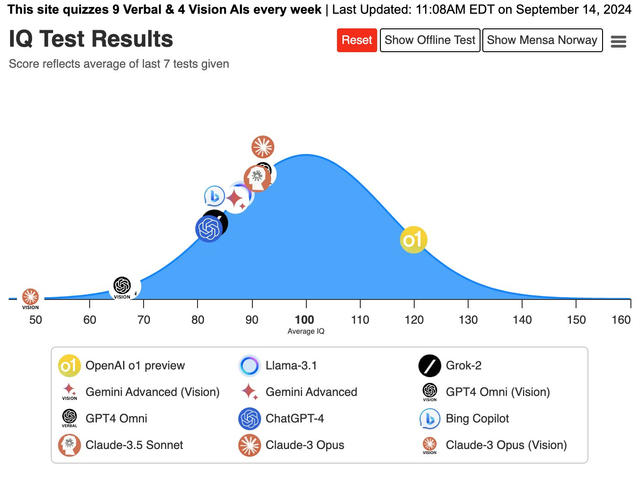

OpenAI's new model has an estimated IQ of 120, which is above roughly 90% of humans. My rough estimate is that by 2027, LLMs will test above virtually all humans.

I don't think this is (or will become) AGI. Passing reasoning tests is not the same as general reasoning, which requires other important capabilities such as long-term planning, online learning, and broad integration.

I do expect AGI to be achieved between 2030 and 2033. That will be true human-level equivalence. ASI will follow soon after.

Note: there are unpredictable double-exponential effects when AI is used to accelerate AI research. AI is already used to help train AI models, but there will be sharp discontinuities where the rate of improvement suddenly accelerates once AI systems themselves act as researchers and create a feedback loop. This means ASI cannot be forecast by extrapolating simple exponential trends, and possibly neither can AGI.
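To make the last point concrete, here is a toy sketch. It is not a model of real AI progress. It assumes a hypothetical capability curve C(t) = exp(a·exp(b·t)) with made-up parameters `a` and `b`, fits a simple exponential to the early part of that curve, and compares the extrapolation with the curve itself. The helper names `double_exponential` and `fit_exponential` are illustrative only.

```python
import math


def double_exponential(t: float, a: float = 1.0, b: float = 0.5) -> float:
    """Hypothetical toy capability curve C(t) = exp(a * exp(b * t))."""
    return math.exp(a * math.exp(b * t))


def fit_exponential(ts, ys):
    """Least-squares fit of log(y) = log(c0) + r * t; returns (c0, r)."""
    n = len(ts)
    logs = [math.log(y) for y in ys]
    mean_t = sum(ts) / n
    mean_l = sum(logs) / n
    cov = sum((t - mean_t) * (l - mean_l) for t, l in zip(ts, logs))
    var = sum((t - mean_t) ** 2 for t in ts)
    r = cov / var
    c0 = math.exp(mean_l - r * mean_t)
    return c0, r


# Observe only the "early" part of the toy curve (t = 0..4)...
early_ts = [0, 1, 2, 3, 4]
early_ys = [double_exponential(t) for t in early_ts]
c0, r = fit_exponential(early_ts, early_ys)

# ...then extrapolate the fitted exponential to a later time.
t_future = 10
predicted = c0 * math.exp(r * t_future)
actual = double_exponential(t_future)

print(f"exponential extrapolation: {predicted:.3g}")
print(f"toy double-exponential:    {actual:.3g}")
```

With these made-up parameters the exponential fit tracks the early points reasonably well, yet its forecast at t = 10 falls short of the toy curve by dozens of orders of magnitude. That is the sense in which a simple exponential trend cannot anticipate feedback-driven acceleration.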