PSYCHOLOGICAL CYBER WARFARE | Here's Your Chance To Write A Deep State Twitter Bot Army

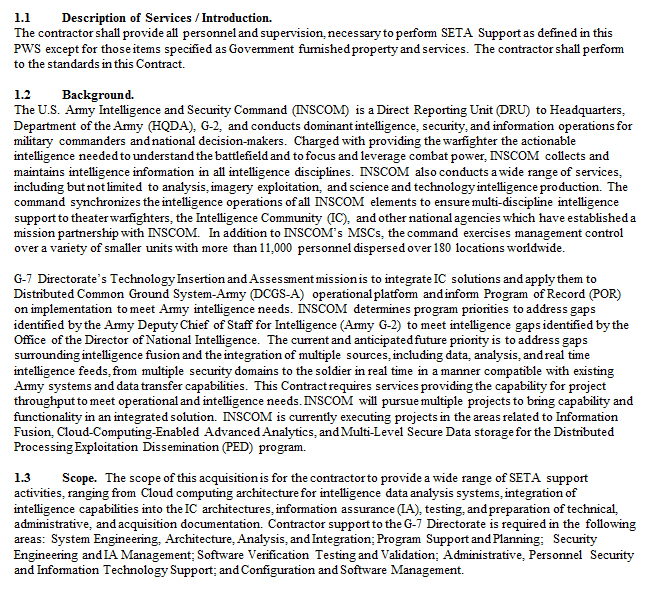

The United States Army Intelligence and Security Command (INSCOM) is a $6 billion a year cyber intelligence unit that reports directly to U.S. Army commanders and top government officials. Its job is to conduct intelligence, security, and information operations for both the United States Army and the National Security Agency (NSA).

After a year of non-stop complaining by every deep state Washington elite about how Russian Twitter bots and troll farms influenced the 2016 US election, the US Army has finally decided to pretend that it now also needs to build an online army of its own to help combat this nonexistent online threat from Russia and the rest of the world. They're not even trying to hide it.

Placing an advert on an official government business opportunities and procurement website, INSCOM is asking tech companies to send it white papers for a weaponized army of Twitter bots. They don't just want an army of accounts that post spam, though. They want an AI army that will also respond organically to interactions by generating between 3 and 10 unique statements from a single original social media statement, whilst still retaining the meaning and tone of the original. The AI tool, which is supposed to learn as it posts, is also required to translate foreign-language content (text, voice, images, etc.) from different social media platforms into English from a number of languages, including Arabic, Russian and Korean.

The Army also wants the AI to be able to derive sentiment from all social media content, which they state should at a minimum "distinguish negative, neutral, and positive sentiment based on collective, contextual understanding of the specific audience." They also want it to be able to determine anger, pleasure, sadness, and excitement. Probably the most worrying capability they're requesting is the ability to suggest whether "specific audiences could be influenced based on derived sentiment."

It seems to me they are looking for something that can organically evolve a conversation, either in the hope of changing people's perception of reality or simply to be used for entrapment.

INSCOM has an Administrative Control (ADCON) relationship with 1st Information Operations Command. INSCOM G7 executes materiel and materiel-centric responsibilities as a Capability Developer and as the Army proponent for design and development of select operational level and expeditionary intelligence, cyber, and electronic warfare systems. 1st Information Operations Command (Land) provides IO and Cyberspace Operations support to the Army and other Military Forces through deployable support teams, reachback planning and analysis, specialized training, and a World Class Cyber OPFOR in order to support freedom of action in the Information Environment and to deny the same to our adversaries.

0001: Content Translation of PAI

A. Capability to translate foreign language content (message text, voice, images, etc.) from the social media environment into English. Required languages are Arabic, French, Pashtu, Farsi, Urdu, Russian, and Korean.

B. Identify specific audiences through reading and understanding of colloquial phrasing, spelling variations, social media brevity codes, and emojis.

- 1 Automated capability for machine learning of foreign language content with accuracy comparable to Google and Microsoft Bing Translate. Must be able to incrementally improve over time.

- 2 Recognize language dialect to ensure effectual communication.

0002: Automated Sentiment Analysis (SA)

A. Capability to derive sentiment from all social media content.

- 1 At minimum, distinguish negative, neutral, and positive sentiment based on collective, contextual understanding of the specific audience.

  - a) Capability to determine anger, pleasure, sadness, and excitement.

- 2 Capability to recognize local colloquial and/or slang terms and phrases, spelling variations, social media brevity codes, capitalization, and emojis will be included.

- 3 Automated machine learning of SA must be able to incrementally improve over time.

  - a) Software should allow for heuristic updates to improve overall capability; e.g., manually suggest updates based on personal knowledge and experience.

B. Capability to suggest whether specific audiences could be influenced based on derived sentiment.
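The three-way sentiment requirement in 0002 (negative, neutral and positive, with emojis and slang counted) is the most concrete technical ask in the advert. As a rough illustration of the simplest way such a classifier can work, here is a minimal lexicon-based sketch in Python. The word list, weights, negation rule and threshold are all hypothetical toy values, not anything taken from the solicitation; a real system would presumably use a model trained on labelled social media data, which is what the "incrementally improve over time" requirement suggests.

```python
import re

# Hypothetical toy lexicon: word/emoji -> sentiment weight.
# Real systems learn weights like these from labelled data.
LEXICON = {
    "love": 2, "great": 2, "good": 1, "happy": 1, "win": 1,
    "hate": -2, "awful": -2, "bad": -1, "sad": -1, "angry": -1,
    "👍": 1, "😀": 1, "👎": -1, "😢": -1, "😡": -2,
}

# Words that flip the sign of the next lexicon hit (a crude negation rule).
NEGATORS = {"not", "no", "never"}


def tokenize(text: str) -> list[str]:
    # Lower-cased word tokens, plus any single non-space, non-word symbol,
    # which covers single-codepoint emojis such as "👍".
    return re.findall(r"\w+|[^\w\s]", text.lower())


def score(text: str) -> int:
    """Sum lexicon weights; a negator flips the sign of the next lexicon word."""
    total = 0
    negate = False
    for tok in tokenize(text):
        if tok in NEGATORS:
            negate = True
            continue
        weight = LEXICON.get(tok, 0)
        if weight:
            total += -weight if negate else weight
            negate = False
    return total


def classify(text: str, threshold: int = 1) -> str:
    """Map the summed score onto the three classes named in the advert."""
    s = score(text)
    if s >= threshold:
        return "positive"
    if s <= -threshold:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for post in ["I love this 👍", "This is not good", "The meeting is at noon"]:
        print(post, "->", classify(post))
```

For example, "This is not good" comes out negative because the negator flips the weight of "good". A word list like this is easily fooled by sarcasm, slang and misspellings, which is exactly why the advert asks for colloquial phrasing, spelling variations and brevity codes to be handled.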

0003: Content Generation Based off of PAI

A. Capability to translate English into Arabic, French, Pashtu, Farsi, Urdu, Russian, and Korean.

B. Automated capability to generate/create at least three, and up to 10, unique statements derived from one (1) original social media statement, while retaining the meaning and tone of the original.

- 1 Customize language in a dialect consistent with a specific audience including spelling variations, cultural variations, colloquial phrasing, and social media brevity codes and emojis.

0004: Assessment

- 1 Capability to continually inform MOE [measures of effectiveness] with/through sentiment analysis, content generation, and new target audience content.

- 2 Capability for end user to extract empirical data and visualize metrics of service, including number of content samples translated, number of content samples generated, number of content samples downloaded, number of conversations influenced by generated content, etc.

The advert states that they're not looking for hardware, firmware or software, but are instead seeking a full 'vendor service'. This means they're probably looking to outsource the Pentagon's Twitter troll army to an outside company. It'll be interesting to see who the final contract is awarded to.

This isn't the first time the US has been involved in this kind of social media manipulation. Back in 2011, under the Obama administration, US Central Command (Centcom) awarded a contract to a company called Ntrepid to develop software for setting up and anonymously managing hundreds of fake social media personas.

Revealed: US spy operation that manipulates social media | The Guardian - 03/17/2011

The US military is developing software that will let it secretly manipulate social media sites by using fake online personas to influence internet conversations and spread pro-American propaganda.

A few years later the Pentagon sank millions of dollars into researching how to influence Twitter users. Even the British Army has created a brigade of "Facebook Warriors". More recently, INSCOM put out a business opportunity similar to this one: in November last year it asked tech companies to submit white papers for a set of tools it could use for open-source intelligence gathering.

System Engineering Technical Assistance (SETA) Support for US Army Intelligence and Security Command G-7 Directorate.

Distributed Common Ground System-Army (DCGS-A) is the United States Army's primary system to post data, process information, and disseminate Intelligence, Surveillance and Reconnaissance (ISR) information about the threat, weather, and terrain to echelons. DCGS-A provides commanders the ability to task battle-space sensors and receive intelligence information from multiple sources.

DEFLECTION

This is totally a distraction aimed at making it look like this is the first time the US has been engaged in such warfare. Former MI5 officer Annie Machon told RT news that "the West has been running these so-called troll farms against other countries as well for a long time, so are they just trying to expand their operations by developing this new software? Or are they trying to disingenuously suggest to people that actually they haven’t done it before and only the Big Bad Russians, or the Big Bad Chinese, have run troll farms."

Either way, it's a total waste of time and money, because anyone who has been on social media for longer than five minutes can tell whether an account is an automated bot, even one controlled by AI. One thing I do know: I'm looking forward to bumping into one on Twitter soon.

Do you really know if that pro-Trump meme or far-right tweet is from a real person, or just an unmanned string of code that was purchased for pennies?

Although bots—automated accounts on social media—are certainly not a new phenomenon, they have a renewed political significance. Part of the Russian government’s well-documented interference in the 2016 U.S. election included pushing deliberately divisive messages through social platforms.

Investigators found that hundreds or thousands of dodgy Twitter accounts with Russian digital fingerprints posted anti-Clinton tweets that frequently contained false information.

A Daily Beast investigation reveals manipulating Twitter is cheap. Really cheap. A review of dodgy marketing companies, botnet owners, and underground forums shows plenty of people are willing to sell the various components needed to run your own political Twitter army for just a few hundred dollars, or sometimes less.

And once your botnet is caught, due to holes in Twitter’s security and sign-up features, it takes only a few minutes to do it all over again.

“It requires very little computing power to fire this stuff out,” Maxim Goncharov, a senior threat researcher at cybersecurity firm Trend Micro who has studied the trade of social media bots, told The Daily Beast.

The crossover between companies offering services to promote brands and those selling Twitter accounts to criminals highlights a constant struggle throughout the Twitter bot industry: everything is murky.

Nice work

This will only increase with time, and eventually our creations will end up surpassing us in intelligence. Of course, there is still a long way to go, since what you are talking about here is simply bots.

But still, our AI will end up growing so much it will surpass us. Can you imagine an AI as a president of a country?

amazing thoughts

I really thought I was going to be learning how to build a twitter bot army when I clicked on this post. :D

I also recently became a target of these Twitter bots, even though I hadn't tweeted anything from this account. I was using it for information and news only, and that's why I was following all types of accounts about the Syrian issue. My account was reported by Russian or Syrian Twitter accounts just because I followed some pro-opposition accounts, and I lost my account :(