Introduction to Machine Learning: The Neural Network!

Check out my previous post for an introduction to linear models and an idea of what machine learning is actually all about:

https://steemit.com/mathematics/@jackeown/introduction-to-machine-learning-introduction-and-linear-models

Neural Network Hype!

Neural networks have been getting a lot of press lately because of accomplishments like the following (among many others that are just as exciting):

- They have defeated the world's best Go player. (Go is an ancient Chinese game which experts didn't think computers would master for another 20 years!)

- They have surpassed human-level performance at image classification on the ImageNet benchmark: https://arxiv.org/abs/1502.01852

- They have learned to play a wide range of Atari games using only the screen images as input.

History and Motivation

Neural networks (very different from the ones we have today) were first proposed by Warren McCulloch and Walter Pitts in 1943, and it's been a long, slow, and mostly fruitless journey since then. These models always held great promise, since they were based on our understanding of biological brains, which we know can do some incredible things. However, for decades they were mostly useless: we didn't have the computing power to do much with them, we had no good way to train them, and they consistently underperformed the latest machine learning techniques, such as "support vector machines" and "random forests" [I might do a post on these later ;)].

This all changed with the introduction of the "sigmoid activation function" and "backpropagation," which together allowed neural networks to be trained. Since then, there has been a lot more research into artificial neural networks and how to train them effectively. With the amount of research going into neural networks lately, I'm inclined to think we are in the midst of a new renaissance spurred on by them. Although the field is constantly evolving and new papers come out almost every day, the basics are relatively simple to describe, so here we go!

What is a Neural Network?

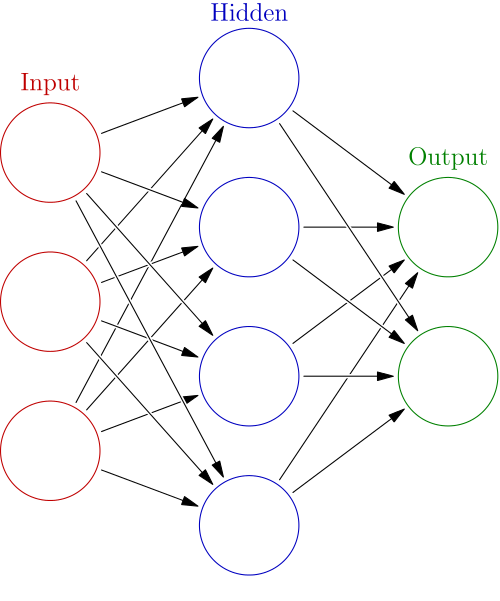

The above picture is a neural network. In graph-theory terms, it is a directed graph. Each circle is called a "neuron" (or sometimes a "node"), and each edge has a "weight" associated with it (a real number, stored to whatever precision you care about). The neurons are organized into "layers." There is always exactly one input layer and one output layer, but there can be as many "hidden" (middle) layers as you want. Most of the time, each layer is fully connected to the next one, meaning every neuron in one layer has an edge to every neuron in the next. Edges in a "feed-forward" neural network always go from left to right, but "recurrent" neural networks may have loops. (Recurrent neural networks are used for time series and other data where the input is long or sequential, like classifying the author of a book or the mood of a tweet letter by letter.) I'll be focusing on feed-forward neural networks for now, since they are easier to describe in the context of machine learning as I've described it.
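To make the graph picture concrete, here is a minimal Python sketch that lists every edge of a fully connected feed-forward network. The function name `fully_connected_edges` and the 3-4-2 layer sizes are hypothetical, chosen only for illustration. Each edge corresponds to one weight, and every edge points from one layer to the next, so there are no loops.

```python
from itertools import product


def fully_connected_edges(layer_sizes):
    """Return the directed edges of a fully connected feed-forward network.

    Each neuron is labeled (layer_index, neuron_index). Every neuron in
    layer k gets an edge to every neuron in layer k + 1, so edges only
    ever point "left to right" (from a lower layer to the next one).
    """
    edges = []
    for k in range(len(layer_sizes) - 1):
        # Connect every neuron i in layer k to every neuron j in layer k + 1.
        for i, j in product(range(layer_sizes[k]), range(layer_sizes[k + 1])):
            edges.append(((k, i), (k + 1, j)))
    return edges


# Hypothetical network: 3 inputs, one hidden layer of 4 neurons, 2 outputs.
sizes = [3, 4, 2]
edges = fully_connected_edges(sizes)
print(len(edges))  # 20 edges, i.e. 20 weights: 3*4 + 4*2
print(all(dst[0] == src[0] + 1 for src, dst in edges))  # True: only left-to-right edges
```

Counting edges this way is also a quick way to see how many weights a network has: a 3-4-2 network needs 3*4 + 4*2 = 20 of them (plus one bias for each of the 6 non-input neurons).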

In order to understand the neural network, we first need to understand the neuron individually.

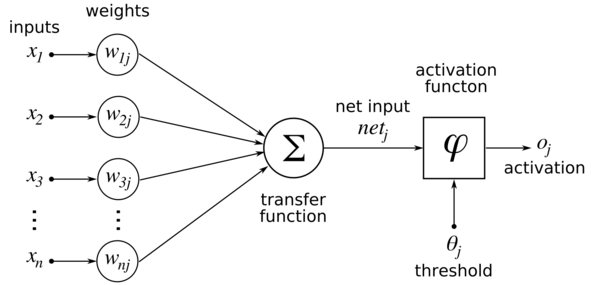

The picture above zooms in on a single neuron in the jth layer of a neural network.

It takes n inputs (X1...Xn) from the previous layer and sends out a single output (which is usually duplicated as one input for each neuron in layer j+1). The neuron's output is determined as follows:

- The inputs (Xi) are multiplied by their associated weights (Wi).

- These weighted inputs are summed.

- A bias/threshold value for this particular neuron is added to this sum.

- This new sum is run through an "activation function" to determine the neuron's output.
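Put together, the neuron's output is f(W1·X1 + W2·X2 + ... + Wn·Xn + b), where b is the bias and f is the activation function. The following is a minimal Python sketch of those four steps. It assumes the sigmoid as the activation function, and the names `neuron_output` and `sigmoid`, as well as the example numbers, are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^(-z)), written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Compute a single neuron's output from its inputs X1..Xn and weights W1..Wn."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    # Steps 1 and 2: multiply each input by its weight and sum the products.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # Step 3: add this neuron's bias/threshold value.
    z = weighted_sum + bias
    # Step 4: run the new sum through the activation function.
    return activation(z)


# Hypothetical example values.
x = [1.0, 0.5, -2.0]
w = [0.5, -1.0, 0.25]
b = 0.5
print(neuron_output(x, w, b))  # prints 0.5
```

With these example numbers, the weighted sum is 0.5 - 0.5 - 0.5 = -0.5, the bias brings it to exactly 0, and the sigmoid of 0 is 0.5. Passing a different `activation` lets you swap in another function without touching the other steps.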

Classic activation functions are monotonically increasing (they always have a positive slope) and squash their output into the range between 0 and 1. This makes sense from a "biological" perspective, where a neuron's output corresponds to firing (1) or not firing (0).

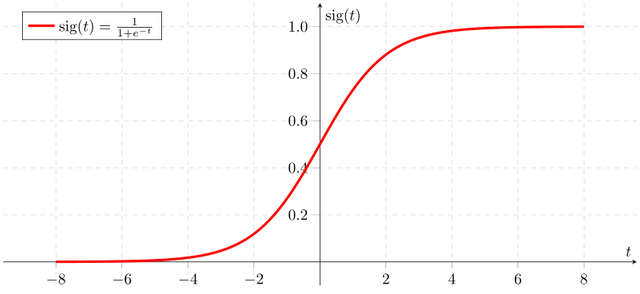

A classic activation function is the sigmoid (meaning "S-shaped"): σ(x) = 1 / (1 + e^(-x)).
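To see how the sigmoid relates to the "firing (1) or not firing (0)" picture, this hypothetical Python sketch compares it with a hard all-or-nothing step function. The sigmoid behaves like a smoothed-out step: far below 0 it is close to 0, far above 0 it is close to 1, and it passes through exactly 0.5 at 0. The two-branch form of `sigmoid` is just one common way to keep `math.exp` from overflowing; it computes the same function as the one-line formula in its docstring.

```python
import math


def step(z):
    """All-or-nothing 'biological' firing: 1 if z >= 0, else 0."""
    return 1.0 if z >= 0 else 0.0


def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^(-z)): a smooth, S-shaped version of step()."""
    # Two branches so math.exp never receives a positive argument and cannot overflow.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


for z in [-6, -2, 0, 2, 6]:
    print(f"z={z:+d}  step={step(z):.0f}  sigmoid={sigmoid(z):.3f}")
```

Loosely speaking, that smoothness is what makes the sigmoid friendlier to gradient-based training than a hard step, whose slope is zero everywhere except at the jump.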

Once you understand how a single neuron works, you should be able to see how a forward pass of data through a network like this works. Each neuron does its job (determined by its weights and bias) and passes its output on to the next layer until we arrive at the final layer. Normally we start by initializing all weights and biases to small random numbers. (At this point the neural network is useless.) Then we train it using data, slowly shifting the weights and biases toward values that make the network as a whole produce helpful outputs.
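Here is a minimal, self-contained Python sketch of that process: small random initialization followed by a forward pass, layer by layer. It assumes the sigmoid activation for every neuron, and the layer sizes, the `scale` of 0.1 for the random initial values, and the function names `init_network` and `forward` are all hypothetical choices for illustration. Training is deliberately left out.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic sigmoid 1 / (1 + e^(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def init_network(layer_sizes, scale=0.1, seed=0):
    """Create small random weights and biases for each pair of adjacent layers.

    weights[k][j][i] is the weight on the edge from neuron i in layer k
    to neuron j in layer k + 1; biases[k][j] is the bias of that neuron j.
    """
    rng = random.Random(seed)
    weights, biases = [], []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights.append([[rng.uniform(-scale, scale) for _ in range(n_in)]
                        for _ in range(n_out)])
        biases.append([rng.uniform(-scale, scale) for _ in range(n_out)])
    return weights, biases


def forward(inputs, weights, biases):
    """Pass the inputs through the network one layer at a time."""
    activations = list(inputs)
    for layer_w, layer_b in zip(weights, biases):
        # Every neuron in this layer sees every output of the previous layer.
        activations = [
            sigmoid(sum(w * a for w, a in zip(neuron_w, activations)) + b)
            for neuron_w, b in zip(layer_w, layer_b)
        ]
    return activations


# Hypothetical 3-4-2 network (3 inputs, 4 hidden neurons, 2 outputs).
weights, biases = init_network([3, 4, 2])
print(forward([1.0, 0.5, -2.0], weights, biases))
```

Because the weights start out small and random, the untrained network's outputs all land close to 0.5 whatever the input; training is what moves the weights and biases toward values that produce useful outputs.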

That's pretty much all there is to it: this is a feed-forward artificial neural network (ANN).

[To keep this post relatively short, I've left out any inkling of how these get trained, but I'll get to it... ;)]

To see these in action, check out this simulator:

http://playground.tensorflow.org

Very interesting. Thanks

Dude...in this world of bots on Steemit, you have to leave more detailed comments that would be hard to automate...otherwise we all just assume you're a bot...So if you're not a bot, let me know and I'll unflag you...

Hey. What did I do wrong? Sorry, I'm new. Please tell me. I liked reading your post. I wish there were more content on machine learning topics. I took some courses at university, and it's always interesting for me to read something about it. I even upvoted you...

Sorry, dude. Lately Steemit has been filled with accounts that automatically comment on almost every post they come across with generic comments like "Awesome Post" or "Great content." Leaving comments like these doesn't add anything to the discussion of a topic, and at the very least, people will ignore your comments because they'll think you're a bot.

Basically, try to leave more descriptive comments that mention something inside the post, like "Are artificial neurons called perceptrons?" or "Wow! I didn't know machine learning beat the world's best Go player!" or something like that.

Sorry I flagged you. Honest mistake :)

(I have removed the flag and upvoted your comment)

I hope you continue to enjoy my machine learning content. If you have any specific questions about it, feel free to ask!

Thanks for explaining. I've just seen you posted another ML topic. It's already bookmarked for later reading. :)

Awesome that you got this up so fast with such high quality; it's going to take a bit to digest all of this info. Fantastic job with the article.

Thanks! And thanks for the @randowhale!

I'll give you better feedback once I manage to get through it all... for now just a job well done :)