Autoencoders

Sorry I haven't posted in a long time, but I've been working on a model for the Zillow Kaggle competition. (Kaggle is a website that hosts datasets and competitions with prizes!) Autoencoders are basically feedforward neural networks that are trained to reproduce their own input (the identity function) and that have small hidden layers... Here's a post explaining what I know, plus a question for brownie points!

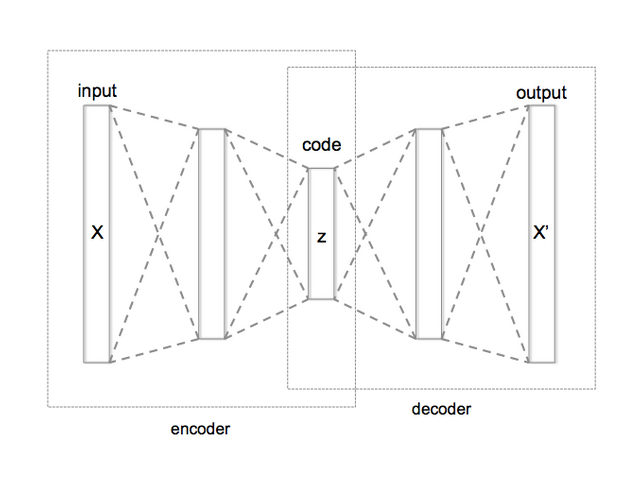

So anyway...look at this picture of an autoencoder!

Autoencoders are simply feedforward neural networks, like the ones I've described in previous posts.

HOWEVER, this one is trained on data where the output is the same as the input...in other words, you are training a neural network to learn the identity function on a set of data!
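Here's a minimal NumPy sketch of what "training on the identity" looks like in practice. It assumes one tanh hidden layer, a linear output layer, mean squared error and plain gradient descent. Those are my choices for illustration, not necessarily what you'd use for a real competition, and `train_autoencoder` and its parameters are hypothetical names. The only unusual part is that the target in the loss is `X` itself.

```python
import numpy as np

def train_autoencoder(X, n_hidden, lr=0.1, epochs=2000, seed=0):
    """Fit a one-hidden-layer autoencoder by gradient descent, using X as both input and target."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    W1 = rng.normal(scale=0.1, size=(d, n_hidden))  # encoder weights (input -> hidden)
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(scale=0.1, size=(n_hidden, d))  # decoder weights (hidden -> output)
    b2 = np.zeros(d)
    losses = []
    for _ in range(epochs):
        # Forward pass: tanh hidden layer, linear output layer
        H = np.tanh(X @ W1 + b1)
        X_hat = H @ W2 + b2
        # The "label" for each example is the example itself
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))
        # Backpropagate the mean squared reconstruction error
        g_out = 2.0 * err / err.size
        g_hid = (g_out @ W2.T) * (1.0 - H ** 2)  # uses W2 before it is updated
        W2 -= lr * (H.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        W1 -= lr * (X.T @ g_hid)
        b1 -= lr * g_hid.sum(axis=0)
    return (W1, b1, W2, b2), losses

# Placeholder data: 200 rows, 5 features (swap in your own feature matrix)
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 5))
params, losses = train_autoencoder(X, n_hidden=2)
print(f"reconstruction loss went from {losses[0]:.3f} to {losses[-1]:.3f}")
```

Notice there are no separate labels anywhere: `X` goes in, and the network is scored on how well it gives `X` back.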

This sounds stupid and counter-intuitive at first glance...but it can help you do feature detection and/or dimensionality reduction. Here's how...

It's remarkably simple...you just give the middle layer of the network far fewer neurons than the input layer. To reproduce the input on the other side, the network has to squeeze every example through that small hidden layer, so it is forced to learn a compressed representation there! This is the key insight!
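To see why the small middle layer forces compression, here's a sketch of the simplest case: a *linear* autoencoder (no activation function). Instead of training it with gradient descent, it computes the weights directly, using the known fact that for a linear encoder and decoder with squared error, the best possible k-unit bottleneck projects the data onto its top k principal directions, which an SVD gives us. So this is really PCA standing in for a trained network. The data is made up (6 features that secretly depend on only 2 hidden factors), and `linear_autoencoder` is a hypothetical helper name.

```python
import numpy as np

def linear_autoencoder(X, k):
    """Best-possible *linear* autoencoder with a k-unit bottleneck (squared error).

    For a linear encoder/decoder, the optimum projects onto the top-k principal
    directions, so the weights come from an SVD rather than gradient descent.
    """
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are principal directions, sorted by decreasing singular value
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    W = Vt[:k].T  # (d, k): encoder weights; W.T acts as the decoder

    def encode(X):
        return (X - mean) @ W  # (n, k): the compressed code in the bottleneck

    def decode(Z):
        return Z @ W.T + mean  # back to (n, d)

    return encode, decode

# Made-up data: 6 features, but only 2 underlying degrees of freedom
rng = np.random.default_rng(0)
Z_true = rng.normal(size=(500, 2))
A = rng.normal(size=(2, 6))
X = Z_true @ A + 3.0

for k in (1, 2):
    encode, decode = linear_autoencoder(X, k)
    codes = encode(X)
    mse = np.mean((decode(codes) - X) ** 2)
    print(f"bottleneck={k}: code shape {codes.shape}, reconstruction MSE {mse:.2e}")
```

With a 2-unit bottleneck the reconstruction error should be essentially zero (floating-point noise), because 2 numbers per row really are enough to describe this data. With 1 unit, information is lost and the error is clearly larger. The `codes` array is the reduced-dimension version of the data, which is exactly the dimensionality-reduction use case. A nonlinear autoencoder can also capture curved structure that this linear version can't.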