Mathematics - Linear Algebra Combinations and Independence

Hey it's me again! Today we will continue with Linear Algebra and get into Linear Combinations and Independence. At the end we will also talk about how all this is useful for matrices and linear systems. I would suggest reading the previous post about Linear Vector Spaces first :) So, without further ado, let's get started!

Linear Combinations:

Using linear combinations we can describe linear spaces through some of the vectors they contain. Suppose v1, v2, ..., vn and w are vectors of the same vector space. We say that w is a linear combination of the vectors v1, v2, ..., vn if there are numbers k1, k2, ..., kn such that:

w = k1*v1 + k2*v2 +... + kn*vn

Example:

Suppose we have the vectors v = (2, 1) and w = (-1, -1) of the vector space R^2. Then an arbitrary vector u = (a, b) of this vector space can be written as a linear combination of v and w like this:

u = (a, b) = (a - b)v + (a - 2b)w

because u = (a, b) = k*v + l*w = k*(2, 1) + l*(-1, -1) = (2k - l, k - l) =>

a = 2k - l

b = k - l => k = b + l

-------------------------------------------------------

a = 2*(b + l) - l => 2b + l = a => l = a - 2b

k = b + a - 2b => k = a - b
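If you want to double-check such coefficients with a few lines of code, here is a minimal Python sketch. It solves the same 2x2 system with Cramer's rule instead of the substitution used above, and the function name `coefficients_2d` is just something I made up for this example.

```python
from fractions import Fraction

def coefficients_2d(v, w, u):
    """Return (k, l) with u = k*v + l*w, using Cramer's rule on the 2x2 system."""
    # Determinant of the matrix whose columns are v and w
    det = v[0] * w[1] - w[0] * v[1]
    if det == 0:
        raise ValueError("v and w are linearly dependent, no unique k and l")
    k = Fraction(u[0] * w[1] - w[0] * u[1], det)
    l = Fraction(v[0] * u[1] - u[0] * v[1], det)
    return k, l

v, w = (2, 1), (-1, -1)
a, b = 5, 3
k, l = coefficients_2d(v, w, (a, b))
print(k, l)                      # 2 -1
print(k == a - b, l == a - 2*b)  # True True
```

For (a, b) = (5, 3) this gives k = 2 and l = -1, which matches k = a - b and l = a - 2b.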

Linear Span:

All possible linear combinations of the vectors v1, v2, ..., vs of a linear vector space form a subspace of this linear vector space. This subspace is called the span of (or the space generated by) these vectors and is written as:

span{v1, v2, ..., vs} or S({v1, v2, ..., vs})

Example:

Think about our previous example, where we wrote an arbitrary vector u = (a, b) as a linear combination of the vectors v = (2, 1) and w = (-1, -1), all of them in the vector space R^2. Since this works for every vector of R^2, we get that R^2 = span{v, w}.

We can also say that R^2 = span{(1, 0), (0, 1)} or R^2 = span{e1, e2} where e1 = (1, 0) and e2 = (0, 1)

This can be generalized: the vector space R^n can be defined using the span of the vectors e1, e2, ..., en. So, we have that R^n = span{e1, e2, ..., en} where e1 = (1, 0, ..., 0), e2 = (0, 1, ..., 0), ..., en = (0, 0, ..., 1). All this is true, because any vector u = (x1, x2, ..., xn) can be written as u = x1*e1 + x2*e2 + ... + xn*en.
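A tiny Python sketch of this idea, with the helper names `standard_basis` and `linear_combination` chosen only for illustration: the coordinates of u are exactly the coefficients we need in front of e1, e2, ..., en.

```python
def standard_basis(n):
    """Return e1, ..., en of R^n as tuples."""
    return [tuple(1 if i == j else 0 for j in range(n)) for i in range(n)]

def linear_combination(coeffs, vectors):
    """Compute coeffs[0]*vectors[0] + ... + coeffs[-1]*vectors[-1]."""
    size = len(vectors[0])
    return tuple(sum(c * vec[j] for c, vec in zip(coeffs, vectors))
                 for j in range(size))

u = (3, -1, 4)
e = standard_basis(3)
print(e)                               # [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
print(linear_combination(u, e) == u)   # True
```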

So, after this example and a little theory you should now know that a vector space can be generated by many different sets of vectors. So, R^2 = span{v, w} = span{e1, e2}. We will later on get into which one is "better".

Some other things that apply to the span are:

- If we have a linear vector space V and V = span{v1, v2, ..., vs}, then for any other vector v(s+1) of V we can also say that V = span{v1, v2, ..., vs, v(s+1)}, because adding a vector that is already in the span doesn't change the span

- If A is an mxn matrix then the range R(A) of this matrix is the span of the columns A1, A2, ..., An of A and so R(A) = span{A1, A2, ..., An}

The first one is self-explanatory, but let's get into an example for the matrix one so that you get a smooth transition into linear independence.

Example:

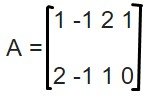

Suppose a matrix

Then R(A) = span{ (1, 2), (-1, -1), (2, 1), (1, 0)}, where each of the vectors is representing a column of A.

So each vector w of R(A) is a linear combination of these vectors:

w = c1*(1, 2) + c2*(-1, -1) + c3*(2, 1) + c4*(1, 0), where c1, c2, c3 and c4 are real numbers

The question is: Are some of those vectors maybe unnecessary?

Well, if we try it out we will see that the vectors (2, 1) and (1, 0) can be written as linear combinations of the vectors (1, 2) and (-1, -1). This means that the four vectors together are linearly dependent, as we will see later on.

So, if k, l are 2 real numbers then:

(2, 1) = k*(1, 2) + l*(-1, -1) = (k - l, 2k - l)

If you solve the linear system of 2 = k - l and 1 = 2k - l you will end up with k = -1, l = -3.

(1, 0) = k*(1, 2) + l*(-1, -1) = (k - l, 2k - l)

If you solve the linear system of 1 = k-l and 0 = 2k - l you will end up with k = -1, l = -2.

So, we proved that these 2 vectors are not needed for the span, because they depend linearly on the other two.

We can write the span again by simply using the vectors (1, 2) and (-1, -1), because these 2 are linearly independent. So, R(A) = span{(1, 2), (-1, -1)} and an arbitrary vector of R(A) is w = a*(1, 2) + b*(-1, -1) where a, b are reals.

But, if you check it out again you will see that we can also use the other vectors for the span and so:

R(A) = span{(-1, -1), (2, 1)} = span{(2, 1), (1, 0)} = span{(1, 2), (1, 0)}.

So we again end up with many different sets of vectors that can generate R(A), the same way as many different sets of vectors could generate a subspace of a vector space.
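Here is a small Python sketch that re-checks the numbers of this example. It assumes the columns of A are the four vectors listed above, and it uses the fact that two vectors of R^2 generate all of R^2 exactly when their 2x2 determinant is non-zero. The helper names `det2` and `combine` are made up for this sketch.

```python
from itertools import combinations

columns = [(1, 2), (-1, -1), (2, 1), (1, 0)]

def det2(p, q):
    """Determinant of the 2x2 matrix with columns p and q."""
    return p[0] * q[1] - q[0] * p[1]

def combine(k, p, l, q):
    """Compute the linear combination k*p + l*q."""
    return (k * p[0] + l * q[0], k * p[1] + l * q[1])

# The coefficients found above really reproduce (2, 1) and (1, 0)
print(combine(-1, (1, 2), -3, (-1, -1)))  # (2, 1)
print(combine(-1, (1, 2), -2, (-1, -1)))  # (1, 0)

# Every pair of columns with a non-zero determinant spans R^2 = R(A)
spanning_pairs = [(p, q) for p, q in combinations(columns, 2) if det2(p, q) != 0]
print(len(spanning_pairs))  # 6
```

All 6 possible pairs of columns have a non-zero determinant, so any two of the four columns are enough to generate R(A).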

Linear Independence:

Let's say v1, v2, ..., vs are vectors of a vector space V. When the equation c1*v1 + c2*v2 + ... + cs*vs = 0 can only be satisfied with c1 = c2 = ... = cs = 0, then v1, v2, ..., vs are called linearly independent. If the equation can also be satisfied with at least one of the ci being non-zero, then those vectors are linearly dependent.

Example:

Suppose the vectors e1 = (1, 0, 0), e2 = (0, 1, 0) and e3 = (0, 0, 1) of vector space R^3.

c1*e1 + c2*e2 + c3*e3 = 0 => (c1, 0, 0) + (0, c2, 0) + (0, 0, c3) = (0, 0, 0)

It's obvious that c1 = c2 = c3 = 0 and so e1, e2 and e3 are linearly independent.

We can prove the same for e1, e2, ..., en of vector space R^n.

If the equation could also be satisfied with some non-zero ci, then we would say that the vectors are linearly dependent!
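For more than a handful of vectors you don't want to do this by hand. A possible way to test independence in Python is sketched below. It uses Gaussian elimination to count pivots (this count is the rank that we will meet later in this post), and the vectors are independent exactly when no row gets wiped out. The function names are my own choice, not some library API.

```python
from fractions import Fraction

def rank(rows):
    """Number of pivots after Gaussian elimination (exact fractions, no rounding)."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0  # number of pivots found so far
    for col in range(len(m[0])):
        # look for a row at or below position r with a non-zero entry in this column
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r

def is_linearly_independent(vectors):
    """True when c1*v1 + ... + cs*vs = 0 forces c1 = ... = cs = 0."""
    return rank(vectors) == len(vectors)

print(is_linearly_independent([(1, 0, 0), (0, 1, 0), (0, 0, 1)]))  # True
print(is_linearly_independent([(1, 2), (-1, -1), (2, 1)]))         # False
```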

Theorems:

- Each subset of a linearly independent set of vectors is also a linearly independent set

- Each superset of a linearly dependent set of vectors is also a linearly dependent set

- A set of two or more vectors is linearly dependent exactly when (at least) one of the vectors is a linear combination of the others

- When v1, v2, ... vs generate the vector space V ( V = span{v1, v2, ..., vs}) and u1, u2, ... ut are linearly independent vectors of V then t<=s

Basis of a Vector Space:

A subset B = {v1, v2, ..., vs} of a vector space V is called a basis of V when the vectors v1, v2, ..., vs are linearly independent and also V = span{v1, v2, ..., vs}, which means that v1, v2, ..., vs generate this vector space. We also call those vectors the basis vectors.

So, the vectors e1, e2, ..., en of the vector space R^n are basis vectors, because they are linearly independent and also generate the vector space. The basis that we get using those vectors is called the standard basis.

Something that will get us into the dimension of a vector space is:

When {v1, v2, ..., vs} and {u1, u2, ..., ut} are both bases of V then s = t.

Dimension of a Vector Space:

The number of vectors in a basis of a vector space V is called the dimension of this vector space and is written as dimV. When dimV = n < ∞ we say that this space is finite-dimensional, else this space is infinite-dimensional.

So, we can see that:

- All bases of a vector space V have the same number of vectors, equal to the dimension

- The maximum number of linearly independent vectors of a vector space V is equal to its dimension

- The subspace {0} has no basis made of actual vectors, because the only element 0 it contains is not linearly independent (by convention its basis is the empty set). That's why we say that this space has a dimension of 0 (dim{0} = 0)

- When V = span{v1, v2, ..., vm} then there is a subset of {v1, v2, ..., vm} that is a basis of V

- Each finite-dimensional space has at least one basis

So, when we know the dimension we can also tell whether a given set of n vectors is a basis of V or not. Suppose dimV = n and the vectors w1, w2, ... wn of V are linearly independent, then this set also generates the space V ( V = span{w1, w2, ..., wn} ). In the same way, if we know that dimV = n and the n vectors generate the space V, we know that these vectors are linearly independent.
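The point that every generating set contains a basis can be turned into a small procedure: go through the vectors one by one and keep only those that are not a linear combination of the ones kept so far. Below is a minimal Python sketch of that greedy idea. The names `reduce_against` and `extract_basis` are hypothetical, and other orders of the input can give other (equally valid) bases.

```python
from fractions import Fraction

def reduce_against(vector, echelon):
    """Subtract multiples of the stored rows until all their pivot entries are 0."""
    v = [Fraction(x) for x in vector]
    for pivot_col, row in echelon:
        if v[pivot_col] != 0:
            factor = v[pivot_col] / row[pivot_col]
            v = [x - factor * y for x, y in zip(v, row)]
    return v

def extract_basis(vectors):
    """Keep each vector that is not a combination of the vectors kept before it."""
    echelon = []  # pairs of (pivot column, reduced row)
    basis = []
    for vec in vectors:
        rest = reduce_against(vec, echelon)
        pivot_col = next((j for j, x in enumerate(rest) if x != 0), None)
        if pivot_col is not None:  # something is left over, so vec is independent
            echelon.append((pivot_col, rest))
            basis.append(tuple(vec))
    return basis

generators = [(1, 2), (-1, -1), (2, 1), (1, 0)]
basis = extract_basis(generators)
print(basis)       # [(1, 2), (-1, -1)]
print(len(basis))  # 2, the dimension of R^2
```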

Matrices:

We can use all the stuff we learned today for matrices too.

So,

- The dimension of the range R(A) (dimension of the column space) of an mxn matrix A is called the rank of A or rank(A).

- When A, L and U are matrices and A = LU where L is invertible then rank(A) = rank(U). So, when U is the echelon or canonical (reduced echelon) form of A we can calculate rank(A) by calculating rank(U), which is just the number of non-zero rows of U.

- The maximum number of linearly independent columns is equal to the maximum number of linearly independent rows of an mxn matrix. So, rank(A) = rank(A^T), where A^T is the transpose of A.

- dimN(A) + dimR(A) = dimR^n = n, where N(A) is the nullspace of A, n is the number of columns of A and dimR(A) = rank(A). So, when dimN(A) = 0 then rank(A) = dimR(A) = n.

- When A is a square nxn matrix and rank(A) = n then det(A) != 0 and vice versa.
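To see some of these facts in numbers, here is a short Python sketch that brings a matrix into echelon form and counts the pivots. As the example matrix I assume the 2x4 matrix whose columns are (1, 2), (-1, -1), (2, 1) and (1, 0) from the span example, and the `rank` helper is written by hand just for this post.

```python
from fractions import Fraction

def rank(rows):
    """Rank = number of pivots (non-zero rows) of an echelon form of the matrix."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for col in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r

A = [[1, -1, 2, 1],
     [2, -1, 1, 0]]
A_T = [list(col) for col in zip(*A)]  # transpose of A
n = len(A[0])                         # number of columns

print(rank(A))      # 2
print(rank(A_T))    # 2, same as rank(A)
print(n - rank(A))  # 2, this is dimN(A)
```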

Homogeneous and non-homogeneous systems:

We can also find relations between homogeneous and non-homogeneous systems.

Theorem with A being an mxn matrix:

- The set of solutions of a homogeneous system AX = 0 is a subspace of R^n: when we have for example 2 solutions X1, X2, then X1 + X2 and k*X1 (k a real number) are also solutions of this system. So, any linear combination of those solutions is also a solution.

- When X1, X2 are 2 solutions of a non-homogeneous system AX = b then X1 - X2 is a solution of the corresponding homogeneous system AX = 0.

- When Xr is one particular solution of the non-homogeneous system AX = b, then every solution of AX = b can be written as X = Xr + Xo, where Xo is a solution of the corresponding homogeneous system AX = 0.

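So, AX = 0 always has at least one solution (the zero solution). When AX = b has a solution at all, it has exactly one if AX = 0 has only the zero solution, and infinitely many if AX = 0 has infinitely many solutions. AX = b can also have no solution at all, no matter what AX = 0 looks like.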
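The statements of the theorem are easy to check numerically. The Python sketch below again assumes the 2x4 matrix with columns (1, 2), (-1, -1), (2, 1), (1, 0) and picks b = (2, 1). The concrete solutions Xr, Xo1 and Xo2 are simply read off from the linear combinations we found in the span example, and `mat_vec` is a helper I wrote for this purpose.

```python
def mat_vec(A, x):
    """Multiply the matrix A (list of rows) with the vector x."""
    return tuple(sum(a * xi for a, xi in zip(row, x)) for row in A)

def add(x, y):
    return tuple(a + b for a, b in zip(x, y))

def sub(x, y):
    return tuple(a - b for a, b in zip(x, y))

A = [[1, -1, 2, 1],
     [2, -1, 1, 0]]
b = (2, 1)

Xr = (0, 0, 1, 0)    # particular solution: b is the third column of A
Xo1 = (1, 3, 1, 0)   # homogeneous solution, from (2, 1) = -1*(1, 2) - 3*(-1, -1)
Xo2 = (1, 2, 0, 1)   # homogeneous solution, from (1, 0) = -1*(1, 2) - 2*(-1, -1)

print(mat_vec(A, Xo1), mat_vec(A, Xo2))  # (0, 0) (0, 0)
print(mat_vec(A, add(Xo1, Xo2)))         # (0, 0), sums of solutions are solutions
print(mat_vec(A, add(Xr, Xo1)))          # (2, 1), Xr + Xo solves AX = b
print(mat_vec(A, sub(add(Xr, Xo1), Xr))) # (0, 0), X1 - X2 solves AX = 0
```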

So, when does AX = b have a solution?

Well, suppose an mxn matrix A, then:

- When b belongs to the range R(A) (the column space) of A, the system AX = b has one or infinitely many solutions. If b is not in R(A), the system AX = b has no solutions.

- When N(A) = {0}, so the nullspace of A contains only 0, we know that the system AX = b has either one or no solutions. When N(A) != {0} then AX = b has either no or infinitely many solutions.
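One common way to test these two conditions in practice is to compare ranks: b is in R(A) exactly when adding b as an extra column doesn't raise the rank, and N(A) = {0} exactly when rank(A) equals the number of columns. Here is a minimal Python sketch of that check. The names `rank` and `classify` and the small example matrices are my own and only serve as an illustration.

```python
from fractions import Fraction

def rank(rows):
    """Number of pivots after Gaussian elimination with exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for col in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r

def classify(A, b):
    """Say how many solutions AX = b has."""
    augmented = [list(row) + [bi] for row, bi in zip(A, b)]
    r = rank(A)
    if rank(augmented) != r:   # b is not in the column space R(A)
        return "no solution"
    if r == len(A[0]):         # N(A) = {0}
        return "one solution"
    return "infinitely many solutions"

print(classify([[2, -1], [1, -1]], [5, 3]))  # one solution
print(classify([[1, 2], [2, 4]], [3, 6]))    # infinitely many solutions
print(classify([[1, 2], [2, 4]], [3, 7]))    # no solution
```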

The following statements about a square nxn matrix A are equivalent:

- A is invertible

- The homogeneous system AX = 0 has exactly one solution (the zero solution)

- AX = b has a solution for each b in R^n

- R(A) = R^n

- N(A) = {0}

- rank(A) = n

- dimN(A) = 0

- det(A) != 0
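For a 2x2 matrix a few of these equivalences can be tried out directly. The Python sketch below takes the matrix whose columns are v = (2, 1) and w = (-1, -1) from the very first example, and uses the usual 2x2 inverse formula. The function names are invented for this post.

```python
from fractions import Fraction

def inverse_2x2(A):
    """Return the inverse of a 2x2 matrix, or None when det(A) = 0."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        return None
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]

def mat_vec(A, x):
    """Multiply the matrix A (list of rows) with the vector x."""
    return tuple(sum(a * xi for a, xi in zip(row, x)) for row in A)

A = [[2, -1],
     [1, -1]]          # det(A) = -1, so A is invertible
A_inv = inverse_2x2(A)
X = mat_vec(A_inv, (5, 3))
print(X == (2, -1))                      # True, the unique solution of AX = (5, 3)
print(mat_vec(A, X) == (5, 3))           # True
print(inverse_2x2([[1, 2], [2, 4]]))     # None, because det = 0
```

The solution (2, -1) is the same pair k = a - b, l = a - 2b that we found by hand for (a, b) = (5, 3) at the start.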

And this is actually it and I hope you enjoyed it! I know that I put a lot together today, but I had to say all that so that we are finished. Next time we will get into some examples of Linear Systems so that you see where all this stuff we talked about today is useful.

Bye!