What is machine learning?

Introduction

Machine learning is programming computers to optimize a performance criterion using example data or past experience.
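Here is a minimal Python sketch that makes the definition concrete. It assumes the performance criterion is mean squared error and that the "program" being learned is a straight line y = w*x + b. The data points are made up for illustration. Learning here just means choosing w and b from the examples so that the criterion is as small as possible, using the closed-form least-squares solution.

```python
def fit_line(xs, ys):
    """Return (w, b) minimizing the mean squared error of y ~ w*x + b (closed-form least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the intercept makes the line pass through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def mean_squared_error(w, b, xs, ys):
    """The performance criterion: average squared gap between prediction and target."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical example data: roughly y = 2x + 1 with a little noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.0, 8.8]
w, b = fit_line(xs, ys)
print(f"w={w:.2f}, b={b:.2f}, mse={mean_squared_error(w, b, xs, ys):.3f}")
```

No one wrote the rule "multiply by about 2 and add about 1" into the program. The parameters come entirely from the example data.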

We need learning in cases where we cannot directly write a computer program to solve a given problem, but need example data or experience. One case where learning is necessary is when human expertise does not exist, or when humans are unable to explain their expertise.

Consider the recognition of spoken speech, that is, converting the acoustic speech signal to ASCII text. We do this task seemingly without any difficulty, yet we are unable to explain how we do it. Different people utter the same word differently due to differences in age, gender, or accent. In machine learning, the approach is to collect a large set of sample utterances from different people and learn to map these to words.

Another case is when the problem to be solved changes over time, or depends on the particular environment. We would like to have general-purpose systems that can adapt to their circumstances, rather than explicitly writing a different program for each special circumstance.

Consider routing packets over a computer network. The path maximizing the quality of service from a source to a destination changes continuously as the network traffic changes. A learning routing program can adapt to the best path by monitoring the network traffic.
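The idea of a routing program that learns from traffic fits in a few lines of Python. This is a toy illustration under stated assumptions, not a real routing protocol. The paths "A" and "B", their simulated latencies, and the parameters alpha (how fast estimates move) and epsilon (how often to probe a non-preferred path) are all hypothetical. The router keeps a moving-average latency estimate per path, usually sends traffic on the path that currently looks fastest, and occasionally tries the other one. Published adaptive routing schemes such as Q-routing are more elaborate.

```python
import random


class AdaptiveRouter:
    """Toy learner that prefers the path with the lowest estimated latency.

    Estimates are exponential moving averages of observed delays. With
    probability epsilon a random path is tried, so that traffic changes on
    paths not currently in use are still noticed.
    """

    def __init__(self, paths, alpha=0.3, epsilon=0.1, seed=0):
        self.estimates = {p: 0.0 for p in paths}  # optimistic start: every path looks fast
        self.alpha = alpha
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.estimates))
        return min(self.estimates, key=self.estimates.get)

    def observe(self, path, latency):
        # Move the estimate a fraction alpha toward the newly observed latency.
        old = self.estimates[path]
        self.estimates[path] = old + self.alpha * (latency - old)


def simulated_latency(path, t):
    """Hypothetical network: "A" is fast until t = 200, then becomes congested."""
    if t < 200:
        return {"A": 10.0, "B": 50.0}[path]
    return {"A": 80.0, "B": 20.0}[path]


router = AdaptiveRouter(["A", "B"])
for t in range(400):
    path = router.choose()
    router.observe(path, simulated_latency(path, t))
print(router.estimates)
print("preferred path:", min(router.estimates, key=router.estimates.get))
```

In this simulation path "A" is faster for the first 200 steps and "B" afterwards. Because the estimates keep being updated from observed traffic, the preferred path should switch from "A" to "B" without anyone rewriting the program.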

Another example is an intelligent user interface that can adapt to the biometrics of its user, namely his or her accent, handwriting, working habits, and so forth.

Applications

Already, there are many successful applications of machine learning in various domains:

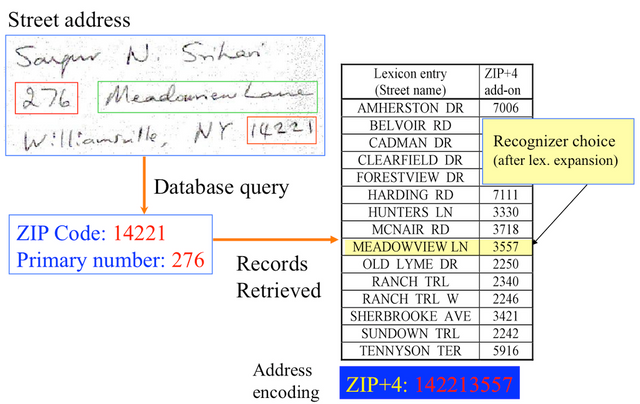

- There are commercially available systems for recognizing speech and handwriting

- Retail companies analyze their past sales data to learn their customers’ behavior to improve customer relationship management

- Financial institutions analyze past transactions to predict customers’ credit risks

- Robots learn to optimize their behavior to complete a task using minimum resources

- In bioinformatics, the huge amount of data can only be analyzed and knowledge extracted using computers.

These are only some of the applications that I will discuss throughout this blog.

We can only imagine what future applications can be realized using machine learning:

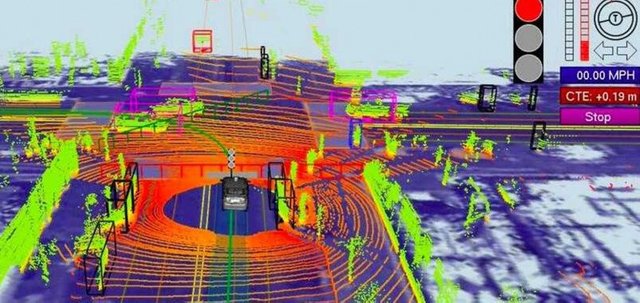

- Cars that can drive themselves under different road and weather conditions

- Phones that can translate in real time to and from a foreign language

- Autonomous robots that can navigate in a new environment, for example, on the surface of another planet

Current digital image processing possibilities

History

In the past, research in the different communities that now contribute to machine learning followed different paths with different emphases.

Machine learning has seen important developments since it first emerged as a field.

- First, application areas have grown rapidly: Internet-related technologies, bioinformatics and computational biology, natural language processing, robotics, medical diagnosis, speech and image recognition, biometrics, and finance, sometimes under the name pattern recognition and sometimes disguised as data mining.

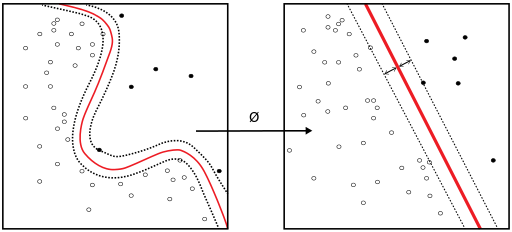

- Second, there have been supporting advances in theory. In particular, kernel functions and the kernel machines that use them allow a better representation of the problem, and their associated convex optimization framework is one step beyond multilayer perceptrons with sigmoid hidden units trained using gradient descent.

- Third, a hugely significant realization for the field has been that machine learning experiments need to be designed better. We have come a long way from using a single test set, to cross-validation methods, to paired t tests.
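Here is a small Python sketch of the cross-validation and paired t test idea, using only the standard library. It compares two hypothetical models on made-up noisy data: one always predicts the mean, the other is a least-squares line. Both models are evaluated on the same k folds, and the per-fold error differences are summarized by a paired t statistic. The interleaved fold scheme, the data, and k = 5 are assumptions for illustration. Cross-validation folds share most of their training data, so they are not independent, and the t value is best read as a rough indication rather than an exact test.

```python
import math
import random
import statistics


def k_fold_indices(n, k):
    """Interleaved k-fold split: fold i holds indices i, i + k, i + 2k, ..."""
    return [list(range(i, n, k)) for i in range(k)]


def fit_mean(xs, ys):
    """Baseline model: always predict the mean training target."""
    m = statistics.mean(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Least-squares straight line."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: w * x + (my - w * mx)


def cv_errors(fit, xs, ys, k):
    """Mean squared error of a model trained by `fit`, measured on each held-out fold."""
    errors = []
    for test_idx in k_fold_indices(len(xs), k):
        test = set(test_idx)
        train_x = [x for i, x in enumerate(xs) if i not in test]
        train_y = [y for i, y in enumerate(ys) if i not in test]
        model = fit(train_x, train_y)
        errors.append(statistics.mean([(model(xs[i]) - ys[i]) ** 2 for i in test_idx]))
    return errors


def paired_t(a, b):
    """Paired t statistic on per-fold differences a[i] - b[i] (k - 1 degrees of freedom)."""
    d = [x - y for x, y in zip(a, b)]
    return statistics.mean(d) / (statistics.stdev(d) / math.sqrt(len(d)))


# Hypothetical data: y = 2x + 1 plus Gaussian noise, with a fixed seed.
rng = random.Random(1)
xs = [float(x) for x in range(20)]
ys = [2 * x + 1 + rng.gauss(0, 1) for x in xs]

mean_errs = cv_errors(fit_mean, xs, ys, k=5)
line_errs = cv_errors(fit_line, xs, ys, k=5)
t = paired_t(mean_errs, line_errs)
print("t =", round(t, 2), "with", len(mean_errs) - 1, "degrees of freedom")
```

Pairing matters because both models are scored on exactly the same folds, so fold-to-fold difficulty cancels out in the differences. The resulting t is compared against a t table with k - 1 degrees of freedom (about 2.78 for a two-sided test at the 5% level with 4 degrees of freedom).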

Types of problems and tasks

Machine learning tasks are typically classified into three broad categories, depending on the nature of the learning "signal" or "feedback" available to a learning system. These are:

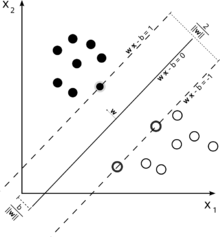

- Supervised learning: The computer is presented with example inputs and their desired outputs, given by a "teacher", and the goal is to learn a general rule that maps inputs to outputs.

- Unsupervised learning: No labels are given to the learning algorithm, leaving it on its own to find structure in its input. Unsupervised learning can be a goal in itself (discovering hidden patterns in data) or a means towards an end (feature learning).

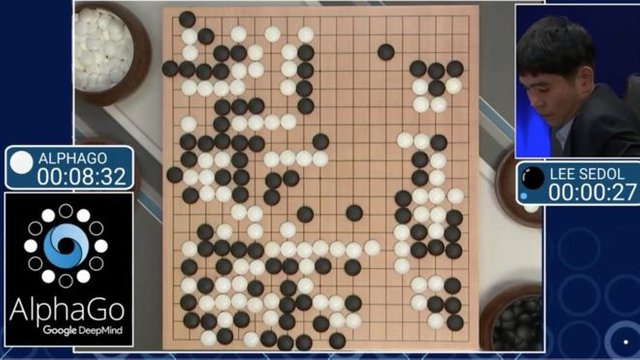

- Reinforcement learning: A computer program interacts with a dynamic environment in which it must achieve a certain goal (such as driving a vehicle), without a teacher explicitly telling it whether it has come close to its goal. Another example is learning to play a game by playing against an opponent.

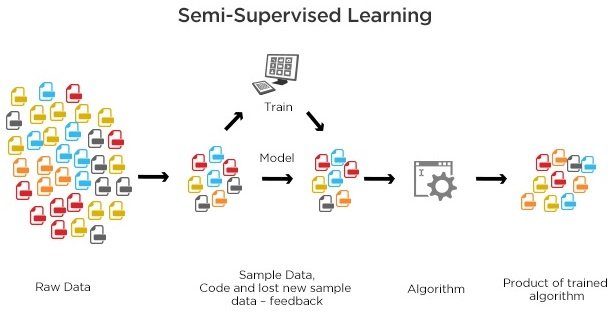
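In reinforcement learning, the program gets only a reward signal and is never told the correct output. Tabular Q-learning is one standard reinforcement learning algorithm, and it is used below as an example; the categories above do not prescribe it. The environment is a hypothetical five-cell corridor: the agent starts at cell 0, can step left or right, and receives a reward of 1 only when it reaches cell 4. The learning rate alpha, discount gamma, exploration rate epsilon, and episode count are arbitrary example values.

```python
import random

N_STATES = 5          # positions 0..4 on a corridor; position 4 is the goal
ACTIONS = (-1, +1)    # move left or move right


def step(state, action):
    """Environment: returns (next_state, reward, done). Reward only at the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, 0.0, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            # Q-learning update: move toward reward + discounted best value of the next state.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

No teacher tells the agent that "right" is the correct move. After training, the greedy policy in every non-goal cell should nonetheless be +1 (move right), learned purely from the delayed reward at the end of the corridor.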

Between supervised and unsupervised learning is semi-supervised learning, where the teacher gives an incomplete training signal: a training set with some (often many) of the target outputs missing.

Transduction is a special case of this principle where the entire set of problem instances is known at learning time, except that part of the targets are missing.
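Semi-supervised and transductive learning can be illustrated with a deliberately simple scheme: self-training with a 1-nearest-neighbour rule. It is used here for brevity and is only one of many semi-supervised methods. Given a few labeled points and several unlabeled ones, it repeatedly labels the unlabeled point closest to an already-labeled point. Because it only outputs labels for the points it was given, it is transductive in the sense described above. The 1-D points and the class names "a" and "b" are made up.

```python
def propagate_labels(points, labels):
    """Transductive 1-nearest-neighbour self-training.

    points: list of floats. labels: list of the same length holding a class
    name, or None for an unlabeled point. Repeatedly labels the unlabeled
    point closest to any labeled point, copying that neighbour's label.
    Returns a new list; the input list is not modified.
    """
    labels = list(labels)
    while None in labels:
        best = None  # (distance, unlabeled index, label to copy)
        for i, li in enumerate(labels):
            if li is not None:
                continue
            for j, lj in enumerate(labels):
                if lj is None:
                    continue
                d = abs(points[i] - points[j])
                if best is None or d < best[0]:
                    best = (d, i, lj)
        _, i, label = best
        labels[i] = label
    return labels


points = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 10.0]
labels = ["a", None, None, None, None, None, None, "b"]
print(propagate_labels(points, labels))
```

With only the two labeled points, a plain nearest-neighbour rule would assign 6.0 to "b" (distance 4 versus 6). Following the chain of unlabeled points assigns it to "a" instead, which shows how the points with missing targets still carry information.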

Categorization by the desired output of a machine-learned system

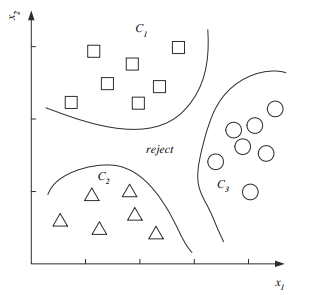

- In classification, inputs are divided into two or more classes, and the learner must produce a model that assigns unseen inputs to one or more (multi-label classification) of these classes. This is typically tackled in a supervised way. Spam filtering is an example of classification, where the inputs are email (or other) messages and the classes are "spam" and "not spam".

- In regression, also a supervised problem, the outputs are continuous rather than discrete.

- In clustering, a set of inputs is to be divided into groups. Unlike in classification, the groups are not known beforehand, making this typically an unsupervised task.

- Density estimation finds the distribution of inputs in some space.

- Dimensionality reduction simplifies inputs by mapping them into a lower-dimensional space. Topic modeling is a related problem, where a program is given a list of human language documents and is tasked to find out which documents cover similar topics.
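Running a supervised method and an unsupervised method on the same data shows the difference between classification and clustering. The sketch below uses a nearest-centroid classifier, which is given labels, and a basic k-means, which is not. The 2-D points are hypothetical feature vectors, and the labels "ham" (not spam) and "spam" echo the spam-filtering example. Initializing k-means with the first k points is a simplification; practical implementations choose starting centers more carefully.

```python
import math


def centroid(pts):
    """Coordinate-wise mean of a list of points."""
    return tuple(sum(c) / len(pts) for c in zip(*pts))


def nearest(p, centers):
    """Index of the center closest to point p (Euclidean distance)."""
    return min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))


# Classification (supervised): labels are given, so we learn one centroid per class.
def train_nearest_centroid(points, labels):
    classes = sorted(set(labels))
    centers = [centroid([p for p, l in zip(points, labels) if l == c]) for c in classes]
    return lambda p: classes[nearest(p, centers)]


# Clustering (unsupervised): no labels, so k-means has to discover the groups itself.
def k_means(points, k, iterations=10):
    centers = list(points[:k])  # naive initialisation: the first k points
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p, centers)].append(p)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [centroid(g) if g else centers[i] for i, g in enumerate(groups)]
    return [nearest(p, centers) for p in points]


points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
labels = ["ham", "ham", "ham", "spam", "spam", "spam"]

classify = train_nearest_centroid(points, labels)
print(classify((2.0, 2.0)), classify((7.0, 9.0)))

clusters = k_means(points, k=2)
print(clusters)
```

The classifier can name its classes because it was given labels. k-means only reports that each point falls into group 0 or group 1, and which number a group gets depends on the initialization.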

A different way of solving problems

It has long been believed, especially by older members of the scientific community, that for machines to be as intelligent as us, that is, for artificial intelligence to be a reality, our current knowledge in general, or computer science in particular, is not sufficient.

Many people are of the opinion that we need a new technology, a new type of material, a new type of computational mechanism, or a new programming methodology, and that until then we can only “simulate” some aspects of human intelligence, and only in a limited way, but can never fully attain it.

I believe that we will soon prove them wrong.

We saw this first in chess and Go, and now we are seeing it in a whole variety of domains.

Given enough memory and computation power, we can realize tasks with relatively simple algorithms.

The trick here is learning, either learning from example data or learning from trial and error using reinforcement learning.

I believe that this will continue for many domains in artificial intelligence, and the key is learning.

We do not need to come up with new algorithms if machines can learn by themselves, assuming that we can provide them with enough data (not necessarily labeled) and computing power.

Summary

This is an introductory post, intended for steemers curious about machine learning, as well as others working in the industry who are interested in the application of these methods.

Machine learning is certainly an exciting field to study!

Later posts will draw on concepts from computer programming, probability, calculus, and linear algebra. In some cases, I will provide pseudocode for algorithms to give a more vivid picture of what we are talking about.

I enjoyed writing this post.

I hope you enjoy reading it, so that I can continue reporting on machine learning and its applications in modern industry. I am also grateful to anyone who provides feedback of any kind.

As a confessed botter, I have a lot of incentive to run efficient bots. A casual reader may think that intelligent bots are more efficient than dumb bots, but in practice that's not the case. First, I believe that humans and bots are optimized for very different tasks. Humans are intuitive and have judgement. Their decision making is fuzzy, unreliable, and subject to whim, but can integrate much more diverse information than computers can. Computers can only make optimal decisions within a well-defined parameter space, but they can do so much faster and more reliably than humans. Importantly, computers will execute decisions without second-guessing themselves.

For example, a human can judge from visual cues when a dog is upset and likely to attack. A computer can judge when a dog is likely to attack based on quantitative factors like blood sugar level or age. A computer will reliably choose between fight and flight, but humans unfortunately sometimes become paralyzed.

I have dabbled in machine learning and know that getting a machine to decide anything boils down to statistical inference, which works well with defined parameters, but becomes unreliable when parameters are not easily quantifiable or have complex interactions. This is a liability for computer decision making because the process can easily overweight irrelevant parameters and result in decisions that are obviously stupid to a human.

For these reasons I use dumb bots that act based on trivial measurements like time. I leave the complex decision making to myself and use bots for simple decisions. Mostly their value to me is speed and objectivity. What I mean is that if I put the ultimate decision of execution in the hands of the bot, then I don't have to worry about my own last-minute irrationality undercutting an otherwise optimized decision-making strategy.

That's the current state of affairs. It will change as software evolves.

Btw, sometimes AI is much better when things have complex interactions, because of patterns that we do not perceive and therefore cannot anticipate. This extends to forecasting sporting events and market movements by feeding in all sorts of seemingly trivial data sets, which can improve prediction accuracy.

The best reason to keep building if-then-else bots is that it would take some time to learn to do things with AI variants.

Of course for simple tasks, AI-level sophistication may not be required at all.

your idea is very intelligent @machinelearning

vote done

Hi @steemed, you may be interested in a new subset of AI I'm researching, Swarm AI. I think it's promising for machine intelligence because it goes beyond merely using statistical inference and neural nets. But what's interesting is that a Swarm AI network of humans could evolve into a network of bots that work on trivial tasks organized by a self-optimizing stigmergic algorithm, from which a general-purpose intelligence may emerge.

https://steemit.com/steemit/@miles2045/steemit-as-a-stigmergic-artificial-general-intelligence-with-human-values

How about bots? Why isn't a bot like wang blocked?

ooo0000OOO!!

Can we have a machine learning class? Please, please, can we?

That would rock, wouldn't it!?

Amazing and interesting post! keep it up! will be following ur blog!

Thank you. I expect nothing less from your charts!

very well written and explained!

Great post! Hope you go more in depth into some specific approaches or methods so we can learn more! Also, looking forward to the pseudocode. It helps.

Of course. This was just a simple overview.

Thank you for your feedback.

Great article! While I agree that machines are capable of outperforming humans at certain tasks, I don't expect they will ever be as advanced as human intelligence. The computer that won at Go, which you mentioned, would not be able to perform a trivial task for humans, such as route planning, since it was not explicitly designed for that purpose.

Thus, I don't think we will ever see "generalist" machines that can solve the full range of problems humans solve with little to no effort, despite your suggestion that machines will be able to solve problems they weren't specifically designed to address, even through the aforementioned cleverly implemented reinforcement learning.

Nevertheless, I am reminded of Conway's Game of Life, where, through simple rules a computer program is able to demonstrate complex behavior. It is a fascinating topic, but limited in my opinion in how far algorithms can "evolve" given their strict adherence to the underlying code they are based on.
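Here is a minimal Python sketch of one generation of Conway's Game of Life, which shows how little code its "simple rules" need. Representing the board as a set of live (row, col) cells on an unbounded grid is an assumption made for brevity; the comment above does not specify it.

```python
from collections import Counter


def life_step(live):
    """One generation of Conway's Game of Life on an unbounded grid.

    live: set of (row, col) cells that are alive. A cell is alive in the next
    generation if it has exactly 3 live neighbours, or if it is alive now and
    has exactly 2 live neighbours.
    """
    # Count, for every cell adjacent to a live cell, how many live neighbours it has.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A "blinker": three cells in a row that flip between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
print(sorted(life_step(blinker)))

# A "glider": five cells that travel across the board.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
g = glider
for _ in range(4):
    g = life_step(g)
print(sorted(g))
```

The rules never mention movement. Even so, the glider reappears one cell further down and to the right every four generations.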

The question is not if, but when machines will be capable of simulating human thinking. I am sure that within 30-50 years we will see incredible things being done by machines. Robotization will replace 70% of the work humans are doing at the moment.

Future generations will have an incredibly convenient life, which today only rich people with maids etc. enjoy. This will be the general standard. Cars will drive themselves, so everybody will be transported like a celebrity and there will be no need to drive yourself! Robots will clean our houses and buy everything (food etc.).

So what will be left for us humans to do, if almost every aspect of life will be done by machines for us?

I see the following areas for our personal life:

Sport/ Fitness

Art / Philosophy

Reading/ Writing

Hobbies (fishing, gardening, etc.)

Education

And here is where our work will be heading:

With the help of machines, we will tackle problems like the ozone layer and oil running out, improve solar technology, and save the world from collapsing, as 13 billion humans will be living on the Earth by then. We also have to prevent the extinction of animals through climate change and, for example, overfishing.

By then the singularity might have occurred, and machines will help us improve space travel to the point where we will be able to colonize space and travel distances we can't imagine right now.

and of course everybody will be paying his bills with STEEM and STEEM $ ;)

This is just what I can come up with, being a normal human with very limited brain resources and imagination. I can't think further, but we obviously have bright people here, so I would love to hear how you imagine the future!!!

Never say never! We are getting better. Maybe we just need more samples for learning.

The problem is how to collect all the relevant data to train an algorithm.

They are training on a small subset of all the samples the algorithm will encounter while finding solutions in real-life situations.

My argument isn't about the amount of training data, which, for a machine based on deep learning, like the one that plays Go, can be prohibitively large. It's more about the flexibility of (good) machine learning methods, which is very low. Again, referring to the specifics of deep learning, it requires a ton of trial and error to determine the parameters of the model. This work requires manual adjustment by expert technicians.

Anyway, my argument was just that generalist machine learning computers, capable of solving the sorts of problems humans solve every day without specific setup and method implementation for each category of task, are a long way off.

I think we will see machines in the not-so-distant future that are quite capable of doing anything a human can do. They will operate 100x quicker than a human and have instant access to all the world's data, allowing them to learn at an incredible pace.

How is Machine Learning related to Artificial Intelligence?

Machine learning is a subset of AI.

That is, all machine learning counts as AI, but not all AI counts as machine learning.

Makes sense. Thanks for finally clearing that up for me!

I only just learned this here :'v ... thank you @machinelearning

If anyone is interested in setting up a deep neural network themselves, there's a great open source software stack. It includes ASR (automatic speech recognition) tools, image matching tools, and text analysis (grammar checks, sentiment analysis, keyword finder, similarity checker) to process incoming requests. Further, it has a knowledge base attached, plus several tools to manage your data backend on which the queries are finally processed.

There are Docker containers and VirtualBox images available, so you're set up very quickly with your own whole machine learning flow.

http://lucida.ai/

Lucida: Infrastructure to Study Emerging Intelligent Web Services

As Intelligent Personal Assistants (IPA) such as Apple Siri, Google Now, Microsoft Cortana, and Amazon Echo continue to gain traction, webservice companies are now providing image, speech, and natural language processing web services as the core applications in their datacenters. These emerging applications require machine learning and are known to be significantly more compute intensive than traditional cloud based web services, giving rise to a number of questions surrounding the designs of server and datacenter architectures for handling this volume of computation.

btw...at least one paragraph is copied from here: https://www.ibm.com/developerworks/library/l-machine-learning-deep-learning-trs/index.html

I'd like a machine learning tutorial :)

indeed that would be a great idea!