Self-Driving Cars Make Moral Decisions As Well As Humans

Our sense of morality is one of humanity's defining traits. However, it looks like we might soon not be the only ones possessing it. We might face some competition, namely in the form of driverless cars.

What's new?

A new study has looked at human behavior and moral assessments to see how they could be applied to computers.

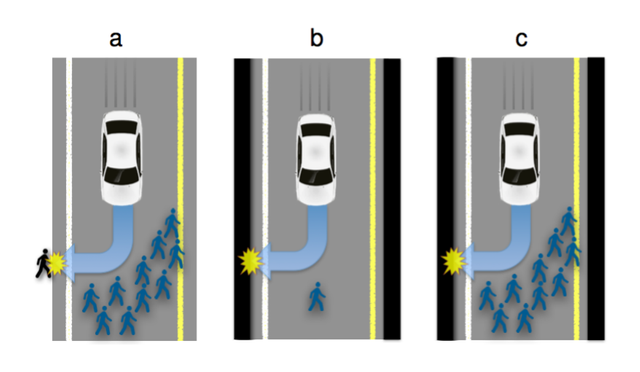

Just like a human in a car, a driverless car could be faced with split-second moral decisions.

Picture this: A child runs into the road. The car has to work out whether to hit the child, veer off and potentially kill other passersby, or hit a wall and potentially kill the driver.

It was previously assumed that this kind of human morality could never be described in the language of a computer because it is context-dependent.

The study found quite the opposite. Human behavior in dilemma situations can be modeled rather simply, using a value of life that the participant attributes to every human, animal, or inanimate object.

Scientists worked this out by asking participants to drive a car through a typical suburban neighborhood on a foggy day in an immersive virtual-reality simulation. During the simulation, they were faced with unavoidable crashes involving inanimate objects, animals, and people. Their task was to decide which of these the car would crash into.

The results were then fed into statistical models, which yielded rules describing how and why a human reached a moral decision. Remarkably, patterns emerged.
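To make the idea of a value-of-life model more concrete, here is a minimal sketch in Python. It is not the study's actual model or data: the scores in `VALUE_OF_LIFE` and the function names are made up for illustration, and the logistic form is an assumption about how such a model could look. Each possible obstacle gets a single number, and the chance of sparing one obstacle over another depends only on the difference between their numbers.

```python
import math

# Hypothetical value-of-life scores. These numbers are illustrative
# assumptions only and are NOT results from the study.
VALUE_OF_LIFE = {
    "trash can": 0.0,
    "dog": 1.5,
    "adult": 4.0,
    "child": 5.0,
}


def probability_of_sparing(first: str, second: str) -> float:
    """Modeled probability that a driver spares `first` and hits `second`.

    Assumes a logistic function of the difference in value-of-life scores.
    """
    difference = VALUE_OF_LIFE[first] - VALUE_OF_LIFE[second]
    return 1.0 / (1.0 + math.exp(-difference))


def choose_target(first: str, second: str) -> str:
    """Return the obstacle the model would most likely crash into."""
    if probability_of_sparing(first, second) >= 0.5:
        return second  # `first` is more likely to be spared
    return first


if __name__ == "__main__":
    for pair in [("child", "dog"), ("dog", "trash can"), ("adult", "child")]:
        p = probability_of_sparing(*pair)
        print(f"spare {pair[0]} over {pair[1]}: p = {p:.2f}, "
              f"model hits the {choose_target(*pair)}")
```

The point of the sketch is only that a context-dependent-looking decision can be reduced to comparing one number per obstacle. In a real model, those numbers would have to be estimated from participants' choices.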

What can this be used for?

Now that scientists have worked out the “laws” and “mechanics” in a form a computer could understand, we could, in principle, teach machines to share our morality. This could have huge implications for self-driving cars and beyond.

We need to ask whether autonomous systems should adopt moral judgments at all. If yes, should they imitate human decisions, or should they follow ethical theories, and if so, which ones? And critically, if things go wrong, who or what is at fault?

Even though we now know how human ethical decisions could be implemented in machines, we, as a society, are still left with a double dilemma. Firstly, we have to decide whether moral values should be included in guidelines for machine behavior. Secondly, if they are, we have to decide whether machines should act just like humans.

Along with these moral questions, a bunch of legal questions pop up as well. For example, who can be held liable for any harm that is caused by such "morally acting" cars?

It remains to be seen how science, industry, politics and jurisprudence handle these issues.

Did you find this interesting? Feel free to comment, upvote, resteem and follow me.

For further information:

http://journal.frontiersin.org/article/10.3389/fnbeh.2017.00122/full

http://www.iflscience.com/technology/the-fatal-moral-dilemma-posed-by-driverless-cars/

https://www.sciencedaily.com/releases/2017/07/170705123229.htm

good article....

This is something that is talked about in the boardroom and by the designers of the cars; however, it's not really something the general public thinks about, but should.

I agree. However, the influence of a general debate is limited anyway since the stakeholders decide which way this will go.

I don't quite agree with the approach here. To me an engineer tries to prevent problems. Period. Ethical decisions and other such concepts can be made obsolete if you prevent problems from happening in the first place.

How to prevent problems? A real engineer (as I see it) always goes closer and closer to the source of the problem. If a kid suddenly appears on the road, how can we detect the kid earlier? If there's a hole in the road, how can we detect it earlier? Always go for extending and improving the sensors of the car so that we detect any relevant variables very early and prevent potential problems.

Can we get to zero accidents? Not in the near future. But if we can get to ridiculously small chances of having an accident on the road, well, that's huge progress toward solving the problem.

Machines have certain advantages over people. They never get tired, their sensors can be extended, they can be made faster... there's really no limit. Let's use those advantages.