Correcting a few inaccuracies on Infowars (re: Alex Jones, David Knight, Owen Shroyer, etc.)

First, I am a regular watcher of Infowars as well as many other news sources. I will listen to any source, then research and think for myself.

For a while now I've been hearing a few incorrect things. They are not truly important, but they rub me the wrong way a little, because I was alive and involved in the fields they are talking about.

The incorrect information doesn't really hurt anything, other than teaching a lot of listeners the wrong thing.

These facts relate to the early internet and early internet technology.

David Knight is likely my favorite anchor at Infowars, but he frequently refers to the early internet as DARPANET, since it was created by the agency now known as DARPA. Most of his information about it is accurate, except that the network was called ARPANET; the D (for Defense) was never part of its name. I suspect this was because it went beyond defense and was an early cooperative effort between ARPA, other government agencies, and universities. It was used for a lot of early scholarly pursuits. That's my correction: ARPANET is the correct name. DARPANET did not exist and is an improper term for ARPANET.

This has been going on for a while, and if it were the only issue I would not have bothered writing this post.

Recently Mark Zuckerberg indicated that Facebook had detected some scraping of its site.

David Knight, Alex Jones, and Owen Shroyer have taken this statement in directions that are completely inaccurate. That is okay; it just shows me they are talking about something they are only vaguely familiar with, or they are assuming things.

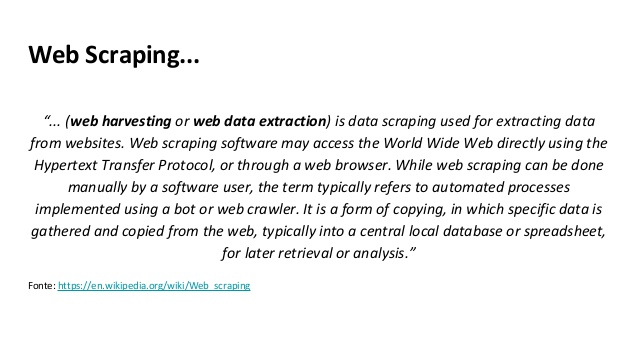

They have read the definition of scraping (or web scraping) on the show several times, but I don't think they quite understood what it means. That is what I hope to clear up in this post.

Scraping

Scraping is basically a technique for taking data from a source that follows some standard format and parsing it with rules to pick out the data you are looking for.

An example would be email addresses. You could write a program that reads through text, and anywhere it encounters the @ symbol, checks whether the surrounding text fits the format of an email address. If it does, you add it to a list, and by SCRAPING large amounts of data this way you can build a big list of email addresses. You can do this using sources that never intended their data to be used this way.
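To make the email example concrete, here is a minimal Python sketch. Instead of scanning for the @ symbol character by character, it uses a regular expression that matches the common name@domain.tld shape. The pattern and the name `scrape_emails` are illustrative assumptions; real email address syntax allows more than this simple pattern accepts.

```python
import re

# A deliberately simple pattern for the common name@domain.tld shape.
# Real address syntax (RFC 5322) is far more permissive; this is an illustrative assumption.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def scrape_emails(text: str) -> list[str]:
    """Return every email-like string found in `text`, in order of first appearance, without duplicates."""
    # dict.fromkeys keeps insertion order while dropping repeats.
    return list(dict.fromkeys(m.group(0) for m in EMAIL_PATTERN.finditer(text)))


if __name__ == "__main__":
    page = "Contact alice@example.com or bob.smith@example.org. Again: alice@example.com"
    print(scrape_emails(page))  # ['alice@example.com', 'bob.smith@example.org']
```

Point the same function at thousands of pages and you have the "big list of email addresses" described above.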

So let's talk about what Mark Zuckerberg was indicating.

Web pages use HTML (HyperText Markup Language) to define how a web browser should display them. Browsers fetch those pages over HTTP, which uses two common ports (unless intentionally changed): 80 and 443. Port 80 is used for http:// addresses. Port 443 is used for https:// addresses, which encrypt the data using SSL (Secure Sockets Layer, today replaced by its successor TLS). You will want to be using https anywhere you are sending financial or private information that you don't want someone to potentially sniff (monitor the packets flowing through a point on the network and extract information from them).
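As a small illustration of the port rule, this sketch uses Python's standard `urllib.parse.urlsplit` to work out which port a client would connect to: 80 for http, 443 for https, unless the URL names a different port explicitly. The helper name `port_for` is hypothetical.

```python
from urllib.parse import urlsplit

# Well-known default ports for the two web schemes.
DEFAULT_PORTS = {"http": 80, "https": 443}


def port_for(url: str) -> int:
    """Return the TCP port a client would connect to for `url`.

    An explicit port in the URL (e.g. http://host:8080/) overrides the default.
    Raises KeyError for schemes other than http/https.
    """
    parts = urlsplit(url)
    if parts.port is not None:
        return parts.port
    return DEFAULT_PORTS[parts.scheme]


if __name__ == "__main__":
    for url in ("http://example.com/", "https://example.com/login", "http://example.com:8080/"):
        print(url, "->", port_for(url))
```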

Someone who writes a scraping program, or a spider (a type of bot that uses scraping), will teach it to look for web addresses and follow them to other websites so it can scrape those sites as well. This is typically how search engines like Google learn what addresses and content are out on the internet: their spiders crawl links, read the text, and build databases of keywords to search against.
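The link-following part of a spider can be sketched with Python's built-in `html.parser` module. This is a minimal example, not how Google or any particular crawler is implemented; `LinkCollector` and `extract_links` are hypothetical names, and the sketch only looks at `href` attributes on `<a>` tags.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect absolute URLs from the href attribute of every <a> tag."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links such as "/about" against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html: str, base_url: str) -> list[str]:
    """Return the links a spider would queue up to visit next."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.links


if __name__ == "__main__":
    page = '<p><a href="/about">About</a> <a href="https://other.example/">Elsewhere</a></p>'
    print(extract_links(page, "https://example.com/index.html"))
```

A real spider would fetch each returned URL, run the same extraction on it, and index the text it finds along the way.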

If you were to write a scraping program specifically to target Facebook, you could connect over http or https, pull a profile, extract all the public information on that profile, follow the links to all of that person's friends, and repeat the process.
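The "pull a profile, follow the friend links, repeat" loop is a breadth-first crawl. The sketch below keeps the crawl logic separate from the downloading: `fetch` is a hypothetical callable that returns a profile's public fields and its friend links, so the example runs offline against made-up data. It does not reflect Facebook's actual page structure.

```python
from collections import deque
from typing import Callable

# fetch(url) -> (public_fields, friend_urls). In a real scraper this would download
# and parse the page; here it is a hypothetical stand-in so the crawl runs offline.
Fetcher = Callable[[str], tuple[dict, list[str]]]


def crawl(start_url: str, fetch: Fetcher, max_pages: int = 100) -> dict[str, dict]:
    """Breadth-first crawl: scrape a profile, queue its friends, repeat."""
    results: dict[str, dict] = {}
    queue = deque([start_url])
    seen = {start_url}  # friendships are mutual, so avoid revisiting profiles
    while queue and len(results) < max_pages:
        url = queue.popleft()
        fields, friends = fetch(url)
        results[url] = fields
        for friend in friends:
            if friend not in seen:
                seen.add(friend)
                queue.append(friend)
    return results


if __name__ == "__main__":
    # A made-up four-profile "site" used in place of real pages.
    fake_site = {
        "/alice": ({"name": "Alice"}, ["/bob", "/carol"]),
        "/bob": ({"name": "Bob"}, ["/alice", "/dave"]),
        "/carol": ({"name": "Carol"}, []),
        "/dave": ({"name": "Dave"}, ["/bob"]),
    }
    profiles = crawl("/alice", fake_site.__getitem__)
    print(list(profiles))  # ['/alice', '/bob', '/carol', '/dave']
```

The `seen` set and the `max_pages` cap keep the crawl from looping forever over mutual friendships.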

The thing is, you can do this without being detected. A web browser is just a program that reaches out to a website over port 80, 443, or some other port and says "Show me this page."

The web server says "Sure, here is what it should look like in HTML," and the browser displays it on the screen.
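To show how little there is to "Show me this page," here is a sketch of the raw HTTP/1.1 request a browser sends for a plain http:// page. The function names and the `User-Agent` value are assumptions for illustration. The point is that any program can send exactly these bytes, so from the request alone a server cannot tell a browser from a scraper. (For https://, the same request travels inside an encrypted TLS connection.)

```python
import socket


def build_get_request(host: str, path: str = "/", user_agent: str = "ExampleBrowser/1.0") -> bytes:
    """Build the raw HTTP/1.1 request text: in effect, 'Show me this page'."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        f"User-Agent: {user_agent}",  # whatever the client claims to be; nothing verifies it
        "Accept: text/html",
        "Connection: close",          # ask the server to close the connection when done
        "",                           # a blank line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def fetch_raw(host: str, path: str = "/", port: int = 80, timeout: float = 10.0) -> bytes:
    """Send the request over plain HTTP and return the raw response (headers plus HTML)."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_get_request(host, path))
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:  # server closed the connection
                break
            chunks.append(chunk)
    return b"".join(chunks)


if __name__ == "__main__":
    print(build_get_request("example.com", "/profile").decode("ascii"))
```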

A scraping program only needs to say "Show me this page" just like a browser does, and then it processes the information. It does not necessarily get detected, other than by the fact that one request can lead to a chain of many other queries immediately following it.
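One way a site might notice that chain of queries is to count requests per client over a short time window. The sketch below is a hypothetical sliding-window heuristic with made-up thresholds (`limit`, `window`), not a description of what Facebook actually does. A scraper that simply slows down slips under it, which fits the point that scraping is not necessarily detected.

```python
from collections import defaultdict, deque


class BurstDetector:
    """Flag a client that makes more than `limit` requests within `window` seconds.

    A hypothetical heuristic: a person clicking around makes a few requests,
    while a scraper following every link tends to fire many in quick succession.
    """

    def __init__(self, limit: int = 30, window: float = 10.0):
        self.limit = limit
        self.window = window
        self.history = defaultdict(deque)  # client id -> timestamps of recent requests

    def record(self, client: str, timestamp: float) -> bool:
        """Record one request (timestamps assumed non-decreasing); return True if it looks like a bot."""
        times = self.history[client]
        times.append(timestamp)
        # Drop requests that have fallen out of the time window.
        while times and timestamp - times[0] > self.window:
            times.popleft()
        return len(times) > self.limit


if __name__ == "__main__":
    detector = BurstDetector(limit=3, window=1.0)
    print([detector.record("fast-client", t) for t in (0.0, 0.1, 0.2, 0.3)])
```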

Another way to detect it is if you see data that was only available on your website turning up in some report outside your network, and you didn't supply that report. It is then logical to assume that they, or someone they are affiliated with, built an application to scrape the website and compile the report.

Scraping can be done by anyone with some basic web programming knowledge. It is also not something a site can really stop, especially if the site has a lot of public information that requires no special privileges to view.
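Sites do have one standard way to ask bots to stay out of certain pages: a `robots.txt` file. The sketch below uses Python's standard `urllib.robotparser` to check a URL the way a polite crawler would; the rules and the helper name `allowed` are made up for illustration. Nothing forces a scraper to run this check, which is why robots.txt cannot actually stop one.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules: every bot is asked to stay out of /private/.
ROBOTS_TXT = [
    "User-agent: *",
    "Disallow: /private/",
]


def allowed(robots_lines: list[str], url: str, user_agent: str = "*") -> bool:
    """Check a URL against robots.txt rules, as a well-behaved crawler chooses to do."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/private/profile", "https://example.com/public/page"):
        print(url, "allowed" if allowed(ROBOTS_TXT, url) else "disallowed")
```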

So when Mark Zuckerberg said this, it was not the big issue Infowars seemed to think it was. Anyone with a web page of any kind saying "We detected scraping" is saying something about as meaningful as "I breathe oxygen." It is constantly happening to every website, by many bots and sometimes intentionally by people. The vast majority of it is not for nefarious purposes.

Yesterday Alex Jones said he remembers learning about scraping in high school. This is wrong. Given his age, the term was not popular or common vernacular when he would have been in high school.

In 1993-1994, HTML and web pages were still getting only minor experimental work by some people at universities, and they did not go live for commercial purposes and the wider world until a year or two after that. In fact, the internet was very anti-commercial up to that point.

So the web exploded right around the time Alex Jones would have graduated, or after he was already out of high school.

Up to that point, web scraping (or scraping) would not have been taught in high school, or even at most universities. If he took some programming classes, though, he very well could have encountered the term "parsing."

Scraping is essentially a more specific kind of parsing. Parsing is something you do within your own program; scraping is the same thing applied to data pulled from a source you don't have control over.

This may not be important to many of you, but like I said, the incorrect information was bugging me a bit, so I felt the need to speak up.

ARPA evolved into DARPA, and I'm guessing that is why Knight used the term DARPANET even though it was in fact ARPANET. Many times I'll use the term War Department, because it more accurately reflects the true mission of the DOD.

It's not to be misleading, but rather to be more accurate. Knight probably knows that most people don't know what ARPA is, so to drive it home he said DARPANET. That's my guess, anyhow.

It also emphasizes the fact that the internet has pretty much been weaponized since its inception, seeing as the agency that created it was funded by the 'War Department' (or DOD).

As far as scraping goes, I don't think just any old bot can scrape all of FB's data, because of the various privacy levels, unless of course the bot had admin-level access to every single Facebook user account. It doesn't even make sense that FB would need to scrape the data, since users are submitting the information to them directly.

My guess is that FB immediately takes submitted data and collates it into various categories for "advertising purposes".

Wikipedia:

So yes, it has changed its name several times. The predecessor to the internet, though, was never called DARPANET, simply ARPANET.

Yeah, but I haven't seen anyone claim that a bot has done that. I specifically kept referring to public information in my post (if anyone can view it, then it can be scraped), and briefly mentioned access levels, which coincides with your point about admin-level access.

My goal here was to explain what scraping is, and that it is not difficult and is frequently done. Hell, a lot of people write little programs for interfacing with Steem that scrape the steemd.com output.

Infowars treated Zuckerberg's statement about scraping as bigger and more nefarious than it was. Pretty much every website that is linked or referenced on some other website is regularly scraped by something. So Zuckerberg's statement deserved a sarcastic "No shit?" more than concern that he had just said something scary. If you have a website, it is highly likely being scraped from time to time. About the only way it won't be is if it is on no search engines and is not linked from any other website that is itself scraped, or if it uses an intentional custom encryption format that a standard HTML scraper can't process; but then regular browsers would not be able to use it without extensions/plugins either.

Then there's Alex talking about how he was taught about scraping in high school, when I know how old he is and that the term would not have been used at that time. Perhaps he learned about parsing.

Curated for #informationwar (by @openparadigm)

Relevance: Accuracy in Reporting


To be sure, the devil is in the details. I was in high school before the internet existed as a commercial application, and knew about ARPANET because my advanced electronics instructor was working on improving it for commercial application back in 1982. They could barely send text back and forth, and it was mainly used to connect the different science projects for the military.

It is extremely important that information be accurate, and this was bugging me too. Glad you cleared this up, and thanks.

It's good to be fair about correcting the info given by news agencies or journalists, even if they're not biased. We're all human.

I kind of get what you're saying about web scraping; it is a very good explanation. But an infographic about this would be a good resource to help all of us understand :)

These last few days are the first time I'd heard the term 'scraping'.

I figured it was just another hijacked word someone made up to appear smart.

Nah, it's a legit term. Zuckerberg threw it out there, and then non-programmer journalists just started running with it without really knowing what they were talking about. :)

When do journalists EVER know what they are talking about?

Regarding Infowars... I've found that it's just about as accurate as any liberal publication, and much more so than CNN or WaPo, for example.

Some sections are more accurate than others... I pretty much skip his 'health' section.

I like Infowars. I do keep my mind my own as illustrated by this post, but I check out something pretty much every day from Infowars. Especially when I'm slapping the @newsagg posts together.

Alex Jones is such a cretin...

I've seen him be a major asshole before. Yet I just remind myself he is human.

Well, he says what he's paid to.

I don't buy that.

His overlords do!

Distractions

You guys have done a great job. Your work is sophisticated.

Your comments are full of wisdom, with special bits.