Image processing and subpixel edge detection

Subpixel edge detection is one of the most useful tools for engineers who design vision systems that detect or recognize objects in images. This article explains the concept and the image processing fundamentals on which it rests.

Image representation

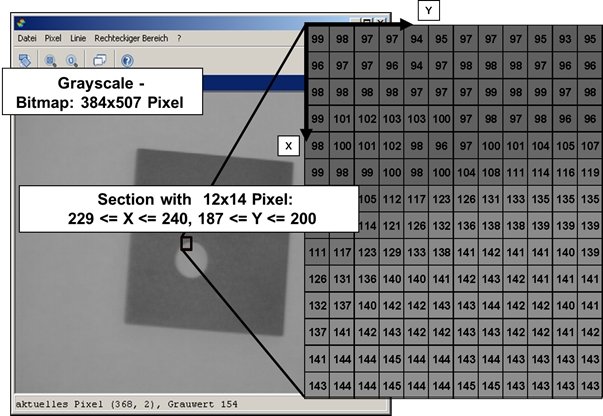

Intensity images are the basis of many optical measurement applications. The intensity, perceived as brightness, is mapped to digital gray values, which is why these images are called grayscale images. The image is a grid of individual picture elements, so-called pixels. Each pixel holds a numerical value, its gray value. With 8-bit resolution the gray values range from 0 (black) to 255 (white); with 12-bit resolution there are 4096 gray levels. For processing and storage in software, a grayscale image can be represented as a matrix (Figure 1).

Figure 1. Computer based representation of grayscale images as matrix
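As a minimal sketch of this matrix representation, the snippet below stores a small grayscale image as a list of rows in plain Python. The pixel values and the helper names `gray_levels` and `max_gray` are made up for illustration; real applications would typically use an array library instead of nested lists.

```python
# A tiny 3 x 4 grayscale image stored as a matrix (list of rows).
# The values are invented for illustration; at 8 bit, 0 is black and 255 is white.
image = [
    [0,  64, 128, 255],
    [0,  64, 128, 255],
    [10, 70, 140, 250],
]


def gray_levels(bit_depth):
    """Number of distinct gray values that the given bit depth can encode."""
    return 2 ** bit_depth


def max_gray(bit_depth):
    """Largest gray value (white) for the given bit depth."""
    return gray_levels(bit_depth) - 1


height = len(image)      # number of rows
width = len(image[0])    # number of columns
pixel = image[2][1]      # gray value at row 2, column 1

print(height, width, pixel, gray_levels(8), gray_levels(12))
```

Indexing is `image[row][column]`, so the first index runs downwards and the second to the right.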

Digital images can be stored in different formats. For metrology, only formats that store the image data without loss are suitable. Lossy storage, as used in image compression to reduce file size, changes the image and may shift the location of edges and thus the measurement result. Suitable lossless formats include BMP (Windows bitmap), PNG (Portable Network Graphics [1]) and TIFF [2].

Image processing operators

Digital image processing uses different so-called “operators”. A distinction is made between point, local, global, and morphological operators.

Image processing operations that change a pixel depending only on its own value and position, without considering its neighborhood, are called point operations. Examples are brightness correction and the inversion of a grayscale image. The widely used “gamma correction”, which adapts images to human visual perception, is also a point operator: it applies a power function whose exponent is called gamma. Raising the gray values (normalized to the range 0 to 1) to this power stretches one part of the gray scale non-linearly and compresses the other. Gamma values larger than one make the image darker, values smaller than one make it brighter.
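The point operators mentioned here can be sketched in a few lines of plain Python. The function names are hypothetical, an 8-bit gray scale is assumed by default, and the gamma correction normalizes the gray values to the range 0 to 1 before applying the power function.

```python
def invert(image, max_value=255):
    """Inversion: black becomes white and vice versa."""
    return [[max_value - g for g in row] for row in image]


def adjust_brightness(image, offset, max_value=255):
    """Brightness correction: add an offset and clip to the valid gray scale."""
    return [[min(max_value, max(0, g + offset)) for g in row] for row in image]


def gamma_correct(image, gamma, max_value=255):
    """Gamma correction: normalize to 0..1, raise to the power gamma, rescale."""
    return [[round(max_value * (g / max_value) ** gamma) for g in row]
            for row in image]


row = [[0, 128, 255]]
print(gamma_correct(row, 2.0))   # mid gray becomes darker
print(gamma_correct(row, 0.5))   # mid gray becomes brighter
```

Each output pixel depends only on the input pixel at the same position, which is what makes these point operators. Black and white stay fixed under gamma correction; only the values in between move.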

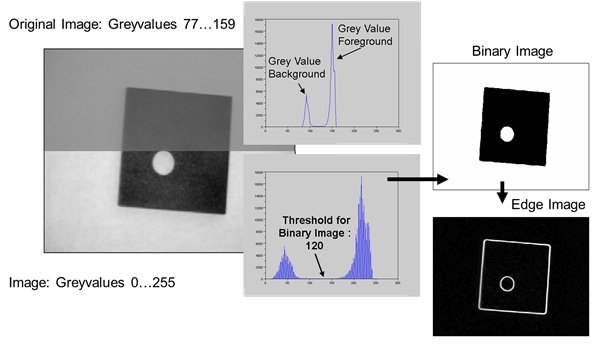

Figure 2 shows two further point operators. Contrast enhancement, also called histogram stretching, changes the gray values so that the entire available gray scale is used. Image segmentation often uses global thresholding: a binary (black-and-white) image is created by displaying pixels below the threshold as black and pixels above it as white. This method is also known as binarization. A suitable threshold can be determined from the histogram of the gray values if their distribution is bimodal. A well-known computational method for selecting the threshold is described in [3].
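Both operators can be sketched as follows. This is an illustrative version with hypothetical function names: the stretching is a simple linear mapping of the darkest value to 0 and the brightest to the maximum, and pixels exactly equal to the threshold are treated as black, which is an assumption the text leaves open.

```python
def stretch_contrast(image, max_value=255):
    """Histogram stretching: map [min, max] of the image linearly to [0, max_value]."""
    flat = [g for row in image for g in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A uniform image has no contrast to stretch.
        return [row[:] for row in image]
    return [[round((g - lo) * max_value / (hi - lo)) for g in row]
            for row in image]


def binarize(image, threshold, max_value=255):
    """Global thresholding: above the threshold is white, everything else black."""
    return [[max_value if g > threshold else 0 for g in row] for row in image]


image = [[50, 100], [150, 200]]
print(stretch_contrast(image))   # uses the full range 0..255
print(binarize(image, 120))      # only black and white remain
```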

Figure 2. Contrast enhancement by histogram stretching, binary image with threshold from bimodal histogram, and edge image derived from the binary image
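The threshold selection method of reference [3] (Otsu) picks the threshold that maximizes the variance between the two resulting classes of gray values. The following is a compact sketch of that idea, not a tuned implementation; the function name and the convention that gray values less than or equal to the threshold form the dark class are assumptions.

```python
def otsu_threshold(image, levels=256):
    """Return the threshold t that maximizes the between-class variance.

    Gray values <= t form the dark class, values > t the bright class.
    """
    hist = [0] * levels
    for row in image:
        for g in row:
            hist[g] += 1

    total = sum(hist)
    sum_all = sum(g * n for g, n in enumerate(hist))

    best_t, best_score = 0, -1.0
    count_dark = 0   # number of pixels in the dark class
    sum_dark = 0     # sum of gray values in the dark class
    for t in range(levels):
        count_dark += hist[t]
        sum_dark += t * hist[t]
        count_bright = total - count_dark
        if count_dark == 0:
            continue          # dark class still empty
        if count_bright == 0:
            break             # bright class empty from here on
        mean_dark = sum_dark / count_dark
        mean_bright = (sum_all - sum_dark) / count_bright
        # Proportional to the between-class variance (constant factor dropped).
        score = count_dark * count_bright * (mean_dark - mean_bright) ** 2
        if score > best_score:
            best_score, best_t = score, t
    return best_t


image = [[10, 12, 11], [200, 202, 201]]
print(otsu_threshold(image))
```

For a clearly bimodal histogram, every threshold between the two peaks separates the classes equally well; this sketch returns the lowest such value.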

For local operators, the new gray value of a pixel depends not only on its previous value but also on the gray values of the pixels surrounding it. These surroundings are defined by a so-called neighborhood; a typical one is the 8-neighborhood (3 x 3 pixels). Figure 3 shows two operators that consider the pixel itself and its eight neighbors. In this context they are referred to as filters, and they are used to eliminate image distortions such as noise.

Figure 3. Local operators for eliminating image distortion: mean and median filter
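A minimal sketch of the two filters over a 3 x 3 neighborhood is shown below. The function names are hypothetical, and border pixels are simply left unchanged, which is one of several possible border strategies and an assumption made here for brevity.

```python
from statistics import median


def neighborhood(image, r, c):
    """The pixel at (r, c) together with its eight neighbors."""
    return [image[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1)]


def filter3x3(image, combine):
    """Apply `combine` to every 3 x 3 neighborhood; borders stay unchanged."""
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = combine(neighborhood(image, r, c))
    return out


def mean_filter(image):
    return filter3x3(image, lambda values: round(sum(values) / len(values)))


def median_filter(image):
    return filter3x3(image, median)


# A single bright outlier in an otherwise uniform image.
noisy = [[10, 10, 10], [10, 100, 10], [10, 10, 10]]
print(mean_filter(noisy)[1][1])    # outlier is spread out: 20
print(median_filter(noisy)[1][1])  # outlier is removed: 10
```

The example illustrates the practical difference: the mean filter smears an outlier into its surroundings, while the median filter discards it.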

Local filters in which each pixel of the filtered image is calculated as a weighted sum of the pixels in its neighborhood are referred to as linear filters. The underlying mathematical procedure is a so-called convolution. There are many different linear filters [4]. Filters such as the mean filter described above, or the Gaussian filter, in which the weighting factors depend on the distance to the pixel under consideration according to the shape of the Gaussian curve, are used to smooth the image. They thus act as low-pass filters. The median filter, in which the median of the surrounding pixels determines the filtered pixel, also has a low-pass effect, although it is a non-linear filter and not a convolution.
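The weighted sum behind a linear filter can be sketched as a small convolution routine. The names `convolve_valid`, `GAUSS_3X3` and `BOX_3X3` are hypothetical; the 3 x 3 Gaussian weights are a commonly used approximation, and the output covers only pixels whose full neighborhood lies inside the image, so it is smaller than the input.

```python
# Commonly used 3 x 3 approximation of a Gaussian; the weights sum to 1.
GAUSS_3X3 = [
    [1 / 16, 2 / 16, 1 / 16],
    [2 / 16, 4 / 16, 2 / 16],
    [1 / 16, 2 / 16, 1 / 16],
]

# Mean (box) filter: all nine weights are equal.
BOX_3X3 = [[1 / 9] * 3 for _ in range(3)]


def convolve_valid(image, kernel):
    """Discrete 2D convolution, evaluated only where the kernel fits completely."""
    h, w = len(image), len(image[0])
    k = len(kernel) // 2
    out = []
    for r in range(k, h - k):
        out_row = []
        for c in range(k, w - k):
            acc = 0.0
            for i in range(-k, k + 1):
                for j in range(-k, k + 1):
                    # Convolution mirrors the kernel: image index is r - i, c - j.
                    acc += kernel[i + k][j + k] * image[r - i][c - j]
            out_row.append(acc)
        out.append(out_row)
    return out


noisy = [[10, 10, 10], [10, 100, 10], [10, 10, 10]]
print(convolve_valid(noisy, GAUSS_3X3))   # [[32.5]]
```

Because the center weight of the Gaussian kernel is larger than the others, the filtered value stays closer to the original pixel than with the mean filter.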

Edge detection

In contrast to the low-pass filters, the high-pass filters are used for highlighting edges.

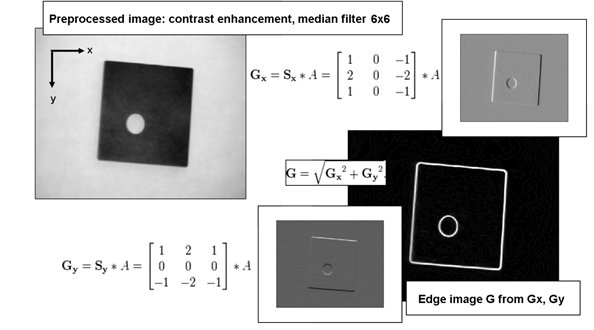

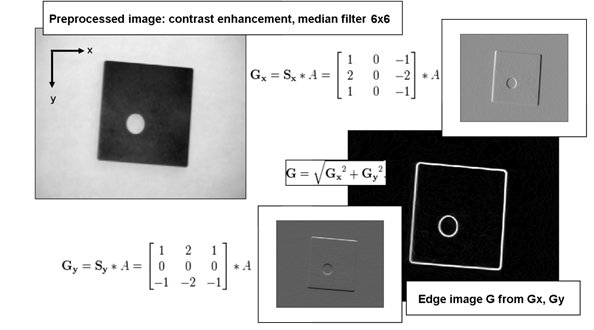

Figure 4 shows an edge image generated with the so-called “Sobel filter”. In this example, the image captured by the camera is first preprocessed with the low-pass filters described above to remove distortions. Subsequently, the two filter masks of the Sobel filter highlight edges in two directions. Superimposing the two resulting images yields the edge image. This type of edge filter is based on discrete differentiation of the image and is therefore also referred to as a gradient filter.
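A rough sketch of the Sobel step is given below. The two masks are the standard Sobel masks; combining the two directional results as the gradient magnitude is one common way to superimpose them and is an assumption here, as are the function names and the choice to leave border pixels at zero.

```python
import math

# Standard Sobel masks.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]    # responds to vertical edges
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]    # responds to horizontal edges


def apply_mask(image, mask, r, c):
    """Weighted sum of the 3 x 3 neighborhood of (r, c) with the given mask."""
    return sum(mask[i][j] * image[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))


def sobel_magnitude(image):
    """Gradient magnitude per pixel; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = apply_mask(image, SOBEL_X, r, c)
            gy = apply_mask(image, SOBEL_Y, r, c)
            out[r][c] = math.hypot(gx, gy)
    return out


# Vertical step edge between column 2 and column 3.
step = [[0, 0, 0, 255, 255] for _ in range(5)]
edges = sobel_magnitude(step)
print(edges[2])   # large values next to the step, 0 elsewhere
```

Each mask differentiates in one direction and averages over three rows or columns in the other, with the middle one weighted double.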

Gradient filters have high-pass properties and therefore amplify image noise. For this reason, the filters are designed so that their result is averaged over multiple rows or columns. Another representative of this kind of edge filter is the Prewitt filter [4, 5]. Edge positions can also be determined from the zero crossings of the second derivative, as the Laplacian filter does [4, 5]. There are also edge detectors that combine several filters, such as the Canny edge detector [6].
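The zero-crossing idea is easiest to see in one dimension. The sketch below works on a single row of gray values rather than a full image and uses hypothetical function names; it reports the pairs of indices between which the discrete second derivative changes sign.

```python
def second_derivative(profile):
    """Discrete second derivative at the interior samples of a gray value row."""
    return [profile[i - 1] - 2 * profile[i] + profile[i + 1]
            for i in range(1, len(profile) - 1)]


def zero_crossings(profile):
    """Index pairs (i, j) of the profile between which the second derivative
    changes sign. Samples where it is exactly zero are skipped over."""
    d2 = second_derivative(profile)
    crossings = []
    prev = None   # position in d2 of the last non-zero value
    for k, value in enumerate(d2):
        if value == 0:
            continue
        if prev is not None and d2[prev] * value < 0:
            # d2[k] belongs to profile index k + 1.
            crossings.append((prev + 1, k + 1))
        prev = k
    return crossings


profile = [10, 10, 20, 70, 100, 110, 110]
print(second_derivative(profile))   # [10, 40, -20, -20, -10]
print(zero_crossings(profile))      # [(2, 3)]
```

The sign change lies between indices 2 and 3, which is also where the gray values rise most steeply. On noisy data the second derivative changes sign very often, so smoothing beforehand is usually needed.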

Figure 4. Edge detection using Sobel filter

A binary image (Figure 2) is also suitable for edge determination. Here the global threshold used to segment the image into foreground and background determines the edge position. This approach is beneficial when only one of several edges in an image must be identified (e.g. shadow edges) or when edge smoothness is low (“fringed” or “pixelated” edges).

In images from camera sensors on coordinate measuring machines (CMMs), edges are determined along search paths that run perpendicular to the edges of the nominal shape of the measurement object (Figure 5). For this purpose, a region around the edge (ROI, Region of Interest, or AOI, Area of Interest) is selected within the camera image (FOV, Field of View). The region follows the shape of the edge, e.g. a ring or ring segment for a circle. The search paths are generated within this region, and one edge point is determined along each of them. Either the maximum of the first derivative along the search path or a threshold value serves as the edge criterion. The first criterion corresponds to the gradient-based edge detection described above, the second to edge detection based on a binary image. With the threshold criterion, a distinction is made between a global threshold, which applies to the entire edge region, and a local threshold, which is determined individually for each search area or search path.

Figure 5. Determination of edges along search paths using different criteria
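Both edge criteria can be sketched on a single search path, represented here as a list of gray values sampled along the path. The function names are hypothetical, and taking the midpoint between the darkest and brightest value as the local threshold is only one possible choice, assumed here for illustration. The results are at pixel resolution.

```python
def edge_by_gradient(profile):
    """Index i for which the step from profile[i] to profile[i + 1] is largest."""
    diffs = [abs(profile[i + 1] - profile[i]) for i in range(len(profile) - 1)]
    return max(range(len(diffs)), key=diffs.__getitem__)


def edge_by_threshold(profile, threshold):
    """First index i where the profile crosses the threshold between i and i + 1.

    Returns None if the profile never crosses the threshold.
    """
    for i in range(len(profile) - 1):
        if (profile[i] <= threshold) != (profile[i + 1] <= threshold):
            return i
    return None


def local_threshold(profile):
    """Assumed rule: midpoint between darkest and brightest value on this path."""
    return (min(profile) + max(profile)) / 2


path = [10, 12, 20, 70, 100, 110, 110]
print(edge_by_gradient(path))                          # 2
print(edge_by_threshold(path, local_threshold(path)))  # 2
```

A global threshold would be passed to `edge_by_threshold` unchanged for every search path, whereas the local variant recomputes it per path and therefore adapts to uneven illumination along the edge.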

Subpixel edge detection

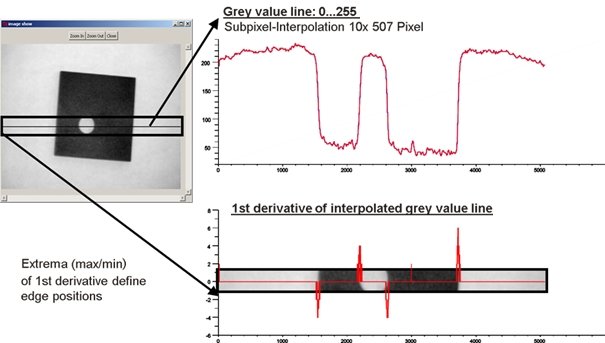

To determine the edge position more precisely than the pixel resolution allows, the position is interpolated between the pixels. This is called subpixel interpolation (Figure 6) [7].

Figure 6. Subpixel interpolation [8]

Figure 7. Grey value line and its 1st derivative along a search path
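The article does not prescribe a specific interpolation method, so the following sketch shows two common choices under that caveat: fitting a parabola through the gradient peak and its two neighbors, and linearly interpolating the position where the gray values cross a threshold. All function names are hypothetical.

```python
def subpixel_gradient_edge(profile):
    """Edge position from a parabola fitted to the peak of the gradient magnitude."""
    # Central differences; grad[k] belongs to profile index k + 1.
    grad = [(profile[i + 1] - profile[i - 1]) / 2
            for i in range(1, len(profile) - 1)]
    mags = [abs(g) for g in grad]
    k = max(range(len(mags)), key=mags.__getitem__)
    if k == 0 or k == len(mags) - 1:
        return float(k + 1)   # peak at the border: no neighbors to fit
    y0, y1, y2 = mags[k - 1], mags[k], mags[k + 1]
    denom = y0 - 2 * y1 + y2
    # Vertex of the parabola through (-1, y0), (0, y1), (1, y2).
    offset = 0.0 if denom == 0 else 0.5 * (y0 - y2) / denom
    return (k + 1) + offset


def subpixel_threshold_edge(profile, threshold):
    """Edge position where the linearly interpolated profile hits the threshold."""
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        if (a <= threshold) != (b <= threshold):
            return i + (threshold - a) / (b - a)
    return None


path = [0, 0, 0, 25, 100, 100, 100]
print(subpixel_gradient_edge(path))        # 3.25
print(subpixel_threshold_edge(path, 50))   # about 3.33
```

The two criteria generally give slightly different positions for the same gray value profile, so a measurement setup should use one of them consistently.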

Correct determination of the edge position requires that the light intensity always stays below the saturation level of the camera signal, because saturation can shift the apparent edge position.
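A simple way to guard against this is to check an image for saturated pixels before measuring. The sketch below is only an illustration: the function names are hypothetical, and the default tolerance of zero saturated pixels is an assumption, not a value taken from the article.

```python
def saturated_fraction(image, bit_depth=8):
    """Fraction of pixels that have reached the maximum gray value."""
    max_value = 2 ** bit_depth - 1
    flat = [g for row in image for g in row]
    return sum(1 for g in flat if g >= max_value) / len(flat)


def is_usable_for_measurement(image, bit_depth=8, max_fraction=0.0):
    """True if no more than `max_fraction` of the pixels are saturated."""
    return saturated_fraction(image, bit_depth) <= max_fraction


overexposed = [[255, 100], [255, 0]]
print(saturated_fraction(overexposed))          # 0.5
print(is_usable_for_measurement(overexposed))   # False
```

In practice the check would be restricted to the region around the edge, since saturation elsewhere in the image does not affect the edge position.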

To calculate features of the product shape, the detected edge points are linked into sequences of pixels by contour tracing [9]. These contour points are then transformed into coordinates, taking into account the image scale and the position of the camera sensor in the coordinate system of the CMM (Figure 8).

Figure 8. Simplified presentation of image processing for determination of circle’s parameters without subpixel interpolation
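The coordinate transformation and a very simple circle estimate can be sketched as follows. Several assumptions are made for illustration: the image axes are parallel to the machine axes, image rows increase in the negative machine y direction, `origin_mm` is the machine position of pixel (0, 0), and the circle is estimated from the centroid and mean distance of the points, which is only adequate when the points are spread evenly around the full circle. All names and numbers are hypothetical.

```python
import math


def pixel_to_machine(col, row, scale_mm_per_px, origin_mm):
    """Convert a pixel position to machine coordinates in millimeters.

    Assumes no rotation between image and machine axes and that rows
    increase in the negative machine y direction.
    """
    x0, y0 = origin_mm
    return (x0 + col * scale_mm_per_px, y0 - row * scale_mm_per_px)


def estimate_circle(points):
    """Center as centroid of the points, radius as mean distance to the center.

    Only a rough estimate; valid for points evenly distributed on the circle.
    """
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    radius = sum(math.hypot(x - cx, y - cy) for x, y in points) / n
    return cx, cy, radius


# Four contour pixels (col, row) on a circle of radius 10 px around (100, 100).
contour_px = [(110, 100), (100, 110), (90, 100), (100, 90)]
contour_mm = [pixel_to_machine(c, r, 0.01, (5.0, 20.0)) for c, r in contour_px]
print(estimate_circle(contour_mm))   # approximately (6.0, 19.0, 0.1)
```

With subpixel edge points, `col` and `row` would simply be floating-point values instead of integers; the transformation itself stays the same.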

More information can be found in the literature on image processing [4, 5, 10, 11] and at https://optinav.pl/blog/

Bibliography

- [1] ISO/IEC 15948 Informationstechnik – Computergrafik und Bildverarbeitung – Portable Netzwerkgrafik (PNG): Funktionelle Spezifikation (English: Information technology – Computer graphics and image processing – Portable Network Graphics (PNG): Functional specification), 2004-03.

- [2] TIFF, Revision 6.0, Adobe Systems Incorporated, USA 1992 (Internet, 14.04.2016: http://www.adobe.com/Support/TechNotes.html).

- [3] Otsu, N.: A threshold selection method from gray-level histograms, IEEE Transactions on Systems, Man, and Cybernetics, Vol. 9, No. 1, 1979, pp. 62-66.

- [4] Jähne, B.: Digitale Bildverarbeitung und Bildgewinnung, Springer-Verlag Berlin 2012, ISBN-13: 978-3642049514 (English: Jähne, B.: Digital Image Processing and Image Formation, Springer-Verlag Berlin 2016, ISBN-13: 978-3642049491).

- [5] Demant, C., Streicher-Abel, B., Waszkewitz, P.: Industrielle Bildverarbeitung: wie optische Qualitätskontrolle wirklich funktioniert, Springer Verlag, Berlin 2011, ISBN: 978-3-642-13096-0 (English: Demant, C., Streicher-Abel, B., Waszkewitz, P.: Industrial Image Processing, Visual Quality Control in Manufacturing, Springer Verlag, Berlin 2013, ISBN 978-3-642-33904-2).

- [6] Canny, J.: A computational approach to edge detection, IEEE Transactions on Pattern Analysis and Machine Intelligence, IEEE Computer Society Washington, DC, USA, vol. 8, 1986, pp. 679-698.

- [7] Töpfer, S.: Automatisierte Antastung für die hochauflösende Geometriemessung mit CCD-Bildsensoren, Dissertation, Technische Universität Ilmenau 2008.

- [8] Imkamp, D.: Multisensorsysteme zur dimensionellen Qualitätsprüfung, in: PHOTONIK Fachzeitschrift für optische Technologien, AT-Fachverlag GmbH Fellbach, Ausgabe 06/2015 (Internet, 14.02.2016: www.photonik.de/multisensorsysteme-zur-dimensionellen-qualitaetspruefung/150/21002/317557). (English: Imkamp, D.: Multi sensor systems for dimensional quality inspection, in: LASER+PHOTONICS 01/2016, AT-Fachverlag GmbH Fellbach (Internet, 14.02.2016: http://www.photonik.de/multi-sensor-systems-for-dimensional-quality-inspection/150/21404/321005)).

- [9] Pavlidis, T.: Algorithms for Graphics and Image Processing, Rockville, MD: Computer Science Press, USA 1982.

- [10] Sackewitz, M. (Hrsg.): Leitfaden zur industriellen Bildverarbeitung, Vision Leitfaden 13 (1. Auflage Vision Leitfaden 1, English: Bauer, N. (Hrsg.): Guideline for industrial image processing), Fraunhofer Allianz Vision, Erlangen 2012, ISBN 978-3-8396-0447-2.

- [11] VDI/VDE-Richtlinie 2632 Blatt 1 (part 1) Industrielle Bildverarbeitung – Grundlagen und Begriffe (English: Machine vision – Basics, terms, and definitions), April 2010.
