Mathematics, AI and the Human Brain

Earlier this week, Google scientists published an interesting article in Nature showing that animals (mice, in this case) learn a given behavior by mapping the distribution of the rewards/penalties they receive to the distribution of their own responses! If you are initiated in AI, you know this is a huge deal: it shows that learning in animals has a mathematical basis and, by extension, that we can teach machines (which learn via maths) to learn as animals do (you can read "humans" here for all intents and purposes).

Source: Image by ElisaRiva from Pixabay

For those not initiated in AI, my job here is to break this down for you, so that you can appreciate this remarkable discovery and perhaps realize for yourself that AI is here to stay, and that all that is left for us humans is to figure out a way to thrive with it rather than the alternative (not good).

So how do animals learn? Consider a brand-new baby, just born into the world, and how she navigates it. Almost everything she does initially is completely random (crying when hungry, etc.), but eventually she learns that crying gets her food. See what just happened? The stimulus was hunger, the action taken was to cry and the reward was food! Easy-peasy! Now, the next time the baby is hungry, what do you think she will do? Cry again, of course. She gets food, and the stimulus-action-reward sequence repeats until it becomes a learned behavior: crying when hungry solves the hunger problem.

Now reverse this scenario and imagine for a minute that the baby has callous or inhumane parents. The baby cries but gets no food. What do you think will happen to that baby over time? In fact, let's stretch this analogy a step further and assume the rather extreme scenario that the parents are psychic: they know when their baby is about to get hungry and always try to feed her just before she cries. Their psychic abilities are not perfect, however, so from time to time they miss their baby's hunger until after she begins to cry, and because they are inhumane, they refuse to feed her until she stops crying. Spend a moment thinking about this situation. What do you think the baby's learned behavior will be? You guessed it: such parents will raise a cry-less (and probably neurotic) baby. This is not pure fiction; scientists have studied this kind of situation and come to the same conclusion.

What I have described above is the core idea behind the classic AI technique called Reinforcement Learning (RL), which has arguably shown the most promise for bringing machines closer to human intelligence.
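For readers who like to see things in code, here is a minimal Python sketch of the stimulus-action-reward loop from the two baby scenarios. It is a toy for illustration only, not anything from the Google research: the action names (`cry`, `stay_quiet`), the functions `caring_parents`, `psychic_parents` and `learn`, the 90% "psychic" hit rate and the learning parameters (`alpha`, `epsilon`) are all hypothetical choices. The learner keeps a running estimate of how rewarding each action is, usually picks the best-looking one, and occasionally tries an action at random.

```python
import random

ACTIONS = ("cry", "stay_quiet")  # hypothetical action names for a hungry baby


def caring_parents(action, rng):
    """Caring parents: food (reward 1) whenever the baby cries, nothing otherwise."""
    return 1.0 if action == "cry" else 0.0


def psychic_parents(action, rng):
    """'Psychic but inhumane' parents: usually feed the baby before she cries
    (the 90% hit rate is an assumption), but never feed her while she is crying."""
    if action == "cry":
        return 0.0
    return 1.0 if rng.random() < 0.9 else 0.0


def learn(reward_fn, trials=2000, alpha=0.1, epsilon=0.1, seed=0):
    """Learn how rewarding each action is when the stimulus 'hungry' occurs."""
    rng = random.Random(seed)
    q = {a: 0.0 for a in ACTIONS}  # current value estimate for each action
    for _ in range(trials):
        # Usually pick the best-looking action; with probability epsilon pick at random
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=q.get)
        reward = reward_fn(action, rng)
        # Move the estimate a small step (alpha) toward the reward just received
        q[action] += alpha * (reward - q[action])
    return q


for name, parents in (("caring", caring_parents), ("psychic", psychic_parents)):
    q = learn(parents)
    print(name, "->", max(ACTIONS, key=q.get), {a: round(v, 2) for a, v in q.items()})
```

With the caring parents the learner settles on crying; with the psychic parents it settles on staying quiet, mirroring the cry-less baby. A single table of action values is about the simplest form of RL; practical systems add states, delayed rewards and function approximation on top of the same idea.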

An AI enthusiast could argue that RL has been around for a long time, so why the fuss about the recent Google article/research? The answer lies in the fascinating results obtained in this latest research.

You see, previous RL reward/penalty studies have usually used a binary stimulus-to-action encoding (e.g. you cry, you get food; you don't cry, you don't get food, etc.). The challenge with such binary encoding is that the world is far more complex than "0/1", "yes/no", "right/wrong", "left/right" or "black/white" input/output problems. Everyone knows that living in this world involves nuance, and humans are experts at navigating such nuances, having spent a lifetime learning them. For example, every human knows there are different shades of gray, but how can you make a machine learn that? It's really not as easy as it sounds, and the holy grail of AI research has been to encode such complex, human-like behavior into machines.

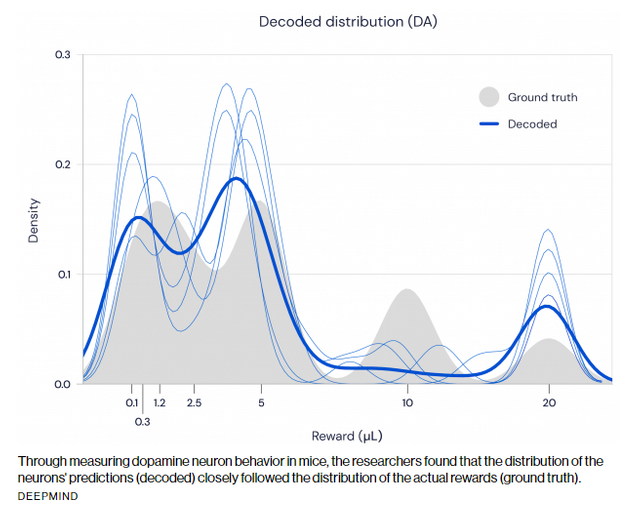

It seems the smart minds at Google have finally figured it out (or at least moved us closer) by using classic probability theory (yes, the kind you learnt in high school). By treating the stimulus itself as a random variable (i.e. the outcome of chance, which is close to how the world really works) and mapping the distribution of the response to the distribution of the input, they found both distributions to be essentially the same, as seen in the figure above. See how the decoded distribution matches the ground truth distribution? This means that there is a strong correspondence between the observed behavior (with all its variability) and the given input (with all its imperfection). Simply put, giving robots/machines imperfect stimuli during training may be the way to create robots that are as imperfect as humans.
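To make the "distribution in, distribution out" idea a little more concrete, here is a toy Python sketch. It is a simplification, not the code or the exact model from the Nature paper: the research describes learners that weigh good and bad surprises differently, and this sketch swaps in the closely related and shorter quantile version of that idea. The reward sources (`safe_reward`, `risky_reward`), the function names, the asymmetry values in `TAUS` and the learning rate are all hypothetical. Two reward sources with the same average (5) are fed both to a classic learner that tracks only the average and to a small population of "pessimistic" and "optimistic" units.

```python
import random


def learn_mean(sample_reward, steps=20000, alpha=0.02, seed=1):
    """Classic value learner: tracks only the average reward."""
    rng = random.Random(seed)
    v = 0.0
    for _ in range(steps):
        v += alpha * (sample_reward(rng) - v)
    return v


def learn_quantiles(sample_reward, taus, steps=20000, alpha=0.02, seed=1):
    """Population of units, one per tau. Unit i weighs positive surprises by tau
    and negative ones by 1 - tau, so it settles near the tau-quantile of the rewards."""
    rng = random.Random(seed)
    values = [0.0] * len(taus)
    for _ in range(steps):
        r = sample_reward(rng)
        for i, tau in enumerate(taus):
            if r < values[i]:
                values[i] -= alpha * (1.0 - tau)  # worse than expected
            else:
                values[i] += alpha * tau  # as good as or better than expected
    return values


def safe_reward(rng):
    """Hypothetical reward source: almost always about 5."""
    return rng.gauss(5.0, 0.5)


def risky_reward(rng):
    """Hypothetical reward source: about 1 or about 9, each half the time (mean is also 5)."""
    return rng.gauss(1.0, 0.5) if rng.random() < 0.5 else rng.gauss(9.0, 0.5)


TAUS = [0.1, 0.3, 0.7, 0.9]  # assumed mix of "pessimistic" to "optimistic" units
for name, source in (("safe", safe_reward), ("risky", risky_reward)):
    print(name,
          "| average:", round(learn_mean(source), 1),
          "| quantile units:", [round(v, 1) for v in learn_quantiles(source, TAUS)])
```

Both average trackers report roughly 5, so they cannot tell the two sources apart, while the quantile units sit close together for the safe source and spread out towards 1 and 9 for the risky one. Reading those units back as a set of quantiles is a crude analogue of the "decoded distribution" idea in the figure.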

If all this still sounds like a foreign language to you, don't worry about it too much for now, as I plan to run an AI school here on Steemit, where I will break down most of the complex AI concepts into easy-to-understand precepts. The school will run for as long as there is interest, and I will also try to respond to the most interesting questions and comments. Stay tuned!

aiplusfinance

That was informative. I am also a student of AI, and I am currently working on neural networks.

Thanks @asimgul. Glad to know another AI evangelist here. I might do a post on neural networks soon (I try to keep things as simple as possible and concentrate more on the concepts than on the maths), and I look forward to your input.

Thanks bro. I was looking for AI-related content on Steemit, but I didn't find anything useful except yours. Can you suggest a good course on neural networks and calculus?

For neural networks, I'd suggest Coursera or similar platforms; anyone will tell you the same. You can also buy a book on the topic and implement the algorithms in it.

Currently, I am following Andrew Ng's Machine Learning course. Thanks bro for the info.
