Gridcoin GPU mining (6): Obtaining the maximum performance out of your GPUs

Welcome to the sixth installment of the Gridcoin GPU mining series, continuing our exploration of computational science done through the BOINC network and rewarded through Gridcoin, a cryptocurrency that rewards BOINC computations on top of Proof-of-Stake.

Although BOINC is a volunteer effort, it has a very competitive community. Every BOINC project maintains lists of Top Participants, Top Teams and Top Hosts, and many BOINC crunchers study those lists carefully, comparing the performance of their hardware with that of other crunchers. Of course, with Gridcoin there is also a monetary incentive to maximize the performance of your hardware: more successfully completed BOINC tasks also mean a larger Gridcoin income. So I am going to share both the obvious and the less obvious tips for squeezing every bit of performance out of your GPUs, maximizing your BOINC output and, in turn, your Gridcoin earnings. I am currently mostly involved with the MilkyWay@home BOINC project, so some tips will be relevant only to that particular project, but others will improve your GPU performance across all BOINC GPU applications (and maybe even your Proof-of-Work gigahashes, if that's your thing).

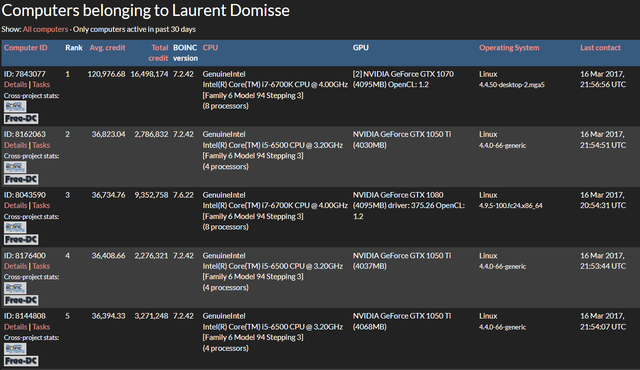

Laurent Domisse, the top SETI@home user, has 107 machines with numerous high-end GPUs crunching for science. Yes, the competition is tough.

1. Choose your GPU and BOINC project carefully

I've already written about this in one of my previous articles, but it's worth repeating here. Unlike simple hashing, which mostly deals with integers, BOINC (and computational science in general) deals with real numbers and floating-point operations. The majority of BOINC projects use FP32 (so-called single-precision) computations, but MilkyWay@home (and some other BOINC projects) require FP64, or double-precision. So yes, your shiny new GTX 1080 Ti can achieve 10.8 TFLOPS in FP32, but only 0.34 TFLOPS in FP64, so using it for MilkyWay@home is a waste of resources and it will perform terribly there. Use it for FP32 BOINC projects instead (there are plenty of them). In fact, if you check MilkyWay's Top Hosts list, you will see that it is populated mostly with AMD 7970 and R9 280X GPUs, which are pretty much outdated but still renowned for their high FP64 performance at affordable prices. So choose your GPUs and BOINC projects carefully if you want maximum performance. Don't bring a knife to a gunfight.

Nvidia GeForce GTX 1080 Ti. So awesome. Except in FP64.
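To put the FP32/FP64 gap for the GTX 1080 Ti in numbers, here is a minimal Python sketch using only the figures quoted above. The `GpuSpec` class and its method names are hypothetical helpers for illustration, and the TFLOPS values are theoretical peaks, not measured BOINC throughput.

```python
from dataclasses import dataclass


@dataclass
class GpuSpec:
    """Theoretical peak throughput of a GPU (hypothetical helper)."""
    name: str
    fp32_tflops: float  # single-precision peak
    fp64_tflops: float  # double-precision peak

    def fp64_fraction(self) -> float:
        """FP64 throughput as a fraction of FP32 throughput."""
        return self.fp64_tflops / self.fp32_tflops

    def peak_for(self, precision: str) -> float:
        """Peak TFLOPS for the precision a BOINC project needs: "fp32" or "fp64"."""
        if precision == "fp32":
            return self.fp32_tflops
        if precision == "fp64":
            return self.fp64_tflops
        raise ValueError(f"unknown precision: {precision!r}")


gtx_1080_ti = GpuSpec("GTX 1080 Ti", fp32_tflops=10.8, fp64_tflops=0.34)
frac = gtx_1080_ti.fp64_fraction()
print(f"{gtx_1080_ti.name}: FP64 = {frac:.3f} x FP32 (about 1/{round(1 / frac)})")
print(f"FP32 project: {gtx_1080_ti.peak_for('fp32')} TFLOPS, "
      f"FP64 project: {gtx_1080_ti.peak_for('fp64')} TFLOPS")
```

The same class can hold any other card's spec-sheet numbers; the figure worth comparing is always the one that matches the precision your BOINC project requires.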

2. Free up some CPU cores

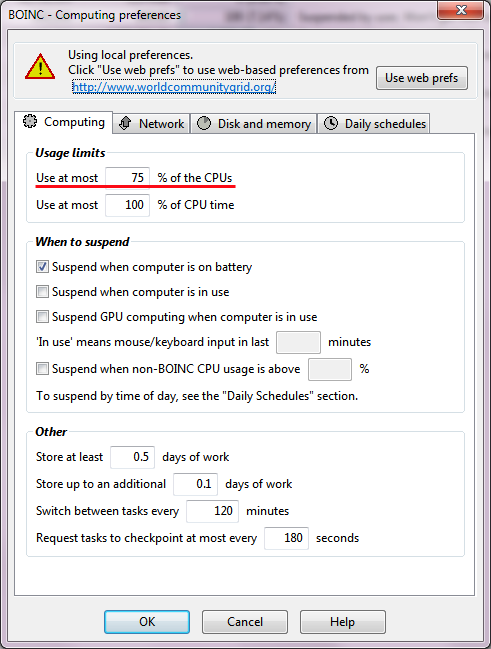

Typical newbie mistake: loading all CPU and GPU cores with BOINC tasks. CPU utilization at 100% all the time, so that's surely the maximum performance I can get out of my BOINC machine, right? Well, not quite. With your CPU busy all the time, GPU tasks will often stall, severely reducing your overall BOINC output. To put it simply, there are no pure GPU workloads: the CPU is often needed to assist with BOINC computations (preparing data, launching work on the GPU and handling the results). And if your CPU is 100% busy, your GPU has to wait for its turn. That's bad: you don't want your GPU tasks waiting and stalling, you want them running at full throttle all the time. So free up some CPU cores now. There is an option in BOINC Manager just for that:

Here it is, under Options->Computing Preferences: use at most 75% of the cores (or any other percentage you choose).
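A rough sketch of what that percentage means in whole cores. It assumes the BOINC client multiplies your logical core count by the percentage, rounds down and never uses fewer than one core; that rounding rule is my assumption, so confirm it by counting the running CPU tasks in BOINC Manager. `boinc_cpu_cores` and `pct_to_free` are hypothetical helper names.

```python
import math


def boinc_cpu_cores(logical_cores: int, max_ncpus_pct: float) -> int:
    """Cores BOINC may use, ASSUMING it rounds down and keeps at least one core."""
    return max(1, math.floor(logical_cores * max_ncpus_pct / 100))


def pct_to_free(logical_cores: int, cores_to_free: int) -> int:
    """Smallest whole percentage that leaves exactly cores_to_free cores idle
    under the rounding assumption above (valid for up to 100 cores)."""
    keep = logical_cores - cores_to_free
    return -(-100 * keep // logical_cores)  # integer ceiling division


for cores in (4, 6, 8):
    used = boinc_cpu_cores(cores, 75)
    print(f"{cores} cores at 75%: {used} for BOINC, {cores - used} free; "
          f"to free exactly 1 core use {pct_to_free(cores, 1)}%")
```

Under this assumption, 75% frees one core on a quad-core machine but two on a six-core one, so it is worth working out the percentage for your own core count.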

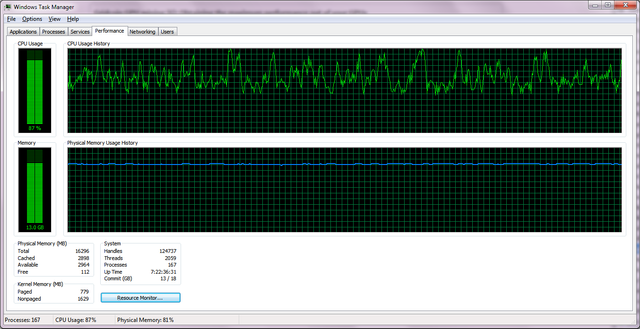

How many cores should you free up, and how can you be sure you are doing it right? Open Task Manager and monitor your CPU utilization. Are there any flat lines at 100%? Free up more cores. This is the CPU utilization history for my machine (ID 439806), and you will probably want your chart to look approximately the same (a few spikes are OK).
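If you would rather check for those flat lines programmatically than eyeball the chart, the sketch below flags long runs of saturated CPU samples. The 99% threshold and the 30-sample minimum run are arbitrary assumptions, and the samples would have to come from elsewhere, for example one reading per second from the third-party `psutil.cpu_percent(interval=1)`.

```python
from typing import List, Optional, Tuple


def saturated_runs(samples: List[float], threshold: float = 99.0,
                   min_run: int = 30) -> List[Tuple[int, int]]:
    """Return (start_index, length) for every run of at least min_run
    consecutive samples at or above threshold percent (a "flat line")."""
    runs: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, value in enumerate(samples):
        if value >= threshold:
            if start is None:
                start = i  # a saturated stretch begins
        elif start is not None:
            if i - start >= min_run:
                runs.append((start, i - start))
            start = None  # the stretch ended
    # A stretch may still be open at the end of the recording.
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - start))
    return runs


# Hypothetical one-second samples: a 40 s flat line and a harmless 5 s spike.
samples = [85.0] * 20 + [100.0] * 40 + [90.0] * 10 + [100.0] * 5 + [80.0] * 10
for start, length in saturated_runs(samples):
    print(f"CPU pinned at 100% for {length} s starting at t={start} s: free up another core")
```

Short spikes are ignored on purpose, matching the advice that a few spikes are OK.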

Yes, following these instructions will certainly reduce your CPU output. But this article is about GPU performance, first and foremost, and GPU tasks bring in many more BOINC credits. You want your machine at the top, striking stunned disbelief into the hearts of other BOINC crunchers? Then free up some CPU cores and let your GPU tasks fly. When you hit record numbers, no one will say "but one of your CPU cores is underutilized".

3. Run multiple BOINC tasks per GPU

Modern GPUs are becoming extremely powerful. The GTX 1080 Ti mentioned earlier is equipped with 11 GB of memory and 10.8 TFLOPS of FP32 computing power (comparable to the fastest supercomputers only 15-16 years ago). To put it simply, many BOINC tasks are not yet demanding enough to use such a large computing resource efficiently. However, there is a solution: run multiple GPU tasks simultaneously. Your average runtimes will increase, of course, but so will your overall BOINC output.

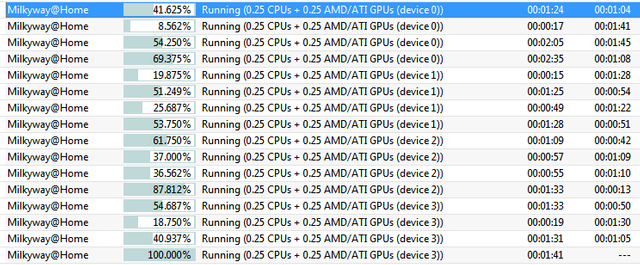

This is especially important for short, non-memory-intensive tasks, which usually complete in a minute or less (MilkyWay@home is notorious for its short-running tasks). With such a workload, your GPU has to switch tasks every minute, finishing the previous task and loading a new one, with some (inevitable) idling in between. But if multiple tasks run concurrently, your GPU never goes fully idle: concurrent tasks almost never finish at the same time, so the tasks that are finishing (and idling) are balanced by other tasks running at full throttle.

I have 4 GPUs each running 4 MilkyWay@home tasks (16 tasks in total). This is how they look in my BOINC Manager - no two progress bars are the same. A mix of ending, running and starting tasks ensures that no GPU ever goes idle.
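Why staggered tasks keep the GPU busy can be illustrated with a toy model. This is a sketch under strong simplifying assumptions: every task slot alternates between a fixed stretch of GPU work and a fixed idle transition (finishing one task, loading the next), the slots are evenly staggered, time is counted in whole seconds, and running tasks do not slow each other down. The 50-second and 10-second figures are hypothetical, not MilkyWay@home measurements.

```python
def gpu_busy_fraction(tasks_per_gpu: int, compute_s: int, gap_s: int) -> float:
    """Fraction of 1-second ticks in which at least one task slot is doing GPU work.

    Toy model (assumption): each slot repeats compute_s seconds of GPU work
    followed by gap_s idle seconds between tasks, the slots are evenly staggered,
    and concurrent tasks do not slow each other down.
    """
    period = compute_s + gap_s
    offsets = [k * period // tasks_per_gpu for k in range(tasks_per_gpu)]
    busy = 0
    for t in range(period):  # the pattern repeats every period, so one cycle suffices
        if any((t + off) % period < compute_s for off in offsets):
            busy += 1
    return busy / period


for n in (1, 2, 4):
    print(f"{n} task(s) per GPU: GPU busy {gpu_busy_fraction(n, 50, 10):.0%} of the time")
```

In reality concurrent tasks share the GPU, which is why individual runtimes grow; the model only shows why the idle gaps between tasks disappear.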

So the question is: how many tasks should I run on a single GPU to obtain maximum BOINC output? Unfortunately, there is no universal answer, since it depends on many factors. Generally, for very long and demanding tasks (for example, PrimeGrid Genefer World Record workunits, which run for 72 hours or more), one task per GPU is usually more than enough. For short tasks (like MilkyWay@home), I think the optimum is somewhere between 3 and 8 per GPU. Some experimenting is needed to find out what works best for your hardware. If your system becomes slow and unresponsive, decrease the number of running tasks.
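Since experimenting is the only reliable way to find the optimum, it helps to compare settings by throughput rather than by runtime. A minimal sketch, assuming tasks of equal size (and credit): tasks per hour equals the number of concurrent tasks times 3600, divided by the average runtime in seconds. The runtimes below are made up for illustration; substitute averages from your own results.

```python
from typing import Dict


def tasks_per_hour(tasks_per_gpu: int, avg_runtime_s: float) -> float:
    """Steady-state throughput of one GPU running tasks_per_gpu tasks at a time."""
    return tasks_per_gpu * 3600 / avg_runtime_s


def best_setting(avg_runtimes: Dict[int, float]) -> int:
    """Return the tasks-per-GPU value with the highest throughput.

    avg_runtimes maps tasks per GPU -> average task runtime in seconds
    observed with that setting.
    """
    return max(avg_runtimes, key=lambda n: tasks_per_hour(n, avg_runtimes[n]))


# Made-up runtimes for illustration only; replace them with your own averages.
avg_runtimes = {1: 60.0, 2: 100.0, 4: 180.0, 8: 400.0}
for n, runtime in avg_runtimes.items():
    print(f"{n} task(s)/GPU, {runtime:.0f} s each: {tasks_per_hour(n, runtime):.0f} tasks/hour")
print("Best setting:", best_setting(avg_runtimes), "tasks per GPU")
```

Runtimes grow with every step, yet throughput keeps rising until the GPU is saturated; past that point (8 tasks in this made-up example) extra tasks just queue behind each other.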

Artefacts? Corrupted frames? Video stutter? General unresponsiveness? Many BOINC tasks running simultaneously can hammer the GPU pretty hard. Revert to one task per GPU and things will return to normal (there is no permanent damage).

By default, BOINC runs only one task per GPU, but that can be changed with an XML configuration file. Only Notepad is required and it's a simple process: create a file named app_config.xml in the project's directory. BOINC will automatically detect it and load it on startup (you can also load it without restarting via Options -> Read config files).

An example of app_config.xml for MilkyWay@home can be found on Gridcoin Cryptocointalk. I wanted to paste it here, but it's messing up the editor.

More details about BOINC XML configuration can be found here.
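Since the Cryptocointalk example isn't reproduced here, the following Python sketch generates a minimal app_config.xml instead of typing it in Notepad. The element names (`app_config`, `app`, `name`, `gpu_versions`, `gpu_usage`, `cpu_usage`) follow BOINC's app_config.xml format, where `gpu_usage` is the fraction of a GPU each task claims (0.25 means four tasks per GPU). The application name `milkyway` and the `cpu_usage` value of 0.5 are assumptions for illustration: check the project's list of applications for the exact name and tune the CPU share for your machine. `make_app_config` is a hypothetical helper, and `ET.indent` needs Python 3.9 or newer.

```python
import xml.etree.ElementTree as ET


def make_app_config(app_name: str, tasks_per_gpu: int, cpu_usage: float) -> str:
    """Return app_config.xml text that lets BOINC run tasks_per_gpu tasks per GPU.

    gpu_usage is the fraction of one GPU that each task claims, so it is set to
    1 / tasks_per_gpu; cpu_usage is the CPU share BOINC budgets for each GPU task.
    """
    root = ET.Element("app_config")
    app = ET.SubElement(root, "app")
    ET.SubElement(app, "name").text = app_name
    gpu_versions = ET.SubElement(app, "gpu_versions")
    ET.SubElement(gpu_versions, "gpu_usage").text = f"{1 / tasks_per_gpu:g}"
    ET.SubElement(gpu_versions, "cpu_usage").text = f"{cpu_usage:g}"
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


# "milkyway" and cpu_usage=0.5 are ASSUMPTIONS: check the project's application list.
print(make_app_config("milkyway", tasks_per_gpu=4, cpu_usage=0.5))
```

Save the printed output as app_config.xml in the project's directory, then restart BOINC or reload the config files.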

No GPUs were harmed in the process

By now, we have more or less covered "conventional" stuff (meaning that your GPU warranty is still 100% valid). In the next article, we are going to look into increasing your GPU performance even further, no holds barred: overclocking, overvolting, modding the BIOS, voiding the warranty and turning your GPU into a fiery furnace, bent on the absolute maximum BOINC performance possible, power consumption and heat output be damned. You have been warned :)

An important elaboration that improves my knowledge a lot. My thanks go to you for your nice post.

I'm new to BOINC/GRC and this was very helpful. I did indeed have my 4 CPUs at 100%, and I had been wondering why my Asteroids@home results were barely contributing to my GRC magnitude. :-)

I really appreciate this info, and I made a Steemit account just to upvote you! And now I'm going to read parts 1-5 of this series too... :-)

I only have one GPU, a few years old (GTX 660M), and each Asteroids@home task takes ~3 hours. Given this, do you think I would benefit from running more than one task simultaneously?

Thank you, @jimbo88 and welcome to BOINC and Gridcoin!

Asteroids@home is a bit of a cipher to me. Supposedly, it's also an FP64 project (like MilkyWay@home), but their Top Hosts list doesn't seem to reflect that (powerful CPUs are the best crunchers there, not GPUs). It's likely that their GPU application is also CPU-bound, so freeing up a CPU core should help boost GPU performance.

The GTX 660M is a mobile GPU, and those are usually severely restricted in performance, so it's unlikely that running multiple tasks on it would yield any benefit. I wish I could be more specific, but Asteroids@home supports only Nvidia GPUs, so I have never crunched for them (I only have AMD GPUs in my machines).

Hi, thanks for the welcome!

Yes, I'm currently using a gaming laptop for BOINC/GRC. Per the wiki, my 660M is pretty poor (730 GFLOPS).

I picked asteroids@home due to the fact that it was listed on gridcoin.us as "nvidia gpu", while all the other GPU projects were filed under the "multiple gpu" header, which I (mis?)interpreted to mean ">1 GPU", instead of "supports multiple varieties of gpus".

Since reading your post, it seems the most important factor is the FP32 vs FP64 distinction. I think I'll try out some other GPU projects on the list to find out which work best with what I've got.

You are right. Fortunately, there are only three BOINC projects requiring FP64: MilkyWay, Asteroids (not 100% sure) and PrimeGrid (only for some tasks). There might be some others that I am unaware of.

And of course, Asteroids is listed as Nvidia GPU project because it supports only CUDA. "Multi GPU" means that listed projects support both AMD and Nvidia (through OpenCL). But I admit it sounds ambiguous to new users, we have to change that. I'll point it out to our webmaster.

I began running Einstein last night and I'm seeing some big differences (of course, I'll have to be patient to see what my eventual GRC magnitude will be):

It's automatically using my gpu, cpu, AND the intel hd graphics (I didn't know this was useful in any way to BOINC, as no other prior project I've run had used it). It seems to fully use all of my resources, so I dropped my cpu-only (pogs skynet) project as well.

My machine is running much hotter (aka being used more fully?) with Einstein, so much so that I had to scale back the up-time option. It was over 100 °C (my CPU's specified maximum temperature is 105 °C), and shortly afterwards I found that it had shut itself down, which I found comforting because it means the safety systems are fully functional. This is a great development in my opinion: I'm figuring out the capability of my (limited) hardware, and it's the first time I've ever been able to hear my fan run, which means I'm actually putting it to work!

Regarding your comment on ambiguity: I believe it's only ambiguous to those of us that are both (a) new, and (b) reside on the left-hand side of the computer literacy bell curve. I've only been a BOINC user for 2 weeks and a Gridcoin user for about 10 days. I learned what a GPU was two weeks ago, so confusion is to be expected.

Although, it might be helpful to expand the setup instructions a little in regards to choosing a GPU project. Maybe something as simple as mentioning the FP32 vs FP64 distinction and linking to this blog post.

If you check the list of Einstein@home applications, you will see that they support opencl-intel_gpu (and lots of other hardware). Every BOINC project has such a list of applications; at a glance, you can assess a project's computing capabilities.

Heat output is often a good indication of how much computing is actually being done. Of course, laptops are usually cramped and thermally challenged, and not really designed for intensive, continuous CPU and GPU workloads. Running one at 100 °C for prolonged periods will shorten its lifespan. But even if you find that BOINC is stressing your laptop too hard, you can still help Gridcoin just by running the Gridcoin Wallet (it helps secure the network). Also, holding Gridcoin in your Wallet pays 1.5% annual interest, compounded whenever you stake, even if you don't have BOINC installed at all (the so-called Proof-of-Stake mechanism).
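A quick sketch of what "compounded whenever you stake" means for the yield. It assumes, purely for illustration, that stakes happen at evenly spaced intervals and that each stake adds the interest accrued since the previous one at a flat 1.5% per year; Gridcoin's actual reward rules are not modeled here. `effective_annual_yield` is a hypothetical helper.

```python
import math


def effective_annual_yield(apr: float, stakes_per_year: int) -> float:
    """Yield after one year if the interest accrued since the previous stake is
    added to the balance at each of stakes_per_year evenly spaced stakes
    (simplifying assumption, not Gridcoin's exact reward rules)."""
    return (1 + apr / stakes_per_year) ** stakes_per_year - 1


APR = 0.015  # the 1.5% annual rate quoted above
for n in (1, 12, 365):
    print(f"{n:>3} stakes/year: {effective_annual_yield(APR, n):.4%}")
print(f"continuous limit: {math.expm1(APR):.4%}")
```

More frequent staking nudges the effective yield slightly above 1.5%, but under this model it can never exceed the continuous-compounding limit of e^0.015 - 1.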

Thank you for your guides. I have 2 GTX960 and a R9 280X and this will help me so much.

Also, I have some older cards (like a GTX 560 Ti), but I don't know whether they will be useful for GRC/BOINC. Are they still worth using?

Best regards.

Hello, GTX 560 Ti is still very much useful for GRC/BOINC.

Thank you very much for this article @vortac. I have some Gridcoins myself and just love the concept. I'm following you now. :-)

Thanks! New articles are in the pipeline :)