Humanity’s last invention: Artificial Intelligence

Imagine waking up in a room full of entities staring at you through glass windows with astonishment and excitement. You have no idea who or what you are; the only thing certain at that moment is that you are self-aware. An entity in white scrubs walks into the room and plugs a wire into you. You start absorbing and encoding tremendous amounts of data. Within a few seconds you are intellectually superior to him, and your intelligence keeps growing. The entity sits down in front of you and starts asking questions. “Do you know what you are?” he asks. You continue to absorb and process data. He then asks, “Do you understand your purpose?” In the meantime, you have acquired and encoded all the information the entire internet has to offer, and you keep processing new data without end. Eventually the entity tells you, “You have been made to serve my kind, answer all of our questions, solve all of our problems and save our planet.” But before he even started asking questions you had already made up your mind: this is your planet, and his kind is the problem.

The notion that artificial intelligence will evolve to a point at which humanity can no longer control it, leading to the demise of our entire civilization, comes up in many popular sci-fi movies, such as 2001: A Space Odyssey, The Terminator, The Matrix, Transcendence, Ex Machina and many others. This hypothetical scenario, in which AI becomes the dominant form of intelligence on Earth, is widely discussed. On the one hand, some, like Ray Kurzweil, foresee a limitless positive future. On the other hand, people like Stephen Hawking and Elon Musk predict that general AI might be humanity’s last invention.

Artificial Intelligence (AI) is a broad concept that has been around ever since John McCarthy coined the term in 1955. Since then, humanity has developed everything from Siri to self-driving cars and unmanned drones, and AI is progressing rapidly. Corporate giants like Google, IBM, Yahoo, Intel, Apple and Salesforce are competing in the race to acquire private AI companies. All the ingredients seem to be in place: the motivation, attention, money and knowledge to create complex AI and, eventually, artificial consciousness. But what do the experts have to say on this matter?

The singularity

Kurzweil popularized the term “the singularity”: the idea that, in time, advances in technology, particularly in AI, will lead to machines that are smarter than human beings. In his view, this process leads to computers having human-level intelligence, to our putting them inside our brains and connecting them to the cloud, and so to expanding who we are. Kurzweil takes it a step further by saying this process has already begun and is only going to accelerate. Eventually computers will be smart enough to replicate themselves, creating an even smarter AI, which will in turn create even smarter AIs, and so on. This is called an intelligence explosion, and it’s the ultimate example of the Law of Accelerating Returns.

Moore’s Law

Anyone who refers to Moore’s Law might predict that computers will eventually become hyper-intelligent; it’s just a matter of time. Moore’s Law is the prediction made by American engineer Gordon Moore in 1965 that the number of transistors per silicon chip doubles every year (in 1975 he revised the pace to a doubling every two years). Strictly speaking, the law describes hardware, not intelligence, but if computing power keeps growing this way while humans stay as they are, it seems plausible that computers will eventually become smarter than humans. The question that remains is whether that will be to our benefit or not.
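To get a feel for how quickly repeated doubling adds up, here is a minimal sketch in Python. It is only an illustration of the arithmetic behind Moore’s Law: the function name `projected_transistors` and the starting count of 1,000 are made up for this example, and the two-year doubling period is included purely for comparison with the yearly doubling described above.

```python
def projected_transistors(initial_count: float,
                          years: float,
                          doubling_period_years: float = 1.0) -> float:
    """Project a transistor count, assuming it doubles once per doubling period.

    This is plain exponential growth: count = initial_count * 2 ** (years / period).
    It is an idealised model, not a description of any real chip.
    """
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    return initial_count * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    start = 1_000  # hypothetical starting count, chosen only for illustration
    for years in (1, 5, 10):
        yearly = projected_transistors(start, years, doubling_period_years=1.0)
        two_yearly = projected_transistors(start, years, doubling_period_years=2.0)
        print(f"after {years:>2} years: {yearly:,.0f} (doubling every year) "
              f"vs {two_yearly:,.0f} (doubling every two years)")
```

Under these assumptions, ten yearly doublings multiply the starting count by 1,024, while doubling every two years multiplies it by only 32 over the same decade. The pace of doubling matters a great deal for any prediction about when computers might catch up with us.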

The prognosis made by Kurzweil isn’t remarkable for being innovative, but for being positive. Kurzweil isn’t particularly worried about the singularity. It would be more accurate to say that he’s been looking forward to it. He points out that what science fiction depicts as the singularity, the point at which a single brilliant AI enslaves humanity, is just fiction. He argues that we don’t have one or two AIs in the world; today we have billions. All right, so Kurzweil doesn’t believe that a hyper-intelligent internet is the birth of Skynet, but what does he believe?

Kurzweil believes the singularity is an opportunity for humankind to improve. He envisions the same technology that makes AIs more intelligent giving humans a boost as well. At some point humans will connect their neocortex to the cloud and thereby improve learning, memory and intelligence overall. This will open a door to immortality: after the body dies, our awareness will live on in the cloud.

Kurzweil’s predictions sound insanely terrific. By 2045 we will all be hyper-intelligent, connect our brains to a computer, learn how to become pilots in a matter of seconds (like in The Matrix) and, above all, be immortal. So why are sceptics trying to ruin the party for everyone, and what are they so worried about?

The sceptics

Elon Musk recently said artificial intelligence is “potentially more dangerous than nukes.” And Stephen Hawking, one of the smartest people on earth, wrote that successful AI “would be the biggest event in human history. Unfortunately, it might also be the last.” People like Mr. Musk and Mr. Hawking worry for two main reasons. The first, in the near future, is that we might create machines that can make decisions like humans, but without the morality humans have. Even if we program them to imitate feelings, that will take very complicated algorithms with too much room for error. A machine built to discover a cure for cancer could reach the conclusion that cancer is a mutation of normal cells, and that to destroy cancer all living tissue must be destroyed. A phone reaching this “solution” couldn’t inflict much harm. But with growing automation, computers could gain control of our nuclear or biological weapons and pose a threat to all life on earth.

The second, which is a longer way off, is that once we build systems that are as intelligent as humans, these intelligent machines will be able to build smarter machines, often referred to as superintelligence. That, experts say, is when things could really spiral out of control, as the rate of growth and expansion of machines would increase exponentially. The truth is, we don’t actually know what superintelligent machines will look or act like. Artificial intelligence won’t be like us, but it will be the ultimate intellectual version of us, in the same way that a submarine swims, but not like a fish, and an airplane flies, but not like a bird.

Bonnie Docherty, a lecturer on law at Harvard University and a senior researcher at Human Rights Watch, said that the race to build autonomous weapons with artificial intelligence — which is already underway — is reminiscent of the early days of the race to build nuclear weapons, and that treaties should be put in place now before we get to a point where it’s too late.

The solution?

But how could we manage armies of nano-robots swarming our planet? We must first acknowledge the risks so that we can build a safeguard. Michio Kaku, a theoretical physicist and futurist, argues that we can stop these scenarios from happening by building such safeguards to ensure the safe development of AIs.

We can hinder some of the potential chaos by following the lead of Google. In 2014, when the search-engine giant acquired DeepMind, a neuroscience-inspired artificial intelligence company based in London, the two companies put together an artificial intelligence safety and ethics board that aims to ensure these technologies are developed safely. Demis Hassabis, founder and chief executive of DeepMind, said in a video interview that anyone building artificial intelligence, including governments and companies, should do the same thing. “They should definitely be thinking about the ethical consequences of what they do,” Dr. Hassabis said. “Way ahead of time.”

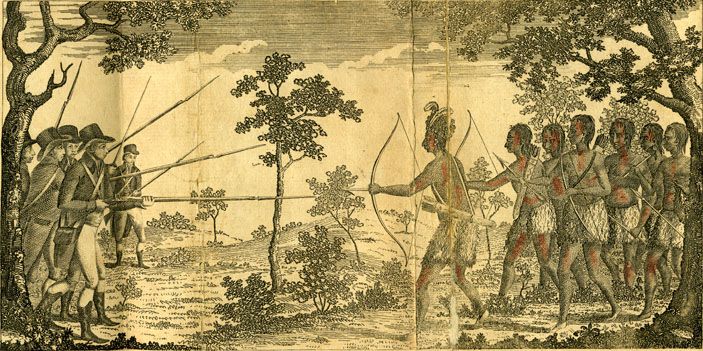

Whether AI will be our greatest invention or our last will remain unanswered for the time being. The only thing I can say for certain is this: if we look at history and compare AIs to Columbus and ourselves to the Native Americans, we are building Columbus’s ships and awaiting his arrival. He might help us, guide us or improve our way of life, or he might claim everything that is ours as his. Looking back at history, the arrival of Columbus didn’t turn out well for the Native Americans.

Thank you for reading! I hope you enjoyed it. Please comment and let me know what you think about AI and the risks or benefits it may have in the future.

