Consequentialism and big data, the need for intelligent machines to assist with moral decision making

Machine intelligence is necessary to scale morality

By Myworkforwiki (Own work) [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons

When we discuss morality, it is often from the perspective of intuitive morality. This post is not about intuition or emotion, because in my opinion these can create bias and tilt morality in favor of personal interests. So this discussion will place little emphasis on intuition, even though I expect every reader to have their own moral intuitions.

Consequentialism is a consequence-based method of determining whether an action is correct or incorrect. Learning from consequences is often a trial-and-error approach. This way of learning right from wrong is often the only viable one, because there is not always a mentor, a teacher, a religious guide, or a script for what to do in a particular situation. Entities learn what to do and what not to do by looking at what happened to the people who did or didn't take a particular action.
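As a rough sketch of learning from what happened to others, one could tally how often an action was followed by a good outcome among the people who took it versus those who did not. The observations, labels, and the function name `good_outcome_rates` below are made up for illustration, and a raw frequency like this ignores confounding factors.

```python
from collections import defaultdict

# Hypothetical observations: (what the person did, whether the outcome was good).
observations = [
    ("did", True), ("did", False), ("did", False), ("did", False),
    ("did_not", True), ("did_not", True), ("did_not", False),
]

def good_outcome_rates(obs):
    """Return the fraction of good outcomes observed for each action."""
    counts = defaultdict(lambda: [0, 0])  # action -> [good outcomes, total]
    for action, good in obs:
        counts[action][0] += int(good)
        counts[action][1] += 1
    return {action: good / total for action, (good, total) in counts.items()}

rates = good_outcome_rates(observations)
# The action with the higher observed rate is the one this data favors.
better_choice = max(rates, key=rates.get)
```

With more observations the estimates become more reliable, which is where the need for large amounts of data comes from.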

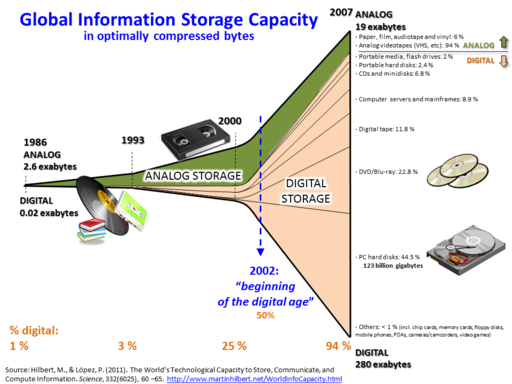

Consequentialism requires a lot of analysis of data. Big data analysis is a method of scaling consequentialism up and data analytics companies are known to assist in this process. The problem is the common Joe Sixpack has no method of even attempting to improve their decision making along these lines due to barriers to entry (cost of hiring a data analytics company) or due to inability to process the data if they managed to collect it themselves. The smart types (I will include myself) do constantly analyze the data we come across to try to continuously improve our decision making capability (morality) but we find ourselves limited to what data we can process using very limited tools such as our brains, calculators, etc.

The day trading example

A good example for understanding how consequentialism works is to take a look at the typical day trader in crypto. If we think of investment decisions as we think of moral decisions, and we label a bad investment decision as immoral in practice and a good investment decision as moral in practice, then we can know based on return on our investment whether or not any particular decision was moral. Most day traders lose money because they invest with their brains while the quantitative traders know that the manipulation of psychology and of these individual brains allows them to utilize bots to take advantage of certain emotions such as fear, mania, etc. As a result, quantitative traders simply turn investment decisions into algorithms and remove their intuition entirely from the decision making process.

Quantitative trading requires lots of data, lots of computation resources to analyze the data, and high quality sources of data. A source of data includes public sentiment about a particular stock. If we were to put this in a moral context this would be public sentiment about a particular topic or choice (this is moral sentiment of the crowd). The sentiment often is right, but not always, as the crowd is influenced by emotions. Algorithms merely take the current sentiment of the crowd as just another input into a calculation.

The only way to scale up decision making in investing is to use artificial intelligence. We know this because in practice the entities which have the highest quality information and the best AI are winning the ROI competition. Why is it when the topic is morality that so many cannot see also that the same processes are at work? In consequentialist morality it is obvious that the same computers which can be used to help make an investment decision can help make a moral decision, merely by tweaking the algorithms. Big data represents the mass of data too big and too much for human brains to process, but this is the data necessary to be processed to determine the most moral course of action or the highest quality decision.

A consequentialist can view morality as a risk management strategy. Better morality in the world and in decision making means lower risks.

References

- https://en.wikipedia.org/wiki/Quantitative_analyst

- https://en.wikipedia.org/wiki/Big_data

- https://steemit.com/ethics/@dana-edwards/is-the-moral-high-ground-merely-a-synonym-for-moral-pyramid

- https://steemit.com/politics/@dana-edwards/total-transparancy-benefits-the-top-of-the-pyramid-and-may-not-actually-work-as-intended-there-are-costs

Consequentialism requires a consequence rating system. What do you propose?

There are many ways to rank/sort consequences. The method I use for myself is based on the consequences to my interests. If I include for instance my own fate as being something I'm interested in and the fate of others whom I care about, then the consequences are ranked in relation to how they affect me and whatever/whomever I care for.

I can for example determine that breaking a certain law is very very stupid because it could result in a permanently damaged reputation (certain people will never see me the same again), or it could result in me losing my freedoms (prison) or my resources (loss of wealth). I can also determine that certain decisions, whether legal or illegal, are very very smart, because the consequences to my reputation are minimal damage or even positive gain, the consequences to my freedom are that I get more of it, the consequences to my wealth are that I increase it, and so on.

There are of course more things that I care about than just reputation, money, freedom, but these are common examples almost anyone can relate to. In the end we all determine what is smart or stupid based on cost vs benefit analysis which is something an AI is perfectly suited to handle. Even for investing in stocks we rely on algorithms which basically do cost/benefit analysis, ranking different stocks by a point or grading system.
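A minimal sketch of that kind of point system, assuming each option's effect on reputation, freedom, and wealth can be estimated on a scale from -1.0 to +1.0. The weights, the options, and the numbers are all hypothetical; a real system would have to learn them from the individual.

```python
from dataclasses import dataclass

# Hypothetical weights: how much this individual cares about each interest.
WEIGHTS = {"reputation": 0.5, "freedom": 0.3, "wealth": 0.2}

@dataclass
class Option:
    name: str
    # Estimated effect on each interest, from -1.0 (severe harm) to +1.0 (large gain).
    effects: dict

def score(option, weights=WEIGHTS):
    """Weighted sum of estimated effects; interests not listed count as 0."""
    return sum(w * option.effects.get(interest, 0.0) for interest, w in weights.items())

def rank(options, weights=WEIGHTS):
    """Sort options from most to least favorable for this individual."""
    return sorted(options, key=lambda o: score(o, weights), reverse=True)

options = [
    Option("break the law", {"reputation": -0.8, "freedom": -0.9, "wealth": 0.4}),
    Option("do nothing", {}),
    Option("legal side project", {"reputation": 0.2, "freedom": 0.1, "wealth": 0.3}),
]
ranked = [o.name for o in rank(options)]
```

Changing the weights changes the ranking, which is the point: the same machinery serves different people with different values.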

What I propose as a solution is to let each consequentialist individual tell the machines their own values, interests, etc. Then allow the AI to learn about them by voluntarily giving as much data as possible to the AI. This would allow the AI to eventually gain insights into what makes an individual tick, and allow that AI to best help that individual become a more moral person based on their own particular values and interests.

Basically I believe that to the AI we should be radically transparent. This is because I view the AI as an extension of the mind of the individual without a will of its own. It's an extended mind or limb, and all communication between the individual and it, in my opinion, should remain confidential. The function of this AI is to help the individual think better, and make higher quality decisions.

Damn good information. I'm one step closer to being a better day trader! Cheers and Followed

@dana-edwards I love the way you have compared the morality and decision making based on that to the stock investment decisions. You are right - If we make our box of moral behavior, framed by a set of morality rules, then we take the judgement and emotions out of our behavior to a large extent. Just like the automated stock traders. Ergo - we end up managing ourselves fairly well though not perfectly

Kudos on your thought process. Upvoted Full. Followed you.

I usually blog about wildlife awareness based on my wildlife photos and my thoughts on life linked to those photos. Your blog gives me a whole different direction for 'food for thought'.

Regards,

@vm2904

The relationship between machine intelligence and morality is a nice concept and needs further research!

People view morality as an inherently human attribute. Changing this perception will be one tough task going ahead in a future where humanoids with "artificial" intelligence will be among us.

The catch-22 is that humans aren't cognitively capable of being moral. The morality humans put forth as the standard to follow is impossible for any human to live up to. So in essence, do we want to accept that we are all evil, and all monsters, or do we want to try to be better?

If we just create transparency without an actual capability of being moral then what good is that? We will all get to look like ignorant monsters sooner or later. What exactly will improve?

I'm not sure that completely autonomous AI is even a possibility, although I'm aware that many are attempting to prove me wrong and are doing a pretty good job of it so far. But in relation to making moral decisions from big data, I would think that the process of making these decisions would be somewhat determined by the information provided in the beginning, i.e. the programmers' own version of morality. If AI really did become self aware and capable of determining its own morality, I would be pretty worried.

As for being able to guide us in our own moral decisions by weighing the positive and negative consequences, I think it's quite sad that we've arrived at a point where we need this to be so at all. It must mean we have lost our own ability to communicate with our hearts and souls.

I take your point also that the amount of data required for making any decision with all of the information is too much for any of our brains to cope with but I would add that perhaps we are over-complicating something that was intended to be simple. Do we really need to know everything about everything and will that lead to a happier, healthier, freer society???

Really interesting post mate. Lots to think about.

Hope your day is going well. :)

You are correct that AI has to be trained by the crowd. You are incorrect in thinking "the programmers" have this duty. We are all the trainers, as all available sources can be provided as data sources. The knowledge base from which AI can make sense of morality is not provided in centralized fashion by programmers in an ivory tower but ideally in a decentralized collaborative fashion by anyone over the Internet. Think about how Wikipedia for example has become a repository for human knowledge. The same in my opinion can be done for morality because knowledge can be contributed by global participants.

This is irrelevant science fiction. It is equal to asking when Jesus will return or if God exists. These may happen or may be true but when dealing with morality we have to stick to science in my opinion (avoiding religion) as this is the only way to seriously tackle the problem. An AI which is self aware is like discovering aliens or God exists, but since we have no way to prove self awareness or measure it, it is equal to asking if atoms in a rock are self aware or if the universe itself is self aware, because the rock and the universe also do computation.

What good is transparency if humans get to make just as many mistakes as before? If we are going transparent then we have to reduce our error rate else we immediately look bad. This transparency will create a massive amount of demand for these sorts of AI 'moral calculators' which I speak of. Would you rather have your life destroyed by coordinated shunning if you mess up, or would you rather consult with an AI before doing anything so that you minimize your risk of that as much as possible?

It's not that I'm over complicating but the technology is making life over complicated. The more transparent society becomes the more complicated even the smallest decisions become because the errors are under a magnifying glass. Just because things go more open and transparent it does not mean your peers will not expect perfect decisions from you and will not seek to punish you for the slightest little mistakes.

Consider this, if we are trending toward radical transparency where no one can have privacy or secrets, where everyone knows anything about you, and must make decisions based on this knowledge of you, well don't you want these people to have the maximum ability to make quality decisions off this information? Would you prefer we all know everything about you but rely only on our ignorant biased flawed brains to rank and judge your decisions?

And there is my point: I don't think it is fair to you or to anyone if we are judged by ignorance. I do not think humans are capable of being rational, or fair, or moral, whether these humans are in the position of judge or in the position of being judged. I think if the crowd is going to have unlimited transparency and openness, then it also needs to scale up its decision making and moral capacity along with this. If this does not happen then it will be a horrific dystopia in my opinion.

I understand this of course and how it is achieved but that suggests that the AI would have autonomous responsibility for determining what is moral and what isn't from the information it is provided with by way of the internet and all of the communication that takes place on it but in my personal opinion, I would say that a lot of what society celebrates on social media and the internet in general is less than moral, to me, so the information would be skewed. Steemit as an example on the surface is a place of morality and decentralised thought but in reality it is no such thing and any information gained by AI from watching the behaviour and reward for that behaviour would surely lead the AI to have a twisted view of what was acceptable behaviour.

I get this as well, but forgive me for saying that science and scientists do not have the best track record for making moral decisions. The Manhattan Project and CERN are two examples I could cite where scientists threw caution to the wind and made a decision which could have adversely affected the entire population. If there is the possibility of AI becoming self aware then we should give it considerable thought and, I would hope, decide on a different, less dangerous approach, rather than allowing my own desire to find out to override what for me is a natural instinct to err on the side of caution, especially when the potential dangers are so great.

I have to be honest and say neither but if forced to choose I would have to go for the first option. The second is just too creepy for me. I would rather be guided by my own sense of morality even though I appreciate that this has been confused throughout my life by social influences.

I really appreciate your time mate and hope you can appreciate that I am very ignorant to a lot of factors but I'd like to be more aware myself and develop my own moral compass more so I will definitely be keeping an eye out for more of your posts and am sure I will be encouraged by them to consider things in a different light, which I always enjoy. :)

Cheers @dana-edwards. Have a great day mate.

Interesting conceptual contemplations.

Personally, I’m still somewhat sceptical about such prospects, given the subjective nature of “morality.” For instance, consider some of the things some extremist Muslims would deem “immoral.” Or even if we were to look at western cultures’ belief systems, we could surely find plenty of examples of ideas of right/wrong that are merely based in outdated world views which do not account for expanded consciousness and possibilities of embracing everything as having some value in a particular place and time. The concern I’d have with A.I. becoming the judge of morality is ensuring the integrity of the data it’s drawing from - in the sense of being able to filter out / lower the weight of information produced from cognitive bias and maintain objectivity, uninfluenced by flawed logic.

Though then again, depending upon just how intelligently these systems could be designed...

Morality is subjective. Consequences on the other hand are not so subjective. Statistics (probability) and consequences are measurable. This allows you to determine the risk spectrum.

That means risk to your reputation, risk against your interests, etc. Morality being subjective simply means you have to take moral sentiment into account which also is a matter of analyzing available data.

How do companies for example determine how to be perceived as moral? First they will study the location they operate in to determine moral sentiment in that particular country. So in China they will rely on their understanding of Chinese moral sentiment and most importantly the compliance requirements with the Chinese government. In Europe they have to comply with the European governments and must understand the moral sentiments of Europe. This is a matter of knowledge management and data analytics. This means that yes, you can automate quite a bit of it, and you can put AI to the task of processing the information in theory because really we are just dealing with number crunching here.

AI gives us the benefit of having perfect logic. So you can for example put in the Sharia law and Islamic morality. The AI would just see them as local rules, rules which apply when dealing with Muslims, or in Muslim countries, but the subjective part is how are these rules interpreted? For this we would have clerics, scholars, who have a similar role to our Supreme Court system. The problem here is public sentiment might disagree with the official ruling.

Which do you choose? Well, you can look at the risk statistics. If there is a dictatorship or a very sophisticated surveillance market then it's likely you will have to focus on compliance in most cases. Specifically, you would have to weigh the risk to your business of compliance vs non compliance in multiple different scenarios and set policies in advance for when you'll comply or not comply.

I admit this is going to be difficult to automate but it's not impossible for AI to help with some of this. AI can for example deduce certain insights that you might never have considered by reviewing the data yourself. On the other hand your risk appetite might be greater than most people's, and so you would ultimately have to decide whether you'll risk non compliance.

All of these decisions require calculations, number crunching, and AI helps with that part.
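To make the number crunching concrete, here is a small sketch of weighing compliance against non compliance across scenarios by expected cost. The scenario names, probabilities, and costs are invented for illustration, and comparing expected costs is just one simple way to set such a policy, not the only one.

```python
# Each scenario: (probability, cost if you comply, cost if you do not comply).
# All numbers are made up for illustration; the probabilities sum to 1.
SCENARIOS = {
    "rule strictly enforced": (0.6, 10.0, 100.0),
    "rule loosely enforced": (0.3, 10.0, 20.0),
    "public backlash against the rule": (0.1, 50.0, 5.0),
}

def expected_cost(scenarios, comply):
    """Probability-weighted cost of complying (or not) across all scenarios."""
    index = 1 if comply else 2
    return sum(s[0] * s[index] for s in scenarios.values())

def choose_policy(scenarios):
    """Pick whichever policy has the lower expected cost."""
    if expected_cost(scenarios, comply=True) <= expected_cost(scenarios, comply=False):
        return "comply"
    return "do not comply"

cost_comply = expected_cost(SCENARIOS, comply=True)
cost_defy = expected_cost(SCENARIOS, comply=False)
policy = choose_policy(SCENARIOS)
```

Someone with a larger risk appetite could still choose non compliance here, but they would at least see the size of the gap they are betting against.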

You mention that sources of information matter. On this I agree, but it's a similar problem to what DPOS faces when relying on data feeds. Finding a trusted source of information is the challenge. All businesses and government agencies face the same problem. All people actually face the problem of which sources of information to trust. Only high quality information should be used but it's hard to filter the high quality information from the noise.

I don't think AI is going to solve that problem. I think for that we'll be relying on the crowd. AI can only really crunch numbers and do logic. AI isn't able to find meaning, or determine value.