Big Data 6/6

Welcome to the last entry in the Big Data series. I hope you will subscribe, interact, and resteem. Thanks, @javf1016 :)

First Entry: https://steemit.com/business/@javf1016/big-data-1-10

Second Entry: https://steemit.com/education/@javf1016/big-data-2-10

Third Entry: https://steemit.com/education/@javf1016/big-data-3-10

Fourth Entry: https://steemit.com/education/@javf1016/big-data-4-10

Fifth Entry: https://steemit.com/education/@javf1016/big-data-5-6

IV. Encrypted Information

In an organization, Big Data is often stored on external servers, for example in the cloud, where it is both kept and accessed, which makes security a challenge. One solution is to access and interpret the data without having to decrypt it. The data then stays encrypted, and its security depends on the encryption method used.

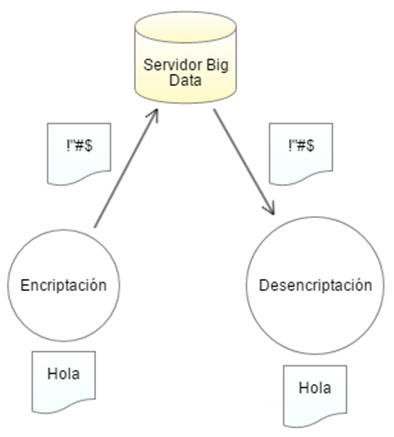

This is the idea behind fully homomorphic encryption (FHE): the data is encrypted before it is transferred to the servers, and the servers run interpreters that operate on the data without decrypting it. At the interpretation stage, a result can be deciphered only by a function that has been configured with the key. This sounds very attractive, but it has a disadvantage that makes the option almost entirely unviable: the number of steps needed to protect the data slows operations on it to the point that they become almost unusable in real time (Fig. 10).

Fig. 10. Homomorphic encryption process

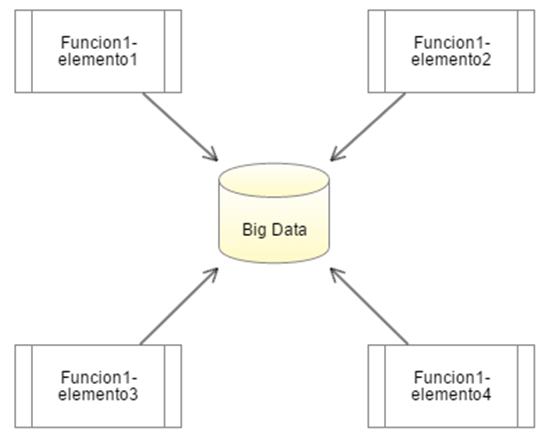
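To make the idea of computing on encrypted data concrete, here is a minimal sketch using a toy Paillier scheme. Note the assumptions: Paillier is only additively homomorphic, so it is a simpler relative of FHE rather than FHE itself, the primes are far too small to be secure, and the function names (`generate_keys`, `encrypt`, `add_encrypted`, `decrypt`) are hypothetical names chosen for this illustration.

```python
import math
import secrets


def generate_keys(p=1789, q=1861):
    """Build a toy Paillier key pair from two small primes (insecure sizes)."""
    n = p * q
    # lambda = lcm(p - 1, q - 1)
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)
    # With generator g = n + 1, mu is the inverse of lambda modulo n.
    # pow(x, -1, n) needs Python 3.8 or newer.
    mu = pow(lam, -1, n)
    return n, (lam, mu)


def encrypt(n, message):
    """Encrypt an integer 0 <= message < n with the public value n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random value in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n2) * pow(r, n, n2)) % n2


def add_encrypted(n, c1, c2):
    """Server-side step: add two plaintexts by multiplying their ciphertexts."""
    return (c1 * c2) % (n * n)


def decrypt(n, private_key, ciphertext):
    """Recover the plaintext; only the holder of the private key can do this."""
    lam, mu = private_key
    x = pow(ciphertext, lam, n * n)
    return ((x - 1) // n * mu) % n


n, private_key = generate_keys()
c1 = encrypt(n, 1200)
c2 = encrypt(n, 345)
encrypted_total = add_encrypted(n, c1, c2)  # computed without any key
print(decrypt(n, private_key, encrypted_total))
```

The server only ever sees `c1`, `c2`, and `encrypted_total`; the sum becomes readable only where the private key is available. Real FHE schemes also support multiplication on ciphertexts, which is where most of the performance cost comes from.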

There is also secure multi-party computation (SMC), in which several computational elements cooperate to carry out a process, each one working on a different region of the Big Data, so that the privacy of the data as a whole is preserved (Fig. 11).

Fig. 11. Secure multi-party computation process: several elements apply function1 to different portions of the data
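One common building block for this kind of computation is additive secret sharing. The sketch below assumes a few hypothetical parties that each hold a private number and want to learn only the total. The modulus and the function names are choices made for this example, and a real deployment would also need secure channels between the parties.

```python
import secrets

MODULUS = 2**61 - 1  # all arithmetic is done modulo this value (example choice)


def split_into_shares(secret, n_parties):
    """Split a value into random shares that add up to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last_share = (secret - sum(shares)) % MODULUS
    return shares + [last_share]


def secure_sum(private_inputs):
    """Compute the sum of all inputs without any party revealing its own."""
    n = len(private_inputs)
    # Party i splits its input and sends share j to party j.
    distributed = [split_into_shares(value, n) for value in private_inputs]
    # Party j adds up the shares it received, one from every party.
    partial_sums = [
        sum(distributed[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # Only the partial sums are published; together they give the real total.
    return sum(partial_sums) % MODULUS


print(secure_sum([120, 75, 310]))
```

Each individual share looks like a random number, so a single party learns nothing about another party's input from the shares it receives.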

V. Textual Analysis

Big Data techniques can support textual analysis in real time and so deliver reliable results quickly. In practice there are challenges. One is the development of scalable algorithms for data processing; in other words, the functions we use, for example in machine learning, must take into account that some of the data will be imperfect. Another is the lack of machine-focused tools that can be customized to the environment in which they will be used. The main future concerns in text analysis are representing information correctly, whether it is structured or unstructured, and continuing to obtain excellent results as the data keeps growing, so that confidence in those results is not lost.
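As a small illustration of a function that expects imperfect data, the sketch below keeps running word counts over a stream of documents and skips entries that are missing or empty instead of failing. It is only an assumption of what such a function could look like; the names `update_counts` and `analyze_stream` and the simple tokenizer are hypothetical.

```python
import re
from collections import Counter

# Very simple tokenizer: lowercase letters, digits, and apostrophes.
TOKEN = re.compile(r"[a-z0-9']+")


def update_counts(counts, document):
    """Fold one document into the running counts; return False if skipped."""
    if not isinstance(document, str) or not document.strip():
        return False  # imperfect record: missing, wrong type, or empty
    counts.update(TOKEN.findall(document.lower()))
    return True


def analyze_stream(documents):
    """Process documents one at a time, so the input can be arbitrarily long."""
    counts = Counter()
    skipped = 0
    for document in documents:
        if not update_counts(counts, document):
            skipped += 1
    return counts, skipped


stream = ["Big data is big", None, "", "Data keeps growing"]
counts, skipped = analyze_stream(stream)
print(counts.most_common(2), skipped)
```

Because each document is processed on arrival and then discarded, the work per document stays constant as the stream grows, and the count of skipped records gives a rough measure of data quality.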

5.1 Translation in Big Data

The development of functions for processing ontologies, together with data mining and its current links to voice recognition and automatic analysis, represents a great opportunity to diversify the fields in which Big Data is used.

The main objective of this branch is to move from translating words and phrases to translating entire documents in bulk, whether the input comes from OCR or from voice recognition. The aim is to reach a point where the analytical use of Big Data plays a central role in globalization and becomes much more effective in fields such as business and education. In terms of security, real-time language support would give us a much more advanced capability for creating functions that respond effectively and promptly regardless of language. It would also make it possible to predict circumstances shaped by clusters of languages, with the option of managing this information from the cloud, in the style of the best search engines we have today.

5.2 Garbage Data

Garbage data can be defined as an accumulation of unverified data, which leads to unwanted or unnecessary records. Organizations may assume that a larger amount of data means better results, but this abundance may only produce meaningless analyses and erroneous predictions. In addition, the volume of data, whether in the cloud or on the organization's own servers, grows indiscriminately and brings high, unnecessary costs.

Applying additional functions at the moment data is inserted is the best way to select information. Moreover, not every field is needed for every learning decision, so filtering the information a second time at the moment of interpretation speeds up learning considerably by focusing it on the necessary data.

All of this gives us the ability to validate whether data is true or false, correct or incorrect, or whether it has been prepared to react to events, creating gaps in information, copies, and so on.
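The two filtering stages described above can be sketched as follows: one check when a record is inserted, and a second selection of fields when the data is interpreted. The record layout, field names, and function names are hypothetical and chosen only for this example.

```python
def is_valid(record, required_fields):
    """A record is valid when every required field is present and non-empty."""
    return all(record.get(field) not in (None, "") for field in required_fields)


def insert_clean(store, seen_keys, record, required_fields, key_field):
    """First filter (insertion time): reject invalid records and copies."""
    if not is_valid(record, required_fields):
        return False
    key = record[key_field]
    if key in seen_keys:
        return False  # duplicate copy of a record we already hold
    seen_keys.add(key)
    store.append(record)
    return True


def select_fields(store, fields):
    """Second filter (interpretation time): keep only the fields needed."""
    return [{f: record[f] for f in fields if f in record} for record in store]


store, seen_keys = [], set()
incoming = [
    {"id": 1, "country": "CO", "amount": 40, "note": "first order"},
    {"id": 2, "country": "", "amount": 15},                     # empty field
    {"id": 1, "country": "CO", "amount": 40, "note": "copy"},    # duplicate
    {"id": 3, "country": "VE", "amount": 22},
]
for record in incoming:
    insert_clean(store, seen_keys, record, ["id", "country", "amount"], "id")

print(select_fields(store, ["country", "amount"]))
```

Only two of the four incoming records are stored, and the learning step sees just the two fields it needs, which keeps both storage and processing focused on useful data.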

VI. CONCLUSIONS

Computer security for Big Data, focused on protecting information and its integrity, is a powerful tool for preventing intrusions such as information theft and for protecting the organization in which it is implemented. Although most security techniques are well known, they are not fully implemented because in some places security is not a priority, and that must change. Today, simply by using a web or mobile application, our information has, hypothetically speaking, already been captured by more than 10 databases. What happens if that information is used elsewhere, and who can assure us that it will not be? This happens every day, and it should concern not only us but also the organizations that collect this information, whether for the sake of their credibility or simply to improve and become more competitive.

Proper management of Big Data not only saves hardware costs by collecting data more selectively, it also improves our actual response time to any circumstance, which improves how society sees us as an organization. Thanks to this data and to good functions, we can analyze and respond to our customers more accurately, regardless of their location or the cluster of society they belong to, and we can reach a greater number of customers and satisfy their everyday needs.
