Reverse Engineering a drone from Amazon to make it programmable

Introduction

Welcome to my first entry. As I would consider myself a drone enthusiast, I was searching online for a drone building set. I wanted a drone that I could program to do stuff based upon its video feed, for example by feeding that into a Convolutional Neural Network.

So as I was browsing I saw that these sets were usually pretty expensive and they never came with any instructions to build them. So I thought it might be fun to use a cheap toy drone that is controlled via an app.

So I searched through Amazon and found the JJRC Blue Crab. It met all the requirements and I ordered it for 40€. It came a couple of days later and I was excited to start my project. My goal was to control the drone and watch the video feed from my computer.

Throughout this project I used an up-to-date Kali Linux 2017.2 machine and Python 3.6.3 for the scripts.

So let's jump right in.

Reverse Engineering from Packet Data

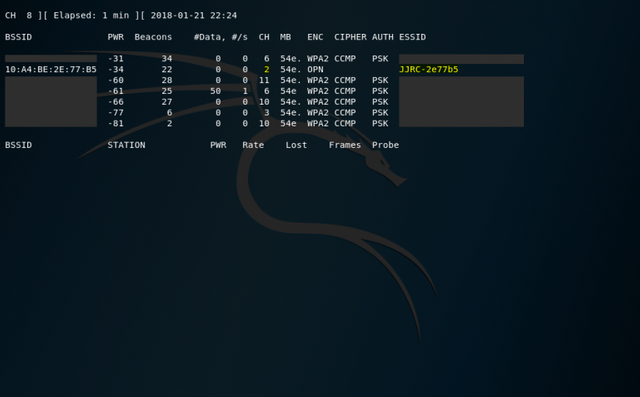

So I knew the drone was creating a WiFi network called JJRC-Something. In order to capture the traffic the app was sending to the drone, I fired up Kali Linux and used aircrack-ng to start a scan.

First stop the processes that interfere with monitor mode, then put the WiFi interface into monitor mode and start scanning.

sudo airmon-ng check kill

sudo airmon-ng start wlan0

sudo airodump-ng wlan0mon

As you can see, airodump-ng picked up the WiFi of the drone and also shows that it is running on channel 2. Next I recorded some packets.

sudo airodump-ng -w JJRC_out -c 2 --bssid 10:A4:BE:2E:77:B5 wlan0mon

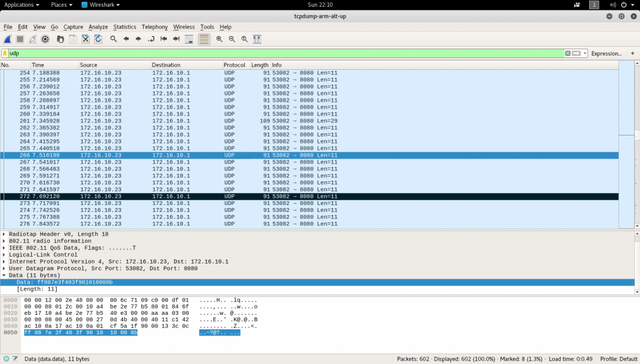

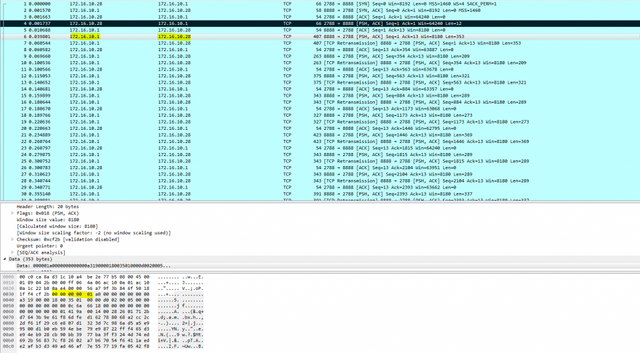

While recording I simply used all the functions the drone had and flew around so I could get a full picture of what packets would be sent. After I was done I opened the file in Wireshark.

After some inspection I concluded that the video stream is sent over a TCP connection on port 8888 and that controls are sent via UDP to port 8080 on the drone. I also noticed that a simple string of hex bytes was used to control every part of the drone. With the help of live packet inspection using

tcpdump -i 2 udp dst port 8080 -x -vv

I was able to decipher most of it. For better understanding, here is a link that explains the vocabulary used in the explanation.

"Arm" means the start of the blades, "disarm" the initiation of the landing sequence and "stop" the momentary stop of the blades.

My notes are on my personal blog!

On to the testing!

Flying the drone with the Keyboard

To test my observations I wrote a Python script that reads my keyboard input and replays prerecorded packets from my PC after connecting to the drone's WiFi. You can find the code on my blog!
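
The replay idea can be sketched roughly like this. It is a minimal sketch and not the script from the blog: the IP address `192.168.0.1` is a placeholder I made up, the payloads in `RECORDED` are dummy bytes, and the names `replay` and `open_control_socket` are only for illustration. Only port 8080 comes from the capture.

```python
import socket

# Assumption: placeholder IP. Port 8080 is the UDP control port seen in the capture.
DRONE_ADDR = ("192.168.0.1", 8080)

# Key -> recorded UDP payload. These hex strings are dummies for illustration only;
# replace them with payloads copied from your own capture.
RECORDED = {
    "w": bytes.fromhex("deadbeef"),
    "s": bytes.fromhex("cafebabe"),
}


def open_control_socket():
    """Create the UDP socket used to send control packets."""
    return socket.socket(socket.AF_INET, socket.SOCK_DGRAM)


def replay(sock, key, addr=DRONE_ADDR, recorded=RECORDED):
    """Send the recorded payload for `key`. Return True if a packet was sent."""
    payload = recorded.get(key)
    if payload is None:
        return False  # no packet recorded for this key
    sock.sendto(payload, addr)
    return True
```

A keyboard loop would then call `replay(sock, key)` for every key press and ignore keys that have no recorded packet.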

As I was not yet able to write an algorithm that determines the last byte of the UDP control packets for each setting, I could only replay recorded packets. So I assigned Q, Y, W, S, A, D, E, R, 1 and 2 as control keys. Each key replays a packet that steers the drone in the key's assigned direction. The next task was to get the camera streaming live on my PC.
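
One way to attack the unknown last byte is to test a few common checksum guesses against the recorded packets. The sketch below is only a starting point under that assumption: I do not know whether the drone uses XOR, a byte sum, or something else entirely, and the candidate list and function names are mine.

```python
from functools import reduce


def xor_all(body):
    """XOR of all bytes in `body`."""
    return reduce(lambda a, b: a ^ b, body, 0)


def sum_mod_256(body):
    """Sum of all bytes in `body`, truncated to one byte."""
    return sum(body) & 0xFF


# Hypothetical candidates. Add more guesses here as needed.
CANDIDATES = {"xor": xor_all, "sum mod 256": sum_mod_256}


def matching_checksums(packets):
    """Return the names of candidates that reproduce the last byte of every packet."""
    return [
        name
        for name, fn in CANDIDATES.items()
        if packets and all(len(p) > 1 and fn(p[:-1]) == p[-1] for p in packets)
    ]
```

Feeding it the payloads from the capture as `bytes` objects returns the names of all guesses that hold for every packet. An empty list means none of them fit.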

Streaming the video feed of the camera

As I already mentioned in the reverse engineering part, I saw a TCP connection on port 8888 being established between the drone and the app upon first connection.

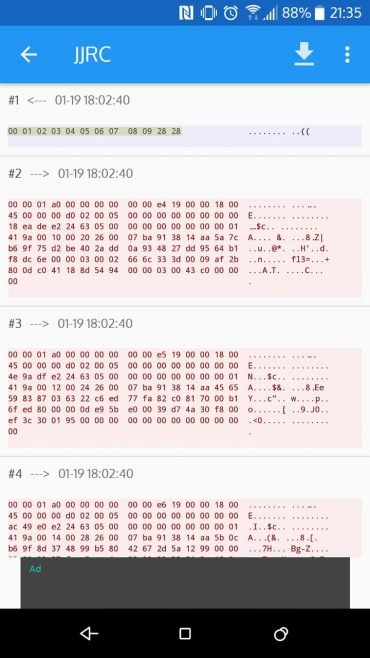

I intercepted some data on my phone with the Android app Packet Capture and saw that a certain short data string was being sent once a minute. Until it had been sent, the drone would not answer with the video stream.

The blue box is an outgoing connection made by the app. It sends the "passphrase" the drone is waiting for which is

0x01 0x02 0x03 0x04 0x05 0x06 0x07 0x08 0x09 0x28 0x28

That reminds me of 123456 being the most used password in the Adobe breach back in 2013.

As I found out, the data that follows is a raw h264 stream. The Python script simply writes it to stdout.

s2.send(binascii.unhexlify(b'000102030405060708092828'))

data = s2.recv(1024)

sys.stdout.buffer.write(data)
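
For context, here is a minimal sketch of how the whole receive loop could look. It is not the script from the blog. The passphrase bytes are the ones from the excerpt above, and re-sending them every 60 seconds mirrors the once-a-minute behaviour I saw in the app. The function name `pump_video`, the buffer size and the placeholder IP in the usage comment are my own assumptions.

```python
import socket
import sys
import time

PASSPHRASE = bytes.fromhex("000102030405060708092828")  # same bytes as the excerpt above


def pump_video(sock, out, keepalive=60.0):
    """Send the passphrase, then copy everything the peer sends to `out`.

    The passphrase is sent again whenever `keepalive` seconds have passed.
    Returns the number of bytes copied once the peer closes the connection.
    """
    sock.sendall(PASSPHRASE)
    last_sent = time.monotonic()
    copied = 0
    while True:
        data = sock.recv(4096)
        if not data:  # peer closed the connection
            break
        out.write(data)
        out.flush()
        copied += len(data)
        if time.monotonic() - last_sent >= keepalive:
            sock.sendall(PASSPHRASE)
            last_sent = time.monotonic()
    return copied


# Usage (the IP is a placeholder, port 8888 is from the capture):
#   sock = socket.create_connection(("192.168.0.1", 8888))
#   pump_video(sock, sys.stdout.buffer)
```

Writing to `sys.stdout.buffer` instead of `sys.stdout` matters here, because the stream is binary and must not go through text encoding.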

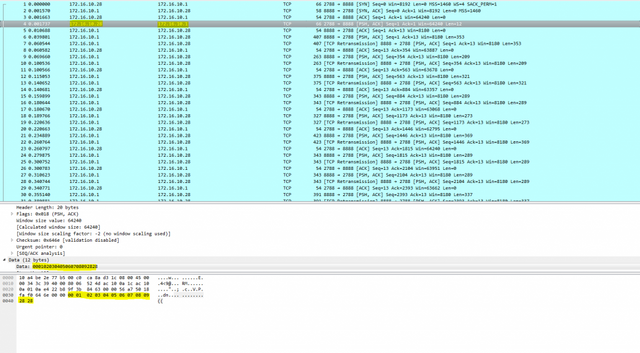

Above is the captured packet in which the Python script sends the passphrase to the drone.

We know that the video stream is raw h264 due to the 0x00 0x00 0x00 0x00 0x01 prefix: the trailing 0x00 0x00 0x01 is the start code that precedes every NAL unit in an H.264 byte stream.
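
To double check a capture, you can scan it for these start codes and look at the NAL unit type that follows each one. This is a small sketch with a function name of my own choosing. It relies only on the H.264 byte stream format, where the low five bits of the byte after the start code hold the unit type.

```python
def find_nal_units(data):
    """Return (offset, nal_unit_type) for every 00 00 01 start code in `data`.

    `offset` is the index of the NAL header byte right after the start code.
    """
    units = []
    i = data.find(b"\x00\x00\x01")
    while i != -1:
        header = i + 3
        if header < len(data):
            # The low 5 bits of the header byte are the NAL unit type,
            # for example 7 = SPS, 8 = PPS, 5 = IDR slice.
            units.append((header, data[header] & 0x1F))
        i = data.find(b"\x00\x00\x01", header)
    return units
```

If the first units found are of type 7 and 8 (the parameter sets), that is a good sign the data really is an H.264 elementary stream that a parser like h264parse can handle.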

The Gstreamer pipeline saves the day by taking the raw h264 data, parsing it, decoding it and playing it.

python3 drone.py | gst-launch-1.0 fdsrc fd=0 ! h264parse ! avdec_h264 ! videoconvert ! xvimagesink sync=false

The drone.py script listens for key presses and sends the corresponding packets. In another thread it establishes the TCP connection with the drone and writes the raw h264 stream to stdout.

The pipe symbol forwards that output to the GStreamer pipeline. The first element, fdsrc, is the input: with fd=0, GStreamer reads from file descriptor 0, which is stdin.

The data gets forwarded to h264parse and avdec_h264, which parse and decode the raw h264 data. The videoconvert plugin then converts the decoded video from its colorspace to the one xvimagesink needs to display the stream in a window.

sync=false tells the sink not to synchronize against the pipeline clock, so every frame is shown as soon as it is decoded. That keeps the latency low at the cost of uneven playback timing.

On Windows

I also got it running on Windows. Since the GStreamer version there doesn't offer fdsrc, I forwarded the stream by having the Python script send datagram packets to the GStreamer pipeline's udpsrc.

gst-launch-1.0 udpsrc port=5555 ! h264parse ! avdec_h264 ! videoconvert ! autovideosink sync=false
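
The forwarding side could look roughly like this. It is a minimal sketch, not the code from the blog. Port 5555 matches the pipeline above, while the function name `forward_chunks` and the 1400 byte datagram limit are assumptions on my part.

```python
import socket


def forward_chunks(chunks, addr=("127.0.0.1", 5555), max_datagram=1400):
    """Send each chunk of the h264 byte stream to `addr` as UDP datagrams.

    Chunks larger than `max_datagram` bytes are split into several datagrams.
    Returns the number of datagrams sent.
    """
    sent = 0
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as tx:
        for chunk in chunks:
            for start in range(0, len(chunk), max_datagram):
                tx.sendto(chunk[start:start + max_datagram], addr)
                sent += 1
    return sent
```

Here `chunks` can be any iterable of `bytes`, for example a generator that yields whatever each `recv` call on the TCP socket returns.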

Using the videostream

The following GStreamer pipeline converts the stream to raw RGB and sends it via udpsink. That way you could build up an RGB array and do pixel transformations on the video stream.

python3 drone.py | gst-launch-1.0 fdsrc fd=0 ! h264parse ! avdec_h264 ! decodebin ! videoconvert ! video/x-raw,format=RGB ! udpsink host=127.0.0.1 port=5000

The output is a continuous stream of bytes, shown here as hex values. They are to be interpreted in groups of three bytes, which is six hex digits per pixel. So the top left pixel of the first frame is represented by the first six hex digits.

Raw Data Stream Beginning: 232d2b...

-> First pixel: 23/ff Red 2d/ff Green 2b/ff Blue (hexadecimal)

-> 35/255 Red 45/255 Green 43/255 Blue (decimal)
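
The same decoding can be written in a few lines of Python. This is a sketch with helper names of my own. `frame_rows` additionally assumes that you know the frame width and height and that the rows are tightly packed. GStreamer may pad each row to a multiple of four bytes, so check that if `width * 3` is not divisible by 4.

```python
def pixels_from_hex(hex_stream, count):
    """Decode the first `count` RGB pixels from a hex dump of raw RGB video."""
    raw = bytes.fromhex(hex_stream[:count * 6])  # 3 bytes = 6 hex digits per pixel
    usable = len(raw) - len(raw) % 3             # ignore an incomplete last pixel
    return [tuple(raw[i:i + 3]) for i in range(0, usable, 3)]


def frame_rows(raw, width, height):
    """Arrange one raw RGB frame (width * height * 3 bytes) as rows of (r, g, b)."""
    if len(raw) != width * height * 3:
        raise ValueError("unexpected frame size")
    stride = width * 3  # bytes per row, assuming no padding
    return [
        [tuple(raw[y * stride + x:y * stride + x + 3]) for x in range(0, stride, 3)]
        for y in range(height)
    ]
```

For the stream beginning shown above, `pixels_from_hex("232d2b", 1)` gives `[(35, 45, 43)]`.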

I wrote a python script that renders the image from a file using matplotlib.

Thanks for reading.

All code is available on my blog!
