FakeApp, Which I Call Diminished Reality

Many of the most magical pieces of consumer technology we have today are thanks to advances in neural networks and machine learning. We already have impressive object recognition in photos and speech synthesis, and in a few years cars may drive themselves. Machine learning has become so advanced that a handful of developers have created a tool called FakeApp that can create convincing “face swap” videos. And of course, they’re using it to make porn. Well, this is the internet, so no surprise there.

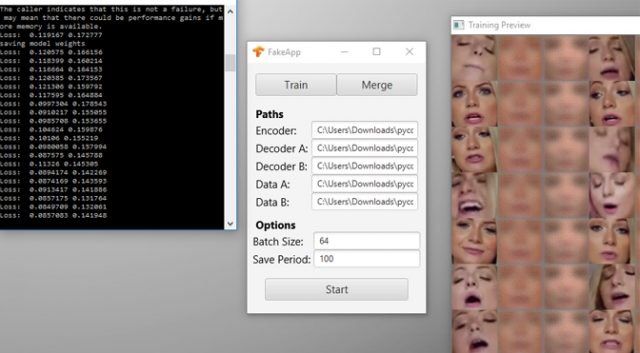

FakeApp is based on deep learning work by a Reddit user known as Deepfakes. The tool is available for download and mirrored all over the internet, but setup is non-trivial. You need to download and configure Nvidia’s CUDA framework to run the TensorFlow code, so the app requires an Nvidia GPU such as a GeForce card. If you don’t have a powerful GPU, good luck finding one for a reasonable price. The video you’re looking to alter also needs to be split into individual frames, and you need a significant number of photos to train FakeApp on the face you want inserted.
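For the frame-splitting step, here is a minimal sketch of one common way to do it. It assumes the ffmpeg command-line tool is installed; the article doesn't say which tool FakeApp users actually rely on. The helper name `build_frame_split_command`, the file `clip.mp4`, and the `frames` folder are all hypothetical, made up for illustration.

```python
from pathlib import Path


def build_frame_split_command(video_path, out_dir, pattern="frame_%05d.png"):
    """Build an ffmpeg argument list that writes one image per video frame.

    Hypothetical helper: ffmpeg is an assumed tool here, not something
    the FakeApp setup is confirmed to use.
    """
    # ffmpeg expands %05d into a zero-padded frame counter (00001, 00002, ...)
    out_template = str(Path(out_dir) / pattern)
    return ["ffmpeg", "-i", str(video_path), out_template]


if __name__ == "__main__":
    cmd = build_frame_split_command("clip.mp4", "frames")
    print(" ".join(cmd))
    # To actually run it (requires ffmpeg on PATH and an existing output folder):
    #   import subprocess
    #   subprocess.run(cmd, check=True)
```

The function only builds the command, so you can inspect it before running anything. PNG output is a guess at what a training tool would want; change the extension in `pattern` if you need JPEGs.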

The end result is a video with the original face replaced by a new one. The quality of the face swap varies based on how the neural network was trained — some are little more than face-shaped blobs, but others are extremely, almost worryingly, convincing. See below for a recreation of the CGI Princess Leia from Rogue One made in FakeApp. The top image is from the film and the bottom was made in FakeApp in about 20 minutes, according to the poster. There’s no denying the “real” version is better, but the fake one is impressive when you consider how it was made.

The first impulse for those with the time and inclination to get FakeApp working was to create porn with their favorite celebrity swapped in for the actual performer. We won’t link to any of those, but suffice it to say a great deal of this content has appeared in the two weeks or so FakeApp has been available. Some are already coming out strongly against the use of this technology to make fake porn, but the users of FakeApp claim it’s not any more damaging than fake still images that have been created in Photoshop for decades.

The real power, and potential danger, of this technology isn’t the porn. What if a future version of this technique is so powerful it becomes indistinguishable from real footage? All the face swaps from FakeApp have at least a little distortion or flicker, but this is just a program developed by a few people on Reddit. With more resources, neural networks might be capable of some wild stuff.

The mainstream media has been using this tech for a while.

Here's how I made mine: