How Machine Learning is changing Software Development

I'm not here to talk to you about how amazing A.I. is, what DeepMind is working on, or to speculate about robotic overlords. I do do that, sometimes. Today, I want to focus on the simplest and most boring type of A.I.: Machine Learning without Neural Networks.

Why? Because it will change the way software is created forever.

Wait, isn't all A.I. just Neural Networks?

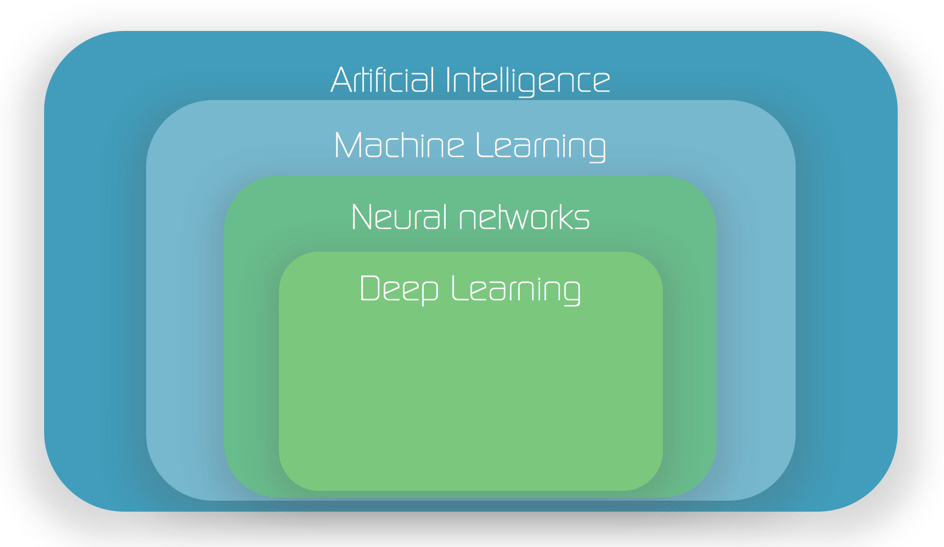

Okay, let's get a couple of definitions out of the way. While Neural Networks, Deep Learning, Machine Learning and Artificial Intelligence may seem like the same thing, each has its own history and origin, and they fit into a hierarchy. The reason the distinction is hard to see is all the research and media attention around the last decade of advances, specifically in Deep Learning.

I've done two courses on Artificial Intelligence, one with M.I.T. and the other with the University of Toronto and Geoff Hinton. Geoff Hinton goes pretty much straight into Neural Networks and then into Deep Learning, as do many other courses. Luckily for me, though, I had done the M.I.T. course, which had one out of 20 lectures on Neural Networks, the rest covering all the other aspects and history of Artificial Intelligence. So let's break it down some.

A.I. = M.L.

The good thing here is that most of the terminology actually has logic to it. To put it simply, Artificial Intelligence is any system that can make its own decisions. For all intents and purposes, given the research and advances of the last three decades, you can safely use the terms A.I. and Machine Learning interchangeably. You're pretty much only ruling out the rule-based "expert systems" that airlines used in the '80s. Other than that, everything interesting in A.I. relates to Machine Learning.

Machine Learning covers a lot

Luckily again, Machine Learning is self-explanatory. Instead of telling the machine which rules to follow and which decisions to make, you teach it. A machine that learns. That leaves the methods of teaching and learning pretty wide open. So what can you teach a machine, and what can it learn?

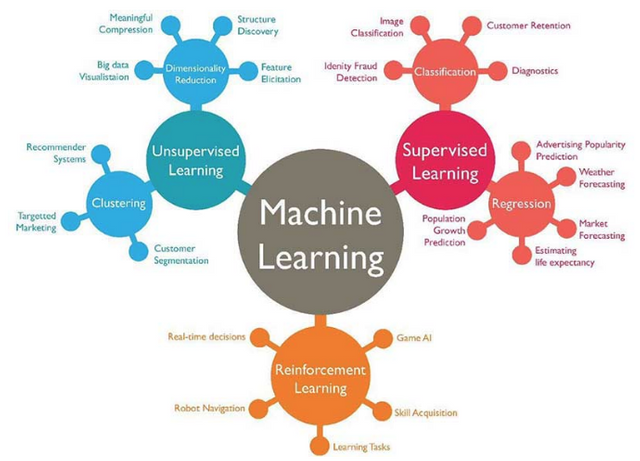

This is the current landscape. It all sounds very fancy and complicated, and it is. To dumb it down, here is what you can do with them:

- Classification algorithms can be taught to split existing data into classes, like names of animals. Then, when you give them new data, they tell you which class it belongs to: say, "this is a chicken".

- Regression algorithms basically try to learn the function behind a dataset, so they can predict a numeric value for new data, such as future data based on past data. Exactly like the "regression line" you had in Excel, but fancier.

- Unsupervised Learning can be used if you've got lots of unlabeled data and can't make sense of it, so you have the machine find structure in it (groups of similar points, for example) instead.

- Reinforcement Learning is how machines beat the best human players at games like Go and Chess, and it is used to help drive autonomous cars and drones. If you're not doing those things, you don't need to know about it.

While the last two get a lot of the media attention, the first two are the moneymakers. So we're going to focus on them. Regression tries to understand how the dots in your plot relate to each other. Classification is the opposite: it tries to separate the dots in your plot into groups. There are a lot of ways to do each of those things, and Neural Networks are just one of them. So let's get them out of the way before we get into the practical stuff.
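A minimal sketch of the two moneymakers side by side, assuming scikit-learn; the animals, features and numbers are made up purely for illustration. The classifier answers "which group?", while the regressor answers "what value?".

```python
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeClassifier

# Classification: learn which group each point belongs to.
# Hypothetical features: [weight in kg, meows (1) or not (0)]
animal_features = [[4.0, 1], [5.0, 1], [30.0, 0], [25.0, 0]]
animal_labels = ["cat", "cat", "dog", "dog"]
classifier = DecisionTreeClassifier(random_state=0).fit(animal_features, animal_labels)
print(classifier.predict([[4.5, 1]]))  # a small animal that meows

# Regression: learn the function behind the dots (here y = 2x + 1)
xs = [[0.0], [1.0], [2.0], [3.0]]
ys = [1.0, 3.0, 5.0, 7.0]
regressor = LinearRegression().fit(xs, ys)
print(regressor.predict([[4.0]]))  # continues the trend line (about 9)
```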

Neural Networks are a special flavor of ML

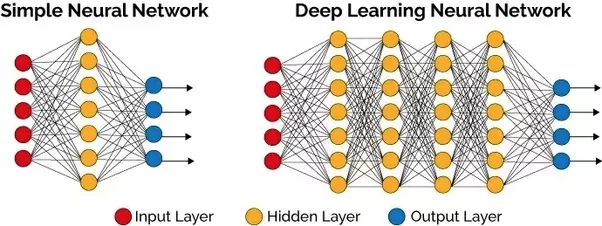

Neural Networks and the associated learning algorithms hold a special place, because they're inspired by the brain. We know that neurons are connected in vast networks inside our brain, and that electrical signals go from neuron to neuron to produce all of our conscious experience. Seeing. Hearing. Thinking. Speaking. All neural networks in action.

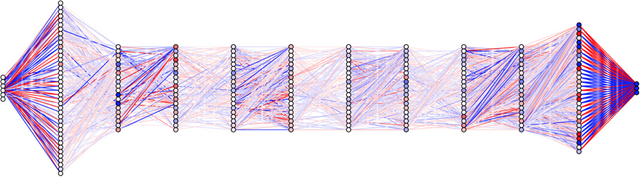

What's inside? Well, a bunch of neurons, organized into an input layer, one or more hidden layers, and an output layer. Really, the function of the hidden layers is to introduce complexity. Without them (and the non-linear functions applied inside them), you could only do really simple things like compute weighted sums of the inputs. But when you make all those spiderweb connections across hundreds or even thousands of neurons in several layers, it turns into magic.
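A small NumPy sketch of why the layers need a non-linear step, with random weights chosen purely for illustration: two layers of plain weighted sums collapse into a single weighted sum, so stacking them adds nothing. Putting a non-linear function between them (ReLU here, an assumption; real networks use various activation functions) is what stops that collapse and lets extra layers represent more complex functions.

```python
import numpy as np

rng = np.random.default_rng(0)
inputs = rng.normal(size=(5, 3))     # 5 examples, 3 input neurons
w_hidden = rng.normal(size=(3, 4))   # input -> hidden layer (4 neurons)
w_output = rng.normal(size=(4, 2))   # hidden -> output layer (2 neurons)

def relu(x):
    # Non-linear activation: keep positive values, zero out the rest
    return np.maximum(x, 0.0)

# Two purely linear layers...
linear_two_layers = inputs @ w_hidden @ w_output
# ...are exactly one linear layer with combined weights
linear_one_layer = inputs @ (w_hidden @ w_output)
print(np.allclose(linear_two_layers, linear_one_layer))  # True

# With a non-linearity in between, the layers no longer collapse
nonlinear = relu(inputs @ w_hidden) @ w_output
print(np.allclose(nonlinear, linear_one_layer))
```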

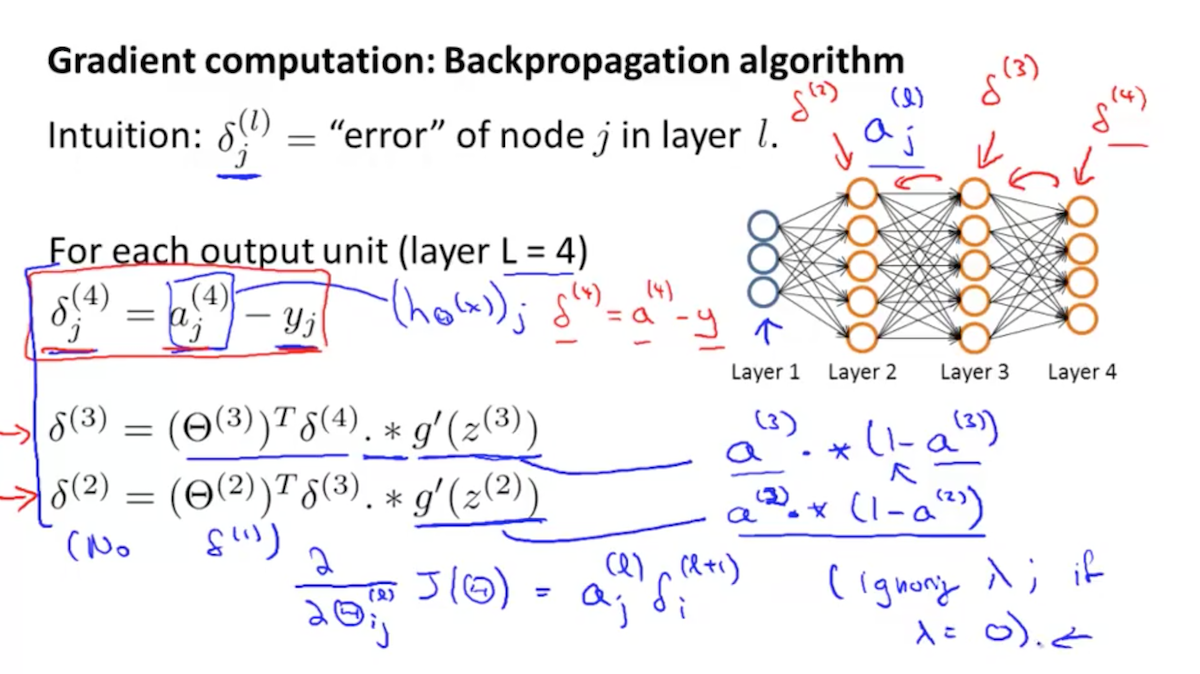

Am I kidding about magic? Yes and no. It's magical in how powerful such a seemingly simple thing is. It can learn almost anything with a learning process called "backpropagation", which starts by comparing how far the prediction is from the intended outcome. Then it works out how much each connection contributed to that error, makes a tiny adjustment to every weight across the whole network in the direction that reduces the error, and tries again. The real explanation goes beyond high-school math pretty quickly: it involves working out the partial derivatives of the error with respect to each weight, from the output all the way back to the input.
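Here is a minimal sketch of backpropagation in plain NumPy. It assumes a tiny network (2 inputs, 4 hidden neurons with a sigmoid activation, 1 output) learning XOR, with mean squared error and a fixed learning rate; all of these are illustrative choices, not a recipe. Each step compares the prediction with the target, uses the chain rule to work the partial derivatives back from the output to the input weights, and nudges every weight slightly downhill.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# XOR: output is 1 when exactly one input is 1
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

rng = np.random.default_rng(42)
W1, b1 = rng.normal(size=(2, 4)), np.zeros(4)  # input -> hidden
W2, b2 = rng.normal(size=(4, 1)), np.zeros(1)  # hidden -> output
learning_rate = 0.5
losses = []

for step in range(5000):
    # Forward pass: compute the prediction
    hidden = sigmoid(X @ W1 + b1)
    prediction = sigmoid(hidden @ W2 + b2)

    # Compare the prediction with the intended outcome (mean squared error)
    error = prediction - y
    losses.append(float(np.mean(error ** 2)))

    # Backward pass: chain rule from the output back toward the input
    d_output = 2 * error / len(X) * prediction * (1 - prediction)
    d_W2 = hidden.T @ d_output
    d_b2 = d_output.sum(axis=0)
    d_hidden = (d_output @ W2.T) * hidden * (1 - hidden)
    d_W1 = X.T @ d_hidden
    d_b1 = d_hidden.sum(axis=0)

    # Nudge every weight a little in the direction that reduces the error
    W2 -= learning_rate * d_W2
    b2 -= learning_rate * d_b2
    W1 -= learning_rate * d_W1
    b1 -= learning_rate * d_b1

print(f"loss went from {losses[0]:.3f} to {losses[-1]:.3f}")
```

Libraries like scikit-learn, TensorFlow or PyTorch do this bookkeeping for you; the point here is only to show that "minute changes across the whole network" are driven by derivatives, not by blind trial and error.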

What magic can it do? It can read handwriting. It can recognize objects in pictures. It can even play chess. All at human level, or beyond. The magic also means we're not 100% sure what's going on in there. It's so complex: change one value on one of those lines, and the whole output changes. Why does it work? When does it work? How do we find the best and fastest way to train the network? Work in progress, let's say.

Can't we just always use magic?

In theory, yes. In practice, not so much. Let's introduce our other contestants to show why.

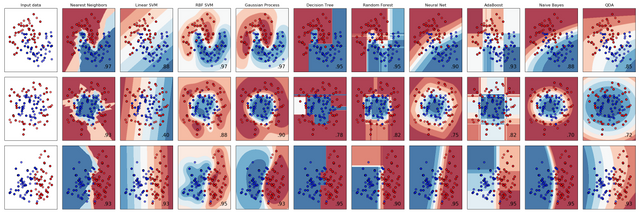

On the left, you see three datasets with a white background. Going from left to right, each column represents a type of Machine Learning algorithm trying to separate the blue dots from the red dots. This is called Classification. Remember, we've already told each algorithm which color each dot is. It's just trying to create a rule for which area blue dots go in, and which area red dots go in. As you can see, results may vary!

Something you may notice is that Neural Nets, the fourth from the right, is doing something funny. Each time, it's doing something different. How does that happen?

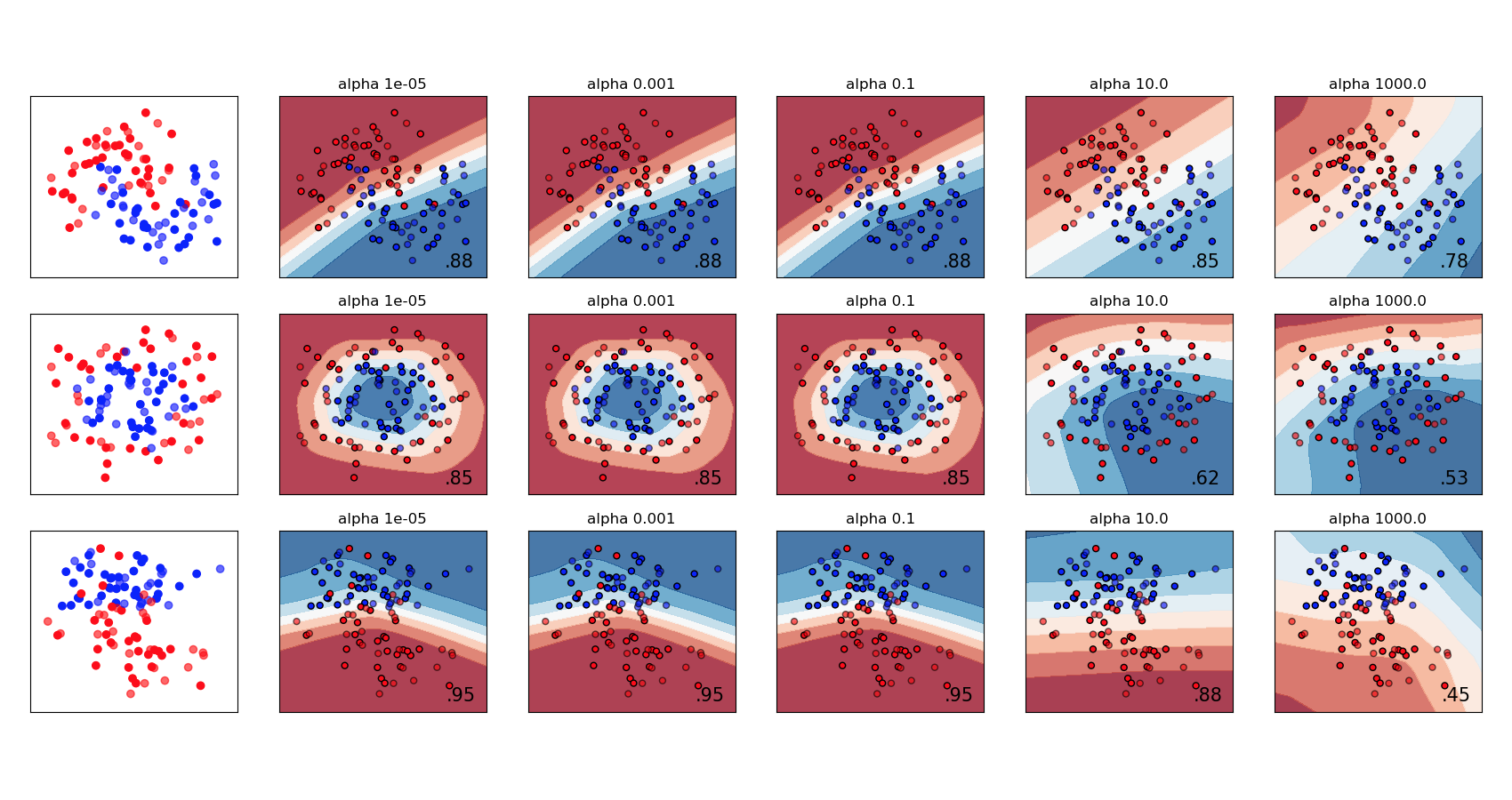

To really drive the point home, the picture above shows a single Neural Network on three different datasets. This time, the columns represent different values of one setting of the network, known as a hyperparameter. Even then, you get wildly different outcomes.
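You can try this yourself in a few lines. This sketch assumes scikit-learn's `MLPClassifier` and its `make_moons` toy dataset, and trains the same small network several times while changing only one hyperparameter, the regularization strength `alpha`, reporting test accuracy for each. The specific `alpha` values and layer sizes are arbitrary choices, and the exact numbers you get will depend on your scikit-learn version.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# Two interleaving half-circles of red and blue dots, with some noise
X, y = make_moons(n_samples=300, noise=0.3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

scores = {}
for alpha in [0.0001, 0.1, 1.0, 10.0]:
    # Same architecture every time; only the regularization strength changes
    net = MLPClassifier(hidden_layer_sizes=(50, 50), alpha=alpha,
                        max_iter=1000, random_state=1)
    net.fit(X_train, y_train)
    scores[alpha] = net.score(X_test, y_test)

for alpha, accuracy in scores.items():
    print(f"alpha={alpha}: test accuracy {accuracy:.2f}")
```

Changing `random_state` instead of `alpha` is another easy way to see the same network land on different decision boundaries.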

Neural Networks are unpredictable by nature, and that same flexibility is why they're so powerful. So the tradeoff is big. Why can't you just fiddle around a bunch to make it work?

Reasons you can't use Neural Networks every time:

- They're complex, and making informed decisions for their design requires serious math skills most people don't yet have

- They're unpredictable, so you have to fiddle around to make it work at all, even if you know what you're doing

- It's hard to say if you've done the right thing, unless you try a lot of different things

- Even though there are many ways to measure how good your network is, it can be difficult to understand how to fix any problems

- Making up your mind about the above can take a lot of tries, and each try can take a lot of time and money

The less popular sidekicks to Neural Networks

As you saw earlier, there are many alternatives. I'll focus on the two which give you simple and predictable outcomes with two very different approaches. Why? Because most often, one of these will quickly solve your problem. Both can be used for Regression and Classification, depending on your problem. Again, I'll choose to focus on Classification for reasons I'll explain later.

> Anecdotal evidence from observing winning entries at data science competitions (like Kaggle) suggests that structured data is best analyzed by tools like XGBoost and Random Forests. Use of Deep Learning in winning entries is limited to analysis of images or text.
>
> (J.P. Morgan Global Quantitative & Derivatives Strategy)

The difference between Neural Networks and the other Machine Learning methods is how they learn. As we saw earlier, Neural Networks kind of guess their way to the best solution, nudging their weights step by step from a random starting point. Kind of. The other methods calculate a solution more directly. They consider the data you give them and use well-understood mathematical optimization methods to find a right answer, often the same answer every time. Another benefit? These methods are fast to train and fast to execute, so there's no need for cloud computing or special hardware. So let's look at them.

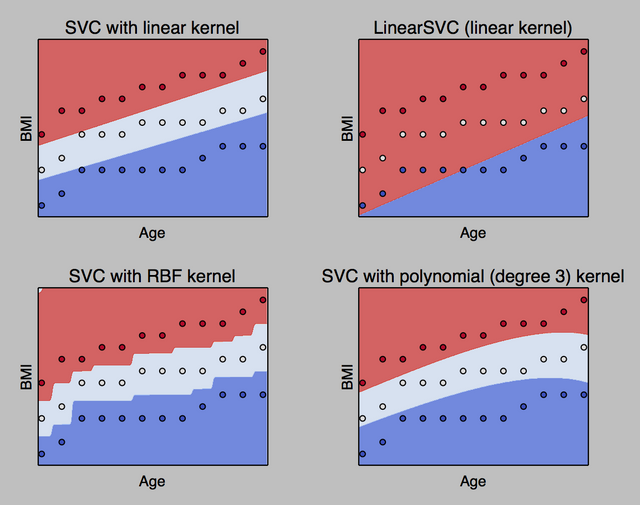

Linear is straightforward

The most logical and simple way to try to separate a dataset is to draw a straight line through it with a ruler. That's what a human would do. That's also what Support Vector Machines ("SVM") do, despite the awesome and complicated-sounding name. The algorithm looks for the single straight line that best separates your classes, and then sets a buffer (the "margin") around that line, making it as wide as possible so the classes are kept as far apart as possible.
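A minimal sketch with scikit-learn's `SVC` restricted to a linear kernel, on six made-up points. The learned line is `coef_ · x + intercept_ = 0`, and for a (nearly) hard-margin fit the buffer has a total width of `2 / ||coef_||`. The large `C` value is an assumption chosen to discourage points from sitting inside the buffer.

```python
import numpy as np
from sklearn.svm import SVC

# Two well-separated groups of dots (made-up data)
X = np.array([[0, 0], [1, 1], [0, 1], [3, 3], [4, 4], [3, 4]], dtype=float)
y = np.array([0, 0, 0, 1, 1, 1])

# kernel="linear" restricts the boundary to a straight line;
# a large C asks for (almost) no points inside the buffer
linear_svm = SVC(kernel="linear", C=1000).fit(X, y)

w = linear_svm.coef_[0]  # normal vector of the separating line
b = linear_svm.intercept_[0]
print(f"boundary: {w[0]:.2f}*x1 + {w[1]:.2f}*x2 + {b:.2f} = 0")
print("margin width:", 2 / np.linalg.norm(w))
print("support vectors (points touching the buffer):", linear_svm.support_vectors_)
print(linear_svm.predict([[0.5, 0.5], [3.5, 3.5]]))
```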

You might be interested to find out that the original SVM algorithm was invented back in 1963, decades before A.I. was cool. There are many variations, including non-linear ones (i.e. boundaries that aren't straight lines), but we really want to keep it simple and understandable. So linear it is.

Trees are your friends

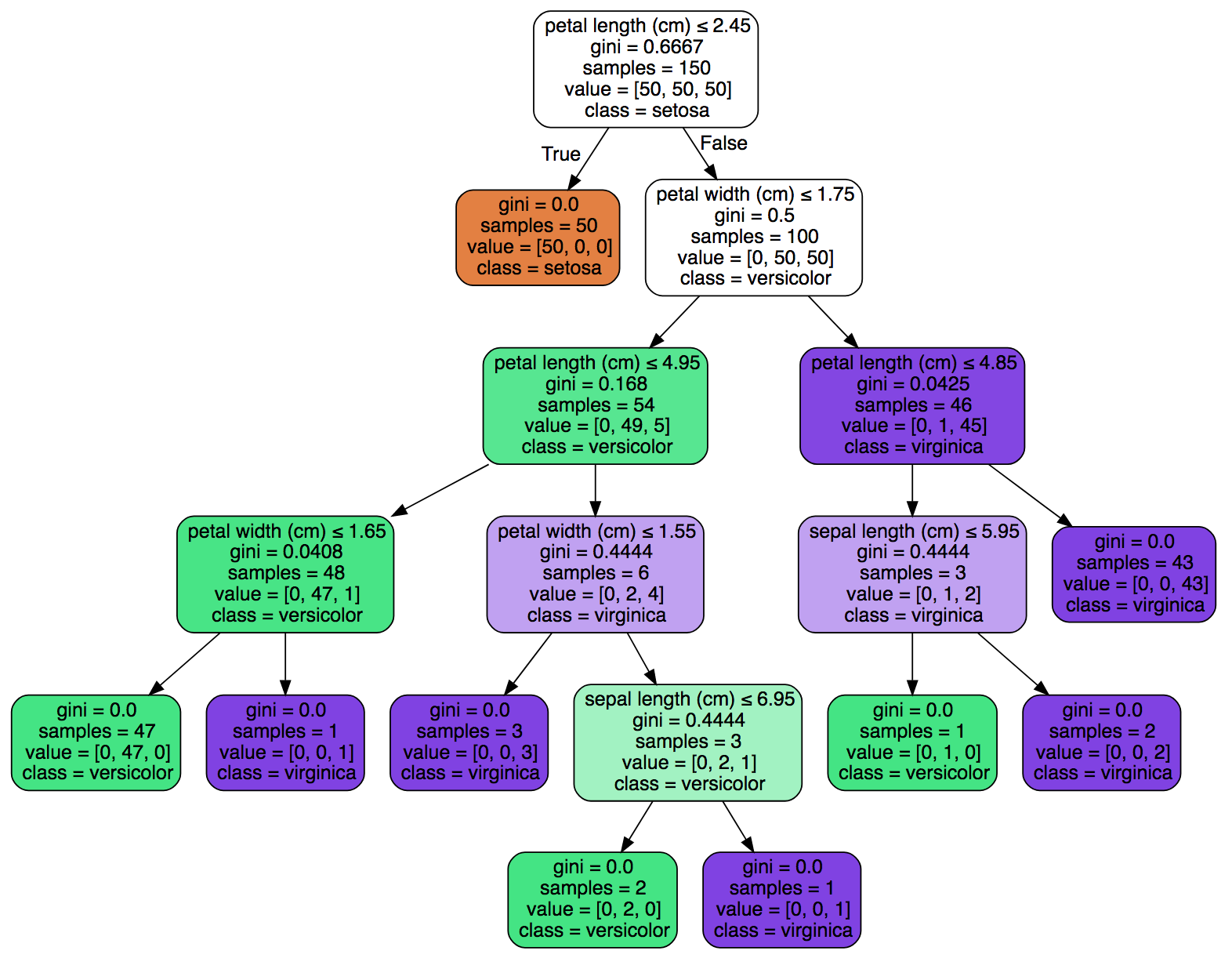

Decision Trees work out which variables and values best predict the outcome in your dataset. Slice and dice. A tree "cuts" your data points by splitting one variable at a threshold value, such as "weight below 1 kg". After each cut, it repeats the process within each resulting group, looking for the next most useful cut, and you can limit how many cuts it makes to keep things simple.
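To see the cuts for yourself, here is a minimal sketch with scikit-learn's `DecisionTreeClassifier` and `export_text`; the animals, weights and lifespans are hypothetical. `max_depth=2` is an illustrative way of capping the number of successive cuts.

```python
from sklearn.tree import DecisionTreeClassifier, export_text

# Hypothetical animals: [weight in kg, lifespan in years]
X = [[4.0, 15], [5.0, 18], [30.0, 10], [25.0, 12], [0.1, 2], [0.02, 1]]
y = ["cat", "cat", "dog", "dog", "hamster", "hamster"]

# max_depth caps how many successive cuts the tree may make
tree = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X, y)

# Each line of the report is a cut: one variable compared against one threshold
rules = export_text(tree, feature_names=["weight_kg", "lifespan_years"])
print(rules)
print(tree.predict([[4.5, 16]]))
```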

The result is like fitting rectangular Tetris blocks on your data. This sounds like a bad idea, but because of this crude approach the tree has a huge party trick that sets it apart in all of Machine Learning.

Decision trees can explain themselves. Yes, you read that right. All those media articles about how Neural Networks are doing unpredictable things? Not a problem here.

Even better than that, there is a free tool called Graphviz that can draw the trained tree, and scikit-learn can export trees to its format. You can actually check the logic, and be 100% sure you know what it does and when. Get a weird result? Look it up, and you'll see exactly why.
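A minimal sketch of that export, using scikit-learn's `export_graphviz` and the Iris dataset that ships with scikit-learn as a stand-in for your own data; the file name `tree.dot` is arbitrary.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_graphviz

iris = load_iris()
tree = DecisionTreeClassifier(max_depth=3, random_state=0).fit(iris.data, iris.target)

# out_file=None makes export_graphviz return the DOT source as a string
dot_source = export_graphviz(
    tree,
    out_file=None,
    feature_names=iris.feature_names,
    class_names=list(iris.target_names),
    filled=True,  # color each box by its majority class
)

with open("tree.dot", "w") as f:
    f.write(dot_source)
# Render with the Graphviz command-line tool, e.g.: dot -Tpng tree.dot -o tree.png
```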

A useful variation of the Decision Tree is a Random Forest, which trains a bunch of individual trees, each on a random sample of your data, and averages their predictions. Compare the two side by side in the comparison chart above, and you'll see the idea.
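A quick way to convince yourself of the averaging, assuming scikit-learn's `RandomForestClassifier` and the bundled Iris data: for classification, the forest's `predict_proba` is the mean of its individual trees' `predict_proba`, and the trees themselves are exposed as `estimators_`.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
# 50 trees, each trained on a random bootstrap sample of the data
forest = RandomForestClassifier(n_estimators=50, random_state=0)
forest.fit(iris.data, iris.target)

sample = iris.data[:3]
# Ask every individual tree for its class probabilities...
per_tree = np.array([t.predict_proba(sample) for t in forest.estimators_])
# ...and check that the forest's answer is simply their average
print(np.allclose(per_tree.mean(axis=0), forest.predict_proba(sample)))
```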

How software is currently created

So, back to the big picture. We now have some cool new tools to play around with. So what? I'm just creating an app or website. This doesn't apply to me. I'm not trying to beat chess masters here.

Wrong. First, let's establish how most software is created today. Software is rules based. Meaning you define a set of rules on how things work, and then the software just does the same exact thing over and over and over.

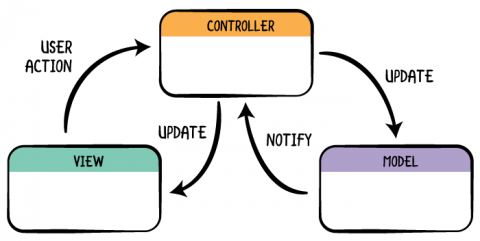

This is a typical structure commonly used in modern software. You have three kinds of code. One that shows things (view), one that defines things (model), and one that decides what happens between the two (controller). In this kind of structure, there are two ways explicit rules are imposed: the model itself, and the "business logic" of the controller. Business logic is a fancy word for "if this happens then do that".
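To make "if this happens then do that" concrete, here is a hypothetical pet-recommendation controller written the traditional way. The function name and rules are illustrative only, chosen to mirror the whiskers/meows/furry-tail toy example later in this post.

```python
def recommend_pet(wants_whiskers: bool, wants_meowing: bool, wants_furry_tail: bool) -> str:
    """Hypothetical hard-coded business logic: every rule is written out by hand."""
    if wants_whiskers and wants_meowing:
        return "Cat"
    if wants_furry_tail and not wants_whiskers:
        return "Dog"
    if wants_whiskers and wants_furry_tail:
        return "Mouse"
    if wants_whiskers:
        return "Rat"
    # Every combination nobody thought of ends up here
    return "No recommendation"

print(recommend_pet(True, True, True))    # Cat
print(recommend_pet(False, False, True))  # Dog
```

Every new animal or preference means another branch, written, reviewed and tested by hand, which is the rigidity the next paragraph describes.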

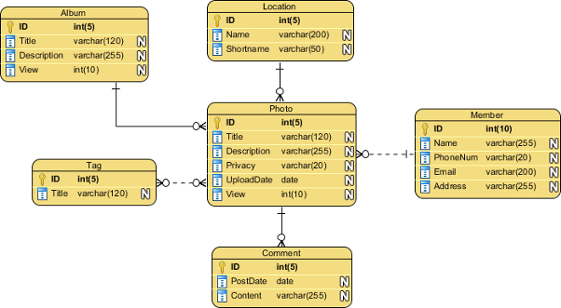

So what goes in the model box? A fixed model with fixed relationships. This is why software is slow and hard to create, because you have to map it all out. The further you get, the harder it is to change anything. Innovation slows down over the iterations and versions, as the degrees of freedom are reduced to zero.

How (simple) Machine Learning can help you create better software

The terms A.I. and M.L. have become so overused that most people now scoff at anyone who says they use either. I used to be that guy. But having been a practitioner for a while, I'm starting to see the light. There is in fact a legitimate way to sprinkle a little A.I. into any software.

Teach logic to your software

What if rather than have to decide on how everything has to work at the beginning, you could just teach your software what to do? That way, if you had to change it later, you could just teach it again? While you may be picturing Tony Stark and Jarvis, you can do it too, today.

This is where we get back to Classification, specifically. What is logic? What is decision making? It's connecting a number of inputs to one of a number of possible outputs. That's called Multiclass Classification. What's a great algorithm for this purpose? Something that allows you to train on data rather than define the code, but is simple and explainable? The Decision Tree. How does it work?

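To train any classifier with scikit-learn, you need two lines of code (plus an import). Yes, two.

```python
from sklearn.tree import DecisionTreeClassifier

classifier = DecisionTreeClassifier()
classifier.fit(inputs, outputs)  # inputs: rows of features; outputs: one label per row
```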

The best part is that it can replace complex logic and modeling work with one line of code. Yes, you read that right. Once you train a model, this is how it works:

```python
output = classifier.predict(inputs)
```

Alternatively, you might want to get a probability distribution across all possible outputs for a set of inputs.

```python
probabilities = classifier.predict_proba(inputs)  # one column per possible output
```

I mean, isn't that just beautiful? If you have new data, or need to replace the model, you have to change one file: the model itself. Job done. No database migrations. No automated integration test suites. Drag & drop.
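A minimal sketch of the "one file" workflow, assuming you persist the trained classifier with Python's standard `pickle` module (scikit-learn's documentation also mentions `joblib` for this). The file name `pet_model.pkl` and the toy data are hypothetical. A pickled model should be loaded with the same scikit-learn version that saved it, and only from sources you trust.

```python
import pickle
from sklearn.tree import DecisionTreeClassifier

# Toy training data: [whiskers, meows, furry tail] -> animal
inputs = [[1, 1, 1], [0, 0, 1], [1, 0, 0], [1, 0, 1]]
outputs = ["Cat", "Dog", "Rat", "Mouse"]

classifier = DecisionTreeClassifier(random_state=0).fit(inputs, outputs)

# Ship the trained model as a single file...
with open("pet_model.pkl", "wb") as f:
    pickle.dump(classifier, f)

# ...and the app only ever loads it and calls predict
with open("pet_model.pkl", "rb") as f:
    model = pickle.load(f)

print(model.predict([[1, 1, 1]]))  # ['Cat']
```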

How do I get data?

So what kind of data can you pump into one of these classifiers? Here's one simplified example. Let's imagine your app is recommending what pet a user should buy based on their preferences. You might ask about characteristics that users would want in a pet, and train a model to produce a recommendation. The output will depend on how much data you have, and how specific you want the recommendations to be. Rather than a database model, which has to return an exact matching dataset using complicated join statements, you could return the top 3 most probable choices in one line of code.
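Here is a minimal sketch of the "top 3" idea using scikit-learn's `predict_proba` and `classes_`; the survey questions, the data and the `top_n_pets` helper are all hypothetical. A Random Forest is used here because averaging many trees tends to give smoother probabilities than a single tree, whose leaves often report only 0 or 1.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Hypothetical survey answers: [wants whiskers, wants meowing, wants furry tail]
inputs = np.array([[1, 1, 1], [1, 1, 0], [0, 0, 1], [0, 0, 1],
                   [1, 0, 0], [1, 0, 1], [1, 0, 1], [0, 0, 0]])
outputs = np.array(["Cat", "Cat", "Dog", "Dog", "Rat", "Mouse", "Hamster", "Fish"])

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(inputs, outputs)

def top_n_pets(model, user_answers, n=3):
    """Hypothetical helper: the n most probable pets for one user's answers."""
    probabilities = model.predict_proba([user_answers])[0]
    best = np.argsort(probabilities)[::-1][:n]  # column indices, highest first
    return [(str(model.classes_[i]), round(float(probabilities[i]), 2)) for i in best]

print(top_n_pets(model, [1, 0, 1]))
```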

Toy example: a few simple inputs, a few hundred data points

| Whiskers | Meows | Furry tail | Animal |
|---|---|---|---|
| Yes | Yes | Yes | Cat |
| No | No | Yes | Dog |
| Yes | No | No | Rat |
| ... | ... | ... | ... |
| Yes | No | Yes | Mouse |

In most cases, though, your data won't be that simple, and the inputs won't all be a mere yes/no that can be turned into 1 and 0. So you may need to adapt your data into something the classifier can learn from. You could do some operations manually to turn the strings into numeric classes, or run automated algorithms to encode your data, such as a one-hot encoder. Since training tries to establish relationships in your data, making the numbers easier to relate will help get a better result, as long as you can still interpret that result!

Simple example: many inputs of various formats, thousands of data points

| Whiskers | Meows | Character | Average weight | Average lifespan | Animal |
|---|---|---|---|---|---|
| Yes=1 | Yes=1 | Loner=1 | 5kg=0.05 | 18y=0.18 | Scottish Fold=1 |
| No=0 | No=0 | Stable=2 | 30kg=0.3 | 10y=0.1 | Rottweiler=2 |
| Yes=1 | No=0 | Aggressive=0 | 20g=0.0002 | 1y=0.01 | Hissing Cockroach=3 |
| ... | ... | ... | ... | ... | ... |
| Yes=1 | No=0 | Friendly=4 | 100g=0.001 | 2y=0.02 | Hamster=4 |

So you may be wondering how to generate such training data. I mean, who is qualified to say what the right behavior is? What if you have inputs but no output labels? This of course depends entirely on the problem you're solving, but answers could range from creating and labeling your own data, to finding existing (open) research data, or even scraping existing databases or websites like Wikipedia.

An interesting opportunity this approach creates is that of expert opinion. What if you crowdsourced the training data from a panel of experts in that specific field? Maybe doctors, zoologists, engineers, or even lawyers. Well, maybe not lawyers.

A.I. is becoming mobile friendly

Traditionally, one of the challenges in adopting A.I. was that you needed to run these models in the backend. So first of all, you needed an actual backend server, potentially in a different language, with all the hassles of hosting and so forth. Secondly, it meant those models could only be run when connected to the server. So if it was a core feature of your app, for example, it would only work online.

Apple was one of the first to tackle the offline issue, by introducing the Core ML framework in iOS 11. It works like a charm. All you need to do is convert your existing model into the Core ML format, and you can literally drag & drop it into your Xcode project. From there, Xcode generates a class for the model that you can call as follows (this snippet is based on Apple's Mars Habitat Pricer sample):

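```swift
// MarsHabitatPricer is the class Xcode generates from the sample .mlmodel file
let model = MarsHabitatPricer()
guard let marsHabitatPricerOutput = try? model.prediction(solarPanels: solarPanels, greenhouses: greenhouses, size: size) else {
    fatalError("Unexpected runtime error.")
}
let price = marsHabitatPricerOutput.price
```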

Looking ahead, several companies, including Apple, are rumored to be working on dedicated A.I. chips for their next-generation devices. That would enable fast execution of complex neural nets on your own device.

How to get started

Scikit-learn tutorials are a great place to start.

It's all in Python, which is one of the easiest languages to pick up, so don't be intimidated. Start with this one, and work through it line by line. There are a few things to get your head around when it comes to preparing data, so just dive in.

http://scikit-learn.org/stable/tutorial/basic/tutorial.html

How to run different classifiers and visualize the results in 2D:

http://scikit-learn.org/stable/auto_examples/classification/plot_classifier_comparison.html

Apps for iOS using the Core ML framework:

https://developer.apple.com/documentation/coreml

Try it, it's really not that hard if you know how to code at all!

Have you dipped your toes in A.I. already? Any other tips to get started with some blue-collar Machine Learning?
