Talking about Big Data - Data Technology


In my view, big data technology can be divided into two broad layers: big data platform technology and big data application technology. To use big data, you first need computing power. Platform technology covers the underlying technologies needed to collect, store, move, and process data, such as the Hadoop ecosystem and the DataPlus ecosystem. Application technology covers the technologies that process data and turn it into commercial value, such as algorithms and the models, engines, interfaces, and products derived from them. Both the platform-level tools and the algorithms that run on the platform can also be deposited in a big data ecosystem marketplace, which avoids duplicated research and development and greatly improves the efficiency of big data processing.

This article mainly introduces big data platform technology. The next article will focus on the processing and use of big data, that is, big data application and processing technology, and a later one will discuss the big data ecosystem marketplace.

Big data starts with data, and the first problem with data is how to collect and store it. Collection and storage technology has been evolving continuously alongside the explosion in data volume and the rapid growth of big data services.

In the early days of big data, and in the early development of many enterprises, core business data was stored only in relational databases, and even data warehouses were centralized OLAP relational databases. Many companies, including Taobao, initially used Oracle as their data warehouse. At the time, the largest Oracle RAC cluster in Asia was built as a data warehouse, and at that scale it could handle data volumes of under 10 TB.

Once an independent data warehouse exists, ETL comes into play: data extraction, cleaning, validation, loading, and even security desensitization (masking). If the only data source is a business database, ETL is not very complicated. But if data comes from many sources, such as log data, app data, crawled data, purchased data, and integrated data, ETL becomes very complicated, and data cleaning and validation become critically important.

At this point, ETL must follow data standards. Without standards-based ETL, the data in the warehouse may be inaccurate, and bad data flows into the upper-level data applications, so the results of the data products built on it are all wrong. A wrong conclusion drawn from big data is worse than having no big data at all. This is why data standards, data cleaning, and data validation in ETL are so important.
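To make the clean, validate, and desensitize steps concrete, here is a minimal Python sketch of standards-based ETL for one batch of records. It assumes a hypothetical data standard for user records (a required `user_id`, an 11-digit phone number, and a non-negative order amount) and a simple "keep first 3 and last 4 digits" masking rule; the field names, rules, and functions are illustrative assumptions, not any real product's schema or API.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical data standard (illustrative assumption): 11-digit phone numbers.
PHONE_PATTERN = re.compile(r"^\d{11}$")


@dataclass
class Record:
    user_id: str
    phone: str  # stored masked after desensitization
    amount: float


def clean(raw: dict) -> dict:
    """Cleaning: trim surrounding whitespace from string fields."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in raw.items()}


def validate(row: dict) -> list[str]:
    """Validation: list every violation of the hypothetical data standard."""
    errors = []
    if not row.get("user_id"):
        errors.append("missing user_id")
    if not PHONE_PATTERN.match(str(row.get("phone", ""))):
        errors.append("phone must be 11 digits")
    try:
        if float(row.get("amount", "")) < 0:
            errors.append("amount must be non-negative")
    except (TypeError, ValueError):
        errors.append("amount is not a number")
    return errors


def mask_phone(phone: str) -> str:
    """Desensitization: keep the first 3 and last 4 digits, mask the rest."""
    return phone[:3] + "*" * (len(phone) - 7) + phone[-4:]


def etl(raw_rows: list[dict]) -> tuple[list[Record], list[tuple[dict, list[str]]]]:
    """Load rows that meet the standard; set rejected rows aside with reasons."""
    loaded, rejected = [], []
    for raw in raw_rows:
        row = clean(raw)
        errors = validate(row)
        if errors:
            rejected.append((raw, errors))
        else:
            loaded.append(Record(row["user_id"], mask_phone(row["phone"]), float(row["amount"])))
    return loaded, rejected
```

Rejected rows are kept together with their reasons rather than silently dropped, so the people responsible for each source can fix the data upstream instead of every consumer re-cleaning it.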

Finally, as both data sources and data users multiply, the overall big data flow turns into a very complex network topology. Everyone imports and cleans data, and everyone uses data, yet no one trusts the data that others have imported and cleaned. This leads to more and more duplicate data and more and more data tasks of growing complexity. Solving this requires data management, that is, the governance of big data: for example, metadata standards, a public data service layer (a trusted data layer), and disclosure of how data is used.

As data volumes keep growing, centralized relational OLAP data warehouses can no longer meet enterprises' needs. At this stage, professional data warehouse software based on MPP (massively parallel processing), such as Greenplum, appears. Greenplum processes data with an MPP architecture, which lets it handle larger volumes of data faster, but it is essentially still a database technology. Greenplum supports about 100 machines and can handle PB-scale data. Greenplum is built on the popular PostgreSQL, so almost all PostgreSQL client tools and applications can run on the Greenplum platform, and abundant PostgreSQL resources are available online for reference.
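The core MPP idea is that rows are spread across many segments by a distribution key, each segment processes its own slice in parallel, and a coordinator merges the partial results. The Python sketch below imitates that flow for a `GROUP BY` sum. It is a toy under stated assumptions: four segments simulated by threads on one machine, `zlib.crc32` as the hash function, and hypothetical field names; it is not how Greenplum is actually implemented.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

NUM_SEGMENTS = 4  # assumption: a tiny cluster; real MPP clusters use many more


def segment_for(key: str, num_segments: int = NUM_SEGMENTS) -> int:
    """Choose a segment by hashing the distribution key (crc32 is an illustrative choice)."""
    return zlib.crc32(key.encode("utf-8")) % num_segments


def distribute(rows, key_field):
    """Scatter rows to segments so that equal keys always land on the same segment."""
    segments = [[] for _ in range(NUM_SEGMENTS)]
    for row in rows:
        segments[segment_for(row[key_field])].append(row)
    return segments


def local_sum(rows, group_field, value_field):
    """Each segment aggregates only the slice of data it owns."""
    partial = defaultdict(float)
    for row in rows:
        partial[row[group_field]] += row[value_field]
    return partial


def mpp_group_sum(rows, key_field, group_field, value_field):
    """Distribute, aggregate per segment in parallel, then merge on the coordinator."""
    segments = distribute(rows, key_field)
    with ThreadPoolExecutor(max_workers=NUM_SEGMENTS) as pool:
        partials = list(pool.map(lambda seg: local_sum(seg, group_field, value_field), segments))
    result = defaultdict(float)
    for partial in partials:  # coordinator merges the partial aggregates
        for group, total in partial.items():
            result[group] += total
    return dict(result)
```

For example, `mpp_group_sum(orders, "order_id", "city", "amount")` distributes orders by `order_id` but groups by `city`, so the same city can appear on several segments and the final merge step is required.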

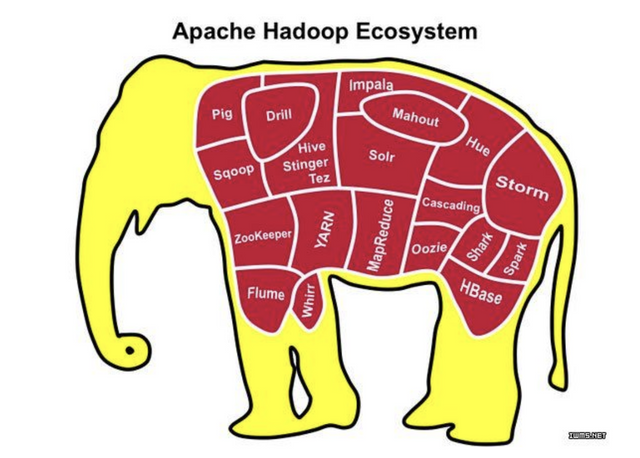

As data volumes kept growing (Alibaba, for example, needs to process more than 100 PB of data and run more than 1 million big data tasks every day), none of the solutions above could keep up. Larger-scale distributed solutions, starting with MapReduce (M/R), then appeared, such as Hadoop, Spark, and Storm in the big data ecosystem. They are currently the three most important distributed computing systems: Hadoop is often used for complex offline big data processing, Spark for fast offline big data processing, and Storm for online real-time big data processing. Alibaba Cloud's DataPlus likewise includes the big data computing service MaxCompute (formerly ODPS), the analytical database ADS (similar to Impala), and JStorm (formerly Galaxy), a Java-based Storm system.

Let's look at the main solutions in the big data technology ecosystem and compare them with Alibaba Cloud's DataPlus solutions. I will introduce DataPlus separately in a later article.

Big data technology ecosystem

Hadoop is a distributed system infrastructure developed by the Apache Software Foundation. The core of the Hadoop framework is HDFS and MapReduce: HDFS provides storage for massive amounts of data, and MapReduce provides computation over it. As a foundational framework, Hadoop also supports many other components. One is Hive: users who don't want to write MapReduce programs, or who are more comfortable with SQL, can use Hive for offline data processing and analysis. Another is HBase, a distributed, column-oriented open source database that runs on HDFS. HDFS lacks random read and write access, and HBase emerged to fill that gap.
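To show the division of labor MapReduce imposes, here is a minimal single-process Python sketch of the classic word-count job: a map phase emits `(word, 1)` pairs, a shuffle groups the pairs by key, and a reduce phase sums each group. On a real Hadoop cluster these phases run across many machines over HDFS blocks; this sketch only mimics the data flow and does not use the Hadoop API.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str):
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reducer: sum the counts emitted for one word."""
    return key, sum(values)


def word_count(lines):
    """Chain the three phases: map every line, shuffle by word, reduce each group."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```

This is the kind of boilerplate Hive spares SQL users: the same result is a single `GROUP BY` query over a table of words, which Hive compiles into map and reduce stages.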

Spark is also an open source project of the Apache Software Foundation. It was originally developed at a lab at the University of California, Berkeley (the AMPLab), and is another important distributed computing system. The biggest difference between Spark and Hadoop is that Hadoop MapReduce stores intermediate data on hard disk, while Spark keeps it in memory, which lets Spark run some workloads up to 100 times faster than Hadoop. Spark can run inside a Hadoop cluster through YARN (Yet Another Resource Negotiator), but Spark is now also building out its own ecosystem, aiming to cover the whole pipeline, upstream and downstream, with a single technology stack that meets many different needs. For example, Shark on Spark competes with Hive on Hadoop, and Spark Streaming competes with Storm.
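Much of the speed difference comes from where intermediate data lives between steps of an iterative job. The Python sketch below is a toy model, not Spark code: a hypothetical `Storage.load_from_disk` method stands in for rereading input from HDFS, and the job either rereads the data on every iteration (MapReduce-style) or loads it once and keeps it in memory (in the spirit of Spark's `cache()`). It counts disk reads instead of measuring time.

```python
class Storage:
    """Hypothetical stand-in for disk/HDFS that counts how often data is read."""

    def __init__(self, data: list[float]):
        self._data = data
        self.reads = 0

    def load_from_disk(self) -> list[float]:
        self.reads += 1
        return list(self._data)


def iterate(storage: Storage, iterations: int, keep_in_memory: bool) -> float:
    """Iterative job: move an estimate halfway toward the data mean each round."""
    cached = storage.load_from_disk() if keep_in_memory else None
    estimate = 0.0
    for _ in range(iterations):
        # MapReduce-style: reread every round; in-memory: reuse the cached copy.
        data = cached if keep_in_memory else storage.load_from_disk()
        mean = sum(data) / len(data)
        estimate += 0.5 * (mean - estimate)
    return estimate
```

Both variants compute the same answer; the in-memory version reads the input once instead of once per iteration, which is why iterative workloads such as machine learning benefit most from Spark's design.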

Storm is a distributed computing system promoted by Twitter. It was developed by the BackType team and is an incubation project of the Apache Software Foundation. It complements Hadoop with real-time computing capabilities and can process big data streams in real time. Unlike Hadoop and Spark, Storm does not collect or store data itself: it receives data directly over the network in real time, processes it in real time, and sends the results back over the network in real time. Storm is well suited to real-time streams such as logs or the click stream of a shopping website, which are continuous, ordered, and never-ending. When data arrives through a message queue such as Kafka, Storm processes each piece as it comes in and outputs the result immediately.
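Storm organizes work as a topology of spouts (sources that emit tuples, for example from Kafka) and bolts (operators that process each tuple as it arrives). The Python sketch below imitates that flow with generators: a hypothetical click-event spout feeds a bolt that keeps a running count per page and emits an updated result after every event. It does not use Storm's or Kafka's APIs, and the `user,page` event format is an assumption for illustration.

```python
from collections import Counter
from typing import Iterable, Iterator


def click_spout(events: Iterable[str]) -> Iterator[dict]:
    """Spout: turn raw 'user,page' lines into tuples one at a time (format is assumed)."""
    for line in events:
        user, page = line.split(",")
        yield {"user": user, "page": page}


def count_bolt(tuples: Iterable[dict]) -> Iterator[tuple[str, int]]:
    """Bolt: update a running count per page and emit it immediately.

    Only the small running state is kept; the raw events are not stored.
    """
    counts = Counter()
    for t in tuples:
        counts[t["page"]] += 1
        yield t["page"], counts[t["page"]]
```

Because both stages are generators, each result is produced as soon as its event arrives; with an unbounded source (such as a message queue consumer) the pipeline simply never ends, which matches the "continuous, never-ending" streams described above.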

The systems above are only the general underlying frameworks for large-scale distributed computing, and they are usually referred to as computing engines.

Beyond the computing engines, building data processing applications also requires platform tools such as a development IDE, a job scheduling system, data synchronization tools, BI modules, data management, and monitoring and alerting. Together with the computing engines, these tools form the foundational big data platform.

On top of this platform, we can build data-driven processing applications and develop data products.

Consider a restaurant. To make Chinese, Western, Japanese, and Spanish food, it needs ingredients (data), different kitchenware (the underlying big data computing engines), and different condiments (processing tools) to produce the different cuisines. To serve a large number of guests, it also needs a larger kitchen, sturdier kitchenware, and more chefs (distribution). Whether the dishes taste good, however, depends on the skill of the chef (the ability to process and apply big data).

Alibaba's big data technology ecosystem

First, let's look at Alibaba's three main computing engines.

Alibaba Cloud originally used Hadoop and successfully scaled a single Hadoop cluster to 5,000 machines. Starting in 2010, Alibaba Cloud independently developed MaxCompute (formerly ODPS), a distributed computing platform similar to Hadoop. A single MaxCompute cluster now exceeds 10,000 machines and supports joint computation across multiple clusters, and it can process 100 PB of data in 6 hours, roughly the size of 100 million high-definition movies.
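As a quick sanity check of these figures (the arithmetic is an added illustration, assuming decimal units, 1 PB = 10^15 bytes and 1 GB = 10^9 bytes), processing 100 PB in 6 hours implies an aggregate throughput of roughly 4.6 TB per second, and spreading 100 PB over 100 million movies corresponds to about 1 GB per movie:

$$\frac{100\ \text{PB}}{6\ \text{h}} = \frac{100 \times 10^{15}\ \text{B}}{21\,600\ \text{s}} \approx 4.63 \times 10^{12}\ \text{B/s} \approx 4.6\ \text{TB/s}$$

$$\frac{100\ \text{PB}}{10^{8}\ \text{movies}} = \frac{10^{17}\ \text{B}}{10^{8}} = 10^{9}\ \text{B} = 1\ \text{GB per movie}$$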

The analytical database service ADS (AnalyticDB) is an RT-OLAP (real-time OLAP) system. It stores data in a flexible relational model, so you can run flexible SQL calculations and analysis without modeling the data in advance. Using distributed computing, ADS can process tens of billions of rows or more, matching or even exceeding the performance of MOLAP systems and delivering millisecond-level computation over tens of billions of rows. ADS uses a heavily pre-distributed MPP architecture that combines search and database technology: its up-front cost is relatively high, but queries are extremely fast and it supports high concurrency. A comparable product, Impala, uses a lightly pre-distributed MPP architecture with Dremel-style data structures: its initialization cost is relatively low, but its concurrency is lower and its responses are slower.

The stream computing product (formerly Galaxy) analyzes large-scale data in motion, in real time. It is a distributed real-time stream computing framework that Alibaba rewrote in Java based on Storm and open sourced, also known as JStorm. Comparable products are Storm and Spark Streaming. Alibaba Cloud will soon start a public beta of stream SQL, which implements real-time stream computing through SQL and lowers the barrier to using stream computing.

Beyond the computing engines, I will cover Alibaba's entire big data ecosystem in detail in the article on DataPlus.

How will the underlying technology of big data develop? Personally, I expect two key trends:

Cloud-based, ecosystem-based data processing

In the future, data will have to be interconnected to be valuable. Today's private-cloud big data solutions are a transitional stage. Big data will need a larger and more professional platform, and only on such a platform can the full big data ecosystem be realized (covered in a later article). That ecosystem includes a marketplace for the data itself (a big data trading platform) as well as a marketplace, similar to today's App Store, for the tools and algorithms that process data (or the engines, interfaces, and products built on those algorithms).

The data ecosystem is a very large market.

Integration of cloud databases and data warehouses

In the future, many companies will benefit from integrated cloud database and data warehouse solutions. From a technology standpoint, big data platforms are becoming increasingly capable of real-time processing, while online business databases are becoming increasingly capable of distributed computing. Once these capabilities converge, the boundary between online and offline will blur, and the whole data technology stack will come full circle to a unified database and data warehouse.