I'm starting to learn Node.js and the Steem APIs: Lorenz curves of post rewards for the past two weeks

In the past I've used JavaScript for some projects, but I have been meaning to learn how to use Node.js for some more client/server type stuff. The recent controversy on the chain has drawn some attention to dApp and blockchain tool development, and that made me think that it might be interesting to investigate the Steem APIs. Another aspect of the controversy is that it has been about “distribution”, but it seems to me that people often talk about this in a very hand-wave-y way, even though it could easily be a very data-focused discussion given that all the data is available in the blockchain. So my thought is that trying to write a tool to help visualize some of this data would be a good way for me to learn how to use Node.js and the Steem APIs, and if it works well it might be useful as people discuss the past, present, and future of the blockchain.

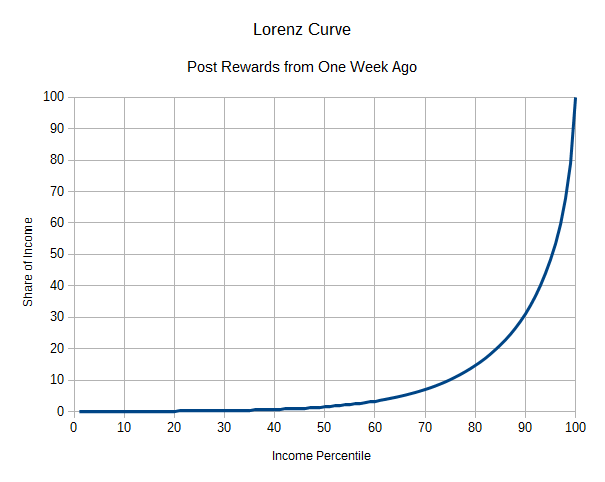

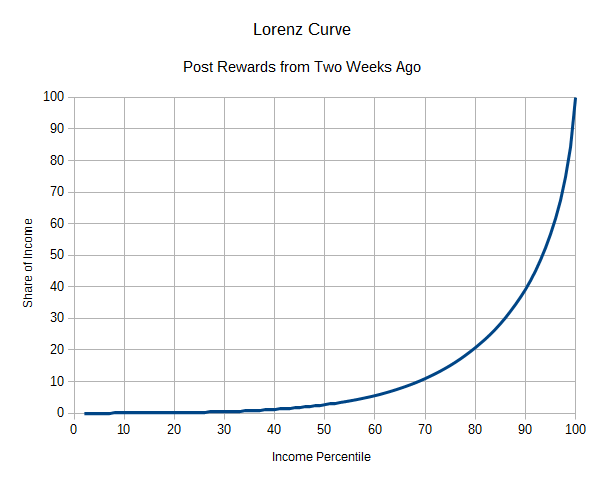

Eventually my hope is to develop a robust tool that generates its own pretty charts on a variety of measures, but as an initial task to get started I settled on trying to generate the Lorenz curve of post rewards for a week's worth of posts.

In traditional economics, one way that people try to analyze the distribution of resources is with the Lorenz curve. It's usually used for income, since in real-world economies that's usually easier to measure than wealth. On the X axis you plot the income percentile, and on the Y axis you plot the share of total income that goes to everyone at or below that percentile. So for example, if the lowest-income 10% of a population earned 2% of the combined income of the entire population, you would plot that at point (10, 2). Since a percentile includes everything below it, this will give you an upward sloping curve. If the population were totally equal then you'd get a straight line, since the bottom 10% would get 10% of the income, the bottom 50% would get 50%, etc. If the population were very unequal, you'd get almost zero for most percentiles, and then it would shoot up to 100% at the end when you get to the handful of people who get everything.

One aspect of Steem that will make analyses of this type difficult is that there isn't a one-to-one correspondence between accounts and people, either for good reasons like having a personal account and an account for a project or business, or for bad reasons like running a network of spam accounts.
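To make the Lorenz curve construction described above concrete, here is a minimal sketch that turns a plain list of incomes into curve points. The helper name `lorenzPoints` and the sample numbers are made up for illustration, and it assumes the incomes sum to more than zero.

```javascript
// Sketch: compute Lorenz curve points from a list of incomes.
// `lorenzPoints` is a hypothetical helper, not part of any library.
// Assumes at least one income is greater than zero.
function lorenzPoints(incomes) {
  const sorted = [...incomes].sort((a, b) => a - b); // lowest income first
  const total = sorted.reduce((sum, x) => sum + x, 0);
  let running = 0;
  return sorted.map((income, i) => {
    running += income;
    return {
      percentile: 100 * (i + 1) / sorted.length, // X: share of the population
      share: 100 * running / total,              // Y: cumulative share of income
    };
  });
}

// Totally equal population: every point sits on the straight diagonal line.
console.log(lorenzPoints([5, 5, 5, 5]));
// Very unequal population: the curve stays at zero, then shoots up at the end.
console.log(lorenzPoints([0, 0, 0, 100]));
```

With real data there would be one point per account, which is why it helps to thin the output down to one point per whole percentile before charting.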

Right now the script just spits out the data into a CSV file, which I imported into LibreOffice to make the charts in this post. I'm not sure that there's a ton of insight to draw from these, except to note that things seem more skewed toward the top end last week than they were the week before that.

Eventually I'm hoping to expand this to take into account things like beneficiaries, curation rewards, rewards from comments below the top level, etc., since I think that is all potentially interesting, as well as looking at SP distribution rather than just income. Personally I am interested in seeing if there are any noticeable changes over time, and whether they correspond to the various changes that have been implemented in the system. I'm including the code below, but be aware that it's not very elegant since I'm still in a learning phase.

```javascript
const fs = require('fs');
const dsteem = require('dsteem');

const client = new dsteem.Client('https://api.steemit.com');
const oneWeek = 7 * 24 * 60 * 60 * 1000; // number of milliseconds in a week

let payoutSum = 0; // total rewards seen so far in the current week
let payouts = {};  // author -> summed rewards in the current week

queryLoop(1).catch(console.error);

// Reset the running totals before starting on a new week.
function initializeCount() {
  payoutSum = 0;
  payouts = {};
}

// Add one post's author reward to the running totals for the current week.
function analyzePost(post) {
  // total_payout_value looks like "1.234 SBD" (zero until the post has paid out).
  const [amountText, symbol] = post.total_payout_value.toString().split(/\s+/);
  if (symbol !== 'SBD') {
    console.error("Don't know what", symbol, 'is');
  }
  const amount = parseFloat(amountText);
  if (amount > 0) {
    payouts[post.author] = (payouts[post.author] || 0) + amount;
    payoutSum += amount;
  }
}

// Write the Lorenz curve for the week just finished to week<N>.csv,
// with one "percentile,share" row per whole percentile crossed.
function generateStats(week) {
  console.log('Start generating stats for week', week);
  const authors = Object.keys(payouts);
  authors.sort((a, b) => payouts[a] - payouts[b]); // lowest earners first
  const numberOfAuthors = authors.length;
  let csv = '';
  let peopleAccountedFor = 0;
  let rewardsAccountedFor = 0;
  let previousPercentile = 0;
  authors.forEach(author => {
    peopleAccountedFor++;
    rewardsAccountedFor += payouts[author];
    // Keep these as numbers; format them only when writing the row.
    const incomePercentile = 100 * peopleAccountedFor / numberOfAuthors;
    const fractionOfIncome = 100 * rewardsAccountedFor / payoutSum;
    if (incomePercentile >= previousPercentile + 1) {
      csv += incomePercentile.toFixed(2) + ',' + fractionOfIncome.toFixed(2) + '\n';
      previousPercentile = Math.floor(incomePercentile);
      console.log('Accounted for first', previousPercentile, 'percentile');
    }
  });
  if (csv !== '') {
    fs.writeFile('week' + week + '.csv', csv, (err) => {
      if (err) console.error(err); // report only real failures
    });
  }
}

// Walk backwards through posts by creation time, 50 at a time, and dump a
// CSV each time the walk crosses a week boundary.
// stopAge is currently unused; the loop stops once posts are three weeks old.
async function queryLoop(stopAge) {
  let weeksAgo = 0;
  const query = {
    tag: '',          // tag to query
    limit: 1,         // number of posts to return
    truncate_body: 1, // limit body of post to 1 char, since we don't need it
  };

  // Fetch the newest post, so we have a jumping-off point for the loop.
  const [newest] = await client.database.getDiscussions('created', query);
  const currentTime = new Date(newest.created);
  query.start_author = newest.author;
  query.start_permlink = newest.permlink;
  query.limit = 51; // 50 posts to process plus one that overlaps the next page

  let totalPostsQueried = 0;
  let previousWeek = 0;
  while (weeksAgo < 3) {
    const result = await client.database.getDiscussions('created', query);
    totalPostsQueried += 50;
    console.log('Processing results to', totalPostsQueried);
    result.forEach((post, index) => {
      if (index !== result.length - 1) {
        weeksAgo = (currentTime - new Date(post.created)) / oneWeek;
        if (Math.floor(weeksAgo) !== previousWeek) {
          // We crossed into a new week; dump stats for the previous week.
          generateStats(previousWeek);
          initializeCount();
          previousWeek = Math.floor(weeksAgo);
        }
        analyzePost(post);
      } else {
        // Skip processing the last element, since the next query will fetch it.
        query.start_author = post.author;
        query.start_permlink = post.permlink;
      }
    });
  }
}
```
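The week-boundary bookkeeping in the loop above is the part that's easiest to get wrong, so here is a rough sketch of how it could be pulled out into a function that works on already-fetched posts and can be checked without hitting the API. The name `bucketByWeek` and the sample posts are hypothetical; only the field names (`author`, `created`, `total_payout_value`) come from the script.

```javascript
// Sketch: group already-fetched posts into per-week, per-author reward totals.
// `bucketByWeek` and the sample data below are hypothetical.
const ONE_WEEK_MS = 7 * 24 * 60 * 60 * 1000;

function bucketByWeek(posts, now) {
  const weeks = new Map(); // week index -> Map(author -> summed payout)
  for (const post of posts) {
    const week = Math.floor((now - new Date(post.created)) / ONE_WEEK_MS);
    const amount = parseFloat(String(post.total_payout_value).split(/\s+/)[0]);
    if (!(amount > 0)) continue; // skip zero or unparseable payouts
    if (!weeks.has(week)) weeks.set(week, new Map());
    const authors = weeks.get(week);
    authors.set(post.author, (authors.get(post.author) || 0) + amount);
  }
  return weeks;
}

// Made-up posts; the explicit "Z" keeps the date arithmetic timezone-independent.
const now = new Date('2020-03-15T00:00:00Z');
const samplePosts = [
  { author: 'alice', created: '2020-03-14T00:00:00Z', total_payout_value: '0.000 SBD' },
  { author: 'alice', created: '2020-03-05T00:00:00Z', total_payout_value: '1.500 SBD' },
  { author: 'bob',   created: '2020-03-04T00:00:00Z', total_payout_value: '0.250 SBD' },
  { author: 'alice', created: '2020-03-03T00:00:00Z', total_payout_value: '0.500 SBD' },
  { author: 'carol', created: '2020-02-27T00:00:00Z', total_payout_value: '3.000 SBD' },
];
console.log(bucketByWeek(samplePosts, now));
```

Keeping every week in a `Map` instead of resetting globals at each boundary would also make it possible to compare weeks after the fact, at the cost of holding more data in memory.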

I think it's important to point out that the last couple of weeks have been – unusual, to put it mildly, compared to historical data in terms of post rewards and payouts for posting. With a lot of the big players actually weighing in on controversial subjects, you get a lot more SP moving in terms of rewarding their posting because they already have wide audiences who are interested in what they have to say (for good or ill). There's been a lot of attention on the platform to what movers and shakers have to say, so inevitably they are going to see more of the voting traffic going to their work than elsewhere.

Normally, this is where I would say that it would be more productive to compare last week's traffic to that of the same week a year ago. But circumstances are completely different now, and you can't use information gathered during a black swan event to make assessments and decisions, because there's no baseline.

That's not to say that this isn't interesting and important work, because it is. Even if looking at JavaScript makes me physically ill. It takes all kinds. It's a good first cut at getting information out of what is a complicated system. I despair of you ever getting anything useful out of trying to work out how the curation rewards impact things, but that's not your fault – that's the fault of them being completely mad.

When looking at systems like this, you are always going to see some sort of exponential curve. It's the nature of the beast. In particular with proof of stake systems, the more stake you have, the more reason there is to invest more stake as you come closer to having enough to determine governance decisions. That leaves aside how useful it is, in and of itself, to be able to direct portions of the reward pool. In these kinds of environments, the real question is how much of a change in that exponential curve do you see from period to period?

It might be helpful to do a log scale on the share percentages along the Y axis. Since we know it's going to be exponential upfront, the real question is how does it differ over the testing window and how much does it deviate from the given log base? Putting these curves on the same graph with a log scale on the vertical axis would make it easier to pull good information out of the presentation.
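As a rough sketch of that suggestion, the two weekly CSV files could be merged into one table with extra log10 columns, so both curves can go on a single chart. The helpers `parseCurve` and `mergeCurves` are hypothetical; the input format ("percentile,share" per line) is assumed to match what the script in the post writes. If a curve really were exponential, its log10 column would increase roughly linearly with the percentile.

```javascript
// Sketch: put two weekly curves side by side with log10-scaled shares.
// `parseCurve` and `mergeCurves` are hypothetical helpers.
function parseCurve(csvText) {
  const curve = new Map(); // whole percentile -> cumulative share of income (%)
  for (const line of csvText.trim().split('\n')) {
    const [percentile, share] = line.split(',').map(Number);
    curve.set(Math.floor(percentile), share);
  }
  return curve;
}

function mergeCurves(csvA, csvB) {
  const a = parseCurve(csvA);
  const b = parseCurve(csvB);
  // log10(0) is -Infinity, so leave the cell empty when the share is zero or missing.
  const logCell = (share) => (share > 0 ? Math.log10(share).toFixed(3) : '');
  const cell = (curve, p) => (curve.has(p) ? curve.get(p) : '');
  const rows = ['percentile,shareA,shareB,log10A,log10B'];
  for (let p = 1; p <= 100; p++) {
    if (!a.has(p) && !b.has(p)) continue; // neither week has a point here
    rows.push([p, cell(a, p), cell(b, p), logCell(a.get(p)), logCell(b.get(p))].join(','));
  }
  return rows.join('\n');
}

// Tiny made-up inputs in the same "percentile,share" format.
console.log(mergeCurves('50.00,5.00\n100.00,100.00\n', '50.00,0.00\n100.00,100.00\n'));
```

Leaving zero shares blank instead of plotting them is a design choice here. It also means a curve on a log axis only starts at the first percentile with a nonzero share.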

Good work.

Yes, the focus on recent weeks wasn't because I think that's the most interesting data, but just to keep things constrained while the project is in its early phases. Once I have more automation in the flow and more confidence that everything is working properly I'll have it look at a longer historical record.

I've never seen a curve like this on a nonlinear scale before, but I suppose it couldn't hurt to see if it helps make it easier to interpret the data. It's not clear to me that the curves should be exponential if we're just extrapolating from first principles, but from eyeballing these it does seem to be a good approximation. Here's what it looks like when I switch the charts from the first post to log scale on the Y axis.

This is a pretty good plan. It's also a really good stage to start thinking about what kind of tools you can use to present your findings in graphical form a little more clearly. Habits you build at this point will serve you really well going forward.

In particular, start looking at ways that you can put these curves on the same graph so that they can be visually compared in a very direct way. A different color for each curve and a slightly lower opacity for the lines, so that you can see where they cross, is a good start. Also, for logarithmic Y axes, you're going to really want more than one horizontal indicator bar so that the casual observer can see that the progression is logarithmic and tightening as it goes up. No more than eight or it gets really messy in this amount of space, but I don't find fewer than five to be quite as useful.

I was just reading an article the other day on graph presentation (though focusing on temporal series rather than snapshots, but you will want to be doing temporal series relatively soon if you keep going down this path) that was really helpful.

Looking at what we have right in front of us with these curves, even without laying them over one another we can see that the left side starts further to the right in the newer data but it still retains that interesting upward hook feature at the end, even with a logarithmic presentation. A cynical take might be that a lot of the accounts that were getting the smallest shares of income stopped posting between those periods – but since we don't know who those accounts are (and some of my poking around a couple of years ago suggests that there are a lot of very small post/reward collectors who aren't human at all), it's impossible to really interpret what that might mean.

Good stuff.

Thanks for sharing the knowledge :)