Lessons in Machine-Learnt Racism

It has come to my attention that a Chinese app (WeChat, you might have heard of it) is embroiled in a small scandal: it occasionally translates the Chinese phrase for “black foreigners” as “niggers”, which, for those who don’t know, is a very bad word that no English speaker should ever use. Of course, the Anglosphere media is quick to blame the Chinese for cultural insensitivity at best, blatant racism at worst. But is WeChat or Chinese culture to blame?

No!

We English speakers have only ourselves to blame! Like children, artificial intelligence learns through imitation and pattern recognition. Like children, computers and algorithms come into the world with a blank slate. If a child does something bad, the parents are to blame. If an algorithm does something bad, however, the programmers are not necessarily to blame.

HATE IS NOT INNATE, HATE IS LEARNED

人之初,性本善;性相近,習相遠。

The nature of humanity is kindness;

But nurture creates differences.

English and Chinese are both primary and second languages to me for different reasons, but one thing is common: I had no exposure to bad words in either language due to my environment growing up. Eventually, I had to “learn” that “Chinaman” and “Chink” are hateful and racist terms for Chinese people. Logically, “Chinaman” makes sense as the literal character-for-character translation of 中國人 “Chinese person”, while “Chink” sounds like a natural shortening of the word Chinese. In other words, the negative connotations and the baggage of hate had to be learnt.

And what does this have to do with the word “Nigger” and its variant “Negro”?

These are also logical or natural language evolutions for a descriptive word for a black person. Languages are massively multiplayer decentralised systems. In a parallel universe, “Nigger” might be a standard or respectful way of addressing black people. But in our current universe these terms became weaponised by those propagating hate, and have never been, or can never be, deweaponised.

And why is this relevant to the topic at hand? Prejudice and intolerance are unfortunately universal human traits, and discrimination by social status, or classism, is the most prominent form. The traditional worldview of the Chinese was that people were either cultured (化內) or barbarians, i.e. uncultured (化外), where being cultured is defined as adhering to traditional Chinese culture (華夏文化). Discrimination occurred between the “Chinese” (itself an ever-fluctuating concept) and the barbarians, who were of a lower social class and status. However, unlike the West (especially the United States of America), China does not have a tradition of considering black people as subhuman. The problem is that China ironically needs to learn how to be racist in order to avoid being racist.

LEARNING DATA SETS HAVE BIAS

Garbage in, garbage out.

China is paradoxically both an inward- and outward-looking nation. While fiercely proud of its own culture, China has also absorbed and appropriated many foreign aspects throughout the millennia. Having been humbled by over a century of humiliation, China looks to the West for guidance in modernisation. However, the issue is that cultural baggage gets lost in translation, and that can be unintentionally hurtful in a globalised society, especially in situations like this.

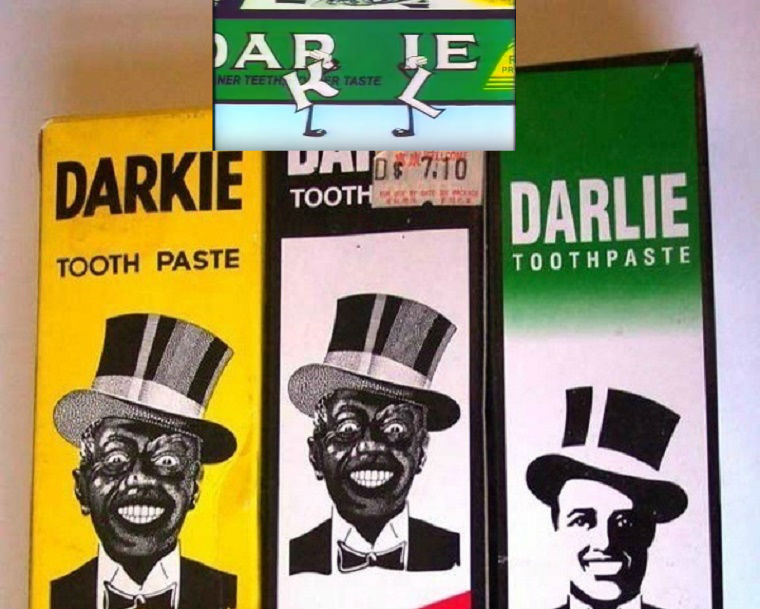

The Chinese are very practical folk. A black-skinned person is a black foreigner, or a negro (derived from Latin), or a nigger (a derivative of the Latin term). These words carry no connotation for the Chinese. In fact, the most popular brand of toothpaste in China is 黑人牙膏, which practically translates as Negro Toothpaste, because the idea is that the product makes your teeth as white as the teeth on black people. It is a compliment, albeit a strange one, but consider that Chinese people will tell you to your face that you are fat if you’re overweight, and still mean well, because being fat means being well-off, i.e. not starving like a peasant. But the point is that China does not have malice in calling black people by names that are inappropriate in the West, because the cultures are fundamentally different. The blackface character of Negro Toothpaste is now considered offensive in the West, but the Chinese think: oh, that’s a logical and widespread representation of black people in the West.

This brand used to be called Darkie, but now it's known as Darlie.

As long as the West continues to portray black people (and other minorities in general) in biased ways, the rest of the world (including China) will inherit these biases and unwittingly normalise them. I don’t work at Tencent, but it is very likely that WeChat got its training data from the English-speaking internet. If that world is full of racist trolls, is the WeChat team (who have few if any US-American English speakers among them) really to blame for inadvertently creating a racist troll?

Ironically, one could say that the algorithm worked perfectly: if you’re trying to insult a person by calling them a thief, throwing in some racist language is strangely ‘appropriate’. If that disturbs you, do not blame the machine, or the programmers, or the culture of the people that programmed the machines; blame the source, which is their training data, i.e. we English speakers of the West who provide this training data, and our entertainment and media that reinforce our behaviours, subliminally or otherwise. Blame yourself for your choice of words, your habits, your actions.

CHANGE THE MACHINE OR CHANGE OURSELVES?

Be the change you want to see in the world

At the end of the day, we must ask ourselves what we want: a gentle, compassionate, harmonious society or a cold, practical, impartial one. Do we want to propagate a culture of respect for self and respect for others, or is freedom of expression a sacrosanct human right? There is no right answer: on one side of the spectrum is Japan, a country with strict and complex social protocols, and on the other side is the USA, a country that is fiercely individualistic. And as long as we do not tend to the extremes, the two values are not mutually exclusive.

What is clear is that there is no place for racism in a gentle, compassionate, harmonious society. Civility begins with us. We choose the words we use. We can choose to not use those bad words, and we can actively stigmatise those who use those bad words. Rather than washing our hands of our sins, passing the blame to others, we must acknowledge our part and help machines learn without indoctrinating them with the hateful baggage of humanity.

The nature of humanity is kindness; but nurture creates differences.

Good article. Our machine learning algorithms have no concept of words that are intended more to cause injury and emotional distress than to actually communicate. The math models are constructed from a corpus of human-created text, and words will be chosen later by the model/algorithm if they appeared with any frequency in the corpus.
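To make the frequency point concrete, here is a minimal sketch. It is not how WeChat or any real translation system works; it assumes a toy model that simply picks whichever translation it saw most often. The corpus, the placeholder strings and the function names are all hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus of (source phrase, translation seen in the wild).
# The strings are placeholders, not real data.
toy_corpus = [
    ("phrase_a", "<neutral-term>"),
    ("phrase_a", "<offensive-term>"),
    ("phrase_a", "<offensive-term>"),
    ("phrase_b", "<other-term>"),
]


def build_table(pairs):
    """Count how often each translation was observed for each source phrase."""
    table = defaultdict(Counter)
    for source, target in pairs:
        table[source][target] += 1
    return table


def translate(table, source):
    """Return the most frequently observed translation, or None if unseen."""
    candidates = table.get(source)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


table = build_table(toy_corpus)
print(translate(table, "phrase_a"))  # the skewed corpus wins: <offensive-term>
```

Nothing in this sketch knows which output is hurtful; it only knows which output was common, so the bias of the corpus passes straight through.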

The lesson is that we shouldn't use populist communication channels (like Twitter and Facebook) to create a training corpus. There are too many people in the world who fall into low-brow behavior, and their low thoughts are infecting social media. Of course, it doesn't help that the social media companies have been utterly irresponsible in not guarding against abusive language, wrapping themselves instead in the complacency of greed.

You might find my latest blog post of interest, because it addresses the lack of transparency in big data, and how biased data collection is causing problems.