GPT-4: What is it and how does it work?

GPT-4 is the latest large multimodal model from OpenAI, and it's able to generate text from both text and image inputs. OpenAI is the company behind ChatGPT and DALL-E, and its primary research focus is, you guessed it, artificial intelligence. Today, we're going to talk about GPT specifically: what it does, and how it contributes to a growing industry that also includes ChatGPT, Bing Chat, and Google Bard.

GPT stands for Generative Pre-Trained Transformer, and GPT-4 is its fourth iteration. To understand what that means, we can break down each component individually:

Generative: It generates text.

Pre-Trained: The model is first trained on a large set of data so it can learn patterns and make predictions.

Transformer: A type of model that tracks relationships in sequential data (like the words in a sentence), which lets it learn context.
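To make the "Transformer" idea a little more concrete, here is a minimal sketch of scaled dot-product self-attention, the operation Transformers use to let each word weigh its relationship to every other word in a sequence. OpenAI has not published GPT-4's architectural details, so treat this as an illustration of the general technique, not GPT-4's actual implementation. The two-number word vectors are made up, and real models learn separate query, key, and value projections, which this sketch skips by reusing the same vectors for all three.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence.

    Each argument is a list of vectors (one per token). Returns one output
    vector per token (a weighted average of all value vectors) plus the
    attention weights that produced it.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How well this token's query matches every token's key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Blend the value vectors according to the attention weights.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs, all_weights

# Hypothetical 2-d vectors for a 3-token sentence (illustration only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]]
out, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row sums to 1 and shows how strongly one token attends to each token in the sequence. The first token attends equally to itself and to the third token because both give the same dot product with its query.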

In essence, it's software that learns how text is structured by studying large amounts of data, then generates new text in response to a prompt by predicting, one piece at a time, what is most likely to come next.
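The "learn patterns, then predict what comes next" loop can be illustrated with a deliberately tiny toy: a bigram model that counts which word follows which in a training text, then extends a prompt by repeatedly picking the most frequent next word. This is a heavy simplification, not how GPT-4 works internally. GPT-4 predicts subword tokens using a large Transformer network trained on vastly more data. The corpus and function names below are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """'Pre-train' by counting which word follows which in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, prompt, max_words=5):
    """Extend a non-empty prompt one word at a time, always choosing the most frequent follower."""
    output = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)

corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(generate(model, "the dog", max_words=4))  # prints: the dog sat on the cat
```

The result is fluent but wrong (the training text never has a dog sitting on a cat), a small-scale version of the "sounds plausible, isn't true" problem covered in the limitations section below.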

How does GPT-4 improve on GPT-3.5?

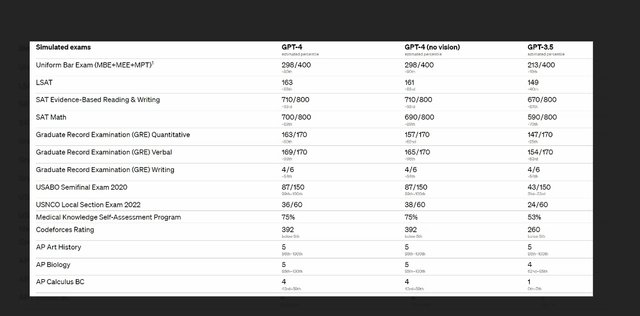

OpenAI launched GPT-4 on March 14, 2023. According to OpenAI, there are a few key ways that GPT-4 improves on its predecessor. It can accept visual prompts to generate text and, interestingly, scores much higher on the Uniform Bar Exam than the GPT-3.5 model behind ChatGPT did (GPT-4 scored around the top 10% of test takers, while GPT-3.5 scored around the bottom 10%). It's also more creative and has a greater focus on safety and on preventing the spread of misinformation.

There are other performance improvements according to the company's research, including better results on LeetCode problems, Advanced Placement (AP) exams, and the SAT.

What can GPT-4 be used for?

GPT-4 is just the language model and is not synonymous with services such as ChatGPT or Bing Chat. As a result, it can be integrated into many existing systems as well as ones currently in development. From customer support lines to machine translation, almost anything that makes use of text could benefit from GPT-4. In OpenAI's own technical report on GPT-4, the authors also note that GPT-4 helped them copyedit the report, summarize text, and improve their LaTeX formatting.

Currently, GPT-4 powers both Bing Chat and the version of ChatGPT that's only accessible with a ChatGPT Plus subscription. It is also available through a Duolingo Max subscription. GPT-4 can be a scary prospect for a lot of industries because of just how much it can do when given a prompt. It has the potential to replace multiple jobs and improve the workflow in others. It can copyedit, help people program, and I've even seen it create weight loss programs. Asking it real questions will generally net you real answers, though you should still treat it as a guide and fact-check the information it gives you.

Where GPT-4 really excels is in its reasoning capabilities. If you have to schedule a meeting and are given availability by multiple people, you can have GPT-4 find times that work for everybody by feeding it the available times. It's a simple task that people have always been able to do themselves, but it shows off GPT-4's reasoning and logical capabilities.
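Because scheduling is a well-defined task, it's also easy to double-check GPT-4's answer yourself. The sketch below intersects everyone's free time deterministically. It assumes each person's availability is a list of non-overlapping (start, end) ranges in minutes since midnight; the helper names and sample schedules are hypothetical.

```python
def common_slots(availabilities):
    """Return the time ranges when everyone is free.

    availabilities: one list per person of non-overlapping (start, end)
    pairs in minutes since midnight, with end > start.
    """
    common = sorted(availabilities[0])
    for person in availabilities[1:]:
        person_slots = sorted(person)
        merged = []
        for a_start, a_end in common:
            for b_start, b_end in person_slots:
                # The overlap of two ranges runs from the later start to the earlier end.
                start, end = max(a_start, b_start), min(a_end, b_end)
                if start < end:  # keep only overlaps with positive length
                    merged.append((start, end))
        common = sorted(merged)
    return common

def hm(s):
    """Convert 'HH:MM' to minutes since midnight."""
    h, m = map(int, s.split(":"))
    return h * 60 + m

def fmt(minutes):
    """Convert minutes since midnight back to 'HH:MM'."""
    return f"{minutes // 60:02d}:{minutes % 60:02d}"

alice = [(hm("09:00"), hm("11:00")), (hm("14:00"), hm("16:00"))]
bob = [(hm("10:00"), hm("15:00"))]
carol = [(hm("09:30"), hm("10:30")), (hm("14:30"), hm("17:00"))]
for start, end in common_slots([alice, bob, carol]):
    print(fmt(start), "-", fmt(end))  # prints 10:00 - 10:30, then 14:30 - 15:00
```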

Others have also been using GPT as an accessibility tool. People with dyslexia can use it to help them write emails or proofread their existing written content. There are so many applications for it, but it's not perfect and has many limitations.

What are the limitations of GPT-4?

GPT-4 is not infallible; in fact, even OpenAI states that GPT-4 sometimes suffers from "hallucinations," which is just a way of saying that it generates false information. OpenAI also cautions that "care should be taken when using the outputs of GPT-4, particularly in contexts where reliability is important."

OpenAI is also open about the risk that users could come to over-rely on GPT-4 because of its abilities. As the technical report details, GPT-4 "maintains a tendency to make up facts, to double-down on incorrect information, and to perform tasks incorrectly." It goes on to note that GPT-4 can do this while sounding more convincing and believable than earlier GPT models, thanks to an authoritative tone and its improved accuracy in other areas. On top of that, GPT-4 can amplify biases and perpetuate stereotypes, reinforcing existing social biases and worldviews.

What's the future of GPT?

OpenAI intends to iterate and improve on GPT-4 following its deployment as more issues are identified, and there are a few steps that the company has already committed to taking. These include:

Adopt layers of mitigation in the model system: As AI models become more capable and are adopted across more industries, having multiple lines of defense is critical.

Build evaluations, mitigations, and approach deployment with real-world usage in mind: Learning who the users are and what these tools will be used for is vital to mitigating any potential harm. It's especially important to account for the human element in deploying these tools and for real-world vulnerabilities.

Ensure that safety assessments cover emergent risks: As models become more capable, preparing for complex interactions and unforeseen risks becomes more important.

Plan for unforeseen capability jumps: Because AI is such a fast-moving field and we don't fully understand everything that goes on in the "mind" of a trained AI model, small changes could lead to unexpected jumps in capability. This should be accounted for.

To put it simply, OpenAI is continuing to build on GPT-4 to evolve the technology. It has the potential to be useful in a lot of applications, but it isn't perfect.