The role of History Nodes in the COTI network

Abstract

- The launch of COTI’s History Nodes is a major milestone that enables users to run Full Nodes in the COTI network.

- COTI’s History Nodes provide reliability, decentralization, and usability.

- The History Nodes maintain all aspects of transaction data storage and retrieval in the COTI network.

- History Nodes consist of both hot and cold clusters. While the hot cluster handles the majority of network requests, the cold cluster serves as backup.

- In Q3 2019, we commenced work on the first implementation of our History Nodes. They are currently live in TestNet and will be implemented in the MainNet by the end of October 2019.

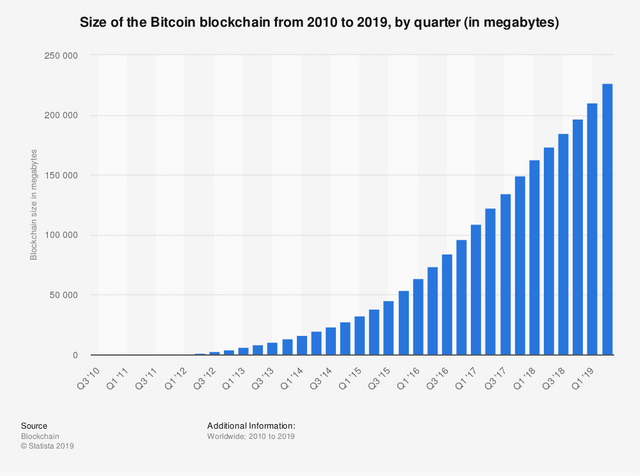

Successful payment systems are equipped to process approximately one billion transactions per year. In the cryptocurrency world, the average transaction size is 0.2–5.0 KB, depending on the system type. This means that after one year, a successful cryptocurrency payment system will have roughly 0.2 to 5 terabytes of transaction data to store.

For example, Bitcoin’s full node data size exceeds 230 gigabytes, although Bitcoin handles almost 100 times fewer operations than payment systems like PayPal, Amazon Pay, and Visa.
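The storage estimate for a payment system can be reproduced with a short calculation. This is a back-of-the-envelope sketch only: the transaction count and size range come from the text, while the decimal unit conversion (1 TB = 10^9 KB) and the function name are assumptions made for illustration.

```python
# Rough yearly storage estimate for a payment system.
TX_PER_YEAR = 1_000_000_000      # about one billion transactions per year (from the text)
TX_SIZE_KB_RANGE = (0.2, 5.0)    # average transaction size range in KB (from the text)
KB_PER_TB = 1_000_000_000        # assumption: decimal units, 1 TB = 10^9 KB


def yearly_storage_tb(tx_per_year: int, tx_size_kb: float) -> float:
    """Return the raw transaction data volume for one year, in terabytes."""
    return tx_per_year * tx_size_kb / KB_PER_TB


low, high = (yearly_storage_tb(TX_PER_YEAR, size) for size in TX_SIZE_KB_RANGE)
print(f"Estimated yearly storage: {low:.1f} TB to {high:.1f} TB")
```

The figure covers raw transaction data only; indexes and replicas would add to it.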

Cryptocurrency systems address this problem in various ways:

- Classic PoW systems like Bitcoin currently do not offer any solution because they are too slow.

- PoS systems resort to sharding, that is, splitting a global blockchain into many small shards.

- BFT-like systems usually archive old ‘epochs’. In classic “Practical Byzantine Fault Tolerance,” as defined by Miguel Castro and Barbara Liskov, this process is known as ‘garbage collection’, and the protocol only deals with current epochs or ledgers (e.g., Ripple and Stellar). Facebook’s Libra, for instance, does not address this issue in its current white paper.

COTI’s History Node solution

COTI’s History Node solution offers a number of unique features, including reliability, decentralization, and usability.

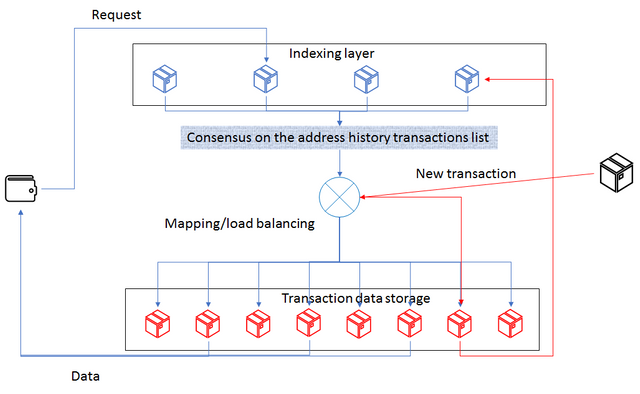

COTI History Nodes maintain all aspects of transaction data storage and retrieval in the COTI network. This service can be provided by a single powerful node using industrial-grade servers, although distributed solutions like Elasticsearch offer better reliability.

Main use cases for History Nodes:

- Retrieval of operation history for an address, optionally filtered by date (requests are signed by the address’ private key)

- Retrieval of one transaction by hash (signing not required)

- Generation of user address requests (requests are signed by the user’s private key)

- Retrieval of transactions by date
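The retrieval use cases above can be sketched as a small in-memory index. This is an illustration under stated assumptions, not COTI's implementation: `Transaction`, `HistoryIndex`, and the `verify_signature` callback are hypothetical names, and the callback stands in for real verification of a request signed by the address' private key.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class Transaction:
    tx_hash: str
    address: str
    day: date
    amount: int


class HistoryIndex:
    """Hypothetical in-memory stand-in for a History Node's query layer."""

    def __init__(self, verify_signature: Callable[[str, str], bool]):
        # verify_signature(address, signature) is an assumed callback that
        # replaces real public-key signature verification in this sketch.
        self._verify = verify_signature
        self._by_hash: Dict[str, Transaction] = {}
        self._by_address: Dict[str, List[Transaction]] = {}

    def add(self, tx: Transaction) -> None:
        self._by_hash[tx.tx_hash] = tx
        self._by_address.setdefault(tx.address, []).append(tx)

    def get_by_hash(self, tx_hash: str) -> Optional[Transaction]:
        """Lookup by hash; no signature is required."""
        return self._by_hash.get(tx_hash)

    def get_by_address(self, address: str, signature: str,
                       start: Optional[date] = None,
                       end: Optional[date] = None) -> List[Transaction]:
        """Signed lookup of an address' history, optionally filtered by date."""
        if not self._verify(address, signature):
            raise PermissionError("request is not signed by the address owner")
        return [tx for tx in self._by_address.get(address, [])
                if (start is None or tx.day >= start)
                and (end is None or tx.day <= end)]

    def get_by_date(self, day: date) -> List[Transaction]:
        """Lookup of all transactions recorded on a given date."""
        return [tx for tx in self._by_hash.values() if tx.day == day]
```

A production node would back these lookups with a search index rather than Python dictionaries; the point here is only which requests need a signature and which do not.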

Designing reliable History Nodes presents a set of challenges. The following features will be provided:

Without taking maliciousness into consideration:

- Mapping of each snapshot’s shards into various History Node segments

- Identification of inaccessible History Nodes over time

- Replication of lost and inaccessible segments from History Nodes

- Assignment of new data from current snapshots to free segments in History Nodes
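The non-malicious requirements above (mapping shards to segments, spotting inaccessible nodes, and re-replicating lost segments) can be sketched as follows. The round-robin placement, the replication factor of two, and the function names are assumptions chosen for illustration; the source does not specify the actual placement strategy.

```python
from typing import Dict, List, Set


def assign_shards(shards: List[str], nodes: List[str],
                  replicas: int = 2) -> Dict[str, List[str]]:
    """Place each shard on `replicas` distinct nodes, round-robin."""
    if replicas > len(nodes):
        raise ValueError("not enough nodes for the requested replication")
    return {
        shard: [nodes[(i + k) % len(nodes)] for k in range(replicas)]
        for i, shard in enumerate(shards)
    }


def re_replicate(placement: Dict[str, List[str]], alive: Set[str],
                 replicas: int = 2) -> Dict[str, List[str]]:
    """Drop inaccessible nodes and top each shard back up where possible."""
    repaired: Dict[str, List[str]] = {}
    for shard, holders in placement.items():
        kept = [node for node in holders if node in alive]
        if not kept:
            # Every copy is gone; only an external backup could restore it.
            raise RuntimeError(f"all replicas of shard {shard!r} are lost")
        spare = sorted(alive - set(kept))
        missing = max(0, replicas - len(kept))
        repaired[shard] = kept + spare[:missing]
    return repaired
```

For example, with three nodes and one of them unreachable, every shard that lost a copy is reassigned to one of the two surviving nodes, provided at least one replica remains.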

Taking maliciousness into consideration:

- Assignment of active masters between available History Nodes

- Confirmation of correct mapping between shards and History Node segments

- Determination of the validity of retrieved data and History Node segments

- Verification of replicated data correctness

- Identification and removal of corrupted data and retrieval of the original backup to replace it
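One common way to detect corrupted data and restore it from a backup is to compare each segment against a previously recorded digest. The sketch below assumes SHA-256 digests and a simple dictionary layout; the names `verify_and_repair`, `segments`, `expected_digests`, and `backup` are hypothetical, and the source does not state which integrity mechanism COTI uses.

```python
import hashlib
from typing import Dict, List


def digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of a segment's bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_and_repair(segments: Dict[str, bytes],
                      expected_digests: Dict[str, str],
                      backup: Dict[str, bytes]) -> List[str]:
    """Replace missing or corrupted segments with verified backup copies.

    Returns the IDs of the segments that were repaired.
    """
    repaired: List[str] = []
    for seg_id, expected in expected_digests.items():
        intact = seg_id in segments and digest(segments[seg_id]) == expected
        if intact:
            continue
        original = backup[seg_id]
        # The backup is checked too, so a corrupted copy is never restored.
        if digest(original) != expected:
            raise ValueError(f"backup of segment {seg_id!r} is also corrupted")
        segments[seg_id] = original
        repaired.append(seg_id)
    return repaired
```

The same check applies to replicated data: a replica is accepted only if its digest matches the recorded one.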

At the network maturity stage, COTI History Nodes will use a consensus mechanism to meet all the above requirements.

Elasticsearch integration:

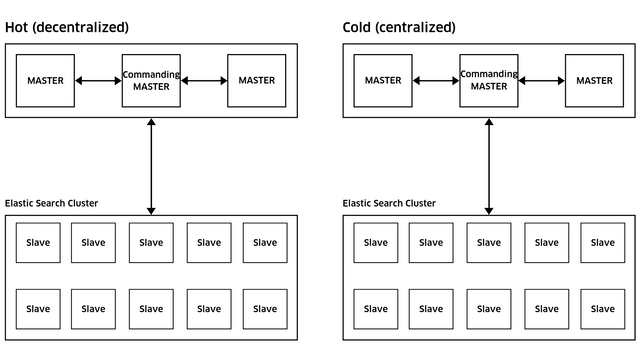

- In the Elasticsearch model, COTI History Nodes are all masters, while Elasticsearch cluster servers are slaves.

- There will be several Master History Nodes (let us assume three for now). At any given time, one active Master History Node executes all write operations, while the others handle read-only operations. In the Master History Node, data is verified using a PBFT-like consensus protocol. Master History Nodes also hold copies of DAG transactions in a RocksDB database. This is required so that other nodes can verify incoming DAG-based transactions.

- The Master History Node will be elected and changed from time to time using a PBFT-like protocol. The ClusterStamp is written into Elasticsearch storage by the Master History Node.

- The Elasticsearch cluster consists of slave servers responsible for storing the data received from the Master History Nodes.
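The single-writer arrangement described above can be sketched as a small class. This is a simplified illustration: the round-robin `rotate_master` method is only a stand-in for the PBFT-like election, the `store` list stands in for the Elasticsearch cluster, and all names are hypothetical.

```python
from typing import List


class HistoryNodeCluster:
    """Toy model: one active master writes, every node may read."""

    def __init__(self, node_ids: List[str]):
        if not node_ids:
            raise ValueError("at least one History Node is required")
        self.node_ids = list(node_ids)
        self.term = 0                 # incremented on every master change
        self.store: List[str] = []    # stand-in for Elasticsearch storage

    @property
    def active_master(self) -> str:
        return self.node_ids[self.term % len(self.node_ids)]

    def write(self, node_id: str, record: str) -> None:
        """Accept a write only from the currently active master."""
        if node_id != self.active_master:
            raise PermissionError(f"{node_id} is read-only in term {self.term}")
        self.store.append(record)

    def read(self, node_id: str) -> List[str]:
        """Any known node may serve reads."""
        if node_id not in self.node_ids:
            raise KeyError(node_id)
        return list(self.store)

    def rotate_master(self) -> str:
        """Assumption: round-robin rotation replaces the real election."""
        self.term += 1
        return self.active_master
```

Routing all writes through one node keeps the stored data consistent, while reads can be spread across every node.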

History Nodes can be classified into two clusters:

- The Hot Cluster is depicted in the first row. It is decentralized and handles the bulk of network requests. This cluster is elastic and scales according to demand.

- The Cold Cluster is depicted in the second row. It is centralized and adds a further level of reliability. If the Hot Cluster fails, the Cold Cluster prevents any potential data loss.

The launch of COTI’s History Nodes is a major milestone that enables users to run Full Nodes in the COTI network. They are currently live in TestNet and will be implemented in the MainNet by the end of October 2019. Join us in our Telegram group for all future updates and tech developments.

COTI Resources

Website: https://coti.io

Github: https://github.com/coti-io

Technical whitepaper: https://coti.io/files/COTI-technical-whitepaper.pdf

Full Nodes and Staking Model: https://medium.com/cotinetwork/coti-opens-full-node-registration-and-reveals-staking-model-800bfcfdbe8a