Blocksize Consensus

As we near the close of 2016 (year of the Monkey) there is a growing awareness in Bitcoin of how we can solve the question of block size limits for many years to come. Please see here for an overview of this.

The idea is that we can move from a centrally dictated limit to one where the various market participants can again set the size in an open manner. The benefit is clear: we remove any chance of non-market participants having control over something as influential as the block size.

A good way to look at this is that the only ones that can now decide on the size are the ones that have invested significant value, believing in an outcome that is best for Bitcoin as a whole.

The credit goes to Andrew Stone for coming up with this concept of building consensus. He also published another, intertwined, idea which is the Acceptable Depth (AD) concept.

If the open market for block sizes is still making people uncomfortable, the AD concept is causing pain. The concept has had many detractors, and the way to handle complaints and attack scenarios has been to add more complexity to it. The introduction of something called the "sticky gate", which was created with no peer feedback, is a good example: there is now an official request from stakeholders asking for it to be removed again. The author's response to that request was not to remove it, but to suggest yet more complexity.

I really like the initial idea where the market decides on the block size. This is implemented in Bitcoin Classic and I fully support it.

The additional idea of "Acceptable Depth (AD)" had too many problems, so I set out to find a different solution. This part of Andrew's solution is not a consensus parameter: any client can have its own solution without harming compatibility between clients.

What is the problem?

In the Bitcoin reality where any full node can determine the blocksize limits we expect a slowly growing block size based on how many people are creating fee-paying transactions.

A user running a full node may have left his block size limit a little below the size that miners currently create. If such a larger-than-limit block comes in, it will be rejected. Any blocks extending that chain will also be rejected and this means the node will not recover until the user adjusts the size limits configuration.

It would be preferable to have a slightly more flexible view of consensus where we still punish blocks that are outside one of our limits, but not to the extent that we reject reality if the miners disagree with us. Or, in other words, we honour that the miners decide the block size.

In the original "AD" suggestion this was done by simply requiring 5 blocks on a chain when the first is over our limits. Once that number of blocks has been seen to extend the too-big one, we have to accept that the rest of the world disagrees with our limits.

This causes a number of issues:

- It ignores the actual size of the violating block. It is treated the same whether it is 1 byte too large or several megabytes too large. This opens up attacks from malicious miners.

- There is no history kept of violating blocks. We look at individual violating blocks in isolation, making bypassing the rules easier.
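The first of these issues is easy to see in a minimal Python sketch of the depth-only rule. This is a simplification of AD as described in this post, not the code of any client; the names `ad_accepts` and `ACCEPTABLE_DEPTH` are made up for illustration.

```python
ACCEPTABLE_DEPTH = 5  # the depth quoted above; hypothetical constant name


def ad_accepts(block_size: int, limit: int, blocks_on_top: int,
               acceptable_depth: int = ACCEPTABLE_DEPTH) -> bool:
    """Return True if a block would be accepted under a depth-only rule."""
    if block_size <= limit:
        return True  # within our limit, nothing special happens
    # Only the depth is consulted. How far block_size exceeds the limit
    # plays no role in the decision.
    return blocks_on_top >= acceptable_depth


# One byte over and seven megabytes over are treated identically.
print(ad_accepts(1_000_001, 1_000_000, blocks_on_top=5))
print(ad_accepts(8_000_000, 1_000_000, blocks_on_top=5))
```

Both calls give the same answer, which is exactly the lack of proportionality described in the first bullet.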

Solving it simpler

Here I propose a much simpler solution that I have been researching for a month. To better understand its effects I wrote a simulation application that runs many different scenarios.

I introduce two rules:

- Any block violating local limits needs at least one block on top before it can be added to the chain.

- Calculate a punishment-score based on how much this block violates our limits. The more a block goes over our limit, the higher the punishment.

How does this work?

In Bitcoin any block already has a score (GetBlockProof()). This score is based on the amount of work that went into the creation of the block. We additionally add up all the blocks' scores to get a chain-score.

This is how Bitcoin currently decides which chain is the main chain: the one with the highest chain-score.
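As a rough illustration of that scoring, here is a small Python sketch. It assumes the block score is the expected number of hashes for a given target, 2^256 / (target + 1), which is my understanding of what GetBlockProof() computes. The helper names `block_proof`, `chain_score` and `best_chain` are invented for this example, and a chain is represented simply as a list of targets.

```python
def block_proof(target: int) -> int:
    """Expected number of hashes needed to find a block at this target."""
    return 2**256 // (target + 1)


def chain_score(targets) -> int:
    """Sum of the block scores of every block in the chain."""
    return sum(block_proof(t) for t in targets)


def best_chain(chains):
    """Pick the chain with the highest chain-score."""
    return max(chains, key=chain_score)


easy = 2**255 - 1   # high target, little work per block
hard = 2**254 - 1   # lower target, twice the work per block

# Three easy blocks carry less total work than two hard ones,
# so the shorter chain is the main chain here.
winner = best_chain([[easy] * 3, [hard] * 2])
print(len(winner), chain_score(winner))
```

The point of the sketch is that the main chain is chosen by accumulated work, not by the number of blocks, which is what makes it possible to subtract a punishment from that total.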

Should a block come in that is over our block size limit, we calculate a punishment. The punishment is a multiplier against the proof of work of that block. A block that is 10% over the limit gets a punishment score of 1.5. The effect of adding this block to a chain is that it adds the block's proof of work, then subtracts 1.5 times that amount from the chain again. The net result is that adding this block removes 50% of the latest block's proof-of-work value from that chain.

The node will thus take as the 'main' chain-tip the block from before the oversized one was added. But in this example, if a miner appends 1 correct block on top of this oversized one, that will again be the main chain.
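The example with a punishment of 1.5 can be worked through in a short Python sketch. It assumes every block carries the same proof of work (1.0 unit) and only compares positions along a single chain, which is a simplification of how a real node compares competing tips. The function names are hypothetical.

```python
from itertools import accumulate


def cumulative_scores(blocks):
    """blocks is a list of (proof_of_work, punishment) pairs.

    Each block adds its proof of work and then subtracts
    punishment * proof_of_work. In-limit blocks have punishment 0.
    """
    return list(accumulate(pow_ - pen * pow_ for pow_, pen in blocks))


def best_tip(blocks):
    """Index of the block with the highest chain-score so far."""
    scores = cumulative_scores(blocks)
    return max(range(len(scores)), key=lambda i: scores[i])


chain = [(1.0, 0.0), (1.0, 0.0), (1.0, 0.0)]   # three normal blocks
chain.append((1.0, 1.5))                        # block 10% over the limit
print(cumulative_scores(chain), best_tip(chain))

chain.append((1.0, 0.0))                        # a correct block on top
print(cumulative_scores(chain), best_tip(chain))
```

After the oversized block the scores are 1.0, 2.0, 3.0, 2.5, so the best tip is still the block before it. One correct block on top lifts the score to 3.5 and the tip moves to the end of the chain.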

Should a block come in that is much further over the size limit, the number of blocks miners have to build on top of it before the large block is accepted is much larger. A block that is 50% over the limit takes an additional 6 blocks.

The effect of this idea can be explained simply:

First, we make the system more analogue instead of black/white. A node that has to choose between two blocks that are both oversized will choose the smaller one.

Second, we make the system much more responsive. If a block at height N violates our rules only a tiny bit and some other miner adds block N+1 on top of it, we can continue mining at block N+2 already.

On the other hand, an excessive block takes several more blocks on top of it to get accepted by us. So it's still safe.

Last, we remove any attack vectors where the attacker creates good blocks to mask the bad ones. This is no longer possible because the negative score that a too big block creates will stay with this chain.

Visualising it

This proposal is about a node recovering when consensus has not been reached. So I feel OK about introducing some magic numbers and a curve that in practice gives good results in various use cases. This can always be changed in a later update because this is a node-local decision.

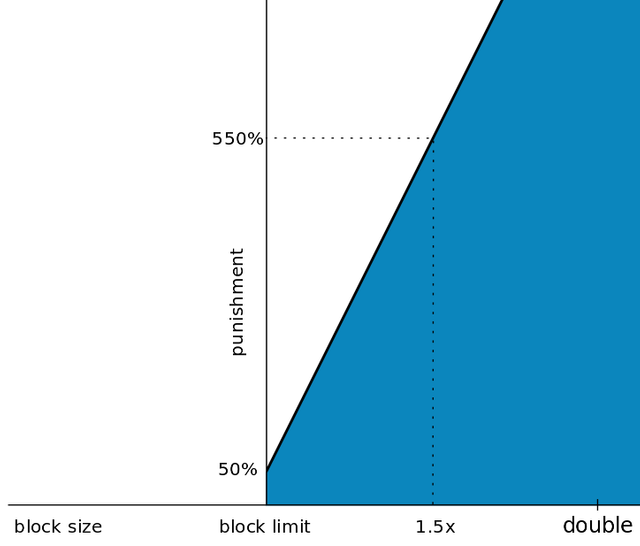

On the horizontal axis we have the block size. If the size is below our limit, there is no punishment. As soon as it gets over the block limit, there is a jump and we institute a punishment of 50%. This means that it only adds 50% of the proof-of-work score of the new block.

If the block size goes further over the limit, this punishment grows very fast, taking 6 blocks to accept a block that is 150% of our configured block size limit.

The punishment is based on a percentage of the block size limit itself, which ensures this scales up nicely when Bitcoin grows its acceptable block size. For example, a 2.2MB block on a 2MB limit is 10% over the limit. We add a factor and an offset, making the formula a simple factor * punishment + 0.5, where punishment is the fraction by which the block exceeds the limit (0.1 in this example).

The default factor shown in the graph is 10, but this is something a user can override should the network need this.
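The curve described above fits in a few lines of Python. This is only a sketch of the formula as stated in this post; the function name `punishment` and the parameter names `factor` and `offset` are chosen for illustration and do not come from any client's source.

```python
def punishment(block_size: int, limit: int,
               factor: float = 10.0, offset: float = 0.5) -> float:
    """Punishment multiplier for a block, relative to our local limit."""
    if block_size <= limit:
        return 0.0  # blocks within the limit are never punished
    # Fraction by which the block exceeds the limit, e.g. 0.1 for 10% over.
    over = (block_size - limit) / limit
    return factor * over + offset


for size in (2_000_000, 2_000_001, 2_200_000, 3_000_000):
    print(size, punishment(size, limit=2_000_000))
```

With the default factor of 10, a 2.2MB block on a 2MB limit gets a punishment of 1.5 and a 3MB block gets 5.5, while a block one byte over the limit lands just above the 0.5 jump. Because the score depends on the size, the smaller of two oversized blocks always receives the lower punishment.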

Conclusion

Using a simple-to-calculate punishment and the knowledge that Bitcoin already calculates a score for the chain based on the proof-of-work of each block, we can create a small and simple way to find out how much the rest of the world disagrees with our local world-view, expressed as an analogue, real-world measurement.

Nodes can treat the size limits as a soft limit which can be overruled by mining hash power adding more proof of work to a chain. The larger the difference in block size and limit, the more proof of work it takes.

Miners will try to mine more correct chains, inside of the limits, fixing the problem over time, but won't waste too much hash power pushing out one slightly oversized block in a chain.

This is not meant as a permanent solution for nodes, and full node operators are not excused from updating their limits. On the other hand a node that is not maintained regularly will still be following Bitcoin, but it will trail behind the main chain by a block.