THE BIRTH OF BIG DATA AND THE REVOLUTION OF ANALYTICS

Birth of Big Data

Nowadays, large volumes of data are generated every day at an unprecedented rate from heterogeneous sources (e.g., health, government, social networks, marketing, finance). Several technological trends drive this growth, including the Internet of Things, the proliferation of cloud computing and the spread of smart devices. Behind the scenes, powerful systems and distributed applications support these highly connected systems (e.g., smart grid systems, healthcare systems, retail systems like Walmart's, and government systems).

Before the Big Data revolution, companies could neither store all their archives for long periods nor efficiently manage huge data sets. Traditional technologies have limited storage capacity and rigid management tools, and they are expensive. They lack the scalability, flexibility and performance needed in a Big Data context. Big Data management requires significant resources, new methods and powerful technologies: massive, evolving data sets must be cleaned, processed, analyzed and secured, with granular access provided to them. Companies and industries are increasingly aware that data analysis has become a vital factor in staying competitive, discovering new insights and personalizing services.

Because of the value that can be extracted from Big Data, many actors in different countries have launched major projects. The USA was one of the first countries to seize the Big Data opportunity: in March 2012, the Obama Administration launched the Big Data Research and Development Initiative with a budget of $200 million. In July 2012, Big Data development became an important axis of Japan's national technology strategy. The United Nations issued a report entitled "Big Data for Development: Opportunities and Challenges". It outlines the main concerns raised by Big Data and aims to foster dialogue on how Big Data can serve international development.

As a result of these Big Data projects across the world, many Big Data models, frameworks and new technologies were created to provide more storage capacity, parallel processing and real-time analysis of heterogeneous sources. In addition, new solutions have been developed to ensure data privacy and security. Compared to traditional technologies, such solutions offer more flexibility, scalability and performance. Furthermore, the cost of most hardware storage and processing solutions keeps dropping thanks to sustained technological progress.

Unlike traditional data, the term Big Data refers to large, growing data sets in heterogeneous formats: structured, semi-structured and unstructured. Its complex nature requires powerful technologies and advanced algorithms, so traditional static Business Intelligence tools are no longer efficient for Big Data applications.

What is Big Data?

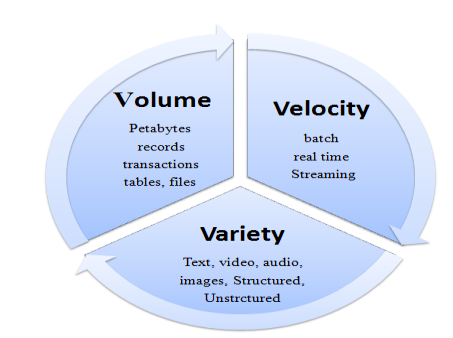

Most data scientists and experts define Big Data by three main characteristics, known as the 3Vs:

Volume: Large volumes of digital data are generated continuously by millions of devices and applications (ICTs, smartphones, product codes, social networks, sensors, logs, etc.). About 2.5 exabytes were generated each day in 2012, and this amount doubles roughly every 40 months. In 2013, the International Data Corporation (IDC), a market research firm, estimated the total digital data created, replicated and consumed at 4.4 zettabytes (ZB), doubling every two years. By 2015, digital data had grown to 8 ZB. According to an IDC report, the volume of data will reach 40 ZB by 2020, a 400-fold increase.
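The doubling figures in the Volume paragraph follow a simple exponential-growth rule: a volume V0 that doubles every T years becomes V0 · 2^(t/T) after t years. The sketch below applies that rule to IDC's starting point of 4.4 ZB in 2013 with a two-year doubling time, and converts doubling times into yearly growth rates. The function names are illustrative, and the rule assumes a constant doubling time, which real-world growth need not follow.

```python
def project_volume(initial: float, years: float, doubling_time: float) -> float:
    """Volume after `years`, assuming it doubles every `doubling_time` years."""
    # Assumption: the doubling time stays constant over the whole period.
    return initial * 2 ** (years / doubling_time)


def annual_growth_rate(doubling_time: float) -> float:
    """Yearly growth rate (as a fraction) implied by a constant doubling time."""
    return 2 ** (1 / doubling_time) - 1


# Starting point from the text: 4.4 ZB in 2013, doubling every 2 years.
for year in (2015, 2020):
    zb = project_volume(4.4, year - 2013, doubling_time=2)
    print(f"{year}: {zb:.1f} ZB")

# Compare the two doubling times quoted in the text (40 months vs. 2 years).
print(f"40-month doubling: {annual_growth_rate(40 / 12):.1%} per year")
print(f"2-year doubling:   {annual_growth_rate(2):.1%} per year")
```

Under this rule, 4.4 ZB in 2013 gives 8.8 ZB in 2015, close to the 8 ZB reported, but about 49.8 ZB in 2020, above the 40 ZB forecast. Doubling every 40 months corresponds to about 23.1% growth per year, against about 41.4% for doubling every two years, so long-range projections are very sensitive to the assumed doubling time.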

Velocity: Data are generated quickly and must be processed rapidly to extract useful information and relevant insights. For instance, Walmart (an international discount retail chain) generates more than 2.5 PB of data every hour from its customer transactions. YouTube is another good example of the high velocity of Big Data.
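To get a feel for what 2.5 PB per hour means in practice, the sketch below converts it into a sustained per-second rate. It assumes decimal SI units (1 PB = 10^15 bytes) and data arriving evenly over the hour; the helper name `bytes_per_second` is illustrative.

```python
# Assumption: decimal (SI) units, 1 PB = 10**15 bytes and 1 GB = 10**9 bytes.
PB = 10 ** 15
GB = 10 ** 9
SECONDS_PER_HOUR = 3600


def bytes_per_second(volume_bytes: float, seconds: float) -> float:
    """Average rate needed to absorb `volume_bytes` in `seconds`,
    assuming the data arrive evenly over that interval."""
    return volume_bytes / seconds


rate = bytes_per_second(2.5 * PB, SECONDS_PER_HOUR)
print(f"{rate / GB:.0f} GB/s")  # about 694 GB/s, sustained for a full hour
```

In other words, a system keeping pace with such a stream would have to absorb roughly 0.7 TB every second, one reason velocity pushes toward parallel and real-time processing rather than occasional batch loads.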

Variety: Big Data are generated from various distributed sources and in multiple formats (e.g., videos, documents, comments, logs). Large data sets combine structured and unstructured data that may be public or private, local or remote, shared or confidential, complete or incomplete, etc.
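Handling variety usually means bringing records of different shapes into a common representation before analysis. The sketch below shows one minimal way to do this for three kinds of input: a structured CSV row with a fixed schema, a semi-structured JSON document and unstructured free text. The field names, the `normalize` function and the sample records are hypothetical, chosen only for illustration.

```python
import csv
import io
import json

# Hypothetical fixed schema for the structured (tabular) source.
CSV_FIELDS = ["customer_id", "product", "amount"]


def normalize(source_type: str, payload: str) -> dict:
    """Map one raw record to a flat dict tagged with its format."""
    if source_type == "structured":
        # Tabular row whose columns follow a known, fixed schema.
        row = next(csv.reader(io.StringIO(payload)))
        return {"format": "structured", **dict(zip(CSV_FIELDS, row))}
    if source_type == "semi-structured":
        # Self-describing JSON object: the set of fields may differ between records.
        return {"format": "semi-structured", **json.loads(payload)}
    # Unstructured free text: keep it raw and derive a simple feature.
    return {"format": "unstructured", "text": payload, "n_words": len(payload.split())}


# Hypothetical sample records, one per format.
records = [
    ("structured", "42,book,12.50"),
    ("semi-structured", '{"user": "alice", "likes": 3}'),
    ("unstructured", "Great product, fast delivery!"),
]
for source_type, payload in records:
    print(normalize(source_type, payload))
```

Keeping the raw text alongside derived features, rather than forcing it into a fixed schema, is one common design choice; a real pipeline would also validate input and handle malformed rows.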
