Further automate the transcripts and subtitles of your #Whaletank talk

(or any podcast)

Mixin' and mergin'

I have finally been able to mix the audio tracks from the Whaletank recordings with ffmpeg. I recorded the Whaletank with Mumble using its multichannel recording option, which saves each speaker to a separate file. I uploaded each track separately to YouTube to get automatic captions per speaker, so that the speakers can be told apart in the subtitles. You can read about that process in my previous post.

The TopSol project discussed in the BeyondBitCoin Whaletank 9-9-2017:

Be sure to toggle subtitles in the YouTube video

Mixing Audio Tracks

I finally found a solution to the problem I had with ffmpeg. In short, I had to use amix instead of amerge. I had read somewhere that amix uses float samples, so I thought I couldn't use it. Maybe I'll learn how apad works as well.

So the final command-line fu I used is:

`ffmpeg -i Mumble-2017-09-09-16-33-18-149.210.187.155-fuzzynewest.ogg -i Mumble-2017-09-09-16-33-18-149.210.187.155-chrisaiki2.ogg -i Mumble-2017-09-09-16-33-18-149.210.187.155-Recorder.ogg -i Mumble-2017-09-09-16-33-18-149.210.187.155-steempowerpics.ogg -i Mumble-2017-09-09-16-33-18-149.210.187.155-Taconator.ogg -filter_complex "[0:a][1:a][2:a][3:a][4:a] amix=inputs=5:duration=longest[aout]" -map "[aout]" -ac 2 -c:a libvorbis -b:a 128k output.ogg`

The number of inputs (5) is important. ffmpeg counts from 0 (zero), so you refer to the audio stream of each input file with [0:a][1:a][2:a][3:a][4:a] and so on. amix is the filter that mixes the audio tracks recorded with Mumble's multichannel option. Adding :duration=longest makes the longest track set the length of the output. amerge stops at the shortest input, and that can't be changed. -map "[aout]" then writes the mixed stream to the output file, and -ac 2 -c:a libvorbis -b:a 128k encodes it as 128 kbit/s stereo Vorbis. Alas, all the examples I found used amerge, as I wrote when I answered my own question on StackOverflow.

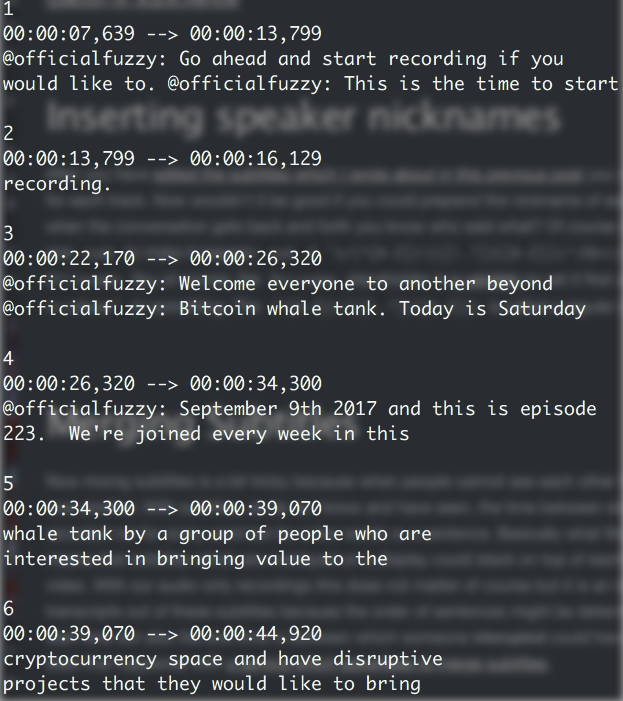
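
If the number of speakers changes from one Whaletank to the next, counting the inputs and typing the [n:a] labels by hand is easy to get wrong. Here is a minimal shell sketch that builds the same -filter_complex string and options as the command above from whatever .ogg files you pass it. It assumes ffmpeg with libvorbis is installed and that the output file is the last argument. The function names amix_filter and mix_tracks are hypothetical and are not part of ffmpeg or Mumble.

```shell
#!/bin/sh
# Sketch: mix any number of per-speaker Mumble tracks with ffmpeg's amix filter.
# Assumes ffmpeg with libvorbis; amix_filter and mix_tracks are hypothetical names.

# Print the filter graph for N inputs. ffmpeg numbers inputs from 0, so for N=3:
# [0:a][1:a][2:a]amix=inputs=3:duration=longest[aout]
amix_filter() {
  local n="$1" i=0 labels=""
  while [ "$i" -lt "$n" ]; do
    labels="${labels}[$i:a]"   # audio stream of input number $i
    i=$((i + 1))
  done
  printf '%s' "${labels}amix=inputs=${n}:duration=longest[aout]"
}

# Usage: mix_tracks in1.ogg in2.ogg ... output.ogg (the last argument is the output).
mix_tracks() {
  local out count i arg
  for out; do :; done          # leaves the last argument in $out
  count=$(($# - 1))
  i=0
  # Rebuild the argument list as "-i in1 -i in2 ...", dropping the output file.
  for arg; do
    shift
    i=$((i + 1))
    if [ "$i" -le "$count" ]; then
      set -- "$@" -i "$arg"
    fi
  done
  ffmpeg "$@" -filter_complex "$(amix_filter "$count")" \
    -map "[aout]" -ac 2 -c:a libvorbis -b:a 128k "$out"
}
```

For example, `mix_tracks Mumble-2017-09-09-16-33-18-*.ogg output.ogg` should produce the same mix as the hand-typed command, provided the pattern matches the five tracks of this recording. amix gives every input the same weight by default, so the alphabetical order of the glob does not matter.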

Inserting speaker nicknames

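After you have edited the subtitles, which I wrote about in this previous post, you have a subtitle file for each track. Now wouldn't it be good if you could prepend the nickname of each speaker, so that you know who said what when the conversation goes back and forth? Of course it would! So here is one way to make it happen: `sed -E "s/(^[A-Z])|(([\.?\!][ ]*)([A-Z]))/\3$nickname: \1\4/" "$filename"`. $nickname is a shell variable, so set it first with something like nickname="yourNickname", and set $filename to the subtitle file. sed prints the result to standard output, so redirect it to a new file. The (^[A-Z])|(([\.?\!][ ]*)([A-Z])) part is called a regular expression, or regex. It matches either a capital letter at the start of a line or a capital letter that follows a period, question mark or exclamation mark plus optional spaces, and it puts the nickname in front of that letter. Because there is no g flag, only the first match on each line gets a nickname.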
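
To do this for every speaker at once, you could loop over the subtitle files and take each nickname from the file name. Below is a minimal shell sketch under hypothetical assumptions: each edited subtitle file is named like its Mumble track (for example Mumble-2017-09-09-16-33-18-149.210.187.155-fuzzynewest.srt), and nicknames contain no -, /, & or \ characters. The function names are made up for illustration. The sketch uses essentially the same sed expression, but in single quotes, so an interactive bash never tries history expansion on the !.

```shell
#!/bin/sh
# Sketch: prefix each speaker's subtitle lines with that speaker's nickname.
# Hypothetical assumptions: one edited .srt per speaker, named like the Mumble
# track (Mumble-<date>-<ip>-<nickname>.srt), and nicknames without -, /, & or \.

# Mumble-2017-09-09-16-33-18-149.210.187.155-fuzzynewest.srt -> fuzzynewest
nickname_from_file() {
  local name
  name=${1##*-}              # keep the text after the last "-"
  printf '%s' "${name%.*}"   # drop the file extension
}

# Print the subtitle file with "nickname: " in front of the first capital letter
# that starts a line or follows . ? or ! (first match per line only, no g flag).
add_nickname() {
  local nick="$1" file="$2"
  # LC_ALL=C so that [A-Z] means only the ASCII capitals A to Z
  LC_ALL=C sed -E 's/(^[A-Z])|(([.?!][ ]*)([A-Z]))/\3'"$nick"': \1\4/' "$file"
}
```

A loop such as `for f in Mumble-*.srt; do add_nickname "$(nickname_from_file "$f")" "$f" > "named-$f"; done` would then write a nicknamed copy of every subtitle file.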

Merging Subtitles

(to be improved)

Merging subtitles is a bit tricky, because people who cannot see each other tend to talk over one another. As far as I know and have seen, subtitles don't record a start and end time for every word. They record one for a few words or a sentence, basically whatever fits on the screen. This means that subtitles overlaid on a display could stack on top of each other and cover the video. With our audio-only recordings this does not matter, of course. It is an issue when you make transcripts out of these subtitles, though. The order of the sentences might be determined not only by the start time but also by the end time, and someone could have interrupted and said something in between. At least, that is what happened with a service which advertises merging subtitles.
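
To find the spots where someone was probably interrupted, you could list every place where one speaker's subtitle starts before another speaker's subtitle has ended. This is a minimal shell and awk sketch. It assumes one SRT file per speaker with timing lines of the form hh:mm:ss,mmm --> hh:mm:ss,mmm, and find_overlaps is a hypothetical name. It sorts all cues by start time and sweeps through them, comparing each cue only with the cue that ends last so far. Treat it as a quick pointer for manual editing rather than a complete report.

```shell
#!/bin/sh
# Sketch: report where one speaker's cue starts before another speaker's cue ends.
# Assumes one SRT file per speaker with "hh:mm:ss,mmm --> hh:mm:ss,mmm" timing
# lines; find_overlaps is a hypothetical name, not an existing tool.
find_overlaps() {
  # 1) One line per cue: start_ms <TAB> end_ms <TAB> file name.
  awk '
    function to_ms(s,  p) {
      gsub(/[^0-9:,.]/, "", s)             # drop spaces and a trailing \r
      split(s, p, "[:,.]")                 # hours, minutes, seconds, milliseconds
      return ((p[1] * 60 + p[2]) * 60 + p[3]) * 1000 + p[4]
    }
    /-->/ {
      split($0, t, "-->")
      printf "%d\t%d\t%s\n", to_ms(t[1]), to_ms(t[2]), FILENAME
    }
  ' "$@" |
  # 2) Order all cues by start time, then end time.
  sort -t "$(printf '\t')" -k1,1n -k2,2n |
  # 3) Sweep: a cue that starts before the latest end seen so far overlaps it;
  #    report it only when the two cues come from different files (speakers).
  awk '
    BEGIN { FS = "\t"; last_end = -1 }
    $1 + 0 < last_end && $3 != last_file {
      printf "%s starts at %d ms, before %s ends at %d ms\n", $3, $1, last_file, last_end
    }
    $2 + 0 > last_end { last_end = $2 + 0; last_file = $3 }
  '
}
```

Running `find_overlaps *.srt` in the folder with the per-speaker subtitles would print one line per suspected interruption.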

There are other options, but either they did not work or I couldn't get them to work:

- Although it looked promising, I could not build srtmerge, a Python library used to merge two SRT files. It needs a package which seems impossible to install or get (`ImportError: No module named chardet.universaldetector`).

- 2srt2ass, a tool that can take two languages in two separate SRT subtitle files and combine them into one SSA/ASS file, showing one language at the top of the screen and one at the bottom. The problem is that YouTube doesn't understand SSA/ASS files.

- There's also an awk script on Ubuntu Forums that might be able to merge subtitles, but only when the timings in the files are in the order they were spoken. Sadly, this is almost never the case, because most people ask questions afterwards. Also, because we process each audio file separately, we get a subtitle file per speaker, so each subtitle file starts at 1 for that speaker.

- srtool: sounds cool, but I couldn't find it!

So, sadly, this leaves some manual editing to get rid of duplicated pieces of sentences. I was thinking of coding my own subtitle merger. I'll only do that if there turns out to be no existing program that already does this and whose source I could edit. Starting from scratch without knowing the demand for it sounds risky, because it might be a lot of work to do properly.

(I) Need help?

If you know of other programs (preferably ones that can be called from a shell like bash) and have tested them, please let me know. Finding them with Google might seem easy, but testing those programs is another story.

PS: looking at GitHub now, maybe I'll find something which works like this:

subMerger sub1 sub2 sub3 … subn > allmerged
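
For what it's worth, here is a minimal shell and awk sketch of what such a subMerger could do. The function name sub_merger is hypothetical, and this is not a tested tool. It assumes plain SRT cues (an index line, a start --> end line, text lines, then a blank line). It puts the cues from all files in order of start time (then end time) and renumbers them from 1. It does not combine overlapping cues or remove duplicated sentence fragments, so some manual editing remains.

```shell
#!/bin/sh
# Sketch of a hypothetical "sub_merger sub1.srt sub2.srt > allmerged.srt":
# merge SRT files into one, ordered by start time (then end time), renumbered from 1.
# Assumes plain SRT cues: index line, "start --> end" line, text lines, blank line.
# Overlapping cues stay separate and duplicate fragments are NOT removed.
sub_merger() {
  # 1) Flatten each cue to one line: start_ms, end_ms, sequence, timing, text.
  awk '
    function to_ms(s,  p) {
      gsub(/[^0-9:,.]/, "", s)             # drop spaces and stray characters
      split(s, p, "[:,.]")                 # hours, minutes, seconds, milliseconds
      return ((p[1] * 60 + p[2]) * 60 + p[3]) * 1000 + p[4]
    }
    function flush() {                     # print the cue collected so far, if any
      if (timing != "")
        printf "%d\t%d\t%d\t%s\t%s\n", t_start, t_end, ++seq, timing, text
      timing = ""; text = ""; in_cue = 0
    }
    FNR == 1 { flush() }                   # a new file always ends the previous cue
    { sub(/\r$/, "") }                     # tolerate Windows line endings
    /-->/ && !in_cue {                     # a timing line starts a new cue
      flush()
      timing = $0
      split($0, t, "-->")
      t_start = to_ms(t[1]); t_end = to_ms(t[2]); in_cue = 1
      next
    }
    /^[ \t]*$/ { flush(); next }           # a blank line ends the cue
    in_cue {                               # index lines outside a cue are skipped
      gsub(/\t/, " ")
      text = (text == "" ? $0 : text "\037" $0)   # \037 joins the text lines
    }
    END { flush() }
  ' "$@" |
  # 2) Order by start time, then end time, then original position.
  sort -t "$(printf '\t')" -k1,1n -k2,2n -k3,3n |
  # 3) Renumber and write the cues back out as SRT.
  awk '
    BEGIN { FS = "\t" }
    {
      printf "%d\n%s\n", NR, $4
      n = split($5, lines, "\037")
      for (i = 1; i <= n; i++) print lines[i]
      print ""
    }
  '
}
```

Usage would then follow the wish above, for example `sub_merger sub1.srt sub2.srt sub3.srt > allmerged.srt`.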

Also if you need any help let me know!

Closing comments

It's very cool to be able to read along with what's being said. It's much easier to read which website or speaker is being referred to than to have to catch it by listening. You also get to know people by their nicknames and can thus more easily follow them on Steemit.

Furthermore, with interactive transcripts you could jump to any section that interests you. There's no need to watch a whole video or listen to a whole podcast only to find out at the end that it wasn't so interesting. By then it's too late, and you've already wasted your time.

It might not be obvious to most of us, but the more you know, the more time you waste on things you already know or that are even inaccurate.

PS: You should look for "funny subtitles" images, it's hilarious!