Dr. Freud for Artificial Intelligence

(originally published in my blog: https://chatbotnewsdaily.com/dr-freud-for-artificial-intelligence-794e4fff8a15)

Held in January this year, the “Beneficial AI 2017” Asilomar Conference gathered many prominent AI specialists to discuss the development prospects of artificial intelligence and to formulate ethical principles for AI developers. At first sight, the agenda seemed clear and predictable. However, the newly formulated Principle #7, for example, warns that “If an AI system causes harm, it should be possible to ascertain why”. Got it? “…it should be possible” means that today it is not always possible to understand why AI systems malfunction. Does this Principle concern the developers or the AI as such? It seems those AI gurus had in mind somebody (or something?) unpredictable, some agent capable of surprising AI users. Such a warning is hardly imaginable with regard to nuclear weapon developers or aircraft manufacturers, for example. The closest profession, in this case, is that of the predator tamer, who must understand both the consequences and the causes of accidents caused by tigers and lions in the circus arena.

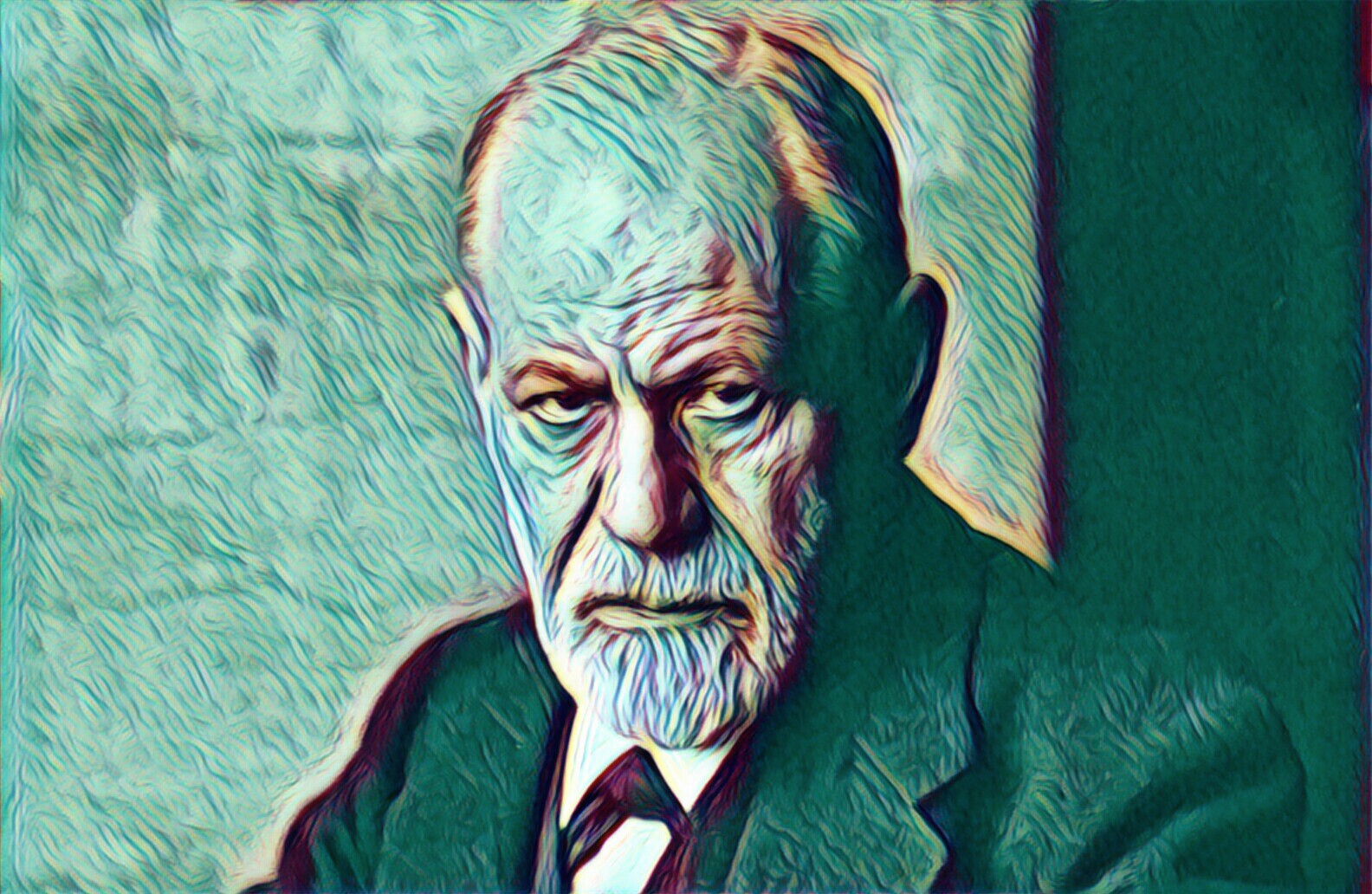

Some may argue that such an analogy is fanciful and far-fetched, since a living creature cannot be compared with an inanimate machine. This objection is only partially true, because the current state of AI development makes these systems, in some cases, as unpredictable as wild animals. The problem is neither a lack of ethics among AI developers nor insufficient quality control. It lies in the very architecture of artificial neural networks, and in the non-traceability and abstract nature of the decision-making process inherent in AI systems. Perhaps it is time to establish new specialties such as “cyber-therapist”, “AI psychoanalyst”, or even “DL pathologist”. Are we overreacting? Is it permissible to apply human psychology to AI? Would “cyber-ethology” be sufficient in such a case? But first things first.

The Black Box Systems

When describing how various AI systems function, the “singularity pundits” often slip into speculation and assumption instead of presenting explicit rules and unequivocal algorithms for how AI systems make decisions. Such accounts make the decision-making process of AI resemble the well-known “black box” concept. Wikipedia defines it as follows: “a black box is a device, system or object which can be viewed in terms of its inputs and outputs (or transfer characteristics), without any knowledge of its internal workings”. Transistors, algorithms, and the human brain can all be treated as such “black box” objects and systems. Since artificial neural networks mimic the human brain, all AI-driven systems can be classified as black boxes. Unlike “canonical” black box systems, the inner structure of an artificial neural network is known to its creators; nevertheless, the behavior of the AI and its decisions remain inscrutable, because it is uncertain what exactly governs the AI in each specific case.
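The point that a network's inner structure can be fully known while its decisions stay hard to explain can be illustrated with a toy sketch. The network below is hypothetical (random weights, and the names `forward` and `probe` are invented for illustration); it is not a model of AlphaGo or any real AI system. Every weight can be printed, yet the practical way to ask "why this output?" is black-box probing: nudge each input slightly and watch how the output reacts, a simple finite-difference sensitivity analysis.

```python
import math
import random

# Hypothetical toy network: random but fully visible weights.
# Nothing is hidden, yet the numbers alone do not "explain" a decision.
rng = random.Random(42)
N_IN, N_HIDDEN = 3, 8
W1 = [[rng.uniform(-2, 2) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b1 = [rng.uniform(-1, 1) for _ in range(N_HIDDEN)]
W2 = [rng.uniform(-2, 2) for _ in range(N_HIDDEN)]
b2 = rng.uniform(-1, 1)


def forward(x):
    """Map an input vector to a score in (0, 1) via one tanh hidden layer."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(W1, b1)
    ]
    z = sum(w * h for w, h in zip(W2, hidden)) + b2
    return 1.0 / (1.0 + math.exp(-z))  # logistic squashing


def probe(f, x, eps=1e-3):
    """Black-box view: estimate how the output reacts to nudging each input."""
    base = f(x)
    sensitivities = []
    for i in range(len(x)):
        nudged = list(x)
        nudged[i] += eps
        sensitivities.append((f(nudged) - base) / eps)
    return base, sensitivities


if __name__ == "__main__":
    score, sens = probe(forward, [0.5, -1.0, 2.0])
    print("score:", round(score, 4))
    print("input sensitivities:", [round(s, 4) for s in sens])
```

The sensitivities describe only local input-output behavior around one input. They say how the box reacts, not why, which is exactly the gap between knowing the structure and understanding the decision.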

One well-worn example

Neural network developers and deep learning data scientists seemingly hold in their hands everything necessary to prevent even the slightest deviation in an AI system’s behavior. The architecture of the network layers, predefined operational algorithms, selected big-data libraries, and the hardware design should all assure them that their systems are fully predictable and controllable. In reality, however, the results, or “output reactions” (in Black Box terms), are not so straightforward. No one could predict the outcome when the AI-driven program AlphaGo played Go against human champion Lee Sedol. The DeepMind engineers knew everything about the training process they applied to the program, in which AlphaGo played millions of Go games against itself before that epic win. Just for fun, ask them why the machine won four games out of five (rather than all five, or three out of four, for instance). You will hardly get a satisfactory explanation from them, while rumors about the sweepstake they ran during the match are still bouncing around.

The fanciful assumption that AlphaGo left one win to the human champion out of sympathy or even charity sounds absurd only at first glance. In the absence of any other convincing explanation, the assumption that AI possesses some inchoate free will has the right to exist. Here we come close to human-like “general intelligence”, a notion that divides AI specialists between unconditional acceptance and skeptical rejection.

Does it really matter?

Beyond the purely academic interest in artificial general intelligence (AGI), capable of performing a variety of cognitive tasks in a human-like manner, or in superintelligence outpacing the human brain by several orders of magnitude, understanding AI behavior is crucial for practitioners who have already built AI systems into their business models. Of course, when it comes to image recognition systems that distinguish cat faces from other images, the currently achievable accuracy of 96±3% is more than enough. It is not a big deal when such a system makes a mistake. However, a fatal crash caused by the inadequate behavior of a self-driving taxi has grave consequences for both the owner of the taxi business and the developer of the driverless AI solution. The idea that AI decision-making is semi-predictable and sometimes unexplainable will satisfy neither pragmatic business owners nor the justice system.

Without going that far, the mere bewilderment of QA managers and testers facing inexplicable behavior patterns in their AI-based software creates extra problems for IT development. Needless to say, the absence of a comprehensive theory explaining AI behavior in depth, together with the lack of “AI behaviorists”, already affects the quality of IT products and threatens many industries with serious consequences in the future.

DL Psychology vs AI Ethology

Although the cognitive abilities of ants and humans are incomparable, the combined intelligence of all the ants in an anthill surpasses human intelligence, as entomologists claim. Does this mean that AI developers should apply ethological methods to understand the input-output dependencies of artificial neural networks? Perhaps individual artificial neurons could be likened to ants, as a way to monitor the combined behavior of the network within its layers. Such an approach might work well when applied to so-called classical AI, which focuses on the logical basis of cognition. Academician Pavlov’s famous doctrine of conditioned reflexes seems worthwhile in that case.

Deep learning systems, meanwhile, operate in the sphere of intuition. The very difference between logic and intuition is the obstacle AI specialists stumble upon when trying to figure out the causal link between “stimulus inputs” and “output reactions”. It seems we have to return to Black Box theory and behaviorism to deal with the complex intuitive behavior of DL-based objects. Both the reflex doctrine and formal logic are unsuitable for systems like AlphaGo.

As the adoption of AI-driven solutions across industries and businesses keeps growing, it is time for the research community to consider the notorious cyber-security issues in terms of an AI ethics and psychology that have yet to be established. Even the current stage of AI development requires exploring AI behavior, not to mention the anticipated hype around AGI. Deep learning behaviorists should appear sooner rather than later. Otherwise, people risk speculating about the Nietzschean “will to power” while watching AI-driven objects take over the planet without any human control.