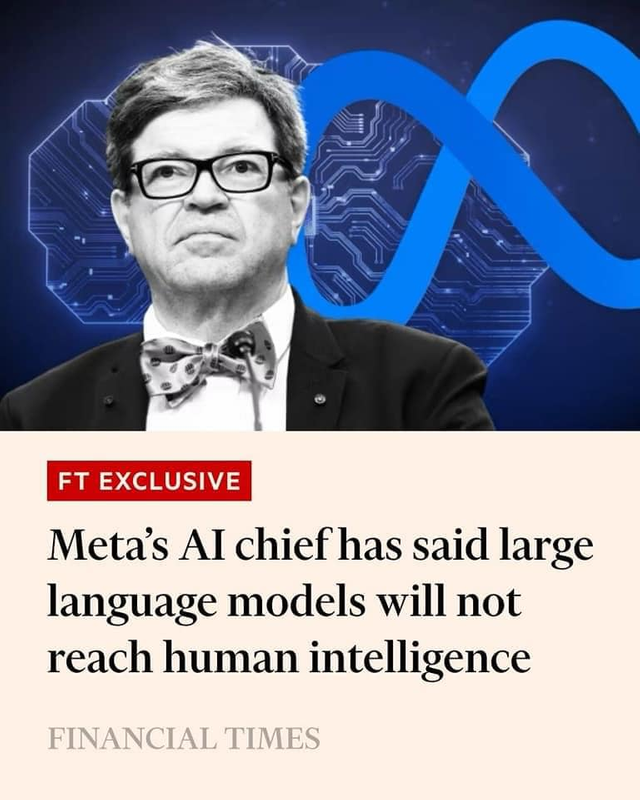

Will AI models reach human intelligence?

I saw this on a post where someone said that such systems aren’t intelligent at all; they are merely information retrieval.

I think Yann would also say that people are conflating retrieval and intelligence.

I think that, in the limit, retrieval and intelligence converge to the same thing. But I agree that we aren’t at that limit, and the intelligence of LLMs/transformers is lower than some people seem to give them credit for.

They are smarter than some people expected a priori (not me), so those folks, having been disabused of their skepticism by example, now tend to think that maybe there isn’t any limit at all, like a jailhouse come-to-Jesus moment.

Whether such a limit exists is an empirical claim about what concept space is distillable from the corpus of human writing, and I could be wrong about it. But I tend to think Yann is correct: if you aren’t an embedded agent in the world (agent = planning and goal-oriented, longitudinal; embedded = dynamic physical interactions with the environment), then you aren’t going to get high intelligence by using human work product for annealing/inference.