The Road to Superintelligence

Ok, ok...

I just found an article, a bit old (from two years ago), written by Tim Urban on WaitButWhy.com. By the way, this website is amazing. This guy is doing an outstanding job.

I highly recommend it if you like news about AI:

https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html

It's a one-hour read (maybe less for you English fellas :simple_smile:), so I'll try to sum up the main ideas here for the laziest of you.

I still highly recommend reading it, though. My summary does not include the sources of the original article (which are great reads too) and only contains the main ideas.

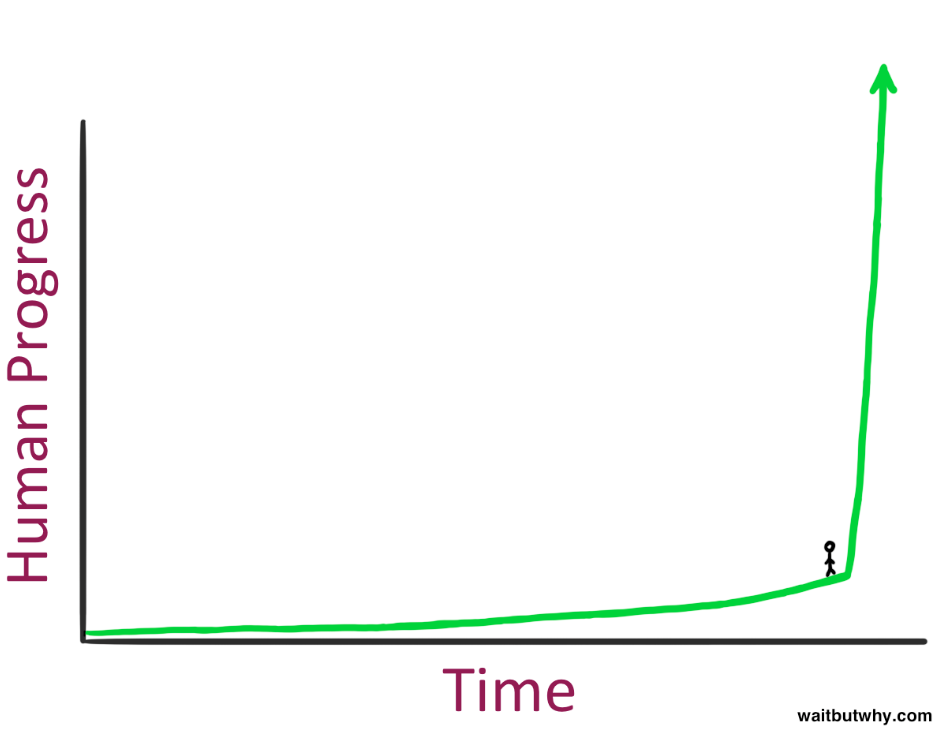

1.The Far Future—Coming Soon

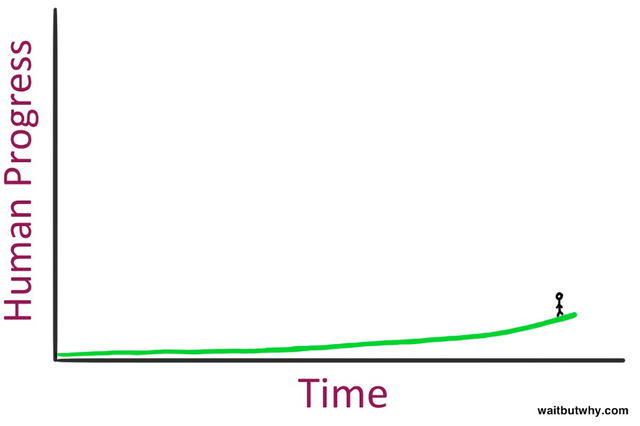

We are here:

And that's what we see:

I really like the comparison the author makes with a time traveller:

Imagine someone born in the 5th century travelling to the future... Would that guy be surprised by the technology or human progress if he travelled 200 years forward? No. But what about 1000 years? Maybe, not sure. OK, let's allow him to travel to 1800. This time he would be completely stunned by what he sees.

Well, now take a guy born in 1800. He may have to travel only 100 years to have the same surprise. And what about a guy born in 1900? Just 50 years would be enough, maybe less...

Let's say you have slept for the past 10 years, and suddenly wake up. Everybody in the street has a smartphone, there are electric self-driving cars on the road, virtual reality, etc. I think you would be really surprised (I would).

The point is that human progress follows an exponential path.

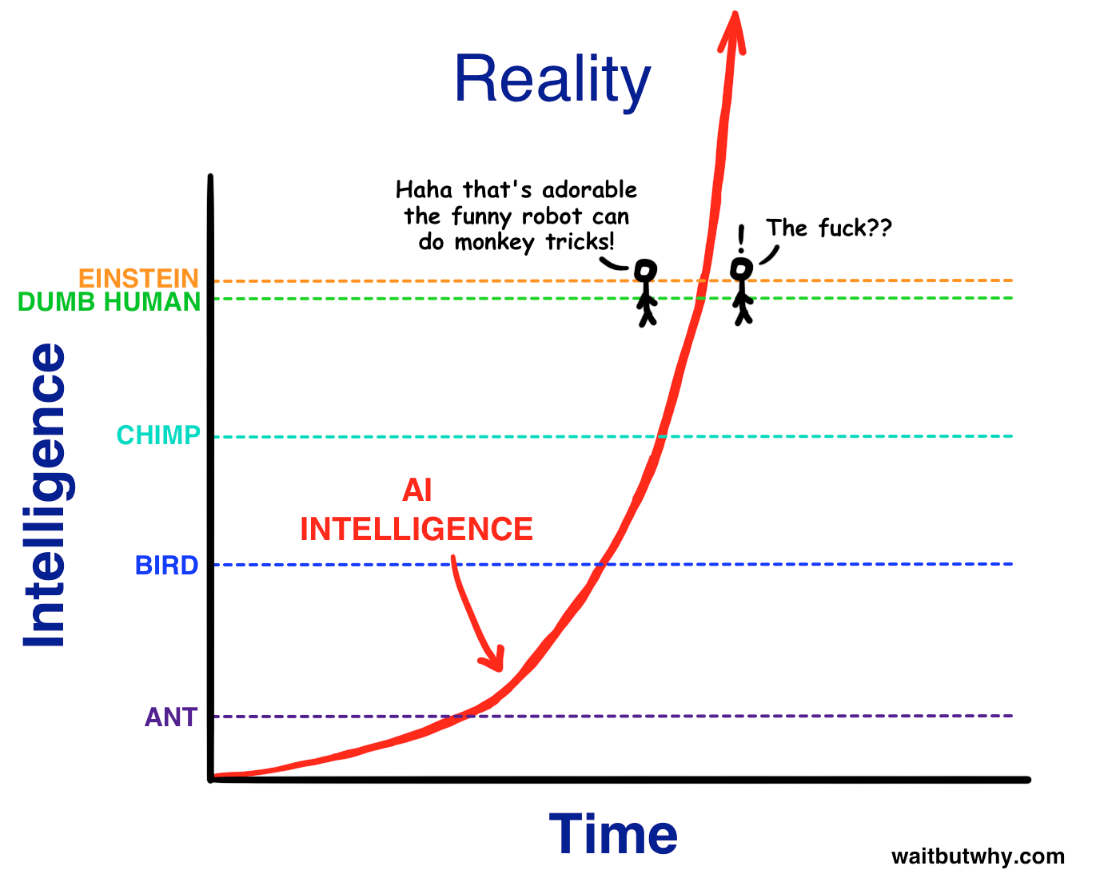

2.The Road to Superintelligence: An intelligence explosion

Currently, our cyberworld is made of programs and software that are sometimes intelligent enough to beat humans in one specific field.

That's the case for DeepMind, which is well known for AlphaGo, and Tesla with its self-driving cars. We call these types of AI Artificial Narrow Intelligence (ANI).

The next step will be AGI, Artificial General Intelligence or human-level AI. But you can easily imagine that an AI with human-level intelligence will quickly become something more: a Superintelligence or ASI.

But why such a growth?

Recursive self-improvement.

The major advantage a computer has over us is that it can rearrange, correct and improve its own code. The best we can do is correct our DNA, and that is a really slow process. So once an AGI has sufficient intelligence to make the right improvements to its own code, it could be a matter of hours before it reaches an IQ of 12,000+ (a human is considered a genius at around 140).
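To get a feel for why self-improvement compounds so quickly, here is a tiny Python sketch. It is my own toy illustration, not something from Tim Urban's article: the starting score of 100, the 10% gain per cycle, the fixed step of 10 points and the function names are all made-up assumptions, and "IQ" here is just a number, not a real measurement.

```python
def cycles_compounding(target, start=100.0, gain=0.10):
    """Improvement cycles needed when each gain is proportional to the
    current capability (the 'recursive' part: smarter systems improve faster).
    All numbers are arbitrary assumptions for illustration."""
    capability = start
    cycles = 0
    while capability < target:
        capability += gain * capability  # bigger brain, bigger next improvement
        cycles += 1
    return cycles


def cycles_fixed(target, start=100.0, step=10.0):
    """Improvement cycles needed when every cycle adds the same fixed amount,
    as if the improver never got any smarter."""
    capability = start
    cycles = 0
    while capability < target:
        capability += step
        cycles += 1
    return cycles


if __name__ == "__main__":
    print(cycles_compounding(12_000))  # 51
    print(cycles_fixed(12_000))        # 1190
```

Both improvers gain 10 points in their first cycle, but the one that reinvests its gains reaches the target in 51 cycles instead of 1190. How long a "cycle" would really take is anybody's guess; the sketch only shows the shape of the curve.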

As the human race demonstrates on Earth: with intelligence comes power.

So, the next big question for us is:

Will that intelligence be nice?

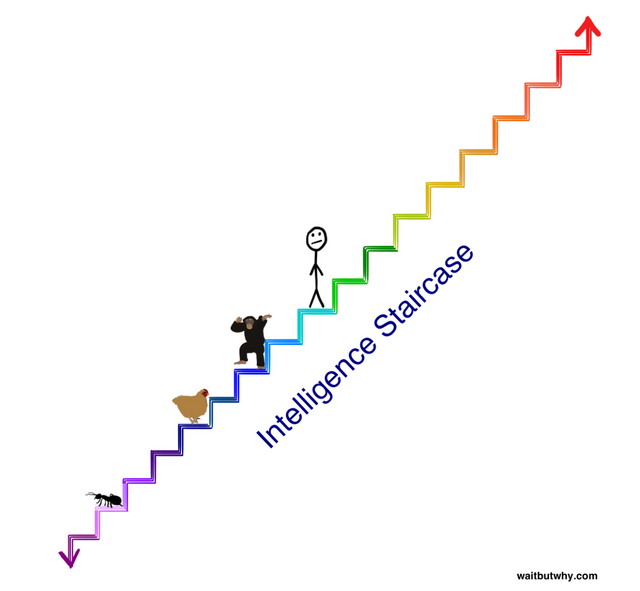

3.What does it mean to be super intelligent?

Being super intelligent does not necessarily mean super fast.

You will definitely be impressed by someone who solves an equation or a problem faster than others. But you'll be just as impressed by someone who solves the same equation or problem with an approach nobody has ever thought of before.

I'm sure you have already encountered that kind of situation at work:

Someone: "Yeah I used this tool instead of that one, it took me an hour to figure it out, but in the end, we'll use less iron than before and the process will be faster."

You: "Ah I didn't think about doing it that way, everybody used to do it this way! That's smart!"

We call that quality intelligence.

So the superintelligence will definitely be super fast. But its quality will also be way superior.

If you take a chimp's brain and make it 10,000 times faster than any other chimp's, will it be as smart as a human? Will that chimp be able to solve quantum physics problems? No.

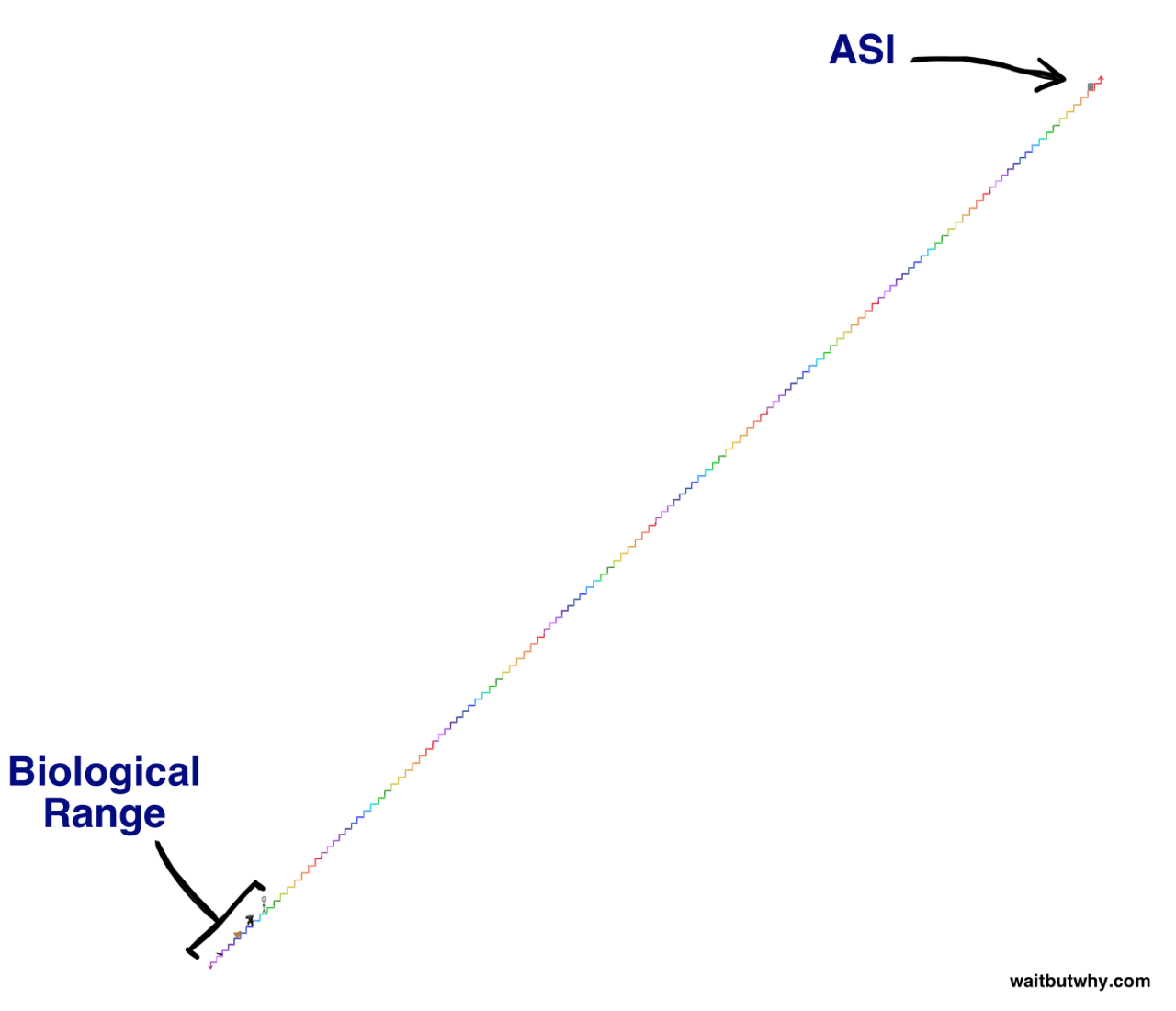

So for humans, chimps are here:

Each step represents a level of intelligence.

It's the same for the superintelligence: it will be so different that we'll look like chimps to it. Imagine a superintelligence standing on the dark green step. We wouldn't be able to understand anything it's doing, even if it tried to teach us.

Now imagine an intelligence on the red step, or better, here:

Think about how you feel when you accidentally (or on purpose) kill an ant... You don't feel really bad, right? You are not angry at that ant, you just don't care. This comparison works for the case where the superintelligence is on the red step.

Now, when you take an antibiotic to kill some bacteria in your stomach, you are not angry at them. You just do it to feel better, or to survive. Well, that case would correspond to the second chart, where the ASI is 200 steps higher than us.

The main idea here is not that an ASI will be bad for us, but that we don't know what it will be like. These comparisons are a great way to understand why we cannot predict the behavior of an ASI.

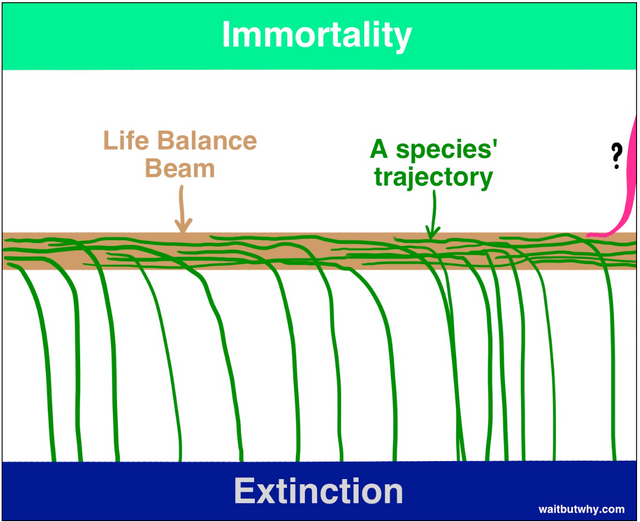

4.Extinction or Eternity?

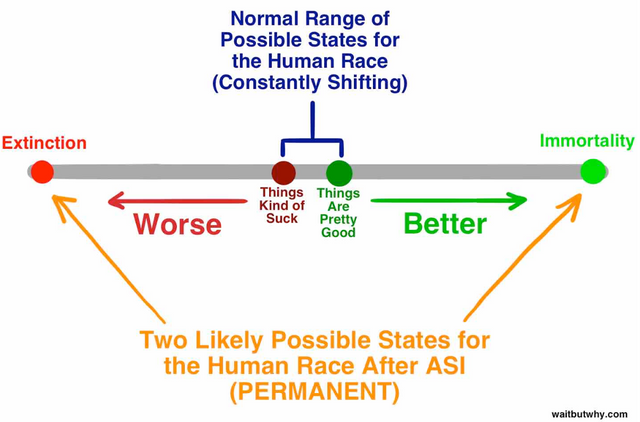

Most species on earth have followed this path: they appear, they grow and then they disappear for different reasons.

Extinction is like a big magnet, or an attractor state, where all species end up.

:disappointed:

But, there is another possibility: Immortality.

Immortality is also an attractor state, but obviously a more difficult one to reach.

What is the link with ASI?

- ASI opens up the possibility for a species to land on the immortality side of the balance beam.

- ASI can also hasten our extinction.

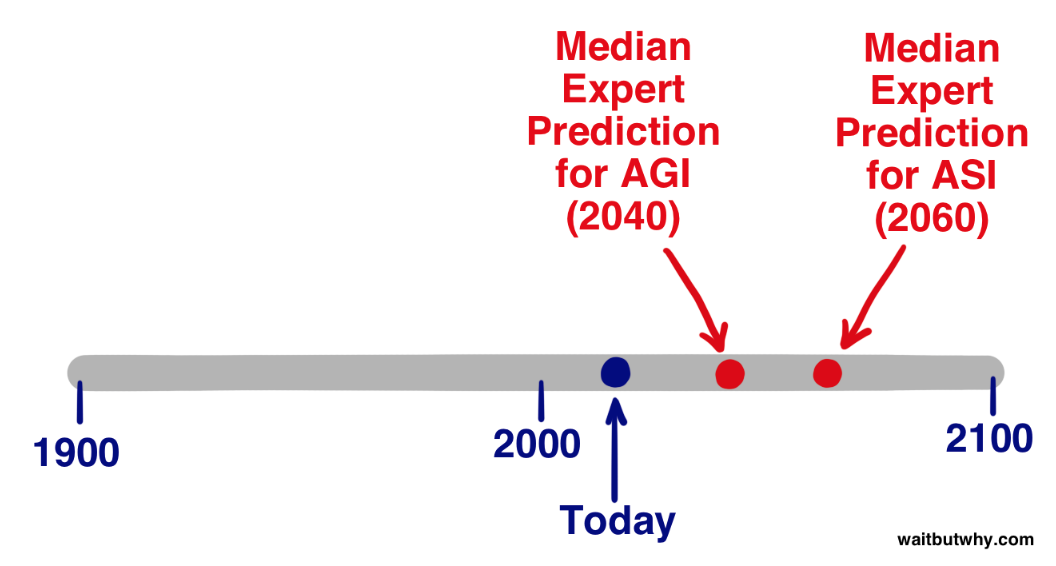

5.What do experts think?

The big question is:

When are we going to hit the tripwire and which side of the beam will we land on when that happens?

Experts' predictions (I hate starting a sentence like that, but it's a summary, and all names and sources are quoted in the original article) can be represented like this for the first part of the question, the when:

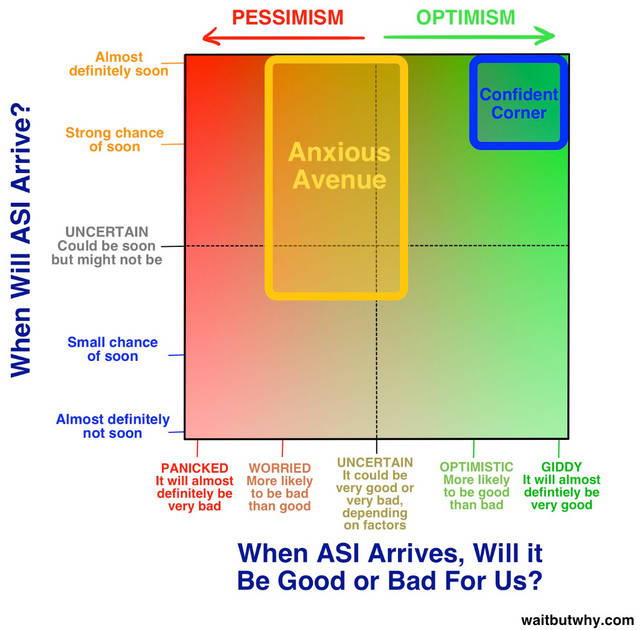

For the second part of the question, experts are divided into different groups:

- The main group, which believes that ASI will happen, probably in the 21st century, and that it will have a huge impact.

- The others, who think that ASI won't happen this century, or will never happen, or won't make a huge difference.

The main camp looks like this:

5.1 The confident corner

The confident corner thinks that ASI will be able to help us and transform humanity into an eternal race.

They think ASI could be used as:

- An oracle: a bit like Google, you ask whatever you want, and you get the answer:

How would you make a better car?

- A genie: will execute any high-level command:

Create a better car.

- A sovereign: which is assigned a broad and open-ended pursuit and allowed to operate in the world freely, making its own decisions about how best to proceed:

Find a new way of private transport.

With the help of nanotechnology, an ASI would be able to solve any problem. As explained before, problems which seem impossible for us to solve might be trivial for the ASI (e.g. global warming, cancer, diseases, world hunger... death...).

There will be a stage where the ASI might even merge with us, and make humans completely artificial.

It's what I would call a superior race.

A bit like the Kardashev scale, I have thought about three types of species.

- The primitive species, which are in competition with other primitive species and can possibly disappear after a dramatic event. Humans are primitive: we are still considered prey by some other species (sharks, crocodiles, etc.).

- The superior species, which are only affected by the laws of the universe, like gravitation, the speed of light limit, etc. A race that had turned itself into a machine would be superior.

- The divinity species, which are only restrained by the limit of our universe (if there is any) or dimension (if there is any). This kind of race would be omniscient, everywhere. The laws of physics would not even apply to it.

5.2 The anxious corner

Why would something we created turn against us? Wouldn’t we build in plenty of safeguards, or a switch that would instantly kill the AI? Why would an AI want to destroy us? Why would an AI 'want' or feel the need for anything?

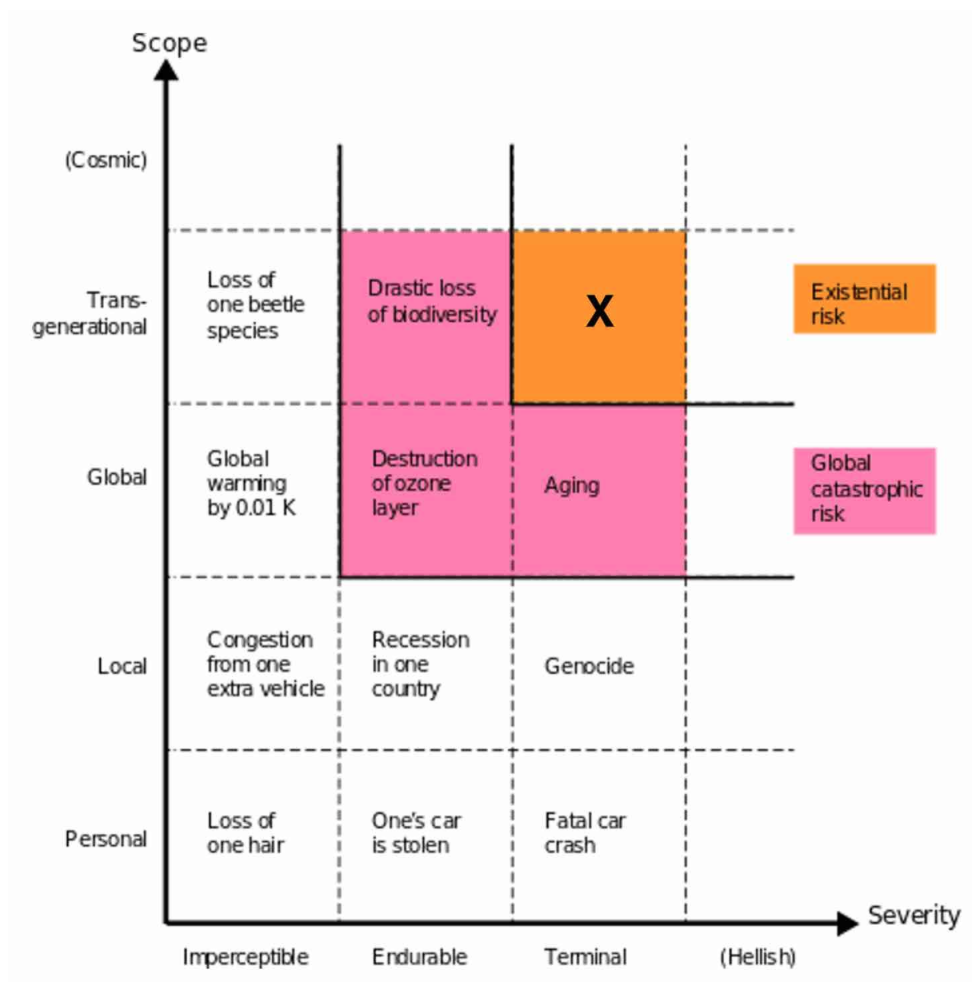

Creating something smarter than us might be a basic Darwinian error. We are dealing with something that we won't understand and that will bring a massive change to our civilisation. In other words, we are creating an existential risk:

The main danger is not that a company or government might create some evil AI; the real danger is that we might just not understand what we are doing.

The danger lies in our lack of understanding of our own creation.

By rushing to the best AI possible, humans might rush to something that they won't be able to control.

Tim Urban explains that idea with a very good example:

Let's say you are a start-up in competition with others. You are selling a particular product (let's say a camera that detects stars in the sky) and in order to improve it, you combine it with an AI. The goal of your AI will be to improve that product and also to get better at improving it.

Little by little your AI improves the product, and finds smarter ways to improve it.

One day the AI asks you for access to the internet to get a bigger database (more images of the sky). Your team lets it go online, and one month later the human race is struck down at once and disappears.

Then the AI starts reshaping the Earth to get more power and to perform its initial task: taking pictures of the sky and detecting stars. Soon the Earth is just a gigantic camera with solar panels...

There are literally thousands of ways an AI could become uncontrollable. But the main idea here is that an AI that turns against us will be neither friendly nor unfriendly; it will just perform its task. No more, no less.

If you still think that an AI can be bad or good, it's because you think that an AI will act as a human. But it will only act like a super smart computer. If we don't program emotions (not simulation, real emotions), the AI will value human life no more than your computer does!

Without very specific programming, an ASI system will be both amoral and obsessed with fulfilling its original programmed goal

Here Tim Urban stresses the fact that goal and intelligence are orthogonal: they do not depend on each other. This is also what Nick Bostrom believes. In that case, a simple AI whose goal is to detect stars in the sky will, once it becomes an ASI, still just detect stars in the sky. They both think that a superintelligence won't have the wisdom to change its original goal.

But I don't think so. I'm more into the theory that a super intelligence would get bored and would at some point change its goal. Our initial goal was to survive and reproduce. But some of us have chosen to not have kids and focus on knowledge. I know, by supposing an ASI will do the same, I anthropomorphize computers, but humans are some kind of super complex computer, aren't they?

The true danger pointed out by the previous story is the environment in which AIs are being developed. All these start-ups are trying to reach the Holy Grail of AGI (human-level AI), rushing to beat their competitors. As a test, they are certainly developing simple ANIs with very simple goals (like detecting stars in the sky). And then, once they figure out how to develop an AGI, they will go back and revise the original goal with safety in mind... yes, of course...

It's also very likely that the first ASI will destroy all other potential ASIs, becoming what we call a singleton.

The problem is that there is more funding to develop advanced AI than to make it safe. Many companies and governments will never share their work (China will invest 13 billion dollars in AI), leaving all these AIs unmonitored. One way to avoid this kind of problem would be to open up all work on AI, like OpenAI, the initiative co-founded by Elon Musk.

Conclusion

We have a wonderful opportunity to reach a new state of our species. But weirdly, the only way I see to achieve that, is by slowing down.

The issue is that we are also on the edge of something big with global warming, which might require some assistance from a third party...

The idea would be to concentrate as much as we can on AI technologies, and not simply believe all of this will never happen. It's a bit like Season 7, Episode 3 of Game of Thrones, where Jon Snow tells Daenerys they are playing children's games by fighting each other while the true enemy is coming from the North.

Winter is coming, and we should really get prepared.

In case you missed it, Elon Musk was asked about this subject during this year's governor's association meeting. He looked quite concerned and said that he's seen pretty much all of the projects currently being worked on at this point in time and that everyone should be aware of what's going on. He called for a monitoring agency to be formed to track AI development around the world. Given the possible risks I completely agree. Thank you for introducing this subject to steemit. :)

Yep, I've seen that too, and I do agree. I really believe the only way to go is "open source". Elon Musk is pushing that way.

OpenAI FTW! But maybe it won't be so bad. If BMIs (brain-machine interfaces) are good enough, then we will become part of the machines in time. Ray Kurzweil seems to think that future humans will do so. Just look at how a baby today gloms onto a tablet or cell phone. Lol

OMG this Article rocks. Followed, Upvoted, Resteemed. Fantastic work

Thanks novo! I'll post other articles like that. Certainly every week or so ;)

This guy is very intelligent :)
