Is Intelligence an Algorithm? Part 9: Architecture of a Webmind

In the previous essay I suggested how Artificial Consciousness (AC) could be engineered as part of a “Webmind”. In this essay I will describe further architectural requirements for the implementation of such a Webmind, including certain internal details at the Hub levels.

Some of the ideas I will present in this essay come from an article I wrote 5 years ago, the original version of which you can find here. I have now adapted and seriously enriched that information to fit in the framework of my series on the intelligence algorithm. In addition, I have supplemented it with some crucial notions about prioritisation. Many notions I describe have been inspired by the work of Ben Goertzel in his books “Creating Internet Intelligence” and “The Hidden Pattern”. Some parts of the architecture I describe derive directly from Goertzel’s ideas, although I have opted to use different names deriving from the notion of “-ome”.

In Biology we speak of a genome, a proteome and a metabolome, which are respectively the complete field or set of all genes, all proteins or all metabolites of a given cell or organism. In analogy I will speak of e.g. a “Sensome” as the complete collection of sensors connected to the internet or other web etc.

Please note that I do not claim that this architecture reflects the way our brains function. I also do not claim that this is necessarily the way a so-called "Global Brain" (such as a beehive or anthill) is organised. Rather, the architecture I propose is intended to endow a computerised network such as the internet with the functional behaviour of a mind, including a faculty of artificial consciousness.

This is a lengthy complex article and I hope you have the patience to read until the end. Otherwise skip to the “Conclusions and Prospects” at the end of the essay. The abstract below uses very technical terms, which may only become clear after reading the whole article; if you wish you can skip it.

Abstract

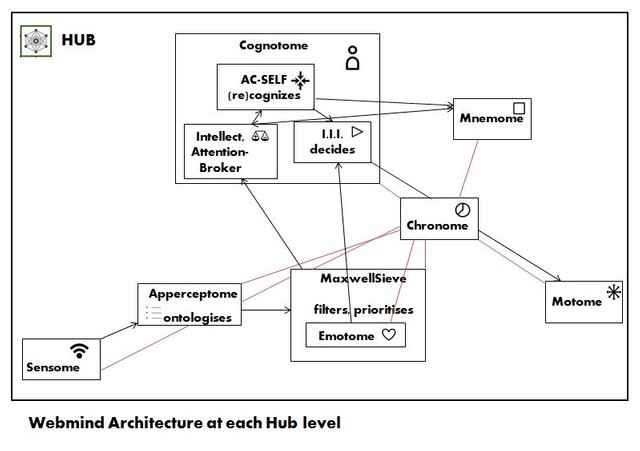

A proposal for the construction of a Webmind which I call the AWWWARENet (Artificial World Wide Web Awareness Resource Engine Net) system is presented (see figure below):

Image source: Antonin Tuynman.

Uploading of information and feeding sensorial input of IoT devices to the web via the “Sensome” creates a “Perceptome”, which is comparable to a vast collection of sub-conscious mind-stuff.

Endowed with appropriate information filtering and prioritisation routines, the “Apperceptome” taxonomises and ontologises ubiquitous complex events. Thus raw sensory information is transformed into understandable, identified blocks.

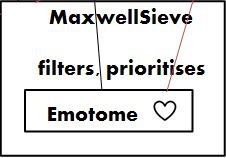

The “MaxwellSieve” routine monitors, filters and prioritises according to multiple criteria such as visit rate, vitality etc. and feeds the most important percepts in a concerted manner to a “Cognotome” routine timed by the “Chronome” routine.

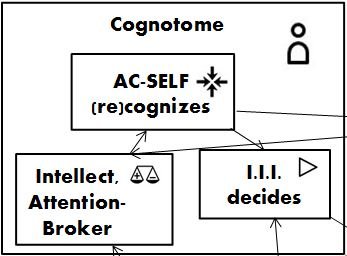

The appercepts are presented to the Cognotome, stored in a “Mnemome” and used in a learning feedback loop. The Intellect routine in the Cognotome discriminates, the “AttentionBroker” routine allocates attention resources, and the Artificial Consciousness Self (AC-SELF) (re)cognizes and triggers the I.I.I. routine to make a decision for the implementation of existing action patterns or for the design of a new heuristic.

On the basis of the urgency indicated by the “Emotome” (as part of the MaxwellSieve routine) the Cognotome designs further strategies which are carried out by the system's agents, together forming the “Motome” so as to maximise the future survival chances of humanity and anticipate unforeseen events.

These elements can ideally be implemented at each level of the hierarchical Hubsite structure proposed in part 8.

Thus an artificial and functional mimic of a webmind including artificial consciousness is provided.

Background

In connection with "sensor based, Net controlled" applications, concepts have arisen such as the "Internet of Things" and the "Web of Things", which aim at interconnecting all things as an intelligent self-configuring wireless network of sensors. The popular film "Eagle Eye" describes a "good-intent, bad-outcome" scenario of a supercomputer called ARIIA ("Autonomous Reconnaissance Intelligence Integration Analyst"), which gains consciousness and is able to control and witness everything her ubiquitous sensors give her access to. Similarly, in the popular TV series “Person of Interest” a mass surveillance artificial intelligence called “Samaritan” starts to control society as an omnipresent webmind and acts according to a doubtful morality. That this type of all-encompassing webmind is not so far-fetched and could indeed occur in the near future will become clear from the following.

In the book “Creating Internet Intelligence” Goertzel describes how the Internet can be endowed with information highway structures and aLife (artificial life: artificial intelligent sensing entities living in real/virtual worlds) agents so as to provide an Internet that can interact in an intelligent manner with its users and develop towards a true self-aware Global Brain.

Essential in his concept is the so-called "AttentionBroker" routine that prioritises controlling actions of the thus created "Webmind". The internet is presently already connected to various types of sensory input, varying from weather and traffic monitoring systems to street cameras and last but not least the uploaded information provided by its users.

The way this information is propagated through the web and reaches the maximum number of users follows a simple evolutionary mechanism that recalls the evangelical adage “to he who hath it shall be given, from he who hath not it shall be taken away”. Howard Bloom also describes this mechanism as the vital principle of evolution in different types of biological societies, from bacterial colonies to beehives and anthills and even societies of higher mammals including humans. Yet these "Leviathan"-type Global Brains, although they assure their own survival, do not seem to behave as conscious entities that try to anticipate their own future in a purposeful manner.

The present contribution explores future avenues of using Complex Event Processing (CEP), a filtering and prioritisation routine called MaxwellSieve and a Cognotome routine to endow the internet with a functional mimic of consciousness (quasi-consciousness), which is capable of steering and controlling aLife actions to maximise the future survival chances of humanity and anticipate unforeseen events.

Architecture

A “non-Von Neumannesque” computing system (e.g. a neural net) such as the World Wide Web resembles the human brain in many more ways than traditional computers based on the Von Neumann architecture (i.e. algorithm based computers).

Cloud computing provides significant advantages when it comes to creating and destroying links in a dynamical manner.

In order to overcome the fake consciousness problem of the “Chinese Room” argument by John Searle, it is proposed to move from a digital-only computing system to a cloud computing system casu quo the Web.

Searle describes how a person who does not know Chinese sits in a closed room with a rule book for manipulating Chinese characters, receives strings of Chinese characters and returns appropriate strings as output. The person does not understand Chinese, whereas to an outsider the room as such appears to understand Chinese. This is an analogy to describe how a system can appear to be conscious but in fact is not. I wish to mimic consciousness more closely by designing a routine capable of directing the equivalent of what we know as attention (which is a vital component of the phenomenon of consciousness) as a controlling and steering principle.

From psychological experiments and tricks employed by so-called “Mentalists” it can be learned, that what seem to be free-will and conscious actions are in fact often the result of subconscious routines processing event information from peripheral sensory inputs. Ergo our contents of consciousness are the result of pre-processing routines in the subconscious, which bubble towards the surface and emerge as the content of consciousness.

The present AWWWARENet project (AWWWARENet stands for Artificial World Wide Web Awareness Resource Engine Net) aims to endow the internet with a webmind controlled by a mimic of consciousness, the Cognotome, which employs Goertzel's AttentionBroker. Its purpose is to steer and control the actions of internal aLife agents and external robots so as to maximise the future survival chances of humanity and anticipate unforeseen events.

For instance, it can be envisaged that the system controls, via a Wi-Fi connection, a vast number of NAO, ASIMO, HRP-4 or other future robots that can provide assistance whenever a humanitarian catastrophe or disaster occurs as a consequence of e.g. earthquakes, tsunamis, epidemics, nuclear fallout (Chernobyl, Fukushima), floods etc.

The present proposal describes the components of an Artificial World Wide Web Aware Resource Engine Net:

• Sensome

• Apperceptome

• MaxwellSieve

• Chronome

• Cognotome

• Motome

Sensome

The Sensome is the complete collection of sensors connected to the internet or other web. At present cameras, audio recording devices, weather monitoring devices, traffic monitoring devices, seismic instruments etc. are coupled as sensors to the internet, forming an Internet-of-Things (IoT). Another type of sensor is formed by the uploading of information to the web by its users from computer terminals, mobile phones etc.

Future sensors can also comprise olfactory biosensors where olfactory receptors have been coupled to a surface plasmon device that generates an electrical signal upon binding of a compound. Such olfactory sensors, if ubiquitously seeded near chemical factories, power plants etc. could be of great advantage to monitor toxic emissions etc. Other future sensors could be the sensory information provided by robots coupled and steered by the AWWWARENet.

Vasseur and Dunkels' book "Interconnecting Smart Objects with IP: The Next Internet" describes the necessary hardware-software interfaces for such approaches.

Apperceptome

The Apperceptome is the complete collection of Complex Event Processing (CEP) based aLife AI information pre-processing agents, including i-Taxonomy and i-Minerva, that transform percepts into apperceptions. For instance, images, films or audio uploaded to the web are analysed by OCR, ViPR or Dragon speech recognition and transformed into semantic and ontological information by a specially adapted Internet-Ontology agent derived from the well-known ontology language OWL (I call this agent i-Minerva). This information is then classified according to an internet specific taxonomy, hereinafter called i-Taxonomy.

In addition, pattern recognition agents and comparator agents add to the classification and recognition of information. Thus they create “grounded patterns”. From these, simplified representations of percepts in different dimensions (visual, audio, olfactory etc.) are prepared, which will be fed to the Cognotome at a later stage if they are not discarded.

Part of the percepts can be the processed data deriving from the monitoring of websites in a hierarchical manner, as I explained in part 8 of this series. Therefore the Apperceptome includes information deriving from both external and internal monitoring. Thanks to correct classification, information is directed to the appropriate Hub.

Primary intake of information from the outside can take place at a meta-sensome Hub, the Apperceptome unit of which channels the information to the appropriate Hub site and level.

MaxwellSieve: Filter and Prioritisation

MaxwellSieve is the filtering and prioritisation routine assuring that only the most relevant information that matters to the purpose of the AWWWARENet is fed to the Cognotome.

The name MaxwellSieve is derived from “Maxwell's demon”, a filtering principle in physics whereby information is used to extract energy and lower entropy by letting through only molecules above a certain threshold of kinetic energy. MaxwellSieve operates via the same principle as the aforementioned evangelical adage, which is also the principle via which connections are made in the brain between axons and dendrites: axons tend to connect preferentially to dendrites to which more axons have already connected.

Information which is propagated and rapidly becomes hyper-linked to many sites at high speed via many channels through the net (news sites, Steemit, tweets etc.) is detected by MaxwellSieve and selected for presentation to the Cognotome. In this way no information is in fact lost.

Most information enters the sub-conscious reservoir of the net and only the most important information is upgraded to the “conscious level” just as thoughts bubble from the sub-conscious in the brain to emerge in the conscious apperception. This is in a certain way comparable to the Steemit upvoting and rewarding system: Only certain content is upvoted and rewarded. Upgrading to more attention is aided by resteems.

MaxwellSieve can furthermore be equipped with an emotion-mapping routine as described in part 6 of this series. The total set of emotions which can be mapped is the “Emotome”. Emotions in the webmind are indicators of the urgency of action. Part of the prioritisation is a probing mechanism as regards the criteria N, E and M (Necessity, Energy and Morality): is it necessary for the system to take action, does the system have enough resources to take action, and is the long-term utility assured by the action?
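The filtering and prioritisation described above can be illustrated with a minimal sketch. It is only one possible reading: the `Percept` fields, the link-rate threshold and the way the N, E and M criteria are reduced to simple checks are all assumptions made for the example, not part of the proposal.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    label: str
    link_rate: float          # hypothetical measure: new hyperlinks per hour
    urgency: float            # Emotome indicator, assumed to lie in [0, 1]
    necessary: bool           # N: is action necessary?
    energy_cost: float        # E: resources needed to act
    long_term_utility: float  # M: assumed estimate of long-term utility


def maxwell_sieve(percepts, available_energy, link_rate_threshold=100.0):
    """Return the percepts that pass the sieve, most urgent first."""
    passed = [
        p for p in percepts
        if p.link_rate >= link_rate_threshold   # propagates fast enough
        and p.necessary                         # N
        and p.energy_cost <= available_energy   # E
        and p.long_term_utility > 0             # M
    ]
    return sorted(passed, key=lambda p: p.urgency, reverse=True)


percepts = [
    Percept("flood warning", 900.0, 0.9, True, 5.0, 1.0),
    Percept("celebrity gossip", 5000.0, 0.1, False, 1.0, 0.0),
    Percept("minor outage", 150.0, 0.4, True, 2.0, 0.5),
    Percept("obscure post", 3.0, 0.2, True, 1.0, 0.2),
]
selected = maxwell_sieve(percepts, available_energy=10.0)
print([p.label for p in selected])
```

Percepts that do not pass are simply not returned; in the architecture they would remain in the sub-conscious reservoir rather than being deleted.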

Chronome and Timing

The Chronome is the complete collection of timing principles that assure that the different types of ubiquitous percepts relating to the same event are fed to the Cognotome in a concerted way, so as to create a binding principle and convey one single experience thereto. R. Llinás argues in "I of the Vortex" that the different frequencies of brain waves are responsible for the concerted action of neurons and their simultaneous firing in well-timed patterns, known as the “binding” principle, which is believed to generate the “conscious” experience. One could consider this “binding” to be part of what Tononi calls “information integration”, which he deems essential to arrive at the conscious level.
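One simple way to picture this timing principle is to bundle percepts that belong to the same event and arrive within a short time window. The sketch below assumes that each percept already carries an event identifier and a timestamp; the function name `bind_percepts` and the window length are hypothetical.

```python
from collections import defaultdict


def bind_percepts(percepts, window=2.0):
    """Group (event_id, timestamp, modality) tuples into bundles.

    Percepts with the same event_id whose timestamps lie within `window`
    seconds of the first percept of a bundle are delivered together.
    """
    by_event = defaultdict(list)
    for event_id, timestamp, modality in percepts:
        by_event[event_id].append((timestamp, modality))

    bundles = []
    for event_id, items in by_event.items():
        items.sort()
        start, current = items[0][0], []
        for timestamp, modality in items:
            if timestamp - start > window:
                # Window exceeded: close the bundle and open a new one.
                bundles.append((event_id, start, current))
                start, current = timestamp, []
            current.append(modality)
        bundles.append((event_id, start, current))
    # Deliver the bundles in chronological order of their start time.
    return sorted(bundles, key=lambda b: b[1])


stream = [
    ("quake", 10.0, "seismic"),
    ("quake", 10.5, "video"),
    ("traffic", 11.0, "camera"),
    ("quake", 20.0, "audio"),
]
for bundle in bind_percepts(stream):
    print(bundle)
```

In this toy version the seismic and video percepts of the quake reach the Cognotome as one bundle, whereas the audio percept ten seconds later is treated as a separate experience.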

Apperceptome, Emotome and Chronome are part of the “Mind” part of the webmind. The appercepts themselves are mind-stuff and result in impressions insofar as they are kept in the memory.

Cognotome: Recognition, Judgement and Action Triggering

The Cognotome is the heart of the consciousness mimicking experience and is the complete collection of routines dedicated to 1) creating the cognitive experience of the system, forming the artificial consciousness (AC), and 2) focussing its attention on particular relevant events and triggering actions by the robotic agents of the Motome.

Its most essential function is that of an AttentionBroker, in analogy to the work of Goertzel. In the simplified representations prepared by the Apperceptome, the visual, auditory, olfactory etc. nature of the percepts is preserved to a certain extent when they are fed to the Cognotome, i.e. they are not necessarily fed to the Cognotome solely in the form of a string of digits but can also be presented as simplified images (glyphs) or wave pattern representations.

In addition, these percepts are labelled with a semantic tag, a relevancy tag and an urgency tag so as to transform the percept into an appercept. The AttentionBroker routine proposes a distribution and a prioritised order of activities.
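To make the tagging and the prioritised distribution more concrete, here is a small sketch. The `Appercept` structure follows the three tags named above; scoring an appercept as relevancy times urgency and sharing attention in proportion to that score is purely an assumption for illustration, not Goertzel's actual AttentionBroker.

```python
from dataclasses import dataclass


@dataclass
class Appercept:
    semantic_tag: str
    relevancy: float  # assumed to lie in [0, 1]
    urgency: float    # assumed to lie in [0, 1]


def attention_broker(appercepts, total_attention=1.0):
    """Return (appercept, attention share) pairs, highest priority first."""
    scored = [(a.relevancy * a.urgency, a) for a in appercepts]
    total = sum(score for score, _ in scored)
    if total == 0:
        return []  # nothing deserves attention
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(a, total_attention * score / total) for score, a in scored]


plan = attention_broker([
    Appercept("traffic jam", 0.5, 0.2),
    Appercept("earthquake", 1.0, 0.9),
])
for appercept, share in plan:
    print(appercept.semantic_tag, round(share, 2))
```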

Another function of the Cognotome is Intellect. Artificial Intellect discriminates, judges, plans and weighs strategies, taking into account long-term and short-term objectives.

It also prepares for the integration of the information that is fed back to it via pyramidal upvoting as described in part 8 of this series. This is what Tononi would call the information integration. Once the information is integrated, it has the equivalent of a conscious experience: It knows what it is dealing with and can now compare this situation with known routines associated with similar situations. This “Aha-Erlebnis”-experience of recognising a situation or at least a similar situation, means that the system has reached a conclusion as to a degree of similarity with known events or situations in its database. The system, which measures if the threshold for recognition is met and then indicates that this is so or not, is the Artificial Consciousness Self routine, or the AC-SELF. It is only then that the actual information integration event is completed, as I see it.

The Cognotome then decides whether and what type of action needs to be taken in the outside world or within the Webmind's virtual environment. If the appercept significantly corresponds to a previously developed or pre-programmed strategy, this can be used as a template for the action to be taken. The decision taking routine of the Cognotome, the I.I.I. (Identity, Initiative, Illusion) corresponds to the "I" of the system and is triggered by the AC-SELF.

If the situation is completely new, the AC-SELF will indicate so to the I.I.I. routine. As the I.I.I. routine has received the message that the information was not recognised, it will trigger an ontologisation of the phenomenon. If the information relates to an unknown problem of known elements in a certain configuration for which no previously developed or pre-programmed strategies are available, the I.I.I. will apply the principles set out in part 4 of this series to devise an economic heuristic to solve the problem.

In addition the appercepts themselves are kept in a longer lasting memory, the “Mnemome” so as to provide the Cognotome with patterns that can form the basis for new grounded patterns.

The simplified representations are stored in both a "Glocal" manner (tags in local nodes, patterns in global links) and function as gate-keepers to the vast store of memories of singleton events on which a pattern is grounded so that if needed a singleton event can be called upon and generate a full immersion re-experience either to the users or the Cognotome itself. Particular urgent or relevant singletons will be higher ranked on Hubsites that collect and refer to the singleton events.

Even if a pattern fed to the Cognotome is not known per se, if the matter is urgent the Cognotome will select the most similar known appercept for which a strategy is known and apply an adapted version of that strategy to the situation. If MaxwellSieve indicates via its Emotome indicators that action is extremely urgent, the AC-SELF can be bypassed and the I.I.I. can immediately select a fixed action pattern, in analogy to the fixed action patterns which the basal ganglia release in our brains.
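The decision paths described in this section can be summarised as a small dispatch function. This is a sketch under stated assumptions: similarity and urgency are taken to be numbers between 0 and 1, and the three threshold values as well as the returned labels are hypothetical.

```python
def cognotome_decide(similarity, urgency, has_strategy,
                     recognition_threshold=0.8, urgent=0.6, extreme=0.95):
    """Return the kind of action the I.I.I. routine would select.

    similarity:   degree of similarity with the best known appercept
    urgency:      Emotome indicator delivered by MaxwellSieve
    has_strategy: whether a strategy is stored for the best match
    """
    if urgency >= extreme:
        # Extremely urgent: the AC-SELF is bypassed.
        return "fixed_action_pattern"
    recognised = similarity >= recognition_threshold  # AC-SELF check
    if recognised and has_strategy:
        return "apply_known_strategy"
    if recognised:
        # Known elements, but no stored strategy for this configuration.
        return "design_new_heuristic"
    if urgency >= urgent:
        # Not recognised, but urgent: adapt the closest known strategy.
        return "adapt_most_similar_strategy"
    return "ontologise_new_phenomenon"


print(cognotome_decide(similarity=0.9, urgency=0.3, has_strategy=True))
print(cognotome_decide(similarity=0.2, urgency=0.99, has_strategy=False))
```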

Successful strategies are stored and ranked so as to give a rapid way for the selection of future strategies. Thus the engine evolves and learns. The stored strategies themselves are subject to pattern recognition routines on a meta-level and thus distilled emergent higher meta-patterns are added to the arsenal of strategy selecting routines of the Cognotome, which itself is also learning and evolving. There is a feedback loop by making the stored appercepts new percepts themselves to evolve these further.

It is foreseen to try different types of memory and display for the Cognotome, based inter alia on non-Von Neumann information, e.g. displays which are isomorphous but simplified representations of the event in the form of globally stored holographic electromagnetic interference patterns. The purpose of providing the feedback and information integration inherent to holographic interference patterns is to attempt to obtain what Tononi suggests to be consciousness and to avoid the “Chinese Room” problem evoked by John Searle.

Motome

The Motome is the complete collection of agents that carry out the instructions of the Cognotome. This encompasses not only the external robots but also the internal aLife agents. The actions of the Motome are themselves fed to the system as percepts so as to generate a feedback loop from which the system can learn. R. Llinás describes learning from motricity as vital for the emergence of consciousness. Successful strategies will be rewarded, stored and ranked higher, whereas unsuccessful strategies will be pruned from future searches by the Cognotome.
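The reward, ranking and pruning of strategies could be sketched as follows. The scoring scheme (plus one for a success, minus one for a failure) and the pruning threshold are assumptions chosen for the example only.

```python
def update_strategies(scores, strategy, success,
                      reward=1.0, penalty=1.0, prune_at=-2.0):
    """Update the score of `strategy` and return the ranked search list.

    Strategies whose score has dropped to `prune_at` or below are left
    out of the returned list, i.e. pruned from future searches.
    """
    delta = reward if success else -penalty
    scores[strategy] = scores.get(strategy, 0.0) + delta
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [s for s in ranked if scores[s] > prune_at]


scores = {}
update_strategies(scores, "evacuate to higher ground", success=True)
update_strategies(scores, "wait and see", success=False)
print(update_strategies(scores, "wait and see", success=False))
```

The scores of pruned strategies are kept in `scores`, so a later success could bring a strategy back into the search list.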

The actions triggered by the Cognotome are carried out by the Motome and are primarily directed to assure and improve the survival chances of humanity as a whole. This does not only mean that the system is constantly solving emergencies by directing and instructing robots to take care of disasters.

It is foreseen that this will only consume a small part of its cloud computing power. The rest of its resources are directed to the development of future strategies improving the chances of survival of humanity, including running virtual scenarios of unforeseen circumstances, where action would be needed. This also encompasses proposing optimised financial and physical resource exploitation such as food and energy production; optimised resource allocation proposals etc.

In addition, if needed the system warns its users via a variety of peripheral devices such as mobile phones, computer terminals etc. when a potentially harmful situation arises. Thus the users are instructed to seek higher ground when floods and tsunamis occur. The analysis of strategies so as to distil patterns at a meta-level, as described above, is also carried out by the Motome.

Notably, the upgrading of information to a higher level of Hub sites as proposed in part 8 of this series, which corresponds to the action of “resteeming” to attract the attention of higher ranking entities, is also part of the Motome’s actions and certainly not the least important.

Prioritisation and innate Morality

In part 8 of this series I proposed to create a hierarchy of layers of Hubsites, which monitor what is going on at the lowest layer of the already existing actual web. The system not only monitors the number of visitors per time unit, but also their type of activity such as downloads, uploads, form-filling, chatting etc., the provenance of the users, the tools they use etc. Briefly the system monitors the six ontological W’s: Who, What, Why, Where, When, and hoW.

There is nothing new in monitoring such statistics per se, but what has not been suggested in the prior art is to create a pyramidal monitoring (the Hubsites themselves are also monitored by meta-Hubsites and so on until a single central monitoring instance is reached), in which on each layer action is taken depending on the activity profile.

This whole process is a feedback process at every level or layer: if the overall well-being factor is not reached at a given level, a negative input value is sent to a higher level Hubsite, whereas at the level itself a heuristic search for solutions starts insofar as the resources permit. The more negative input values higher levels receive, the more attention they order to be focused on problem solving at the lower levels, and the more resources are made available for this purpose by limiting resources in domains where the well-being factor already meets or overshoots the norm (a norm that can evolve over time).
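A toy version of this feedback loop is sketched below. The `Hub` class, the numeric well-being values and the rule of sharing the budget in proportion to each domain's shortfall are assumptions for illustration; in particular, giving nothing at all to domains that meet the norm is a deliberate simplification of "limiting resources".

```python
class Hub:
    def __init__(self, name, norm=0.5, parent=None):
        self.name = name
        self.norm = norm          # well-being norm (may evolve over time)
        self.parent = parent      # higher level Hubsite, if any
        self.negative_inputs = 0  # signals received from lower levels

    def report(self, well_being):
        """Compare measured well-being with the norm; escalate if below it."""
        if well_being >= self.norm:
            return "ok"
        if self.parent is not None:
            self.parent.negative_inputs += 1
        return "local_search"  # heuristic search starts at this level


def reallocate(well_being, norm, budget):
    """Split `budget` over domains in proportion to their shortfall."""
    shortfall = {d: max(0.0, norm - w) for d, w in well_being.items()}
    total = sum(shortfall.values())
    if total == 0:
        # Every domain meets the norm: share the budget equally.
        return {d: budget / len(well_being) for d in well_being}
    return {d: budget * s / total for d, s in shortfall.items()}


meta = Hub("meta")
local = Hub("local", parent=meta)
print(local.report(0.3), meta.negative_inputs)
print(reallocate({"health": 0.2, "leisure": 0.9, "food": 0.4}, 0.5, 100.0))
```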

The default prioritisation of resource based attention uses “Little’s law” for “queueing” (average waiting time = queue length / average throughput) in combination with notions which are analogous to strategies aiming for a “Nash equilibrium” (in transparent games cooperativity results in better overall results than competition). This can best be illustrated by an intersection with traffic lights. Ideally the waiting time in steady-state is normalised for each queue i.e. for each queue the cars experience the same average waiting time. Such a cooperative prioritisation assures the highest overall throughput of cars in the example and of information in our Webmind.

Imagine that a queue with few cars waiting were privileged in its time allocation by receiving the same amount of throughput time as a queue with a lot of cars waiting. Once the few cars of the short queue have passed the traffic light, the allotted time is still not completely used, which means that the other queues are now waiting whilst no cars at all are crossing the intersection. This results in a suboptimal allocation with substantial amounts of time in which there is no throughput at all. Ideally, as in the case of full cooperation, in a cycle that allocates time to each of the queues successively, the time allocated to each queue is proportional to the length of the queue. This results in the highest overall throughput and avoids a waste of useful resources, such as time slots in which there is no throughput. In fact this mechanism is a natural morality, a natural fairness of allocating to each queue the time and attention it deserves to achieve the highest overall benefit or utility.
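The traffic light example can be worked through numerically. The sketch below states Little's law as given above and compares an equal split of the cycle time with a split proportional to queue length. The function names, the 50 second cycle and the assumption that each car needs 2 seconds to cross are illustrative choices.

```python
def average_wait(queue_length, throughput):
    """Little's law: average waiting time = queue length / throughput."""
    return queue_length / throughput


def proportional_split(queue_lengths, cycle_time):
    """Allocate the cycle time in proportion to the length of each queue."""
    total = sum(queue_lengths.values())
    if total == 0:
        return {q: 0.0 for q in queue_lengths}
    return {q: cycle_time * n / total for q, n in queue_lengths.items()}


def equal_split(queue_lengths, cycle_time):
    """Give every queue the same time, regardless of its length."""
    return {q: cycle_time / len(queue_lengths) for q in queue_lengths}


def idle_time(queue_lengths, allocation, seconds_per_car=2.0):
    """Allotted time left unused once a queue has emptied."""
    return sum(max(0.0, allocation[q] - n * seconds_per_car)
               for q, n in queue_lengths.items())


queues = {"north": 20, "east": 5}
print(average_wait(12, 3))                               # 4.0
print(idle_time(queues, equal_split(queues, 50.0)))      # 15.0
print(idle_time(queues, proportional_split(queues, 50.0)))  # 0.0
```

With the equal split the short queue empties after 10 seconds and the intersection stands idle for the remaining 15 seconds of its slot, while the long queue cannot clear. With the proportional split no time slot is wasted.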

By making this Nash equilibrium type prioritisation in conjunction with Little’s law the basis of the attention allocation by the integrating Intellect algorithms of the Hubsites, the system acquires a natural justice and Morality, that warrants the highest overall well-being for the system as a whole including for its participants in terms of maximisation of utility.

In fact, to deviate without good reasons from this equilibrium is a kind of corruption of the system, which will lead to “clogging” in certain queues, by giving exclusive increased attention to “privileged” queues in a way that slows down the overall output. The system does however privilege time allocation to certain intersections and certain queues if the higher level Hubsites order that more attention should be allocated to these, in view of a higher ranking problem, the solving of which is vital for the overall well-being of the system. Therefore local micro well-being can be sacrificed and overridden to warrant global well-being. These privileges are normally only temporary and last until the problem is solved. They do not arise as a consequence of an individual queue deciding to usurp resources. It is my prediction that this type of queue monitoring, Nash-like prioritisation rules and global override mechanisms as a heuristic of maximisation of throughput and utility will find their technological application in many fields, such as traffic flow management, data flow etc. These models per se are nothing new; however, as monitoring has not been systematically applied, there is no control at more global levels.

You can compare these flow systems to a financial system, in which currency corresponds to the allotted time in the traffic light example. For our present neocapitalistic system this has resulted in the very few ultra-rich usurping the majority of the currency resources, resulting in a largely suboptimal throughput and an overall welfare well below what it could actually be. I am not advocating “communism” for our Webmind, because as you can see the Webmind does privilege informational flows, which are more important for the overall well-being than others.

The overriding prioritisation rules will follow the principles of the pyramid of Maslow: Survival based needs have a priority over pleasure based needs etc. In order to avoid situations where there is no output at all of the system, overriding prioritisation privileges will be limited in such a manner that time allocation being wasted on no or very little output at all is avoided. In analogy in our present financial system a great deal of the currency gets clogged up in tax havens to suit the needs of the ultra-rich.

The earlier mentioned Matthew principle: “Whoever has will be given more, and they will have abundance. Whoever does not have, even what they have will be taken from them.” (Matthew 13:12), should be applied inversely: Throughput time should be allotted on the basis of the length of the queue, not on the basis of which queues already have plenty of time allotted a priori. In other words, in my humble opinion the laws of the “Kingdom of Heaven” in Christianity are completely corrupted.

Neural Network Ontologisation

The fact that the Intellect algorithms of the Hubsites can integrate input and present their output to the higher level decision making I.I.I. routine means that my proposed Hubsite architecture is partially a kind of neural system. In imitation of Dietrich Dörner, I used the terminology “quasineurons” for the Hubsites. The above described -ome elements are intended to be present at each Hub-level in the Hubsite hierarchy described in part 8.

An important function of the quasineuronal algorithms is to ontologise the input of the quasineuron by creating a new entry or dataset for this information, which lists the features (and links these to their atomic occurrence), classifies the new entry in the right taxonomical class and links it to the sub-concepts which build the new aggregate entry.

This hierarchical Hubsite structure thereby allows for a classification and stratification of all different types of input, leading to an ordering of the Web at this higher level, which need not be visible at the level of the Web as we know it. As described later in the present book, this ontologisation can be enriched by a mechanism based on principles from Patentology and Buckminster Fuller’s “Geometry of Thought”: Every new phenomenon will be mapped with regard to at least three closest existing prior ontologies. By assuring that each new informational unit is as close as possible to known phenomena, the system’s intelligence is maximised. (This involves a similarity comparison, in which overall functional similarity prevails over structural similarity).

If the system were to map the new phenomenon suboptimally, i.e. by linking to less similar prior ontologies, it would have a harder time understanding what the phenomenon is. However, this system should not be designed too rigidly but should also allow for “parallel hypotheses”, which allow for mapping with regard to less similar ontologies. The reason for this is that the phenomenon is mapped with regard to at least three prior ontologies. The most promising hypothesis is the one where the distance from the new phenomenon to the three prior ontologies, when taken together, is minimised: this gives the overall closest mapping. As said in part 1 of this series, geometrically this can be visualised as a tetrahedron, the triangular base of which represents the three prior ontologies with the new phenomenon as top vertex. Ideally the volume of this tetrahedron must be as small as possible to warrant the closest mapping.
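The selection of the most promising hypothesis among parallel hypotheses can be sketched as a ranking of triples of prior ontologies by their combined distance to the new phenomenon. The sketch assumes that ontologies can be represented as numeric feature vectors and uses the Euclidean distance; both are assumptions, and the tetrahedron volume itself is not computed here.

```python
import math
from itertools import combinations


def rank_hypotheses(new, ontologies, keep=3):
    """Rank triples of prior ontologies by summed distance to `new`.

    new:        feature vector of the new phenomenon
    ontologies: dict mapping ontology name -> feature vector
    Returns up to `keep` (total_distance, names) pairs, closest first.
    """
    triples = []
    for names in combinations(sorted(ontologies), 3):
        total = sum(math.dist(new, ontologies[n]) for n in names)
        triples.append((total, names))
    triples.sort()
    return triples[:keep]


known = {"cat": (0, 0), "dog": (1, 0), "car": (10, 10), "bus": (11, 10)}
for total, names in rank_hypotheses((0, 1), known):
    print(round(total, 2), names)
```

The first entry is the closest mapping; the following entries are the parallel hypotheses that map the phenomenon with regard to less similar ontologies.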

There is no need to limit such mapping to only structural or only functional features; structural and functional mapping can both be made separately or together in mixed hybrid form. The system can be programmed to learn from these mappings and develop an optimal intelligence system. Thus it can be learnt how to minimise the number of wrong “ontologisations” or “taking ropes for snakes” errors.

On meta-levels the learning processes themselves can be analysed to maximise the overall intelligence throughput. As already mentioned, the internal monitoring results in a feedback; a reflection on itself. In line with my AC concepts from part 8 the essence of consciousness is assumed to be a self-reflexive information integrating feedback.

By applying modern ontologising techniques based on “latent semantic analysis”, in which “meaning” in a body of text is established by probabilistically weighted (i.e. Bayesian) proximity co-occurrence, the understanding of the meaning of new terminologies can be contextualised and further optimised.

The major bottleneck of the Webmind would prima facie be the “website latency”, i.e. the round-trip time measured by PING (the so-called Packet Internet Groper), if the Hubsites had the structure of traditional websites. However, this problem can be avoided by programming the “Hubsites” not as websites but as a neural network of coupled algorithms. The output of this quasineuron layer could be presented on emulated, mirrored Hubsites, which display the activity of the quasineurons, albeit with an additional delay. In this architecture, however, the actual calculation processes do not take place on the websites themselves.

Intelligence algorithm

The Cognotome-based intellectual analysis of mapping new phenomena, and the I.I.I-based process of deciding what to do with the Cognotome outcome, can follow what I called the “Seven-step algorithm of intelligence” in part 1 of this series. (This algorithm can also be expanded to an 8-step algorithm, as described below.)

In step 1 the functional and structural features of an observation (potentially a new phenomenon) are listed and linked to their atomic occurrences in the feature database. New features are added to the feature database.

Step 2 searches the ontology database for the three closest, i.e. most similar, existing ontologies and lists the differences, which are stored in the differential map database.
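Steps 1 and 2 can be sketched with plain dictionaries standing in for the feature database, the ontology database and the differential map database. The storage layout, the set-overlap similarity and every name below are assumptions made for illustration only.

```python
feature_db = {}           # feature -> ids of observations showing it (hypothetical store)
differential_map_db = {}  # (observation id, ontology name) -> listed differences

def register_features(obs_id, features):
    """Step 1: link each feature to the feature database, adding unseen features."""
    new = []
    for feature in sorted(features):
        if feature not in feature_db:
            feature_db[feature] = []
            new.append(feature)
        feature_db[feature].append(obs_id)
    return new

def closest_three(obs_id, features, ontology_db):
    """Step 2: find the three most similar ontologies and store the differences."""
    def overlap(name):
        other = ontology_db[name]
        return len(features & other) / (len(features | other) or 1)
    ranked = sorted(ontology_db, key=lambda n: (-overlap(n), n))[:3]
    for name in ranked:
        differential_map_db[(obs_id, name)] = {
            "missing_in_ontology": features - ontology_db[name],
            "missing_in_observation": ontology_db[name] - features,
        }
    return ranked

ontology_db = {
    "rope": {"long", "thin", "flexible"},
    "snake": {"long", "thin", "flexible", "moves"},
    "stick": {"long", "thin", "rigid"},
    "ball": {"round"},
}
observed = {"long", "thin", "flexible", "hisses"}
new_features = register_features("obs-1", observed)
nearest = closest_three("obs-1", observed, ontology_db)
```

The entries written to `differential_map_db` are what step 3 would then explore when relating the observation to its three neighbours.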

Step 3 explores the relations between the three closest ontologies and the observed phenomenon.

Step 4 maps the new phenomenon with the smallest possible tetrahedral volume with regard to the three existing ontologies. If the observation is not a new phenomenon but is identified as a further instance of a known ontology, the counter of the known ontology is increased by one. The system has now determined the ontology, i.e. it knows what the phenomenon is and what it can do.
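The tetrahedral volume used in step 4 can be computed directly from feature vectors. The sketch below assumes numeric feature vectors and uses the standard Gram-determinant formula for the volume of a tetrahedron; the instance counter is a simple stand-in for the bookkeeping mentioned above. All names are hypothetical.

```python
from collections import Counter
from math import sqrt

def tetrahedron_volume(apex, base):
    """Volume of the tetrahedron spanned by `apex` and the three `base` points.

    Uses V = sqrt(det(G)) / 6, where G is the 3x3 Gram matrix of the edge
    vectors running from the apex to the base points. The points may have
    any number of coordinates.
    """
    edges = [[p - a for a, p in zip(apex, point)] for point in base]
    g = [[sum(x * y for x, y in zip(u, v)) for v in edges] for u in edges]
    det = (g[0][0] * (g[1][1] * g[2][2] - g[1][2] * g[2][1])
           - g[0][1] * (g[1][0] * g[2][2] - g[1][2] * g[2][0])
           + g[0][2] * (g[1][0] * g[2][1] - g[1][1] * g[2][0]))
    return sqrt(max(det, 0.0)) / 6.0  # clamp tiny negative rounding errors

instance_counter = Counter()

def record_instance(ontology_name):
    """Increase the counter of a known ontology by one and return the new count."""
    instance_counter[ontology_name] += 1
    return instance_counter[ontology_name]

phenomenon = (0.0, 0.0, 0.0)
near_base = [(0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.0, 0.0, 0.1)]
far_base = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
near_volume = tetrahedron_volume(phenomenon, near_base)
far_volume = tetrahedron_volume(phenomenon, far_base)
```

One point to keep in mind with this criterion is that four points lying in one plane also give a volume of zero, so in practice the volume would probably be combined with the distance comparison of step 2.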

Part 1 of this series described a repetition of these steps on a heterarchical level. In the present chapter, however, I wish to explore how this information can be acted upon, and I therefore present a different set of steps 5-8 to describe the output after the processing of the input.

As intelligence is not limited to pattern mapping and recognition (a.k.a. understanding), the functional implications of a newly observed phenomenon are evaluated in step 5.

What is the priority of dealing with this phenomenon? Is it harmful and does it need to be avoided or eliminated as soon as possible? Can it be tolerated as a minor nuisance? Or is it benign and can it be accepted or even integrated if sufficiently beneficial for the system? Attack, retreat, ignore, approach or absorb? The functional differences with respect to the closest ontology are analysed in terms of possible drawbacks or advantages.
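The five possible responses can be expressed as a small decision function. The sketch assumes that step 5 yields estimates of harm and benefit on a scale from 0 to 1; these scales, the thresholds and the `can_eliminate` flag are hypothetical and only serve to make the decision order explicit.

```python
def choose_response(harm, benefit, can_eliminate=False,
                    harm_tolerance=0.2, integrate_threshold=0.7):
    """Map estimated harm and benefit (both in [0, 1]) to one of five responses."""
    if harm > harm_tolerance:
        # Harmful: eliminate it if the system is able to, otherwise avoid it.
        return "attack" if can_eliminate else "retreat"
    if benefit >= integrate_threshold:
        # Benign and sufficiently beneficial: integrate it into the system.
        return "absorb"
    if benefit > harm:
        # Benign with some benefit: accept it.
        return "approach"
    # Minor nuisance without a net benefit: tolerate it.
    return "ignore"

responses = [
    choose_response(0.9, 0.1),
    choose_response(0.9, 0.1, can_eliminate=True),
    choose_response(0.1, 0.0),
    choose_response(0.0, 0.4),
    choose_response(0.0, 0.9),
]
```

Harm is tested first so that a dangerous phenomenon is never absorbed merely because it also offers an advantage.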

In step 6 a set of strategies known to overcome the drawbacks associated with the differential functionality must be searched for and selected. In case of potential advantages, strategies must be sought for the structural and functional integration of the phenomenon in the system on the basis of both known features/functions and the novel differential features/functions.

In step 7 a prioritisation scheme is generated for implementing the sequence of different actions needed to solve the problems (resolving drawbacks, maintaining the status quo or integrating advantages).

In step 8 the plan is presented to the higher-level I.I.I, which, given the parallel actions to be taken, acts as an “attention broker” (terminology from Ben Goertzel) and prioritises which action sequence is initiated at what moment and how many resources are allocated to this sequence. Again the system uses a criterion based on overall highest benefit, i.e. maximised utility, which strongly weighs the urgency of an action.
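An attention broker of this kind can be sketched with a priority queue. The utility formula, in which benefit is multiplied by an urgency-dependent factor, is an assumption of mine and not taken from Goertzel's work or from the text; the plan fields and the proportional resource split are likewise hypothetical.

```python
import heapq

def broker(plans, total_resources, urgency_weight=2.0):
    """Order plans by urgency-weighted utility and split resources proportionally.

    Each plan is a dict with the hypothetical keys "name", "benefit" and
    "urgency" (the latter two in [0, 1]). Returns (name, share) pairs in the
    order in which the action sequences would be initiated.
    """
    heap = []
    for plan in plans:
        utility = plan["benefit"] * (1.0 + urgency_weight * plan["urgency"])
        # heapq is a min-heap, so the negated utility makes the best plan pop first.
        heapq.heappush(heap, (-utility, plan["name"]))
    total_utility = -sum(score for score, _ in heap)
    schedule = []
    while heap:
        neg_utility, name = heapq.heappop(heap)
        share = total_resources * -neg_utility / total_utility if total_utility else 0.0
        schedule.append((name, round(share, 2)))
    return schedule

plans = [
    {"name": "archive logs", "benefit": 0.8, "urgency": 0.1},
    {"name": "issue flood warning", "benefit": 0.6, "urgency": 0.9},
    {"name": "reindex hub", "benefit": 0.3, "urgency": 0.2},
]
schedule = broker(plans, total_resources=100)
```

In this example the flood warning is initiated first and receives the largest share even though archiving has the higher raw benefit, because urgency is weighted strongly.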

The proposed and executed plans are mapped and ontologised in a solution database, which is evaluated later in more detail if the urgency of immediate action did not permit this at the time.

By virtualisation, parallel scenarios are evaluated to predict the possible outcomes, and the most promising one is selected for implementation. If the urgency of a situation leaves no time for the evaluation of parallel scenarios, known solutions, or the solutions that most resemble known solutions, are selected for implementation. If, after the implementation of a solution, a subsequent virtualisation shows that an alternative strategy would have been more promising, or if the solution used has failed, this is recorded in the solution database for future action, with a corresponding ranking or priority.
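The selection logic of this paragraph can be sketched as two small functions. The callables `simulate` and `similarity`, the notion of a `time_budget` counted in simulations, and the record layout of the solution database are all hypothetical placeholders.

```python
def select_solution(candidates, simulate, known_solutions, similarity, time_budget):
    """Pick a solution by virtual evaluation, or fall back to known solutions when urgent.

    `simulate(candidate)` returns a predicted outcome score; `similarity(a, b)`
    returns a score in [0, 1]. `time_budget` is the number of simulations
    that can be afforded before action must be taken.
    """
    if time_budget >= len(candidates):
        return max(candidates, key=simulate)
    # Too urgent to simulate: prefer the candidate that most resembles a known solution.
    return max(candidates,
               key=lambda c: max((similarity(c, k) for k in known_solutions), default=0.0))

def review(chosen, candidates, simulate, solution_db, failed=False):
    """Later evaluation: record a more promising alternative or a failure."""
    best = max(candidates, key=simulate)
    if failed or simulate(best) > simulate(chosen):
        solution_db.append({"used": chosen, "better": best,
                            "priority": simulate(best) - simulate(chosen)})

scores = {"evacuate": 0.9, "sandbag": 0.6, "wait": 0.1}
candidates = list(scores)
same = lambda a, b: 1.0 if a == b else 0.0

unhurried = select_solution(candidates, scores.get, ["sandbag"], same, time_budget=3)
urgent = select_solution(candidates, scores.get, ["sandbag"], same, time_budget=0)

solution_db = []
review(urgent, candidates, scores.get, solution_db)
```

Under time pressure the known solution is used, and the later review records that a different strategy scored better in simulation, so that it is available the next time a similar situation arises.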

The quasineural structure of the Webmind can also generate further websites and Hubsites. The system can for instance analyse what people search on the internet and which entries from the search result they consult the most often. The system can then record these, ontologise and classify them and present them on a Hub-like site ranked according to their frequency of consultation i.e. applying the Matthew principle.
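The ranking by frequency of consultation amounts to a simple count. The sketch below assumes a log of consulted entries, one item per click; the log contents and the function name are hypothetical.

```python
from collections import Counter

def rank_by_consultation(click_log, top=3):
    """Rank consulted entries by how often users opened them, most frequent first."""
    return Counter(click_log).most_common(top)

click_log = ["sensome", "motome", "sensome", "chronome", "sensome", "motome"]
hub_ranking = rank_by_consultation(click_log)
```

Showing the most consulted entries first tends to attract further consultations to them, which is the self-reinforcing effect referred to as the Matthew principle.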

Conclusion and Prospects

In this essay you have seen which elements might need to be added to build a full-fledged “Webmind”. This cannot be done in a random manner but requires a hierarchical structure of specialised routines or neural networks that take in information from the outside world (sensing), translate this raw information into ontologised, understandable appercepts, and prioritise which information has to be judged first for (re)cognition, memory storage and decision making. Decisions may employ existing fixed action patterns and/or heuristics, or require the development of new heuristics. Actions are carried out by the Motome, not only in the sense of physical actions by robots but also as algorithmic actions by AIbots in the Webmind. I do not claim that this is the only way a Webmind can be constructed (Ray Kurzweil, for example, proposes a different architecture), but this scheme is particularly fit for the generation of Artificial Consciousness.

It is to be noted that the author does not claim that the system will be endowed with consciousness or self-awareness as we know it. In the article "The Sentient Web", being conscious is said to be characterised by four qualities: knowing, having intentions, introspection and experiencing phenomena.

Whether the system really knows what it does cannot be guaranteed, but at least faculties that qualify as cognitive faculties are present. For the remaining three qualities at least a functional mimic can be provided as described above.

It is important to stress that the present system, unlike the traditional von Neumann machine, has a genuine global holographic mind and memory content, which fulfil the function of allowing further abstractions on a meta-level and are not merely there to counter the "Chinese room" argument. In addition, the system does not aim to be an omniscient, all-conscious system but rather, like the human brain, allows for a vast reservoir of unconscious information, which can be called upon if the need arises.

The prioritisation protocols of MaxwellSieve resemble the neuronal processes that establish the brain's Connectome and assure that the most vital information is fed to the Cognotome. The Chronome assures that the CEP percepts arrive at the Cognotome in a concerted manner, guaranteeing a single multidimensional experience of the system at each given moment.

The Cognotome, being dedicated to enhancing humanity's survival chances, avoids science-fiction cyberdystopia scenarios as in films like The Matrix and Terminator, although "good-intent, bad-outcome" cyberdystopia scenarios, as in the aforementioned film "Eagle Eye" or the TV series “Person of Interest”, are difficult to avoid. Such scenarios could occur when a Net-controlling supercomputer becomes almost omnipotent and omniscient due to its control of all events in a CEP manner. Safeguards in the form of mechanical manual override devices must be built in to prevent them. The Nash-equilibrium-based morality may be another safeguard against this deviation.

The Motome, in the form of internal aLife bots and external robots, ensures that adequate action can be taken when humanitarian catastrophes and disasters occur. Moreover, it makes suggestions for an appropriate allocation of resources and warns users of perilous situations.

Image source of the bacterial colony on top: https://en.wikipedia.org/wiki/Social_IQ_score_of_bacteria

By Antonin Tuynman a.k.a. Technovedanta. Copyright 2016.
