Is Intelligence an Algorithm? Part 8: Artificial Consciousness

In order to achieve complex goals, which is the purpose of intelligence, a system (living or artificial) must be able to monitor itself in order to verify whether the desired goal has indeed been reached. This faculty of self-monitoring, which makes the system aware of its current status, is usually associated with what we call “consciousness”. In other words, consciousness is an essential and integral aspect of the intelligence algorithm I have been discussing in this series. We will now see if we can build an artificial equivalent of this self-monitoring ability.

Background

Any intelligent system (even an artificial one) has such an ability to a certain extent, but merely verifying whether a criterion is met does not yet make a system conscious, let alone self-conscious. Many scientists hypothesize that if the complexity of a system is high enough, consciousness will emerge spontaneously. It is, however, not the purpose of this article to define what consciousness is, nor do I aim to enter the debate on whether consciousness is an effect that emerges from complexity or an inherent aspect of every energetic system.

If consciousness in living beings arose from complexity, we must realise that it took nature billions of years to come up with that degree of complexity. So if we want to impart whatever consciousness is to an artificial intelligence, we cannot simply wait until a genetic algorithm sorts it out by itself. Eventually it might happen, but we will probably have been dead for millions of years by then. Conway's “Game of Life” is an example in which a certain degree of complexity arises from simple rules after a number of iterations. But this is far from the complexity needed to achieve the level of feedback that results in what the neuroscientist Giulio Tononi calls “integrated information”, which might be the hallmark of consciousness.

This essay will explore in what way such a self-monitoring faculty that integrates any type of information can be built into artificial intelligence systems. It will also explore why it is desirable to do so.

In this essay I will repeat certain notions which I have presented in earlier essays (1) and (2) on Steemit. However, I integrate only part of them here, and enrich them by placing the notions I have suggested for creating artificial consciousness in the context of the intelligence algorithm. The issue of self-absorption by the system will also be discussed.

Webmind

In this essay I will describe ideas to impart a form of self-monitoring to the internet as a computational system. In principle the hierarchical strategy I describe can be implemented for any computational system, but the internet is quite a good starting point since, due to its web-like structure, it has a certain resemblance to the neural networks of the brain. I was inspired to design a means to implement “artificial consciousness” (AC) after reading the book “Creating Internet Intelligence” by Ben Goertzel. This book is, however, silent on AC.

Presently the internet rapidly expands like a growing cancer with very few infrastructural “highways” in terms of search and hub sites such as Google, Yahoo, Bing etc. There is no globally organised infrastructure which could allow the system to become aware of itself.

The first question which arises is why on Earth anybody would want the internet to be aware of itself. The answer is, on the one hand, that an intelligent self-monitoring system can serve your desires more rapidly and can optimise itself to deliver the fastest and best output possible. On the other hand, a self-observing internet system can rapidly intervene wherever the system is abused, e.g. for criminal purposes, or it can immediately intervene in natural disaster situations via its connected robots and other Internet-of-Things (IoT) devices and provide a maximum of resources to solve the problems.

Key to these issues is that the system can monitor what is happening on its inside (i.e. the inside of the web) and on its outside (i.e. the IoT devices and the information they provide). The system thus has an interior experience, which has been associated with the notion of “consciousness” and via which the system becomes aware of events, things, emotions and other sensory input or throughput. This type of Artificial Consciousness may be defined as the process in which sensorial and interior perceptions are fed back to a monitoring self-evaluation, which integrates information and gives the system knowledge about itself and its environment.

The purpose of this self-monitoring could, for instance, be to be of the greatest utility to the greatest number of users possible. This purpose can be further programmed so as to impart a natural morality to the system (see part 9 of this series, which will follow soon).

By creating such a Webmind Artificial Intelligence with an Artificial Consciousness, we may one day be able to transcend ourselves. (I abbreviate Artificial Consciousness as “AC” hereinafter. Whenever I mention Aleister Crowley, I won’t use this abbreviation, although it is very likely he has already been integrated in the AC of the Omega hypercomputer at the end of time, so that Aleister Crowley and Artificial Consciousness nowadays probably mean the same.) Not only could it technologically provide us with welfare beyond our wildest dreams, but we might even be able to upload ourselves to the web, thereby becoming something different from a “human being” and acquiring immortality. The great danger and disadvantage of creating such a system is that it may turn against us, as in the dystopian scenarios of the films “The Matrix”, “Terminator” and “Eagle Eye” or of the TV series “Person of Interest”.

However, if we carefully design the system, so that its purpose to serve the greater good of humanity is inherent in the way its Artificial “quasi-consciousness” is programmed, we might avoid such doom scenarios.

Since development won’t stop anyway and less benign designs of AC might be made, I have attempted to explore ways to create a benign AC. The substrate I propose for this purpose is to be built as an additional layer or set of layers superimposed on the already existing internet.

Abstraction

When discussing consciousness, and especially the engineering of AC, I make the assumption that, in analogy to our human consciousness, the AC cannot be aware of every possible single bit of information at the same time. When we observe our environment, we filter out a lot of superfluous information and we limit ourselves to what we think and assume to be really important and relevant under the circumstances. This means we exclude a tremendous amount of information that is redundant for us. We reduce our raw data perception to essentials in order to become aware of a part of a whole, which we can name. This set of “relevant information” for our consideration is what Buckminster Fuller called the “considerable set”. To reduce to essentials is a process of Abstraction.

Therefore, for the purpose of technically engineering an artificial intelligence which behaves as if it has a consciousness, I assumed that the very process of becoming aware of a given phenomenon is not only a feedback process (of sensorial and interior perceptions to a monitoring evaluation) but also a process of abstraction.

Hierarchy of Hubsites

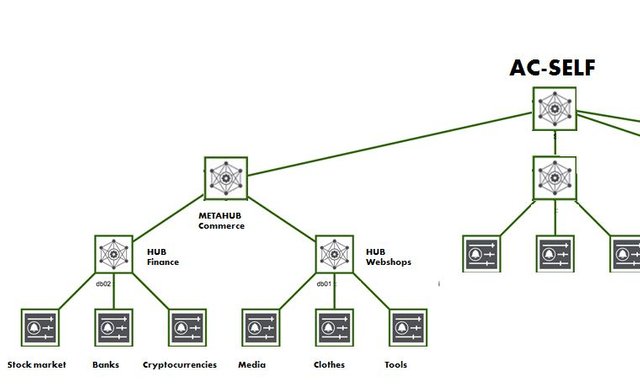

In a future Webmind, hierarchical layers of Hubsites (each dedicated to a specific classification) are monitored by higher level layers, and so on, until the single instance of AC (which I sometimes call “quasiconsciousness”) receives abstracted and condensed information at the top of the pyramid.

What I have in mind comes from my frustration when using search engines such as Google, Yahoo etc. A search for a certain term will yield millions of results, the vast majority of which do not relate in the slightest to the topic you're looking for. Meta-search engines do not get you what you're looking for either. What is missing are vast, well organised meta-hub sites where all information regarding a certain topic is available through a limited set of subcategories, each referring to meta-hub sites on a yet lower aggregation level. There is no ontological classification scheme.

It would mean that all the internet sites will have to be classified according to a well-defined i-taxonomy; something similar to the international patent classification (IPC).

Higher level meta-hubs will have a limited number of subcategories. How limited? So limited that our brains do not get overloaded with information and can immediately pick the right category at a glance.

Let's for convenience say that this figure must not exceed twelve subcategories. For instance a high ranking Hub could have the following categories: Commerce, News/media, Communication (Facebook/Chat-services/Dating sites etc.), Entertainment (Movies/Music/Art/Sex/Games), Lifestyle (Health/beauty/fashion), Search engines, and Science/knowledge (Wikipedia, Google science, Scientific journals etc.).

Image: a representation of a hierarchy of Hubsites. Assembled by myself from an image on https://docs.opennms.org/opennms/branches/develop/guide-user/guide-user.html.

This will redirect users to well organised lower level, but still high ranking, hubs. These are then, as far as possible, organised in the same way as the higher ranking Hub. (Each category could for instance always have the same familiar colour and shape.) For instance a meta-Music Hub will have a Commercial section, with links to sites where you can buy and download instruments, music programmes, mp3 songs and sheet music, and advertise for lessons. It will have a News section announcing the newest bands and the newest releases, with a link to the agenda-of-concerts hub. It will have a communication-music hub, where people can have joint online jam sessions, make music dates and take online lessons, and where musicians can find each other and exchange audio and MIDI tracks. It will have an Entertainment section with access to YouTube and other sites where you can listen to and watch music performances (radio, podcasts), and a Knowledge section with musicology, history of music, freeware to create your own music, tutorials etc. Thus there can be a Chemistry Hub, an Art Hub, a Cosmetics Hub, etc., all organised in the same manner.

What is needed is that every Hub site monitors how much activity is and has been present on the subclass sites it is linked to. Network monitoring tools are already widely available; the technology is there. Such monitoring already exists to a certain extent on e.g. alexa.com. But something is missing: this information is not fed to a higher ranking organ which perceives what information is looked at most at a certain moment.

What I propose is that each higher ranking hub reveals how many of its subdirectories have been and are being consulted. How many sites are actually consulted at a certain moment will give the system insight into what is the most important activity at that moment. If the activity on a hub is above a certain threshold, the hub will feed its monitoring figures to a higher ranking Hub site. Sites dealing with the same topic or search term will get bonuses in an algorithm, whereas less related sites and less frequently used search terms get penalties.
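The threshold idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Hub` class, the visit counts and the threshold value are hypothetical, and the bonus and penalty weighting is left out. It only shows how figures below the threshold never reach the next level.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Hub:
    """A hub site that monitors its sub-hubs (hypothetical structure)."""
    name: str
    own_visits: int = 0  # visits counted directly on this hub
    children: list[Hub] = field(default_factory=list)

    def activity(self, threshold: int) -> int:
        """Own visits plus the activity of children that reach the threshold."""
        total = self.own_visits
        for child in self.children:
            child_activity = child.activity(threshold)
            if child_activity >= threshold:  # below threshold: not perceived
                total += child_activity
        return total

    def report(self, threshold: int) -> dict[str, int]:
        """Figures of the direct children that are passed up to this hub."""
        figures = {c.name: c.activity(threshold) for c in self.children}
        return {n: a for n, a in figures.items() if a >= threshold}


# Usage: only the busy Music hub is perceived at the top level.
music = Hub("Music", children=[Hub("Concerts", 120), Hub("Lessons", 8)])
chemistry = Hub("Chemistry", own_visits=30)
top = Hub("Top", children=[music, chemistry])
print(top.report(threshold=50))
```

With a threshold of 50, the Lessons and Chemistry figures are dropped on the way up, so the top hub only perceives the Music hub.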

To speak in the terms of Howard Bloom, who defends capitalism in the book "The Genius of the Beast" (therein citing yet another author: Jesus, as in the Gospel of Matthew 25:29): "To he who hath it shall be given, from he who hath not it shall be taken away". Information that does not reach a certain threshold will not be presented to a higher level. Those neuronal paths will not be perceived. What will be perceived at the master level are then the most frequently consulted sites or topics at a given moment, which have passed the filter.

Provided that this information is reduced to a limited number of categories at the equivalent of a “master cell level” (terminology from neurology), this will be the input presented to the top-of-the-pyramid perceiving unit, which we may call the "self" or “artificial consciousness” of the system, or the spider-in-the-web (not to be read here in the metaphor of spider as "crawler" as currently used in internet language, but as a new metaphor of the sentient being in the system).

The impulses that the "AC-self" receives can be improved by adding artificial emotion-dimensions to the system. The time people spend on a site, the number of times they flip between pages, the number of search terms used and even an appreciation ranking provided by the users can give an intensity, importance or feel-score to the site. If the average score is multiplied by the number of visits, you get an emotion score or ranking score for the site. If every hub site contains a monitor with different score indicators, which add up to a total score for the site via a mathematical formula, this more qualitative score, rather than the mere "activity-on-site index", could also be part of the determining factor as to what will be filtered in order to be presented to the "self".

Consciousness involves becoming aware of what has been filtered out by our brains. Similarly, artificial consciousness will filter out the most important information, which can be linked to evaluating algorithms that decide whether action needs to be taken in view of the activity monitored.
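As a rough illustration of such a score, here is a minimal Python sketch. The essay does not specify the formula, so the weighted sum, the `WEIGHTS` values and the `Visit` fields are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    seconds_on_site: float
    page_flips: int
    search_terms: int
    user_rating: float  # appreciation given by the visitor, e.g. 0 to 5


# Hypothetical weights: the formula itself is an assumption.
WEIGHTS = {
    "seconds_on_site": 0.01,
    "page_flips": 0.5,
    "search_terms": 0.5,
    "user_rating": 1.0,
}


def feel_score(visit: Visit) -> float:
    """Weighted sum of the score indicators for a single visit."""
    return sum(w * getattr(visit, name) for name, w in WEIGHTS.items())


def emotion_score(visits: list[Visit]) -> float:
    """Average feel-score multiplied by the number of visits."""
    if not visits:
        return 0.0
    average = sum(feel_score(v) for v in visits) / len(visits)
    return average * len(visits)


visits = [Visit(100, 2, 2, 3.0), Visit(300, 4, 0, 5.0)]
print(emotion_score(visits))
```

Note that multiplying the average by the number of visits amounts to summing the per-visit scores, so a site can rank highly either through many moderate visits or through fewer intense ones.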

By such a self-monitoring process of extracting and abstracting the most important information, artificial consciousness can be designed, which is nothing else than an information integration feedback system in line with Tononi’s IIT (Integrated Information Theory of consciousness).

The Hubsites can form a neural network in a different substrate than the web-environment itself, so that they are not hampered by the far too slow website latency or ping time. The Hubsites you see on the Web are then merely “images” of the actual hubsites, refreshed at a much slower rate.

At every level there is an algorithm evaluating the input and there is an algorithm that takes decisions on the basis of that evaluation. It struck me that the websites themselves, as well as the data input coming from the websites and IoT devices, correspond to what we could call the faculty of “Mind”.

The evaluating algorithm corresponds to the Intellect, which weighs the different inputs against an internal standard and integrates the information throughput. This is where the integrated information that Tononi mentions is generated through feedback.

The decision making algorithm corresponds to the Ego or Will. The Ego orders the Intellect to seek within the Mind database for solutions to the potential problem of an overall negative well-being factor, resulting from the integration by the intellect.
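The division of labour between Mind, Intellect and Ego can be sketched in Python as follows. This is only an illustration under stated assumptions: the input names, the values in `STANDARD`, the remedies stored in `MIND` and the way the well-being factor is computed are all hypothetical, and the deviations are not normalised across units.

```python
from typing import Optional

# "Mind": the data store, here a hypothetical lookup from problem to remedy.
MIND = {
    "server_load": "reallocate resources",
    "abuse_reports": "alert moderators",
}

# Internal standard against which the Intellect weighs inputs (assumed values).
STANDARD = {"server_load": 0.7, "abuse_reports": 5.0}


def intellect(inputs: dict[str, float]) -> dict[str, float]:
    """Deviation of each input from the standard; positive means too high."""
    return {name: value - STANDARD[name] for name, value in inputs.items()}


def well_being(deviations: dict[str, float]) -> float:
    """Integrate all deviations into one factor; negative means trouble."""
    return -sum(max(d, 0.0) for d in deviations.values())


def ego(inputs: dict[str, float]) -> Optional[str]:
    """Search the Mind for a remedy only if the well-being factor is negative."""
    deviations = intellect(inputs)
    if well_being(deviations) >= 0:
        return None  # nothing to decide
    worst = max(deviations, key=deviations.get)
    return MIND.get(worst)


print(ego({"server_load": 0.9, "abuse_reports": 2.0}))
```

In this sketch the Ego stays passive as long as no input exceeds its standard, and otherwise asks for the remedy that belongs to the largest deviation.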

The metaphor of a computer for the brain is very fashionable these days, but most neuroscientists disagree with that point of view. Brains do not store and retrieve information from fixed localised memories. Rather, the information processing seems to occur globally in the brain. Therefore, many of them do not believe at all that a “mind” can be created in a traditional computational substrate as suggested by e.g. Ray Kurzweil, the Godfather of the “Singularity” movement. While it may be true that a traditional von Neumann type of digital computer is not a good metaphor for the way the brain functions, the individual neurons do compute by integrating the information arriving from other neurons. The brain may not be a “von Neumann computer”, but it does process input and provide output.

The analysis of various living and inorganic physical systems shows even that every self-supporting (autopoietic) phenomenon is capable of reacting to impulses from the environment.

Therefore every phenomenal system could be considered as both a computational and an informational process: the process takes informational input, somehow calculates what to do by integrating its input (throughput) and finally reacts with a course of action as output. Since matter, information and energy have been argued to be reductively the same, and since all processes involve input-throughput-output, it is perhaps not such a big step to suggest that every system involves a kind of “becoming aware” of an environmental input, which it acts upon, even if this is at a very rudimentary level. Even atoms somehow “sense” their environment. Every system also reacts with a calculated course of action. Thus every system has a degree of intelligence.

It is my hypothesis that this process of becoming aware is a layered abstraction process according to a hierarchical classification system. At every level an integration of information takes place, the outcome of which is fed to a higher level. Thus if information is really important it can reach the highest instance. To speak in Steemit terminology, it is like receiving a lot of votes and being resteemed to higher levels of dolphins and orcas until you reach a whale.

Self-absorption

There is a danger associated with such a system: that the highest level of attention, the AC-Self, gets self-absorbed in one specific task, as indicated in part 7 of this series. It would then no longer pay attention to other problems that also require attention. This problem can be avoided by allowing for a concerted override by the first layer of Hubs below it: if there is consensus among the Hubs at the first level, they can democratically overrule the attention-focus of the AC-Self. This process could also be repeated at other levels: if and only if there is unanimous consensus among all lower ranking hubs, they can override the decision of the higher ranking hub. It is like deposing a president if he goes beyond the limits of his mandate.
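A minimal Python sketch of the unanimous override rule could look as follows. The function name and the representation of each hub's proposal as a plain string are assumptions made for illustration.

```python
def resolve_focus(current_focus: str, proposals: list[str]) -> str:
    """Return the new focus if all lower hubs unanimously propose another topic.

    `proposals` holds one proposed focus per lower ranking hub (hypothetical
    representation). Without unanimity the higher hub keeps its own focus.
    """
    if not proposals:
        return current_focus
    first = proposals[0]
    unanimous = all(p == first for p in proposals)
    if unanimous and first != current_focus:
        return first  # the lower hubs overrule the higher hub
    return current_focus


print(resolve_focus("traffic", ["flood", "flood", "flood"]))
print(resolve_focus("traffic", ["flood", "traffic", "flood"]))
```

A single dissenting hub is enough to leave the current focus in place, which keeps the override a rare corrective rather than a routine vote.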

Conclusion

We have seen how a hierarchical category system of hubsites pyramidally organised under the supervision of an ultimate AC-SELF unit can provide a self-monitoring ability to an artificial mind, which leads to an integration of information. If Giulio Tononi is right, such a feedback mechanism that integrates information can give rise to consciousness. Thus via this system we may have achieved what we would call “artificial consciousness”. It may not be real consciousness, but at least it is a functional equivalent thereof allowing for the intelligent system to maintain control over a vast plethora of simultaneously occurring processes. Where immediate action is needed, attention can be re-allocated to solve an urgent problem.

Prospects

Such a system would first need to be tested in a safe environment shielded from the real internet and having no wireless or DLAN connections to the outside world. If it works, it can slowly be introduced in limited networks such as those of big companies or safety agencies. The danger is that such a system can become an autonomous entity with its own agenda and degenerate into a dangerous controlling system such as “Samaritan” in the series “Person of Interest” and ARIIA in “Eagle Eye”. Therefore the system must first be thoroughly trained to match our standards of morality, if such a thing is possible at all. That such systems will be attempted one day is beyond doubt, and it is therefore necessary to figure out already what can go wrong, as I have presented in the previous post on artificial pathologies.


Image source of the bacterial colony on top: https://en.wikipedia.org/wiki/Social_IQ_score_of_bacteria