Natural Language Processing: Not An Easy Task

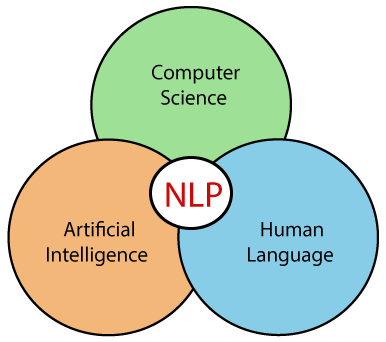

Natural language processing (NLP, also called computational linguistics) is a field at the intersection of computer science, AI and human languages.

NLP proposes computational techniques and models for processing human languages. Its tasks and components touch on cognitive science and even etymology. Because NLP deals with human language, it is a very interesting and important field of study. You might work on child development or purely on the machine learning side. There are numerous possibilities when we talk about NLP.

Think about a case where we want to translate some text from language A to language B. You can represent the text as a sequence of characters and ignore the words, as a sequence of words, or as a combination of characters and words (without any phrases), and in the end produce the result in language B. Whichever you choose, your model has to be shown its inputs in some format, and you have to use that format when you train the model.

No human language can be learned without some format. Every child knows some words and rules and, at the end of the day, can talk. Maybe the child does not know anything about irony or inverted sentences yet, but over time they will learn the complex rules of their language. The pattern is the same for computers: we need a form of the language that can be represented in a computer.
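To make the idea of a "format" concrete, here is a minimal sketch of the character-level and word-level representations of the same text. The function names (`char_tokens`, `word_tokens`, `build_vocab`) and the sample sentence are made up for illustration, and the word splitter is deliberately naive; real systems use far more careful tokenizers.

```python
import re

def char_tokens(text):
    """Represent the text as a sequence of characters."""
    return list(text)

def word_tokens(text):
    """Represent the text as a sequence of lowercase words (very naive split)."""
    return re.findall(r"\w+", text.lower())

def build_vocab(tokens):
    """Map each distinct token to an integer id, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

sentence = "The cat sat on the mat"   # hypothetical input text
words = word_tokens(sentence)
vocab = build_vocab(words)
ids = [vocab[w] for w in words]       # the numeric form a model would see

print(char_tokens(sentence)[:7])  # ['T', 'h', 'e', ' ', 'c', 'a', 't']
print(words)                      # ['the', 'cat', 'sat', 'on', 'the', 'mat']
print(ids)                        # [0, 1, 2, 3, 0, 4]
```

Either representation can feed a translation model. The point is only that the same format has to be used for training and for inference.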

NLP is related to:

Artificial intelligence (approaches and methods)

Machine/deep learning (learning and programming techniques)

Linguistics (the core)

Formal languages and automata theory (description of languages and their relations/interactions)

Psycholinguistics (the attitude)

Cognitive science (how we think)

NLP is everywhere in our daily life:

Google searches (recommendation)

Google Translate

Siri

MS Word auto-correct

Amazon Alexa

Video games

etc.

We need computers to understand/use/generate human language because:

Text is everywhere (emails, blogs, web pages, articles, banking documents, doctor reports, etc.)

Information is growing every single day

Multilingual communication is a need

Dialogue-based communication is getting popular (chatbots, digital personal assistants, etc.)

We (as human beings) cannot search or read as fast as computers

Every second, on average, around 6,000 tweets are posted on Twitter (roughly 200 billion tweets per year). Text-based information keeps growing, and we (as human beings) cannot be as fast as computers at tracking and understanding that kind of data.
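As a quick sanity check of the yearly figure, the arithmetic can be written out. The per-second rate is the one quoted above; the only assumption is a 365-day year.

```python
TWEETS_PER_SECOND = 6_000               # average rate quoted in the text
SECONDS_PER_YEAR = 60 * 60 * 24 * 365   # assuming a 365-day year

tweets_per_year = TWEETS_PER_SECOND * SECONDS_PER_YEAR
print(f"{tweets_per_year:,} tweets per year")  # 189,216,000,000 tweets per year
```

That is about 189 billion, which rounds to the 200 billion mentioned above.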

However, NLP is not an easy task. Language is:

a formulation of words, rules, and exceptions

systematic, yet still too complex to grasp easily

constantly changing and evolving (new words and exceptions)

ambiguous everywhere (at all levels)

shaped by a cultural point of view

Your NLP system may work really well on dialect A of Arabic but poorly on dialect B. There are numerous exceptions in every language. You can set the ground rules (for example, the rules for forming plurals in language A), but you also have to handle the exceptions.
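The "ground rules plus exceptions" idea can be sketched with a toy pluralizer. English stands in for "language A" here purely for illustration; the `IRREGULAR_PLURALS` table and the `pluralize` function are hypothetical and cover only a handful of cases.

```python
# Exceptions are looked up first; the general rules apply only if no exception matches.
IRREGULAR_PLURALS = {
    "child": "children",
    "mouse": "mice",
    "person": "people",
    "sheep": "sheep",
}

def pluralize(noun):
    """Return the plural of an English noun using a few rules plus an exception list."""
    if noun in IRREGULAR_PLURALS:
        return IRREGULAR_PLURALS[noun]
    if noun.endswith(("s", "x", "z", "ch", "sh")):
        return noun + "es"
    if noun.endswith("y") and len(noun) > 1 and noun[-2] not in "aeiou":
        return noun[:-1] + "ies"
    return noun + "s"

for word in ["cat", "box", "city", "day", "child"]:
    print(word, "->", pluralize(word))
```

Even this short list of rules misses plenty of real words, which is exactly the point: the exception table never stops growing.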

Language also evolves over time. Before Google, we did not have the expression "google it". Inputs can also vary from region to region: "How are you?" in one place, "Howdy?" in another. Our model should handle cultural rules and patterns as well.

NLP also has an interpretation task: we need to understand the text. Think about a text that is about the Beatles and says:

Beatles is a legendary group of people like Coldplay and Iron Maiden.

We also have another corpus:

Coldplay is a British music band.

Our model should understand that the Beatles are a music band. In the real world, direct information is rare, so NLP has to handle the interpretation task. But if we talk about interpretation, we should also note that ambiguity is everywhere.
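Returning to the Beatles example, the inference step can be sketched as a toy lookup. This is only an illustration under strong assumptions: the two statements have already been turned into the hypothetical `known_types` and `similar_to` dictionaries by hand, which in a real system is the hard part.

```python
# Facts extracted (by hand, for this sketch) from the two example sentences.
known_types = {"Coldplay": "music band"}               # "Coldplay is a British music band."
similar_to = {"Beatles": ["Coldplay", "Iron Maiden"]}  # "Beatles ... like Coldplay and Iron Maiden."

def infer_type(entity):
    """Return the entity's type, borrowing it from a similar entity if needed."""
    if entity in known_types:
        return known_types[entity]
    for other in similar_to.get(entity, []):
        if other in known_types:
            return known_types[other]
    return None  # nothing direct or indirect is known

print(infer_type("Beatles"))      # music band
print(infer_type("Iron Maiden"))  # None
```

Note that nothing is inferred for Iron Maiden, because this sketch only follows the "like" relation in one direction.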

Consider a request like "Call me a taxi." If your model calls the person "a taxi", you have a serious problem.

Ambiguity can be semantic or morphological. You can check the following article for morphological ambiguity.

Let’s give an overview:

NLP models the communication between a speaker and a hearer

Speaker side:

Intention: decide when and what information to transmit

Generation: transform the information to be communicated into a sequence of words

Synthesis: use desired modality (text, speech, both)

Hearer side:

Perception: transform input modality into a string of words

Understanding: process the expressed content in the input

Incorporation: decide what to do with that content

Humans can fluently process and use all of these while understanding or generating the language. Ambiguity is a big deal for computers.

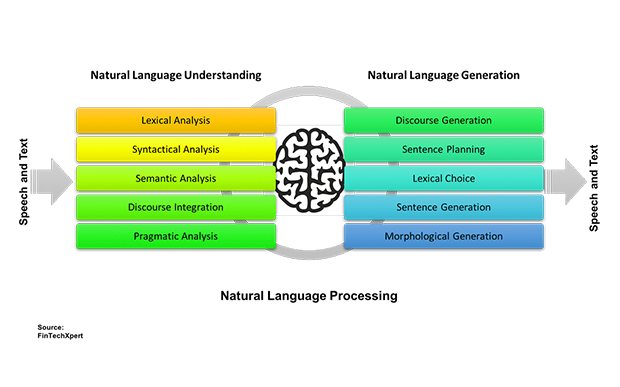

Natural Language Understanding (NLU) and Natural Language Generation (NLG) are subfields of NLP. Let’s go through the process.

NLU:

Lexical Analysis: Validate the words

Syntactical Analysis: Validate the sentences

Semantic Analysis: Understand the sentence (extract the meaning)

Discourse Integration: Use the past knowledge/experience while understanding the sentence

Pragmatic Analysis: The same sentence can have a good meaning in one conversation but a bad meaning in another. Pragmatic analysis deals with such scenarios.

NLG:

Morphological Generation: We should generate the words.

Sentence Generation: Construct the sentence appropriately.

Lexical Choice: Adjust the sentence (at the word level) to the hearer (e.g., formal or informal)

Sentence Planning: For longer speeches we should plan the sentences. For example, if I want to convey 10 pieces of information to you, I can do it in 10 sentences or in 3.

Discourse Generation: In a two-way conversation (i: hello w: hello i: how are you? w: fine and you? ….) we should store the past information and use it to generate the next utterance. If I say “it is not easy” you will easily understand that “it” means NLP, not AI or business analytics. That is discourse generation.
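The "store the past information" part of discourse handling can be sketched as a small context object. Everything here is hypothetical (the `DialogueContext` class, the topic list, the sample dialogue), and the matching is a crude keyword lookup, not real coreference resolution.

```python
import re

class DialogueContext:
    """Keeps the dialogue history and the most recently mentioned known topic."""

    def __init__(self, known_topics):
        # Map lowercase form -> original spelling, e.g. "nlp" -> "NLP".
        self.known_topics = {t.lower(): t for t in known_topics}
        self.history = []
        self.current_topic = None

    def add_utterance(self, speaker, text):
        """Store the utterance and update the current topic if one is mentioned."""
        self.history.append((speaker, text))
        for word in re.findall(r"\w+", text.lower()):
            if word in self.known_topics:
                self.current_topic = self.known_topics[word]

    def resolve_it(self):
        """Guess what "it" refers to: the last topic seen so far."""
        return self.current_topic

context = DialogueContext(["NLP", "AI"])
context.add_utterance("i", "I have been reading about NLP lately.")
context.add_utterance("w", "How is it going?")
context.add_utterance("i", "It is not easy.")
print(context.resolve_it())  # NLP
```

Without the stored history, the last utterance alone gives no way to tell what "it" refers to.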

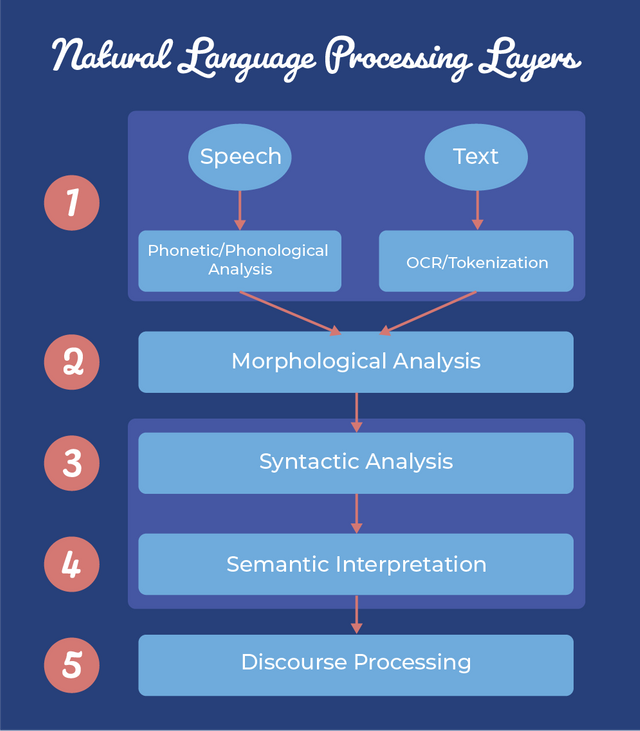

The Characteristics of NLP

First, we will start with the layers of NLP. In general, an NLP system needs to go through every one of these steps. Let’s dive into the definitions:

Phonology: the relation between words and sounds used to realize them

Morphology: the construction of words

Syntax: the formation of grammatical sentences from words and the roles of those words in the sentence

Semantics: the meaning of words and sentences

Discourse: the effect of other sentences on the interpretation of a sentence

Pragmatics: the use of sentences in different situations

World Knowledge: the amount of external knowledge necessary to understand language (a conversation between two medical doctors means little to me because their terminology is not part of my world knowledge)

NLP is a big field with many topics:

Tokenization

Stemming

Part of speech tagging

Query expansion

Parsing

Topic classification

Morphological analysis

Information extraction

Information retrieval

Named entity recognition

Sentiment analysis

Word sense disambiguation

Coreference resolution

Text simplification

Machine translation

Summarization

Paraphrasing

Language generation

Question answering

Dialogue systems

Image captioning
