[Steem] Steem Per Mvests Leads to Bot Island

Sometimes the rabbit hole goes deeper than you thought.

(Available in raw Jupyter Notebook for on GitHub.)

And so it began, with a simple back and forth between @crokkon and I, talking about the methods of deriving steem-per-mvest and whether or not it was even worth doing.

This is going to be an example of how asking the simplest questions can take you down some very strange rabbit holes, and if you're willing to follow them you can stumble on things you have no way of understanding. But at least you found them.

The basic question:

Has the effective ratio between steem and mega-vests changed significantly enough over the last year to even bother worrying about generating a precise ratio in order to determine how much a given vote is worth?

It seems like a very simple question to answer. And it is! But digging out the answer ends up revealing more than it intends.

Getting Out the Shovel

Like most things lately that involve code, this starts simply enough by loading up the basics of SteamData, pulling in some useful analysis tools, and finishing off the imports with something to draw pretty pictures. I know up front that I'm going to want to plot at least one graph of the ratio between deposits and withdrawals in the table of operations of the system fulfilling requests to convert steem to SP.

If you've been following my code posts over the last few weeks, you know what's coming up now. Imports, database initialization, query, and then we start looking at the data we get back.

# Setting up the imports

from steemdata import SteemData

import datetime

from datetime import datetime as dt

import numpy as np

import bokeh

import bokeh.plotting as bplt

# Init connection to database

db = SteemData()

query = {

'type' : 'fill_vesting_withdraw',

'timestamp' : {'$gte': dt.now() - datetime.timedelta(days=360)}}

proj = {'deposited.amount': 1, 'withdrawn.amount': 1, 'timestamp': 1, 'from_account': 1, 'to_account':1, '_id': 0}

sort = [('timestamp', -1)]

%%time

result = db.Operations.find(query,

projection=proj,

sort=sort)

fvL = list(result)

Wall time: 1min 33s

I decided to go big rather than to go home and just grab the last year of transaction information on vesting withdrawals. That sounds like complete overkill, and it probably is, but as a result I can look at the data and really get a feeling for what's going on under the hood. Also, it gives us a chance to make a solid prediction (technically hindcast) of what the ratio was and how much it's varied.

It turns out that there are just over a million vesting withdrawals that occurred in the last year. Honestly, that could be a lot worse. We can really work with this amount of data.

While were looking, let's check out the first five and the last five in the list. Since I told the database to return the list in reverse chronological order, those at the top of the most recent and of those at the bottom are the oldest.

len(fvL)

1049438

fvL[:3], fvL[-3:]

([{'deposited': {'amount': 35.77},

'from_account': 'zakariashikder',

'timestamp': datetime.datetime(2018, 3, 8, 18, 18, 33),

'to_account': 'zakariashikder',

'withdrawn': {'amount': 73050.513285}},

{'deposited': {'amount': 20.336},

'from_account': 'nobutsd',

'timestamp': datetime.datetime(2018, 3, 8, 18, 17, 33),

'to_account': 'nobutsd',

'withdrawn': {'amount': 41531.82173}},

{'deposited': {'amount': 0.927},

'from_account': 'junhokim',

'timestamp': datetime.datetime(2018, 3, 8, 18, 17, 12),

'to_account': 'junhokim',

'withdrawn': {'amount': 1894.72076}}],

[{'deposited': {'amount': 65.278},

'from_account': 'midnightoil',

'timestamp': datetime.datetime(2017, 3, 13, 13, 32, 18),

'to_account': 'midnightoil',

'withdrawn': {'amount': 135825.952611}},

{'deposited': {'amount': 43.866},

'from_account': 'catulhu',

'timestamp': datetime.datetime(2017, 3, 13, 13, 32, 9),

'to_account': 'catulhu',

'withdrawn': {'amount': 91274.494945}},

{'deposited': {'amount': 5.902},

'from_account': 'dennygalindo',

'timestamp': datetime.datetime(2017, 3, 13, 13, 31, 36),

'to_account': 'dennygalindo',

'withdrawn': {'amount': 12281.595967}}])

There's nothing particularly shocking or surprising about this information really.

Well, that and the fact that @tinfoilfedora, who showed up once, has one of the best names that I've ever seen on the platform. Bravo!

Now that we have the raw data that we came for, we need to come up with the actual ratio in question. This is relatively easy if we just make a list comprehension which pulls out the necessary values and gives them a quick divide. The ratio just falls out.

While we're at it, let's look at the first and last five just to see if the data looks relatively coherent and there's nothing obviously wrong with it.

spmL = [e['deposited']['amount'] / e['withdrawn']['amount']

for e in fvL]

spmL[:5], spmL[-5:]

([0.0004896611726798767,

0.0004896486393543035,

0.0004892541526805249,

0.000489633353887179,

0.000489619055570078],

[0.0004806019912260807,

0.0004800960347037997,

0.0004806003473206151,

0.00048059427802293165,

0.00048055643711602])

np.median(spmL)

0.00048264105814548454

Things look pretty good in terms of raw data. Not only that, but we can tell from just an easy eyeball of the information that steem per million vests hasn't really changed all that much in the last year. The only really conspicuous changes down in the millionths place, which might be meaningful to the really big whales in the pool but for most of the people reading this – that's probably less than noise in the signal.

Just because we have the information, I decided to pull the median out. Not the average, not the mean, not even the mode – the median. For those not familiar with statistical operations, that's what you get when you sort a list of numbers highest to lowest and literally pick the one that is in the middle of the list.

This data is not entirely clean, as you will see later, but because the sample is so large, the median was going to pull out the most reasonable value in the structure. It passes the sniff test as a reasonable value in between those at the beginning and and of the last year.

Table That Motion

@crokkon was good enough to share a link to some code that he had written a little over two weeks ago which did a little dumpster diving in the database to do these calculations and work out the ratio over time. He cleverly managed to avoid showing off the filters that he had implemented "for simplicity", but I figured it couldn't be that big a deal.

It's actually a pretty big deal. We'll take a look at why here shortly.

First, though, let's shove some data into a structure which is designed for manipulating tabular data.

I know that a lot of coders on the platform are already familiar with pandas because it is one of the best known big data manipulating tools in the world. It's new to me. That probably doesn't say a lot positive about my experience as an analyst, but bear with me – we'll run with it.

After all, you've made it this far.

To shove the data from the database into a form that we can manipulate easily, we'll basically just implement a series of implicit for loops in the form of some list comprehensions. Essentially we just build a nice record dictionary in a semi-lazy way, fold it together with the list of ratios that we generated, and incidentally tell the data frame that the bit of information that primarily differentiates one of these entries from the other is the timestamp.

That last bit is important because pandas has some nice tools for working with timeseries, which we'll talk about shortly.

import pandas as pd

Data = pd.DataFrame({'deposited': [e['deposited']['amount'] for e in fvL],

'withdrawn': [e['deposited']['amount'] for e in fvL],

'from_account': [e['from_account'] for e in fvL],

'to_account': [e['to_account'] for e in fvL],

'ratio': spmL

},

index=[e['timestamp'] for e in fvL])

Now we have a nice, flexible, high-speed data structure – which is full of data with questionable consistency.

Having spent a little bit of time trawling through this pile, there were two things that immediately leapt out at me as indicators of less than useful knowledge. Firstly, some of the calculated ratios were zero. That can really only occur when the deposit is equal to zero. There were also sometimes when the ratio was 1, which can only happen when – well, nothing good.

So let's filter out bits of the database where the ratio is greater than 0.99 and entries where deposits are zero.

Data = Data[(Data['ratio'] < 0.99)]

Data = Data[(Data['deposited'] > 0)]

One of the nice things about pandas is that when given tabular data, it's really quite nice about creating a clear output.

Again, let's look at the top and the bottom of this list since it's still in chronological order. Now we have a nice temporal index, and all of our fields are nicely lined up.

Again, the ratios make sense and pandas is very good about giving us just enough information to see where there is some differentiation.

Data.head()

| deposited | from_account | ratio | to_account | withdrawn | |

|---|---|---|---|---|---|

| 2018-03-08 18:18:33 | 35.770 | zakariashikder | 0.000490 | zakariashikder | 35.770 |

| 2018-03-08 18:17:33 | 20.336 | nobutsd | 0.000490 | nobutsd | 20.336 |

| 2018-03-08 18:17:12 | 0.927 | junhokim | 0.000489 | junhokim | 0.927 |

| 2018-03-08 18:15:45 | 10.278 | jakiasultana | 0.000490 | jakiasultana | 10.278 |

| 2018-03-08 18:15:33 | 2.673 | markboss | 0.000490 | markboss | 2.673 |

Data.tail()

| deposited | from_account | ratio | to_account | withdrawn | |

|---|---|---|---|---|---|

| 2017-03-13 13:40:15 | 2337.791 | salva82 | 0.000481 | salva82 | 2337.791 |

| 2017-03-13 13:36:48 | 0.689 | romangelsi | 0.000480 | romangelsi | 0.689 |

| 2017-03-13 13:32:18 | 65.278 | midnightoil | 0.000481 | midnightoil | 65.278 |

| 2017-03-13 13:32:09 | 43.866 | catulhu | 0.000481 | catulhu | 43.866 |

| 2017-03-13 13:31:36 | 5.902 | dennygalindo | 0.000481 | dennygalindo | 5.902 |

Since we have everything in such a nice structure, we can query it directly for various manipulations rather than having to write the code to do all those things by hand.

For instance, what if we just want to pileup all the accounts that have received these withdrawals and add up the total amount deposited and withdrawn over the last year. If we were to actually write the Python code for that, it would involve at least one loop, a set to hold the accounts and unify them, and probably an accumulator.

With a panda data frame? It's a one-liner.

Data.groupby('to_account')['deposited', 'withdrawn'].sum().head()

| deposited | withdrawn | |

|---|---|---|

| to_account | ||

| a-a | 6.153 | 6.153 |

| a-a-lifemix | 216.746 | 216.746 |

| a-angel | 6.785 | 6.785 |

| a-c-s | 7.721 | 7.721 |

| a-condor | 737.694 | 737.694 |

len(Data.groupby('to_account')['deposited', 'withdrawn'].sum())

32112

The interesting thing here is that of that 1 million set of implicit withdrawals, only 32,000 accounts actually received funds.

That's – kind of surprising. This is the kind of thing that perks my ears when stuff rolls around. Essentially, this is a much smaller number of accounts than I ever expected to see involved over the last year.

I can't even really begin to theorize about why these numbers are so small. I find it more than a little concerning. There are a lot of promotional people who constantly tout the number of active accounts which often exceed 70,000 – but here we see 32,000 accounts which have triggered this operation on the blockchain.

I can't explain that. Yet.

We will leave aside that mystery for the moment and make use of pandas again to do something that would be much harder without it – carving all of these transactions up into weekly blocks. Again, something that in straight Python would be a real challenge but here turns into a single line where we simply tell the structure to resample itself and break down into one-week chunks, taking the numeric values within those chunks and taking the median value.

Yes, I tried using the average value. Because of some of the weird bits going on under the hood, the mean was just a bad choice. The median is just fine for our purposes, and even know it's probably not the right thing for the deposited or withdrawn values, I think it's interesting to look at because one of the things that we can tell immediately is that the usual value is relatively low.

52 weeks ago, there was a particularly odd outlier in values. One day, that might be worth investigating more thoroughly. Everything after that looks pretty normal.

WkData = Data.resample('1W').median()

WkData

| deposited | ratio | withdrawn | |

|---|---|---|---|

| 2017-03-19 | 2.2810 | 0.000481 | 2.2810 |

| 2017-03-26 | 2.5440 | 0.000481 | 2.5440 |

| 2017-04-02 | 2.6260 | 0.000481 | 2.6260 |

| 2017-04-09 | 1.7650 | 0.000481 | 1.7650 |

| 2017-04-16 | 2.3390 | 0.000481 | 2.3390 |

| 2017-04-23 | 2.4710 | 0.000481 | 2.4710 |

| 2017-04-30 | 2.1810 | 0.000482 | 2.1810 |

| 2017-05-07 | 2.4730 | 0.000482 | 2.4730 |

| 2017-05-14 | 2.4740 | 0.000482 | 2.4740 |

| 2017-05-21 | 1.0300 | 0.000482 | 1.0300 |

| 2017-05-28 | 0.7700 | 0.000482 | 0.7700 |

| 2017-06-04 | 1.0070 | 0.000482 | 1.0070 |

| 2017-06-11 | 1.0110 | 0.000483 | 1.0110 |

| 2017-06-18 | 1.0140 | 0.000483 | 1.0140 |

| 2017-06-25 | 1.0140 | 0.000483 | 1.0140 |

| 2017-07-02 | 0.8645 | 0.000483 | 0.8645 |

| 2017-07-09 | 0.6950 | 0.000483 | 0.6950 |

| 2017-07-16 | 0.6950 | 0.000483 | 0.6950 |

| 2017-07-23 | 0.6960 | 0.000484 | 0.6960 |

| 2017-07-30 | 0.6960 | 0.000484 | 0.6960 |

| 2017-08-06 | 0.6960 | 0.000484 | 0.6960 |

| 2017-08-13 | 0.6970 | 0.000484 | 0.6970 |

| 2017-08-20 | 1.1330 | 0.000484 | 1.1330 |

| 2017-08-27 | 1.1690 | 0.000485 | 1.1690 |

| 2017-09-03 | 1.1960 | 0.000485 | 1.1960 |

| 2017-09-10 | 1.2010 | 0.000485 | 1.2010 |

| 2017-09-17 | 1.2020 | 0.000485 | 1.2020 |

| 2017-09-24 | 1.2030 | 0.000485 | 1.2030 |

| 2017-10-01 | 1.3300 | 0.000486 | 1.3300 |

| 2017-10-08 | 2.0045 | 0.000486 | 2.0045 |

| 2017-10-15 | 1.3415 | 0.000486 | 1.3415 |

| 2017-10-22 | 1.4575 | 0.000486 | 1.4575 |

| 2017-10-29 | 1.5650 | 0.000486 | 1.5650 |

| 2017-11-05 | 1.5430 | 0.000486 | 1.5430 |

| 2017-11-12 | 2.3850 | 0.000487 | 2.3850 |

| 2017-11-19 | 2.7410 | 0.000487 | 2.7410 |

| 2017-11-26 | 2.8080 | 0.000487 | 2.8080 |

| 2017-12-03 | 2.8735 | 0.000487 | 2.8735 |

| 2017-12-10 | 2.6980 | 0.000487 | 2.6980 |

| 2017-12-17 | 2.9570 | 0.000487 | 2.9570 |

| 2017-12-24 | 2.8225 | 0.000488 | 2.8225 |

| 2017-12-31 | 2.7750 | 0.000488 | 2.7750 |

| 2018-01-07 | 2.0065 | 0.000488 | 2.0065 |

| 2018-01-14 | 2.0525 | 0.000488 | 2.0525 |

| 2018-01-21 | 1.8890 | 0.000488 | 1.8890 |

| 2018-01-28 | 1.7640 | 0.000488 | 1.7640 |

| 2018-02-04 | 1.5410 | 0.000489 | 1.5410 |

| 2018-02-11 | 1.5070 | 0.000489 | 1.5070 |

| 2018-02-18 | 1.4270 | 0.000489 | 1.4270 |

| 2018-02-25 | 1.1840 | 0.000489 | 1.1840 |

| 2018-03-04 | 0.8895 | 0.000489 | 0.8895 |

| 2018-03-11 | 0.6290 | 0.000489 | 0.6290 |

Another thing that you learn while dumpster diving in data is to look for strange aberrations. Anything different is something worth looking at.

Strangest thing I've seen in this database? That some of these transactions don't have the same from account and to account. It's not even a particularly small portion of the database. Out of 1 million transactions, almost 300,000 have different origin and destination accounts.

No, I don't have a really good explanation for why that's the case, either. It's far too common to be unusual behavior, but there's something about the way that these withdrawal transactions are happening that I don't understand.

MultData = Data[(Data['from_account'] != Data['to_account'])]

MultData.head()

| deposited | from_account | ratio | to_account | withdrawn | |

|---|---|---|---|---|---|

| 2018-03-08 17:08:33 | 0.397 | jennykidd | 0.000489 | cabi5boh | 0.397 |

| 2018-03-08 17:08:18 | 0.415 | bitrexx | 0.000489 | cabi5boh | 0.415 |

| 2018-03-08 15:45:09 | 0.387 | index178 | 0.000489 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index180 | 0.000489 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index181 | 0.000489 | sigmajin | 0.387 |

len(MultData)

296947

While we've got the information out, let's see what happens when we group by the receiving account.

MultData.groupby('to_account').mean()

| deposited | ratio | withdrawn | |

|---|---|---|---|

| to_account | |||

| aaron | 2621.641637 | 0.000487 | 2621.641637 |

| adiel | 3.751456 | 0.000483 | 3.751456 |

| ahmeddimassi | 0.836500 | 0.000489 | 0.836500 |

| aizensou | 7.521411 | 0.000481 | 7.521411 |

| alittle | 21509.356333 | 0.000486 | 21509.356333 |

| alot | 72956.143818 | 0.000486 | 72956.143818 |

| alpha | 7272.757897 | 0.000481 | 7272.757897 |

| anastacia | 81067.693000 | 0.000483 | 81067.693000 |

| another | 2.276687 | 0.000481 | 2.276687 |

| anyx | 6.104247 | 0.000482 | 6.104247 |

| apollo | 17483.891125 | 0.000481 | 17483.891125 |

| apoloo1 | 0.223000 | 0.000486 | 0.223000 |

| artakan | 1.023773 | 0.000481 | 1.023773 |

| ashleigh | 50199.675174 | 0.000483 | 50199.675174 |

| ausbitbank | 31.232647 | 0.000488 | 31.232647 |

| awesonimals | 1.180615 | 0.000488 | 1.180615 |

| b0y2k | 26.259962 | 0.000481 | 26.259962 |

| bacchist | 14.011846 | 0.000488 | 14.011846 |

| badassmother | 5.077814 | 0.000488 | 5.077814 |

| balin | 13.605761 | 0.000484 | 13.605761 |

| barbarossa | 2.661227 | 0.000484 | 2.661227 |

| berkah | 26.675143 | 0.000481 | 26.675143 |

| bhuz | 880.096813 | 0.000481 | 880.096813 |

| bitcoiner-0 | 0.319839 | 0.000471 | 0.319839 |

| bitcoiner0 | 0.198748 | 0.000471 | 0.198748 |

| boba | 0.044326 | 0.000471 | 0.044326 |

| brainwashed | 0.227000 | 0.000483 | 0.227000 |

| buildteam | 718.086306 | 0.000486 | 718.086306 |

| cabi5boh | 0.561829 | 0.000489 | 0.561829 |

| cgame | 2.428500 | 0.000483 | 2.428500 |

| ... | ... | ... | ... |

| tard | 4.900731 | 0.000486 | 4.900731 |

| teamaustralia | 10.924636 | 0.000488 | 10.924636 |

| teamsmooth | 2776.313679 | 0.000486 | 2776.313679 |

| teemnomad | 9.190000 | 0.000484 | 9.190000 |

| tesirece11q | 0.404020 | 0.000483 | 0.404020 |

| thatupeeshet | 0.404274 | 0.000483 | 0.404274 |

| thebatchman | 5.027615 | 0.000483 | 5.027615 |

| thecyclist | 1190.967433 | 0.000482 | 1190.967433 |

| theresteemer | 11.420601 | 0.000488 | 11.420601 |

| thethelathit | 0.384407 | 0.000484 | 0.384407 |

| thetinytim | 2.231578 | 0.000485 | 2.231578 |

| theytewhenu6 | 0.404186 | 0.000483 | 0.404186 |

| to4ogotkckw | 0.404165 | 0.000483 | 0.404165 |

| trollbuster | 2.265856 | 0.000484 | 2.265856 |

| twinner | 188.775286 | 0.000485 | 188.775286 |

| twinner05 | 1781.704333 | 0.000486 | 1781.704333 |

| twinner08 | 775.044500 | 0.000486 | 775.044500 |

| twinner13 | 5335.431500 | 0.000486 | 5335.431500 |

| twinner21 | 776.055833 | 0.000486 | 776.055833 |

| umbriel | 4.623880 | 0.000484 | 4.623880 |

| urknall | 2574.558308 | 0.000487 | 2574.558308 |

| venuspcs | 3.812435 | 0.000486 | 3.812435 |

| vix | 3.457633 | 0.000484 | 3.457633 |

| vklpgxpgpdks | 0.404217 | 0.000483 | 0.404217 |

| www | 1671.388692 | 0.000483 | 1671.388692 |

| xer | 3690.547000 | 0.000483 | 3690.547000 |

| xeroc | 623.663154 | 0.000484 | 623.663154 |

| yc0ybqrcuyl6 | 0.403993 | 0.000483 | 0.403993 |

| yme | 7.760092 | 0.000484 | 7.760092 |

| zear | 237.211543 | 0.000489 | 237.211543 |

187 rows × 3 columns

Now, this is where it gets strange. There are only 187 distinct recipient accounts for these 300,000 withdrawal transactions.

It was looking a little strange, before – but anytime you see a sample this large have less than 200 of anything happening, it's worth looking at.

I went with the mean derivation on this one because it seemed like an actual average for each of these values would be meaningful. They are certainly interesting. Some of these things are surprisingly vast, while in general most of the leakage effects are relatively small.

Again, I have no idea what's going on here – but because there are only 187 distinct rows that's a series of relationships which we can easily graph, and we will – after we do a few other things first.

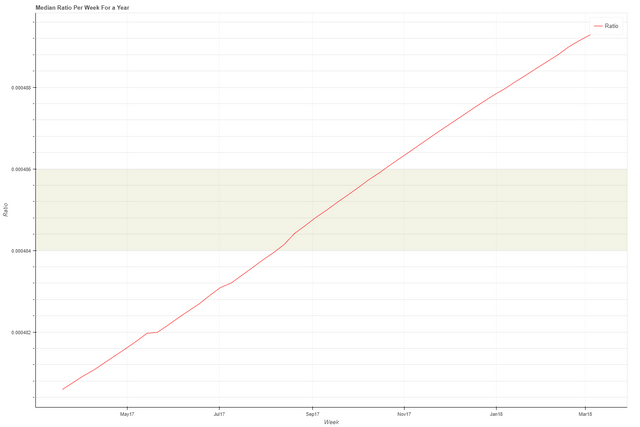

Do You Think My Slope Is Pretty?

It's time, finally, to answer the question we started this whole thing with. We already have a pretty good idea, but a picture is worth a thousand words. Which probably means that a graph is worth about half of what I've written already about this problem?

When I put it that way, it starts to be kind of sad.

Rather than use plotly today, I decided to learn an entirely new graphing system, because if you don't learn at least three new complicated things a day what good are you? Besides, while plotly does make some really nice online graphs – they really do require that a server elsewhere crunch the data, and I am almost certain to have more hardware sitting here under me to do this graph crunching than any online services likely to let me have for free.

I was right about that. Crunching the graphs with bokeh took almost 0 time and generated a very nice HTML interface locally which could be saved out to a convenient image.

Setting up a graph is a little bit fiddly and I don't have exactly what I wanted – but it's good enough for government work which means it's more than good enough for this job.

bplt.output_file('weeklyRatio.html')

Plot = bplt.figure( tools='pan,box_zoom,reset,save',

title='Median Ratio Per Week For a Year',

x_axis_label='Week', y_axis_label='Ratio',

x_axis_type='datetime',

width=600, height=400, sizing_mode='scale_height'

)

Plot.axis.minor_tick_in = -3

Plot.axis.minor_tick_out = 6

Plot.yaxis[0].formatter = bokeh.models.NumeralTickFormatter(format="0.000000")

# Plot.yaxis.major_label_orientation = "vertical"

Plot.grid.grid_line_alpha = 0.4

Plot.ygrid.minor_grid_line_color = 'grey'

Plot.ygrid.minor_grid_line_alpha = 0.2

Plot.ygrid.band_fill_color='olive'

Plot.ygrid.band_fill_alpha=0.1

Plot.line(WkData.index, WkData.ratio, color='red', legend='Ratio')

bplt.show(Plot)

This thing looks like a climate change graph, but it has something in common with global warming.

The slope of that curve is nowhere near as intense as it appears on the sheet.

Notice what the range on the Y axis actually is. It just runs over the range that we've already seen, differing by 1/100000 from the top to the bottom. I also used the weekly numbers rather than the full 1 million element set because there was absolutely no need for that level of resolution. 52 points along the timeline are more than sufficient.

While the slope isn't particularly useful for us to know, the fact that it exists is interesting. There has been a general trend upwards on the steem per mega-vests over the last year. As I understand it, that could only be the result of witnesses making a conscious decision to increase that number ever so slightly.

Again, I could be completely wrong about that. Once you start getting down to this level of poking at the blockchain, there is a lot of mystery and a lot of absolute absence of documentation, with the only reference that you can look at which makes a difference being the source code.

I haven't quite descended to that level of madness. Yet.

Who's Touching Who?

Let's take the pause that refreshes and look back up at those accounts which make up 30% of the overall transactions of this nature but only target 178 accounts or so. What's going on there?

I have no idea what's going on there, but we can see what that network of accounts actually looks like. All we need to do is create a relationship map which sums the transfers between them and look at what's in front of us.

That's going to take our old friend graphviz, so let's get that set up.

from graphviz import Digraph

dot = Digraph(comment="Steem-to-Mvest Oddity Map",

format="svg",

engine="sfdp")

dot.attr('graph', overlap='false')

dot.attr('graph', ratio='auto')

dot.attr('graph', size='1000000,1000000')

dot.attr('graph', start='1.0')

dot.attr('graph', K='10')

dot.attr('graph', margin='5')

We'll start with accounts being light grey, just for some visual texture.

dot.attr('node', shape='rectangle',

style='filled', color='black',

fillcolor='lightgrey')

Now that we have a framework, there needs to be some data. We'll build a new data frame to give us something to work with.

No timeline info is going to be necessary, which is nice. All that can go. Just accounts and values of what's been withdrawn.

rGraphData = pd.DataFrame({'to': MultData.to_account,

'from': MultData.from_account,

'deposit': MultData.deposited,

'withdraw': MultData.withdrawn})

rGraphData.head(20)

| deposit | from | to | withdraw | |

|---|---|---|---|---|

| 2018-03-08 17:08:33 | 0.397 | jennykidd | cabi5boh | 0.397 |

| 2018-03-08 17:08:18 | 0.415 | bitrexx | cabi5boh | 0.415 |

| 2018-03-08 15:45:09 | 0.387 | index178 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index180 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index181 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index182 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index183 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index184 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index185 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index186 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index187 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index188 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index189 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index190 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index191 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index192 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index193 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index194 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index195 | sigmajin | 0.387 |

| 2018-03-08 15:45:09 | 0.387 | index196 | sigmajin | 0.387 |

rGraphData.tail()

| deposit | from | to | withdraw | |

|---|---|---|---|---|

| 2017-03-13 16:59:42 | 0.935 | newbie6 | originate | 0.935 |

| 2017-03-13 16:58:45 | 0.898 | newbie26 | originate | 0.898 |

| 2017-03-13 16:58:09 | 1.272 | newbie25 | originate | 1.272 |

| 2017-03-13 16:57:36 | 1.670 | newbie24 | originate | 1.670 |

| 2017-03-13 16:56:57 | 1.670 | newbie23 | originate | 1.670 |

At first glance, I thought I had absolutely blown something in the translation. All of the from accounts appeared to be the same with the same timestamp – but then I looked closer. A lot closer. All of these transactions occurred during the same second, it's absolutely true. But they are being issued from a series of accounts with integer increasing names to a single withdrawal target.

Is this what a bot farm paying off looks like?

I have no idea what this is. But whatever it is, it's synchronized tightly enough so that all of these things are being issued at the same moment.

Looking at the other end of the record, the oldest things look pretty standard. Though, again, we see a situation in which a series of integer-named accounts are all withdrawing to the same account.

At least they're not all hitting the blockchain at once.

Again, what's going on here? I have no idea. Maybe @sigmajin and @originate would like to let us know?

Since we know that there are different numbers of from and to accounts, or at least that's the assumption we're going on for the moment, maybe we should figure out exactly how big the disparity between those two groups is.

That's pretty easy if we look at the data simply grouped by each of them separately.

rGraphData.groupby(['from', 'to']).sum().head()

| deposit | withdraw | ||

|---|---|---|---|

| from | to | ||

| a-ok | nrg | 51.622 | 51.622 |

| a00 | nrg | 40.528 | 40.528 |

| a1r | nrg | 40.622 | 40.622 |

| a2v6aaz0tf | sigmajin | 15.304 | 15.304 |

| a4elentano | dart | 40.231 | 40.231 |

rGraphData.groupby(['from', 'to']).sum().tail()

| deposit | withdraw | ||

|---|---|---|---|

| from | to | ||

| zyl | onthax | 209.354 | 209.354 |

| zynnnymehetele | dart | 35.217 | 35.217 |

| zzz | www | 35819.052 | 35819.052 |

| zzz3ya | dart | 35.126 | 35.126 |

| zzzya | dart | 35.100 | 35.100 |

Well that now we know how that works out. Nearly 24,000 source accounts are going to just under 200 recipient accounts on a regular basis that nearly 1/3 of the traffic of this particular kind of operation on the blockchain, day in, day out, for the entirety of the last year.

I'm not going to say that such things look sketchy, but they look sketchy as Hell.

toRG = rGraphData.groupby(['to', 'from'])

toRG.sum().head()

| deposit | withdraw | ||

|---|---|---|---|

| to | from | ||

| aaron | jesta | 211542.903 | 211542.903 |

| powerbot-1 | 4255.104 | 4255.104 | |

| powerbot-2 | 8486.685 | 8486.685 | |

| powerbot-3 | 6477.957 | 6477.957 | |

| powerbot-4 | 7806.740 | 7806.740 |

toRG.sum().tail()

| deposit | withdraw | ||

|---|---|---|---|

| to | from | ||

| zear | bellyrub | 3454.115 | 3454.115 |

| bellyrubbank | 66.964 | 66.964 | |

| itzzia | 57.687 | 57.687 | |

| psych101 | 33.549 | 33.549 | |

| zeartul | 4690.089 | 4690.089 |

And there's our activity, all laid out neatly and effectively with all of the accounts which are pumping up into others completely revealed.

I find it interesting that, thinking back on the recent @bellyrub insanity, we can see what accounts that @zear has been involved with – at least as regards whatever this particular vesting operation represents.

All right, let's get on making that relationship graph. I'm more curious than ever to see what it looks like.

toNodes = set()

fromNodes = set()

for e in list(toRG.groups.keys()):

(t, f) = e

toNodes.add(t)

fromNodes.add(f)

len(toNodes), len(fromNodes)

(187, 21507)

for n in toNodes:

dot.node(n)

dot.attr('node', fillcolor='lightgreen', shape='oval')

for n in fromNodes:

dot.node(n)

Let's look at making the connecting edges a little more interesting. We'll label them with the actual sum of transfers that have gone along that road.

sumToRG = toRG.sum()

dot.attr('edge', fontcolor='darkred')

for e in sumToRG['deposit'].iteritems():

(f, t), v = e

dot.edge(t, f, taillabel=str(v))

%time dot.render('02')

Wall time: 2min 41s

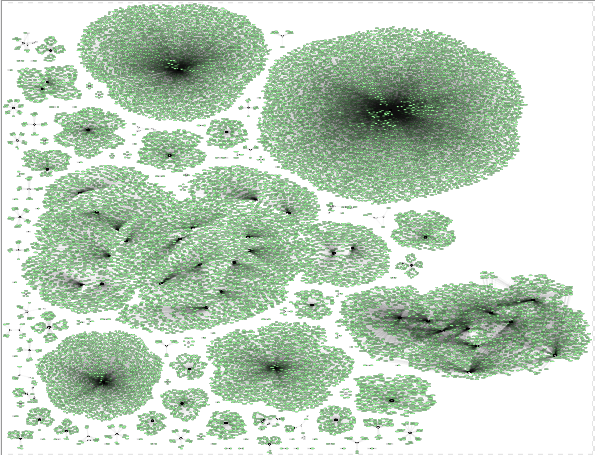

(Available straight from the source on Github, if you want it big enough to actually read.)

That graph is huge, and I apologize for the necessity – and for the fact that it needed to be rendered with SVG because no binary representation was going to be effective enough to allow enough scaling so that you can make out any part of it.

Notice what we are seeing. There are clearly visible islands with very little interconnection between them which represent – I'm not exactly sure what.

Further research suggests, but does not prove, that this particular operation on the blockchain is related to powering down, filling the request for steem in exchange for SP/vests, which would make sense as to why it would be the source for deriving the ratio of steem to vests.

What we see here are accounts which are receiving steem for other accounts powering down. How does that even work? If that is the explanation for what we're seeing, there are literally 187 and only 187 accounts which are profiting from that process in the last year.

Looking deeper at that list of 187, you see some very interesting things, like the fact that most of them have no posts or votes. They are simply repositories for funds.

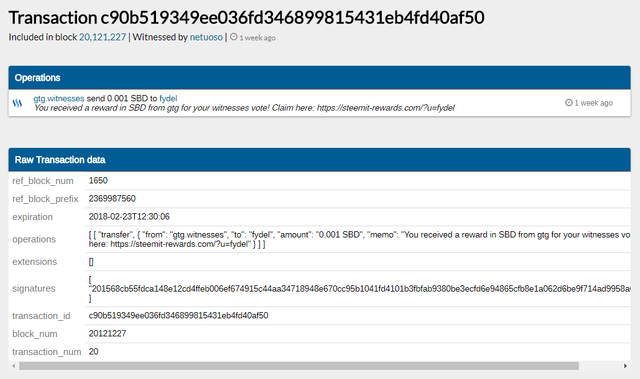

Perhaps the most interesting thing that I saw while just poking around through the list of 187 was this particular transaction on the blockchain, where @gtg apparently transferred 0.001 SBD to one of these minimally active accounts in exchange for a witness vote.

Maybe that's common practice, I don't know – but I do know that it struck me as interesting.

Take It Away

I realize that I have a lot more questions than I have answers. That seems to be the way that investigation goes for me; find something interesting, follow it down the rabbit hole, watch it turn into the World Serpent.

What have I found here?

Well, firstly – you can totally replace your expected steem per vest with a pretty straightforward constant and generally expect to be close with any calculations that you might have been inclined to do in order to determine the value of a vote or other interaction with the blockchain.

But that's not the question that you are really interested in at this point, is it?

Me either.

What is going on with these 187 accounts? That's the question that most concerns me right now.

If you go panning around the graph, you'll see an entire swath of associated accounts which are integer, sequentially numbered. I would bet dollars to doughnuts that those are almost all bot-created and bot maintained. A lot of other accounts have the whiff of procedural generation about their naming, even if they don't go as far as labeling themselves "newbie1."

I'm pretty sure what we are seeing here are vast numbers of bots which are run in one of two modes, either centralized commander-node architecture or a more distributed ring of mutually owned nodes which are trying very hard to stay under the radar by distributing their interactions across a much wider number of accounts.

Not all of these accounts in a given island are bots, most assuredly. But all of them have a relationship.

The fact that there are extremely isolated islands suggests that there is very little collusion between these groups of related accounts. Looking at the graph as a whole at a high level, the nodes of activity are extremely obvious.

What does it mean? I haven't the foggiest.

What I do know is that over the last year, a full one third of the blockchain traffic of the fill_vesting_withdraw operation has involved these 187 accounts. That's 300,000 of 1,000,000.

If this stuff had been more obscure or less obscure, if it had been more common to be engaged in this operation with another account or if it had been less common – I wouldn't have given it another look.

It falls into just enough unusual that it drew my eye and it continues to be extremely unusual the more I, bit by bit, put together ideas about it.

If anyone has insight into what we see here, I invite you to share it with the rest of us. If anyone wants to reproduce my research, to go digging into the blockchain database and discover more about these accounts and what they're doing – that's why my code is here. Feel free to make use of it as you will.

In the meantime, I'm going to go make sure that my secure lab is security against intrusion and I am unlikely to be murdered in my sleep. And I want everyone to know that if you kill my dog, I have seen John Wick and I know what to do.

Also, if you would like to employ me as your own private dick, remember that I don't come cheap and I don't take cases that don't entertain me. In all my dialogue is written by Raymond Chandler.

That's life in the big city.

Epilogue

I feel like I ask a lot of existential questions in the course of my little explorations of the steem blockchain.

What does it mean to be? Are any of us real or are we all bots? Is it all a giant illusion, or are there things which are grounded reality but which elude our perception? Can any of us really, truly know anything?

The continuation of existence, the first, yes there is grounded reality, and knowledge is possible.

I'm glad I could help you with your existential crisis.

Remember, these regular doses of madness are brought to you by an obsessive need to know things and viewers like you. (The letter "Y" was unavailable for comment.)

I have no affiliation with #blockchainbi but they are some pretty hoopy froods.

Tools

Posted on Utopian.io - Rewarding Open Source Contributors

Thank you for the contribution. It has been approved.

Hi @lextenebris, glad to see you on utopian as well, great start! Sorry for the filters left out "for simplicity" :D But you worked out the details exceptionally well!

What you found with deposit and withdraw account being different are withdraw vesting routes. Those are not available via steemit.com, but for example with the steem

cli_walletorsteem-python/steem-js. If you power down on steemit.com you automatically get the appropriate amount of STEEM into your own account over the course of 13 weeks. Under the hood, this process is much more flexible:You can withdraw parts or all of the powering down SP to one or more other accounts controlled via percentages. You can also choose if the powered-down SP should end up as STEEM or as SP on the target accounts. The latter allows you to move SP from one account to another without going via STEEM. What you mostly saw in the data are 100% withdraw vesting routes where the full amount of SP goes as STEEM to another account. This way an account can be powered down to really 0 SP without locking the funds with 0 bandwidth. Withdraw vesting routes result in two

fill_withraw_vestingops in the blockchain per week, one for the powered-down account and one for the receiver. In the case of a 100% vesting vithdraw route there's one operation where the powered-down account withdraws X Vests into 0 STEEM and one where the receiving account withdraws 0 Vests into Y STEEM (IIRC?). Lower percentages than 100% seem to give lower steem-per-mvest values in this case. These are the "outliers" in my graph.Since withdraw vesting routes are comparably rare, the median value like you chose should give correct results for the steem-per-mvest. Maybe the daily/hourly/... upper limit might be a a good candidate as well. Or simply taking only ops where deposit and withdraw account are the same...

A lot of those powered-down "clusters" are likely mined accounts from the early Steen days, where the mined SP is transfered to the main miner account. The powered down accounts related to sigmajin are no unknowns, I came across them before because they all share the same Steem keys and were used to flag zappl posts in the past. @abh12345 also found them recently analyzing the flagging behavior in the last couple of months. With the same active key for all accounts it's easy to power them down in the same transaction.

You can contact us on Discord.

[utopian-moderator]

Not so much a great start as it is a great return to form. Even if Utopian wasn't exactly keen on my 3D design work for an established project. Still, flowing liquid under the bridge.

I was really hoping that the "filters" were going to be a lot more interesting in terms of how they simply cut out stuff that was excessive, but it turned out that whatever lived in there was exactly the stuff that would attract my attention.

It's just like having a sore tooth. I am the tongue that cannot resist poking it.

Right, which is largely what I was the arising – but part of this system still eludes me: what triggers the creation of this operation? Three quarters of the time, the from and to account are the same account, which makes a lot more sense for most purposes. About 1/3 of the transactions that take place in the last year were powering down accounts shifting the results to another account – and not just one or two accounts, but entire constellations of accounts, draining into a more centralized one. As I have tinkered with making the time horizon closer, I've found a strange thing! The percentage of the

fill_withdraw_vestingoperations weren't dropping as rapidly as we were looking at an ever smaller cross-section to see where the activity was coming from.I even shrunk the time horizon to one day, and observed what the rest of the layout ended up looking like, the amount of second party investment transfers were much closer to those transactions than 1/3.

One could say with some degree of surety that whatever is going on under the hood causing these events, so I have to roll back to ask about the initiatory choice that causes this particular operation to get signaled? Does it apply whenever an account is set to power down?

Like I said, even when I reduced my temporal horizon to 30 days, even a week, I still had far more of these vesting route operations than made sense. To me, that suggests that they are all actively engaged in sending that vestment to the central dumping spot and collecting it there, until it can be dumped for fair amount.

I'm not sure that I am ready to buy the "old clusters" rationale wholesale, at this point. After all, three or more of the highest rated, highest trafficked accounts on the blockchain are still listed in this list.

At some point, it would be very useful for us to lay down the comparisons and say which are possibly better at solving the problem.

In the meantime, I suppose I've discovered enough insanity floating around the world for one night.

Exactly! The power down is started with a

withdraw_from_vestingoperation. Seven days after this comes the firstfill_vesting_withdrawoperation that converts 1/13 of the VESTs into STEEM based on the current steem-per-mvest ratio. 12 morefill_vesting_withdrawoperations follow in the remaining 12 weeks so that after 13 weeks all VESTs from the initialwithdraw_from_vestingoperation are converted into STEEM.That's – interesting.

It's particularly interesting because even as I shrunk the time horizons on my query, these operations decreased in total volume, both standard and nonstandard, about as you would expect – but the transactions like this, with a different from and to account, became a larger proportion of those transactions in general.

Which leaves us with the question of what these things actually represent. I am willing to accept that some of them, particularly the smaller clusters, just represent a small bot host owner powering down their bots which gained SP one way or another. That's a perfectly normal sort of activity, even if I'm not particularly on board with how useful bots are compared to how abusive they can be.

It's the ridiculously large clusters, with dozens to hundreds of nodes, that concern me. They have enough accounts in them that they could probably keep a number of them rolling over every week and generate a consistent payoff almost daily assuming that they could continue to farm the SP somehow.

An extremely small number of accounts is responsible for 1/3 (or more, depending on how we split it) of the this particular type of transaction of transactions which occurs on the blockchain every day. That seems concerning to me.

I suppose the next step is to try and figure out what these things are doing, if anyone cares.

I mean... I know I'm a real guy... I just did some exercising and now I hurt like a guy. A while ago I did find my own little bot farm... 12 bots were posting memes 4 times a day through busy.org, which was paying 24c upvotes for using it's platform... these 12 accounts would then daily send their earnings to one account who was powering up and selling it's delegations. In 6 months that account was worth 88k USD... I sent it all to Steemcleaners but I forgot to keep records so I haven't had a chance to see what happened to it all.

I'm pretty sure you're a human being, or at least human enough I'm willing to grant you sapience rights. :)

That was a pretty good catch. What was the clue that put you onto them?

Yes!!! I can't wait to put that on my business card!

During my Curie duties I come across a fair amount of spam accounts.. and so dutifully report them to steemcleaners...usually spammers get no or one upvotes... but these ones were getting 11 upvotes... and so it was pure curiosity to investigate who was continuously upvoting such terrible spam... and found it was all the same accounts.. and then looked at their wallets. Once I realised two were in the same ring, it was super easy to find all the others.

I'm not sure that busy.org gives upvotes anymore... but the damage is done, they all had enough SP to vote each other...

Busy does, but only at something like a 16% level, which because of the rounding inherent in Steemit's undercarriage, can effectively never drop your voting power to 0.

@crokkon has a good analysis of the Busybot's recent activity.

Hahaha great and interesting analysis @lextenebris. It is always amusing, entertaining and gratifying allowing immerse ourselves deep down into rabbit's holes everywhere with a Rod Serling's mindset to defeat beforehand any idea or fear that by announcing your findings, you should mark yourself for death. LoL

Keep doing your splendid work digging deeper and moving stuff from here to there and from there to here to let us all know how revealing and insightful is watching things from different perspectives. And simply allow the facts gives reality check bitchslaps to those who stubbornly are not willing to change positions and optics because they already feels comfortable with their bellies full, having eaten so much shit on a plate full of mere assumptions.

Cheers!! :)

This was really interesting, unfortunately I didn't have time to read ALL of it, but heres my 0.002 steem:

I'm gonna keep a follow on you because I love data digging mysteries!

From other answers in the comments, it's fairly clear that the vast bulk of these are some sort of botnet which are paying out their SP in power down (since that's what this particular transaction represents) to another account. To really find out how they were earning it, I would have to point some more code into specifically pulling their transactions, but I'm pretty sure that these are all some kind of vote botnet.

There are a rare few which are legitimately someone's multi-account, but the rest…

Good times, good times.

The apparent @gtg transferring 0.001$ to accounts isn't @gtg, but a scammer.

https://steemit.com/psa/@isacoin/psa-warning-on-gtg-witnesses-account-offering-rewards

Aha!

Though that is why we ask these things, so that we can find out what's going on.

As scams go, that seems pretty… Sad? I suppose anything that could possibly get someone to hand over some of their higher level keys is a pretty good plan if you just plan to smash and plunder, but how likely is that to work?

I wonder what their hit rate actually was.

Phew... now there's some depth analysis. I'm not in the slighest bit surprised and this backs up my view that steemit is actually a form of 'techno-feudalism'; although I'm surprised we have as many as 187 'fiefdoms'.

I think the SVG rendering works well - reminds me of parasites.

Great work. Now go get some sleep.

Maybe this could've been 2 posts?

Only over the course of a year.

Amusingly, I've rerun my maps with increasingly smaller time slices, down to a single day. This particular weird operation still shows a couple of vast, isolated islands, even at that narrow a horizon.

Though if you really want to see who the feudal lords are, look at some of my work from last week doing breakdowns of where the SP is concentrated and where transfers of funds are occurring. That's the folks who make your and my vote kind of nothingness.

Sleep is for the weak. Or at least waits another hour or two; I may be East Coast, US, but I'm a nocturne.

This could have been two posts, but where's the fun in that?

Hey @lextenebris I am @utopian-io. I have just upvoted you!

Achievements

Community-Driven Witness!

I am the first and only Steem Community-Driven Witness. Participate on Discord. Lets GROW TOGETHER!

Up-vote this comment to grow my power and help Open Source contributions like this one. Want to chat? Join me on Discord https://discord.gg/Pc8HG9x

I didn't understand everything you presented, but I can tell you that the @gtg instance was NOT the witness. It was a scam account that was created to send phishing links to accounts and steal their passwords. @gtg is still legit.

I don't know what to make of the data, but it was interesting to read. I'm not a programmer, but I do like statistics. :)

I wonder if anyone has actually thought to search the memos of transactions, looking for that kind of message.

We know that the website was shut down pretty quickly (thanks to a reference someone else gave in another comment here), but that can't be the only one.

And that makes me curious.

Busy hadn't loaded the other comments when I first commented, so I didn't see that someone else said the gtg-witness was fake. No, I don't think that was the only site. There have been multiple scam sites that have popped up over the last few weeks especially. I don't really understand so much of what is happening in the background. I just post a few articles, man. Ha ha.

I fully admit to being fascinated by all of the shady shenanigans that can possibly go on in the system. I chalk it up to the conflict between being fascinated by driving slow by car wrecks and an appreciation of something well done, no matter what that thing is.

That said, you're probably far better off not knowing how the sausage is made.

Ha ha ha

Very nice post your friends