Local setup of Apache Kafka

What Will I Learn?

- You will get a high-level overview of Kafka architecture

- You will learn how to set up local instances of Apache ZooKeeper and Apache Kafka

- You will learn how to use the kafka-topics utility to create Kafka topics and to fetch meta-information about existing topics

- You will learn how to run the Kafka console producer and console consumer

Requirements

- Basic knowledge of system administration under Linux

- Experience working with the command line

- A minimal understanding of the message queue concept

Difficulty

- Basic/Intermediate

Apache Kafka is a state-of-the-art message broker for high-throughput applications. Thanks to its simple but elegant architecture, a Kafka cluster is highly reliable and scalable.

Vocabulary

Key concepts we need in order to understand Kafka:

- Broker - one instance of the Kafka server.

- Cluster - a number of brokers working together.

- Message - a separate piece of binary data, for example an Avro-encoded object or a simple string.

- Topic - a separate "channel" for messages of a certain type.

- Producer - a client that writes messages into a topic. Analogous to a "publisher" in other message queues.

- Consumer - a client that reads messages from a topic. Analogous to a "subscriber" or "receiver" in other message queues.

- Partition - a subset of the messages in a topic.

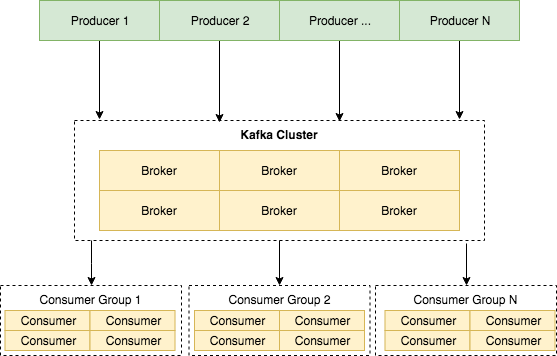

Architecture basics

The server part of Kafka is called a broker. Multiple brokers can work in unison to form a cluster. Instead of using a built-in solution for cluster coordination (as is the case with Cassandra, MongoDB or Elasticsearch, to name a few), Kafka relies on ZooKeeper for node communication and coordination. Keep in mind that for production purposes you would need a minimum of three ZooKeeper instances for reliability guarantees.
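The three-instance recommendation follows from majority voting: a ZooKeeper ensemble keeps working only while more than half of its nodes can reach each other. Here is a minimal Python sketch of that arithmetic. The function names are illustrative and not part of any ZooKeeper API.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many nodes may fail while a majority is still reachable."""
    return ensemble_size - quorum_size(ensemble_size)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(f"ensemble={n} quorum={quorum_size(n)} "
              f"tolerated_failures={tolerated_failures(n)}")
```

Under this model one or two instances tolerate no failures at all, while three instances survive the loss of one, which is why three is the usual minimum.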

Producers send messages directly to one of the nodes in the Kafka cluster. Consumers are slightly more complicated, since they can form a consumer group. A consumer group is a set of consumers that together receive all events in a topic. One consumer group is usually responsible for one concern. As an example, let's assume we have a topic containing system metrics. One consumer group might store these events in a database to be displayed on a dashboard, while a second one performs real-time analysis and sends notifications when values cross a certain threshold.
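The metrics example can be modelled in a few lines of Python. This is a simplified in-memory sketch, not Kafka client code: the group names, consumer names and the round-robin assignment are assumptions made for illustration, and real Kafka clients use configurable assignment strategies.

```python
from collections import defaultdict


def assign_partitions(partitions, consumers):
    """Spread partitions over the consumers of one group, round-robin."""
    assignment = defaultdict(list)
    for i, partition in enumerate(partitions):
        assignment[consumers[i % len(consumers)]].append(partition)
    return dict(assignment)


def deliver(messages_by_partition, groups):
    """Return {group: {consumer: [messages]}}.

    Every group sees every message; inside a group each partition
    is read by exactly one consumer.
    """
    delivered = {}
    for group, consumers in groups.items():
        assignment = assign_partitions(sorted(messages_by_partition), consumers)
        delivered[group] = {
            consumer: [m for p in parts for m in messages_by_partition[p]]
            for consumer, parts in assignment.items()
        }
    return delivered


if __name__ == "__main__":
    # Hypothetical topic with two partitions of metric events.
    metrics = {0: ["cpu=91", "cpu=40"], 1: ["mem=70"]}
    groups = {"dashboard": ["d1", "d2"], "alerting": ["a1"]}
    for group, per_consumer in deliver(metrics, groups).items():
        print(group, per_consumer)
```

In this model the "dashboard" group splits the two partitions between its two members, while the single "alerting" consumer reads both, so each group still receives the full stream.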

ZooKeeper and Kafka setup

Setting up a standalone ZooKeeper node is very easy. In fact, ZooKeeper and a sample config file for it are included in the default Kafka distribution. Starting ZooKeeper requires only a few steps:

- Download the Kafka distribution. We will be using release 1.0.0.

$ curl http://apache.lauf-forum.at/kafka/1.0.0/kafka_2.11-1.0.0.tgz -o kafka_2.11-1.0.0.tgz

- Extract the archive contents

$ tar -xzf kafka_2.11-1.0.0.tgz

- Enter the Kafka directory and start ZooKeeper

$ cd kafka_2.11-1.0.0

$ bin/zookeeper-server-start.sh config/zookeeper.properties

This command prints a lot of diagnostic information. To verify that the ZooKeeper node is working properly, open another terminal tab, navigate to the Kafka directory and use the ./bin/zookeeper-shell.sh utility:

$ ./bin/zookeeper-shell.sh localhost:2181

Welcome to ZooKeeper!

JLine support is disabled

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

At this point, we are ready to start a Kafka "cluster" containing a single broker instance:

$ bin/kafka-server-start.sh config/server.properties
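Before moving on, it can help to confirm that both processes are listening. The following Python sketch only checks that a TCP connection can be opened; it assumes the ports used elsewhere in this tutorial (2181 for ZooKeeper, 9092 for the broker) and does not verify that the services are healthy. The helper name is_port_open is made up for this example.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    for name, port in (("ZooKeeper", 2181), ("Kafka broker", 9092)):
        state = "reachable" if is_port_open("localhost", port) else "not reachable"
        print(f"{name} on localhost:{port}: {state}")
```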

Creating a topic, producing and consuming messages

Now let's create a new topic to play with:

$ bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic tutorial

Created topic "tutorial".

$ bin/kafka-topics.sh --list --zookeeper localhost:2181

tutorial

$ bin/kafka-topics.sh --describe --topic tutorial --zookeeper localhost:2181

Topic:tutorial PartitionCount:1 ReplicationFactor:1 Configs:

Topic: tutorial Partition: 0 Leader: 0 Replicas: 0 Isr: 0
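The per-partition line of the --describe output is a sequence of "Key: value" pairs: Leader is the id of the broker that serves the partition, Replicas lists the brokers holding a copy, and Isr lists the replicas that are currently in sync. As a rough sketch, such a line can be parsed in Python like this. The function names are illustrative, and the parser handles only the per-partition line format shown above, not the summary line.

```python
def parse_partition_line(line: str) -> dict:
    """Parse e.g. 'Topic: tutorial Partition: 0 Leader: 0 Replicas: 0 Isr: 0'."""
    tokens = line.split()
    fields = {}
    # Tokens alternate between "Key:" and its value.
    for key, value in zip(tokens[0::2], tokens[1::2]):
        fields[key.rstrip(":")] = value
    return fields


def is_fully_replicated(fields: dict) -> bool:
    """True when every assigned replica is also in the in-sync set."""
    return set(fields["Replicas"].split(",")) == set(fields["Isr"].split(","))


if __name__ == "__main__":
    info = parse_partition_line(
        "Topic: tutorial Partition: 0 Leader: 0 Replicas: 0 Isr: 0"
    )
    print(info, is_fully_replicated(info))
```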

Let's describe each argument in detail:

- --create and --list are actions we want to perform. Other actions are --alter, --delete and --describe.

- --zookeeper is the address of the ZooKeeper instance to use.

- --replication-factor describes how many copies of each message should exist in the cluster. A higher number gives better durability and availability guarantees, but requires more disk space.

- --partitions specifies how many partitions the topic has. A higher partition count raises the maximum useful size of a consumer group, but might increase latency and in some circumstances increases CPU and memory usage on consumers.

- --topic is the name of the topic.
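The two trade-offs can be put into numbers with a small Python sketch. It is a deliberately rough model with made-up function names: it ignores compression, retention settings and index files, and only captures the rules that every message is stored once per replica and that a partition is read by at most one consumer of a group.

```python
def disk_usage_bytes(topic_bytes: int, replication_factor: int) -> int:
    """Cluster-wide disk used when every message is stored once per replica."""
    return topic_bytes * replication_factor


def active_consumers(group_size: int, partitions: int) -> int:
    """Consumers of one group that can actually receive data.

    Each partition is read by at most one consumer of a group,
    so members beyond the partition count stay idle.
    """
    return min(group_size, partitions)


if __name__ == "__main__":
    one_gib = 1024 ** 3
    print(disk_usage_bytes(one_gib, 3) // one_gib, "GiB for 1 GiB of messages")
    print(active_consumers(group_size=5, partitions=3), "of 5 consumers are active")
```

With the single-partition topic created above, a consumer group would therefore have only one active member, no matter how many consumers join it.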

Once the topic is created with the desired configuration, we can start sending and receiving messages through it. Kafka libraries exist for all popular programming languages, but for the educational purposes of this tutorial we can just use the kafka-console-consumer.sh and kafka-console-producer.sh utilities packaged with the Kafka distribution.

Open two new terminal windows, navigate to the Kafka directory in both of them, and in one of them execute the following command to run the producer:

$ bin/kafka-console-producer.sh --broker-list localhost:9092 --topic tutorial

In the second window, execute this command to run the consumer:

$ bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic tutorial

Now whatever you type in the kafka-console-producer window will be transferred through Kafka and delivered to the kafka-console-consumer window.

Summary

We have learned the high-level principles of Kafka architecture, started a local instance of a Kafka broker and transferred a message from the console producer to the console consumer. I hope this tutorial was interesting as well as educational.

Posted on Utopian.io - Rewarding Open Source Contributors
