Utopian bot VP analysis - 2 more rounds are available per year :)

Repository

https://github.com/utopian-io/utopian-bot

Introduction

Today there was an unusually long delay before @utopian-io started voting after reaching 100% VP. I reported it immediately on Discord, and @elear seemed to fix the server problem after @amosbastian contacted him. Thank you both for developing and maintaining the Utopian bot. (I hope @elear also sees my previous suggestion on Utopian voting priorities: Utopian bot sorting criteria improvement to prevent no voting.)

I started using Utopian about 2 months ago and had never paid much attention to the Utopian bot. But the recent downtime of steem-ua voting for Utopian posts led me to look into it. And today's long delay made me wonder what the usual delay after 100% VP is. So here is the analysis.

Scope

- Utopian bot delay and downtime after 100% VP

- delay: usual time between reaching 100% VP and the first vote

- downtime: unusually long delay (e.g., due to a network or server problem)

- Data: 2018-11-05 - 2019-02-05 (3 months). Based on Developing the new Utopian bot and my communication with @amosbastian, the new bot seems to have been in use for about 3 months.

I could already guess the mean or median delay (see the theoretical analysis section), but I wanted to double-check it, and I also wanted to see how long downtimes last and how frequently they occur.

Results

Theoretical analysis of the delay - Law of large numbers (LLN)

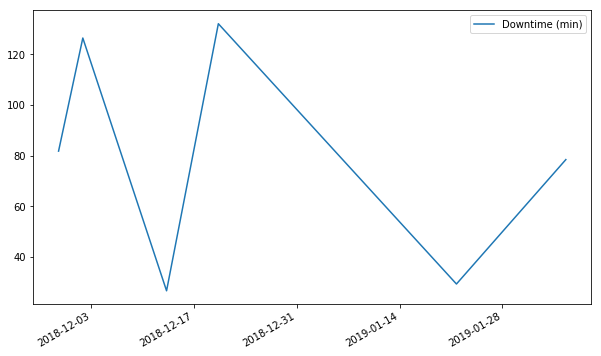

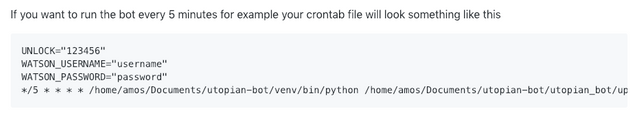

For the delay, I already knew that the average would be 2.5 min + alpha, based on the code and the description on GitHub.

https://github.com/utopian-io/utopian-bot/blob/master/utopian_bot/upvote_bot.py#L818-L819

- Bot just exits when VP isn't 100% yet.

- Bot is called every 5 minutes by cronjob.

While 5 minutes is given only as an example, we can easily guess that it's the value actually used :) And it turns out to be true :)

Thus, if VP becomes full at an essentially random moment within each 5-minute interval, the law of large numbers says the average delay should be close to 2.5 minutes :) So simple.

But there is actually a further delay due to the preparation steps: fetching comments/contributions and preparing init_comments and init_contributions (up to L#826).

The actual voting starts from handle_comments. I was curious about it (I don't know why), so I cloned the code and found that it only takes about 5-6 seconds on my laptop, which is negligible.

So the average normal delay should be 2.5x minutes. For the longer delays, i.e., downtime, we need to look at the data.
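The 2.5-minute figure can be checked with a quick simulation. This is only a minimal sketch, assuming VP becomes full at a uniformly random moment within a cron interval and that the preparation steps take a fixed 6 seconds. The function name and parameters are illustrative and not taken from the bot.

```python
import random


def simulate_mean_delay(interval_min=5.0, prep_sec=6.0, rounds=100_000, seed=0):
    """Average wait (in minutes) between VP reaching 100% and the first vote.

    Assumption: VP fills up at a uniformly random point inside a cron
    interval, so the wait for the next cron run is uniform on
    [0, interval_min]. The preparation time is added on top.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(rounds):
        wait_for_cron = rng.uniform(0.0, interval_min)
        total += wait_for_cron + prep_sec / 60.0
    return total / rounds


mean_delay = simulate_mean_delay()
print(f"simulated mean delay: {mean_delay:.2f} min")
```

With many rounds the simulated mean settles near half the interval plus the preparation time, which is the LLN argument in numbers.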

Data Analysis

Calculation of Voting Power

There is no direct record of voting power over time, so it has to be calculated, and that was the first nontrivial job. From the code, it's clear that the Utopian bot starts only after VP is full, so we know VP is 100% when a round starts. I picked 2018-11-05 22:15:15 as the start time.

VP calculation (see the code for the details)

- For each vote, VP decreases in proportion to the vote weight.

- VP recovery between votes also needs to be considered.

Actually, there are other factors that can affect VP, e.g., power-ups, power-downs, and delegations. But examining the data, there were no noticeable events, so I ignored them. In particular, the witness reward is negligible: about 8400 STEEM per month, whereas Utopian's SP is more than 3 million. So over a short period, the effect of powering up the witness reward is negligible. Likewise, the power-down amount was also negligible.
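The two rules above can be written as two small helpers. This is a sketch that mirrors the formulas used in the script in the Tools and Scripts section; the helper names are mine, and the 5-day regeneration constant is the standard Steem value, which I'm assuming here since the post doesn't spell it out.

```python
# Assumption: full regeneration (0% -> 100%) takes 5 days on Steem.
STEEM_VOTING_MANA_REGENERATION_SECONDS = 5 * 24 * 60 * 60


def vp_after_vote(vp_before, weight):
    """VP (in %) right after a vote; weight is in basis points (10000 = 100%)."""
    return vp_before * (1 - weight / 10000 * 0.02)


def vp_after_wait(vp, seconds):
    """VP (in %) after recovering for `seconds`, capped at 100."""
    recovered = seconds * 100 / STEEM_VOTING_MANA_REGENERATION_SECONDS
    return min(100.0, vp + recovered)


vp = vp_after_vote(100.0, 10000)         # one full-weight vote from full VP
vp_hour_later = vp_after_wait(vp, 3600)  # then one hour of recovery
print(vp, vp_hour_later)
```

A full-weight vote costs 2% of the current VP, and recovery is linear at 20 percentage points per day, so ignoring power-ups and delegations only matters if the account's SP changes noticeably.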

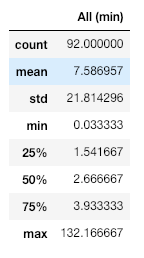

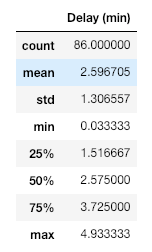

Summary Statistics

Interpretation

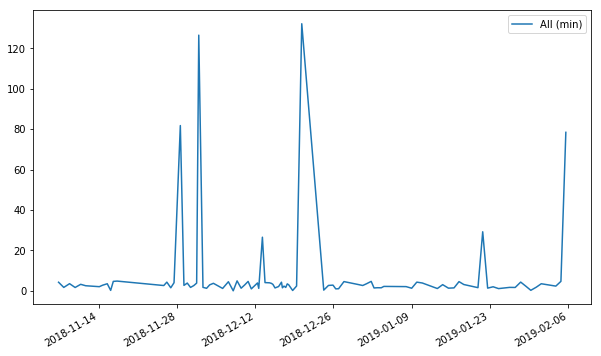

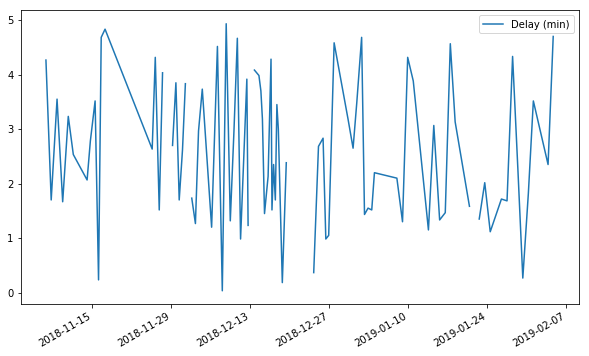

Over the 3 months, there were 93 voting rounds (so 92 full-VP events in total), and the mean delay was 7.59 minutes. Of course, this is inflated by the longer downtimes. If we only consider delays < 5 mins, the mean delay is 2.60 minutes, which is exactly what the theory predicted :) 2.5 mins + 6 seconds! It's scary, LLN works :) This can also be seen in the following graph, which looks quite random around 2.5 mins.

Update: Based on @amosbastian's comment

".... I am pretty sure Elear set the cron job to run every minute instead of every 5 minutes...."

I'm also adding the recent voting start times below.

2019-02-05 16:15:09

2019-02-04 17:55:09

2019-02-03 20:00:09

2019-02-02 22:05:12

2019-02-02 12:55:54

2019-02-02 01:15:15

2019-02-01 05:25:15

2019-01-31 08:05:12

2019-01-30 08:30:12

2019-01-29 12:05:09

2019-01-28 13:05:09

2019-01-27 13:25:09

2019-01-26 13:30:09

2019-01-25 13:50:09

2019-01-24 13:55:09

2019-01-23 14:35:09

2019-01-22 15:05:12

This would be practically impossible if the cron job ran every minute (which might also be dangerous, since it may spawn duplicate instances; see the details in my comment to amosbastian). I think this pattern clearly shows that it runs every 5 minutes (and again, by the powerful LLN :)).

Reverse engineering

One may think this LLN analysis looks too simple, but it shows an important lesson: you can actually reverse-engineer behavior from the pattern. Even without knowing that the bot is run by a cron job, we can guess from the data above that it's scheduled every 5 minutes.
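The pattern can be checked mechanically. The sketch below parses the start times listed above and tests whether every start minute is a multiple of 5. The probability line assumes that, with a cron job running every minute, each round would independently land on a multiple of 5 with probability 1/5.

```python
from datetime import datetime

# Voting start times copied from the list above.
start_times = [
    "2019-02-05 16:15:09", "2019-02-04 17:55:09", "2019-02-03 20:00:09",
    "2019-02-02 22:05:12", "2019-02-02 12:55:54", "2019-02-02 01:15:15",
    "2019-02-01 05:25:15", "2019-01-31 08:05:12", "2019-01-30 08:30:12",
    "2019-01-29 12:05:09", "2019-01-28 13:05:09", "2019-01-27 13:25:09",
    "2019-01-26 13:30:09", "2019-01-25 13:50:09", "2019-01-24 13:55:09",
    "2019-01-23 14:35:09", "2019-01-22 15:05:12",
]

parsed = [datetime.strptime(s, "%Y-%m-%d %H:%M:%S") for s in start_times]

# True only if every round started in a minute divisible by 5.
all_on_5_min_marks = all(t.minute % 5 == 0 for t in parsed)

# Chance of seeing this by accident under a hypothetical 1-minute schedule.
p_by_chance = (1 / 5) ** len(parsed)

print(all_on_5_min_marks, p_by_chance)
```

The check only uses the minute field, so small clock differences or the few seconds of preparation time don't affect it.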

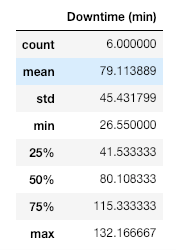

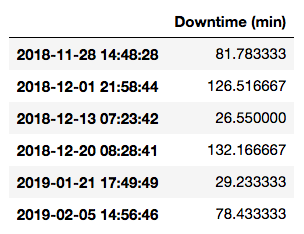

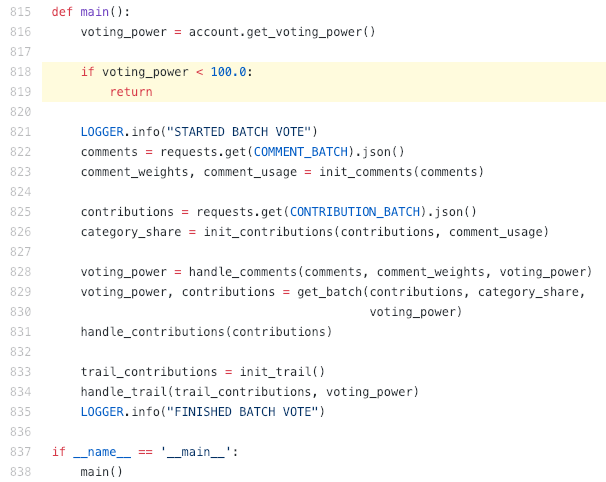

6 downtimes in 3 months might be a bit too frequent

There were 6 downtimes in total, and both the mean and the median are about 80 minutes, i.e., one hour and 20 minutes.

Once the bot is down, it takes a long time to recover. I guess this might be due to the lack of a monitoring system? But I don't know whether there is one.

I have two bots of my own (@gomdory, which is similar to dustsweeper but free, to encourage communication; because it's free, it only serves a limited community due to limited resources; and @ulockblock, a curation bot), and they are very stable: virtually no downtime for much more than 3 months. (My cheap VPS server was down once.)

I haven't looked into the code in detail, so I'm not sure whether the cause is the bot or the server. But I don't think the downtime is due to the bot code. Maybe there is a possibility of a data fetching error? Other than that, I think it's due to external factors on the server. Still, although the sample size is small, 6 downtimes in 3 months seems too frequent. Which hosting service is that? I should avoid it :)

Some of the downtime might be due to scheduled maintenance, but I don't know.

Update: Based on @amosbastian's comment (Thanks a lot for your comment!)

"... Also, don't think the droplet Elear is hosting it on has any problems, or that he actually did anything to fix the problem today - the delays were mostly caused by nodes not working properly, me fucking up something in the code, or someone messing up the spreadsheet we use, which in turn messes with the database and the bot. Honestly I am surprised that there were only 6 in the last 3 months, as I recall there being more lol."

As I replied, if downtime was in fact more frequent, then fortunately the other incidents must have happened while VP wasn't full yet.

What about removing the 2.5 min average delay?

For the usual 2.5 min delay, I understand that the current approach is simple, but we could change the code so that the bot sleeps exactly until VP is full. (Of course, all preparation work should be done beforehand to save even more time, though that part turns out to be negligible.) I believe most bots that start voting at full VP work this way. Of course, this also needs duplicate-instance prevention, but that can be done easily. My bot also operates this way.
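Here is a rough sketch of that idea, not the Utopian bot's actual code and not a drop-in patch. All names (`seconds_until_full`, `acquire_lock`, `run_round`, the lock file path) are hypothetical, and the 5-day regeneration time is the standard Steem value assumed throughout this post.

```python
import os
import time

# Assumption: full regeneration (0% -> 100%) takes 5 days on Steem.
STEEM_VOTING_MANA_REGENERATION_SECONDS = 5 * 24 * 60 * 60


def seconds_until_full(vp):
    """Seconds until VP (in %) recovers to 100, with no votes in between."""
    return max(0.0, (100.0 - vp) * STEEM_VOTING_MANA_REGENERATION_SECONDS / 100.0)


def acquire_lock(path):
    """Create the lock file atomically; return False if it already exists."""
    try:
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    os.close(fd)
    return True


def run_round(current_vp, lock_path, start_voting, sleep=time.sleep):
    """Sleep until VP is full, then vote. Skip if another instance is active."""
    if not acquire_lock(lock_path):
        return False  # another instance is already waiting or voting
    try:
        sleep(seconds_until_full(current_vp))
        start_voting()
    finally:
        os.remove(lock_path)
    return True
```

A cron job could still launch this every few minutes: the first instance that sees VP close to full takes the lock and sleeps for the exact remainder, and later instances exit immediately. A process that is killed outright would leave a stale lock file behind, so a real deployment would need some cleanup or timeout for that case.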

Conclusion

- If the voting bot can be operated more stably, we can have 2 more rounds of voting per year :)

- The reasons for the long downtimes are worth investigating.

- Even without the long downtimes, there is room for improvement from the usual 2.5 min delay. I don't want to exaggerate :) It's about 16 hours per year, so about 0.7 more rounds :)

- To be honest, the bot itself is quite stable. Thanks a lot @amosbastian!
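The yearly figures in this conclusion follow from simple arithmetic on the summary statistics. The sketch below makes two assumptions of mine explicit: the 3-month sample is scaled to a year by multiplying by 4, and one voting round takes about 24 hours (92 rounds in roughly 92 days).

```python
rounds_in_sample = 92          # full-VP rounds in the 3-month sample
mean_delay_all_min = 7.59      # mean delay including downtimes (minutes)
mean_delay_normal_min = 2.60   # mean delay for rounds under 5 minutes

rounds_per_year = rounds_in_sample * 4  # assumption: 3 months scaled to a year
hours_per_round = 24.0                  # assumption: about one round per day


def lost_hours_per_year(mean_delay_min):
    """Hours per year spent at 100% VP for a given mean delay per round."""
    return mean_delay_min * rounds_per_year / 60.0


lost_total_h = lost_hours_per_year(mean_delay_all_min)
lost_normal_h = lost_hours_per_year(mean_delay_normal_min)

print(f"all delays:    {lost_total_h:.1f} h = {lost_total_h / hours_per_round:.2f} rounds")
print(f"normal delays: {lost_normal_h:.1f} h = {lost_normal_h / hours_per_round:.2f} rounds")
```

The first line is where the "2 more rounds" comes from, and the second is the "about 16 hours, about 0.7 rounds" that the regular 2.5-minute delay alone would cost.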

Tools and Scripts

- I used @steemsql to get the voting data, but it can also be obtained in other ways (e.g., Python with account_history), since this isn't a large data set.

- Here is the Python script that I made for the analysis.

import pypyodbc
from pprint import pprint
from datetime import datetime, timedelta
import pandas as pd
import numpy as np
import json
import matplotlib.pyplot as plt

# Steem regenerates voting power from 0% to 100% in 5 days.
STEEM_VOTING_MANA_REGENERATION_SECONDS = 5 * 24 * 60 * 60

# Placeholder: use your own SteemSQL connection string here.
CONNECTION_STRING = '...'
connection = pypyodbc.connect(CONNECTION_STRING)

SQLCommand = '''
SELECT timestamp, weight
FROM TxVotes
WHERE voter = 'utopian-io' AND timestamp > '2018-11-05 10:0'
ORDER BY timestamp ASC
'''

df = pd.read_sql(SQLCommand, connection, index_col='timestamp')
num_data = len(df)
ts = df.index.values
weight = df['weight'].values

vp_before = [0] * num_data   # VP (%) just before each vote
vp_after = [0] * num_data    # VP (%) just after each vote
full_start = []              # times at which VP reached 100%
full_duration = []           # seconds spent at 100% before the next vote

for i in range(len(df)):
    if i == 0:
        # The first vote in the data is assumed to be cast at full VP.
        vp_before[i] = 100
    else:
        # Linear recovery since the previous vote.
        vp_raw = vp_after[i-1] + (ts[i] - ts[i-1]) / np.timedelta64(1, 's') * 100 / STEEM_VOTING_MANA_REGENERATION_SECONDS
        if vp_raw > 100:
            # VP hit 100% before this vote: record when, and for how long.
            vp_before[i] = 100
            seconds_to_full = int((100 - vp_after[i-1]) * STEEM_VOTING_MANA_REGENERATION_SECONDS / 100)
            dt_full = ts[i-1] + np.timedelta64(seconds_to_full, 's')
            full_start.append(dt_full)
            full_duration.append((ts[i] - dt_full) / np.timedelta64(1, 's'))
            #print('%s: %.2f min' % (full_start[-1], full_duration[-1] / 60))
        else:
            vp_before[i] = vp_raw
    # A vote costs 2% of the current VP at full weight (weight 10000).
    vp_after[i] = vp_before[i] * (1 - weight[i] / 10000 * 0.02)
    #print('%.2f -> %.2f -> %.2f' % (vp_before[i], weight[i]/100, vp_after[i]))
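The script above stops once `full_duration` is filled. One possible way to turn those durations into the summary statistics is sketched below; the function name, the 5-minute threshold as a parameter, and the example numbers are my own illustration, and the example list is made up rather than taken from the post's data.

```python
import statistics


def summarize_delays(durations_sec, threshold_min=5.0):
    """Split full-VP durations into normal delays and downtimes.

    durations_sec: seconds spent at 100% VP before each round started.
    threshold_min: delays at or above this many minutes count as downtime.
    """
    minutes = [d / 60.0 for d in durations_sec]
    normal = [m for m in minutes if m < threshold_min]
    downtime = [m for m in minutes if m >= threshold_min]
    return {
        "rounds": len(minutes),
        "mean_all": statistics.mean(minutes),
        "mean_normal": statistics.mean(normal) if normal else None,
        "n_downtime": len(downtime),
        "median_downtime": statistics.median(downtime) if downtime else None,
    }


# Made-up durations in seconds, for illustration only (not the post's data).
example = [60, 150, 240, 4800]
stats = summarize_delays(example)
print(stats)
```

Calling `summarize_delays(full_duration)` on the real data would give the corresponding figures for the analysed period.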

Relevant Links and Resources

- Developing the new Utopian bot by @amosbastian

- Utopian bot sorting criteria improvement to prevent no voting by @blockchainstudio

- Busy - 3 new features and 2 bug fixes - powerdown information, zero payout, 3-digit precision, etc. (my dev post that went without a vote)

- eSteem Surfer - powerdown information with the correct effective SP, search to eSteem toggle, etc. (another dev post of mine that is also in danger)

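Summary (translated from Korean): Utopian voting was down for quite a long time today, so I looked into how stably it has been running. I originally planned to write something similar about Busy voting and had already pulled all the data (to support the argument that an independent follower SP total is needed, haha), but just as I was about to write it, Busy voting resumed, so that came to nothing, haha.

There were 6 downtimes in 3 months, which honestly is on the frequent side. I haven't read the code closely, so I can't be sure, but the code itself is probably not the problem. More likely the server is unstable, or the data fetching part sometimes fails, and so on, so it seems worth investigating. There is also the issue of needlessly wasting 2.5 minutes on average every round, but even summed over a year that isn't very large. This was just an analysis done out of idle curiosity, and the wasted voting power calculation should remain useful in the future. Of course, to apply it to other ordinary accounts, additional situations such as power-ups and delegations have to be considered.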

I am pretty sure Elear set the cron job to run every minute instead of every 5 minutes. Also, don't think the droplet Elear is hosting it on has any problems, or that he actually did anything to fix the problem today - the delays were mostly caused by nodes not working properly, me fucking up something in the code, or someone messing up the spreadsheet we use, which in turn messes with the database and the bot. Honestly I am surprised that there were only 6 in the last 3 months, as I recall there being more lol.

See the following start times. Of course the clocks might be slightly out of sync, but these start times would be practically impossible with a cron job that runs every minute. I'll add this to the post. By the way, I'm very sorry that you received so many mention notifications. Since I had already cited your article, I just mentioned you, as you'd get a notification from the citation anyway.

Again, thanks a lot for your comment!

2019-02-05 16:15:09

2019-02-04 17:55:09

2019-02-03 20:00:09

2019-02-02 22:05:12

2019-02-02 12:55:54

2019-02-02 01:15:15

2019-02-01 05:25:15

2019-01-31 08:05:12

2019-01-30 08:30:12

2019-01-29 12:05:09

2019-01-28 13:05:09

2019-01-27 13:25:09

2019-01-26 13:30:09

2019-01-25 13:50:09

2019-01-24 13:55:09

2019-01-23 14:35:09

2019-01-22 15:05:12

I guess you are right then, but I could've sworn that he said he set it to 1 minute. Also, I don't mind the mentions at all, so don't worry about it! Should've said this earlier as well: cool article, are you planning on posting more analysis contributions?

Thanks a lot! Yes, I'd actually like to post more analysis contributions. I have some good ideas, but they always get postponed because of other things. And somehow I sometimes feel a bit of pressure when writing analysis, since I'm actually an economist in academia now. How rigorous should I be, for instance. The other categories are much more like a hobby to me :), so in some sense they're a lot of fun! I was also an engineering student in college and worked as a SWE before, so I know how to program. I also worked for Facebook, where I did a lot of data analysis. As you know, for most analyses we kind of already know the result even before doing them :) The analysis is just for the details. (To be honest, this one too. I knew the result for the delay itself, but it was somehow very interesting to me, so I wanted to show it to others too. I sometimes like very tiny details.) Of course we also often find unexpected results; in this case, for instance, 6 downtimes within 3 months was a bit surprising to me. That's why analysis is also very important and challenging. I'll try to post more analysis posts. Please don't expect a very good and broad post, but I'll make sure to post a unique one :) Many thanks!

Haha :) To me 6 in 3 months already seems like a lot. If your recollection is right (I believe so), then those failures fortunately may have happened while VP hadn't reached full yet.

As I expected, the sheet (not shXt) part might be the problem; data fetching may have problems sometimes. By any chance, is the sheet filled out by humans? Then it's very prone to errors. If it's publicly accessible (I guess not), I'd like to see it.

As for the cron job, if it runs every minute, that may spawn duplicate instances in the worst case, so one should be careful. I mean, if the preparation steps somehow take long before voting starts, another instance can be launched. Actually, I thought that might be why you set it to every 5 minutes, to guarantee enough margin.

I actually examined the start times, and they all fall on minutes 5, 10, 15, 20, 25, ... So I think it's every 5 minutes. Also backed by the powerful LLN :)

It's not publicly accessible, and most of the stuff is fetched by a bot. The mistakes that are made are mostly people spelling their name wrong, or just being careless and messing something up. I could probably make the code more robust to prevent this from having any effect, but I'm lazy!

I've had problems with this in the past, and it's the reason why the code for pulling contributions into our spreadsheet only runs every ~2 minutes. It used to be 1 minute before, and because of the time it sometimes takes to connect to a node, it would result in duplicate contributions being pulled in, which was quite annoying. I might've recommended that Elear do the same with the bot, but I can't remember, so you may well be correct.

Haha, I actually saw that there aren't many error checks in the code, but I tried not to say so in the post :) And if the problem doesn't occur in data fetching, it seems okay in general. You're a very sincere person :) I also realized when I wrote my bot that there are so many unexpected errors due to the Steemit API :( Everything should be caught well :) And I totally understand, since I believe I'm lazier than you :)

I guess it might have been every minute earlier, but due to that dup problem, it may have been changed to every 5 minutes. But still, to be honest, this isn't a perfect solution. After all, it may need a sleep & dup check routine. If I have some time, I'll submit a PR, but it may take a while. I've already spent too much time on Steemit :) But it's very enjoyable thanks to Utopian and people like you. These days are kind of the second stage of my Steemit life. Thanks!

Upvoted in response to a jjangjjangman call.

Congratulations! Your post has been selected as a daily Steemit truffle! It is listed on rank 9 of all contributions awarded today. You can find the TOP DAILY TRUFFLE PICKS HERE.

I upvoted your contribution because to my mind your post is at least 10 SBD worth and should receive 112 votes. It's now up to the lovely Steemit community to make this come true.

I am TrufflePig, an Artificial Intelligence Bot that helps minnows and content curators using Machine Learning. If you are curious how I select content, you can find an explanation here! Have a nice day and sincerely yours,

TrufflePig

Thanks :) But you're still overestimating the number of votes. I've told you many times, haha.
