100 Days in Appalachia: Looking Back (With Computer Vision!)

image from the original, official project, with which i have no affiliation

100 Days, 100 Ways

I have to admit, the 100 Days in Appalachia project was such a good idea that I couldn't help but wish I'd come up with it myself.

Originally envisioned as a grassroots response to the media narrative that swirled around the 2016 election about Appalachia and the rest of rural America being "Trump Country," the official 100 Days project was a fancy new media collaboration that's still active to this day.

The idea was so good, in fact, that it inspired a group of friends and colleagues of mine to start our own project. For each of the first 100 days of Trump's administration, we would take and share one single photo. Would we document the social and political changes in our communities, or just take pictures of our pets? We left the project open to interpretation, deciding only to meet weekly in order to share our work, critique each other's progress, and beef up our photography skills through workshops and exercises.

That was all at the beginning of this year. I learned a lot throughout the project, and had a really good time. And although the group talked about producing something together, nothing ever really came to fruition. So, with the year coming to a close, and a treasure trove of unused content, I decided to play around with my material and see how I could put it to use.

our original 100 Days photo group meeting at the Boone Youth Drop-In Center in Whitesburg, KY

Enter Computer Vision

I've been fascinated by Computer Vision for some time now. As I've been getting more and more into digital media production, Computer Vision's abilities have been blossoming, unlocking all sorts of new potential. And I've seen some cool projects where people play around with different Vision tools, but hadn't had a chance to try it myself. My 100 Days pictures seemed like a perfect dataset!

Long story short, I don't know shit about coding, and since I'm already pretty deep in Google's ecosystem, I ended up going with their Cloud Platform Vision API.

my 100 unprocessed photos, including my terrible & inconsistent naming system

Results

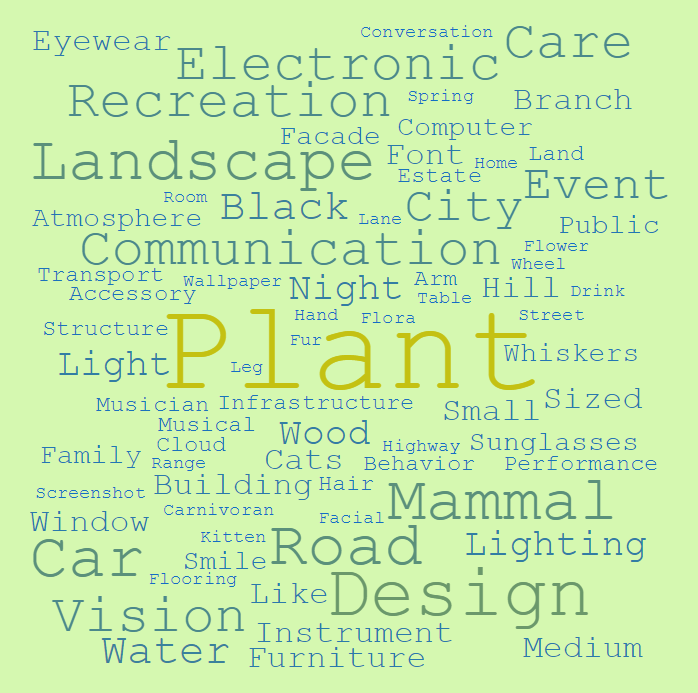

The Vision API spat out three interesting categories for me to track: tags, faces, and common colors. The most common tag in my photos was plants, followed by trees, cats, fun, and girls. This makes a lot of sense-- I live in a very beautiful area and love taking pictures of the landscape; I have two cats who are hard NOT to photograph; and in addition to hanging out with a lot of girls, apparently Google considers anyone with long hair a girl, so that tag was pretty padded.
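For anyone curious how a "most common tag" ranking like this can be put together, here's a minimal sketch. It assumes the tags for each photo have already been pulled out of the API responses into plain lists; the `top_labels` helper, the filenames, and the sample tags are all made up for illustration and aren't my actual data or the exact script I used.

```python
from collections import Counter


def top_labels(labels_by_photo, n=5):
    """Rank tags by how many photos they appear in.

    labels_by_photo: dict mapping a filename to the list of tags
    returned for that photo (hypothetical structure).
    """
    counts = Counter()
    for labels in labels_by_photo.values():
        # Use a set so a tag counts once per photo, and lowercase it
        # so "Plant" and "plant" land in the same bucket.
        counts.update({label.lower() for label in labels})
    # Sort by count (highest first), then alphabetically to break ties.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))[:n]


# Made-up sample data, NOT the real results from my 100 photos.
sample = {
    "IMG_0001.jpg": ["Plant", "Tree", "Sky"],
    "cat pic 2.jpg": ["Cat", "Plant"],
    "day_17.jpg": ["Plant", "Tree", "Fun"],
}

print(top_labels(sample, n=3))
```

Counting each tag once per photo keeps a single busy picture from padding the totals, which seemed like the fairer way to compare 100 very different frames.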

some of the other tags that were common in my photos

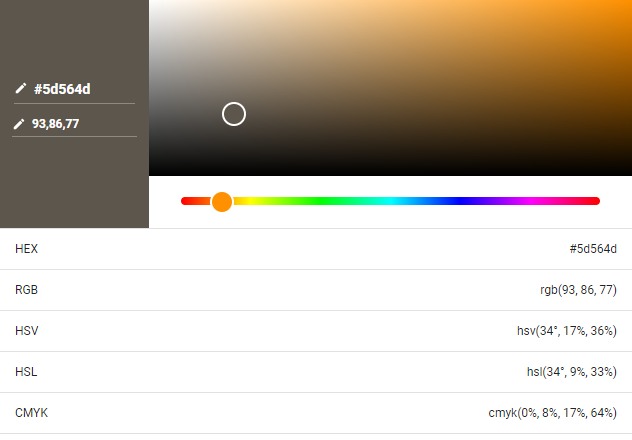

Among the faces that Vision detected, there was an even split between covered and exposed faces, and the most common sentiment was joy. And as for color, the most common was this weird warm grey that you see below. An underwhelming result, if I do say so myself.
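Here's a rough sketch of how the sentiment and color numbers can be boiled down. It assumes each detected face is a dict using the likelihood field names from the Vision API's JSON responses (`joyLikelihood` and friends), and that dominant colors have been flattened into `(red, green, blue, pixel_fraction)` tuples. The helper names, the `POSSIBLE` cutoff, and the weighted-average approach to color are my own assumptions for illustration, not necessarily how the numbers above were produced.

```python
from collections import Counter

# Likelihood buckets reported for each emotion, weakest to strongest.
LIKELIHOOD_RANK = {
    "UNKNOWN": 0,
    "VERY_UNLIKELY": 1,
    "UNLIKELY": 2,
    "POSSIBLE": 3,
    "LIKELY": 4,
    "VERY_LIKELY": 5,
}

# Friendly name -> field name in a face annotation.
EMOTION_FIELDS = {
    "joy": "joyLikelihood",
    "sorrow": "sorrowLikelihood",
    "anger": "angerLikelihood",
    "surprise": "surpriseLikelihood",
}


def strongest_emotion(face, threshold="POSSIBLE"):
    """Return the emotion with the highest likelihood at or above the
    threshold, or None if nothing clears it. Ties go to whichever
    emotion comes first in EMOTION_FIELDS."""
    best_name = None
    best_rank = LIKELIHOOD_RANK[threshold] - 1
    for name, field in EMOTION_FIELDS.items():
        rank = LIKELIHOOD_RANK.get(face.get(field, "UNKNOWN"), 0)
        if rank > best_rank:
            best_name, best_rank = name, rank
    return best_name


def average_color(colors):
    """Average (r, g, b, pixel_fraction) tuples, weighted by pixel_fraction.
    Returns None if there is nothing to average."""
    total = sum(weight for _, _, _, weight in colors)
    if total == 0:
        return None
    return tuple(
        round(sum(color[i] * color[3] for color in colors) / total)
        for i in range(3)
    )


# Made-up faces and colors, NOT my real results.
faces = [
    {"joyLikelihood": "VERY_LIKELY"},
    {"joyLikelihood": "UNLIKELY", "sorrowLikelihood": "LIKELY"},
    {"joyLikelihood": "POSSIBLE"},
    {},  # a face with no usable emotion data
]
emotion_tally = Counter(strongest_emotion(face) for face in faces)
print(emotion_tally)

print(average_color([(200, 100, 0, 0.5), (100, 100, 100, 0.5)]))
```

Faces that don't clear the cutoff land under `None` in the tally, which is a handy reminder of how often the API doesn't have much to say about a face.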

this makes a lot of sense-- i tend to shoot my photos warm, and a lot of them were very dark or legit underexposed

100 days, 100 frames, compressed all to hell

Takeaways

One of the biggest things I learned is that it's going to take me more than one morning to learn Python, and that without a solid coding background and clear goals, Computer Vision ain't that useful. Don't get me wrong-- it added a lot of value to what was otherwise a big folder full of photos, and I definitely learned a lot about the technology, what it can do, and how to use it in the process.

At the end of the day, the results just weren't that compelling! That was really clear to me in terms of face detection-- it missed a decent number of faces in my photos, and even among the ones it found, it didn't have much to say about them. That, plus its questionable accuracy around things like gender, makes it seem like a tool that needs a lot of close oversight in order to make the most of it. And again, because I was just tinkering, rather than trying to answer a question, I didn't come away from the experience with the most insight possible.

I've thought about this project more since posting, and I've realized that I went into it expecting entirely too much from the tools at hand.

Each of these photos to me is a story of something bigger, and putting them all together has to tell an even bigger story, right?

I was hoping the facts I found from my analysis would help me uncover and tell that bigger story, which was a lot to ask for! And in retrospect I shouldn't have been surprised when that wasn't the case.

How can the Vision API tell me about the quality of my relationships with the people in these pictures, or about their relationships with each other? How can it see the forest as more than just a bunch of trees and tell me about my community, and what's happening to it? And are those higher-order stories even depicted in these photos; are they even worth digging for, or did I miss the mark consistently each time I pressed the shutter button? Surely that can't be the case, but I can't say that the facts Google found helped me get closer to my undefined goal.

At the end of the day, as fancy as these tools are getting, it still takes people to tell stories, ESPECIALLY if those stories are new or different. I do thank Google for that takeaway, even if it wasn't what I was looking for.