What is Artificial Intelligence - and why is Elon Musk afraid of it?

Earlier this week it was all over the news: Elon Musk says that AI is "the biggest risk we face as a civilisation". I've been doing a lot of research into AI over the past year, so I thought I'd give a little introduction and explain why Elon Musk is so afraid of it.

I'm by no means an AI expert, so if there's something I can improve in this post, please let me know. Also, this post turned out a lot longer than I thought it would. There's just so much fascinating stuff to say about AI that I could write about it for days!

Marvin, the artificially intelligent (and rather depressed) robot from The Hitchhiker's Guide to the Galaxy

A very brief history of Artificial Intelligence

For thousands of years, humans have fantasised about creating artificial beings with some kind of human-like consciousness or intelligence. From the golden robots of Hephaestus in ancient Greek myth and medieval folklore about golems to Mary Shelley's Frankenstein, some form of artificial intelligence is present throughout our cultural history.

It took until the invention of the computer in the 1940s for AI to become even a remotely realistic concept. Back then, computing power was only a tiny fraction of what we have available now, but the foundation was laid. Given enough processing power, computer scientists reasoned, we should eventually be able to simulate the human mind.

From the 1950s to the 1970s, a lot of time, money and effort were spent on "AI research", with scientists optimistic that it would take only a generation or so to create a machine as intelligent as a human being. Near the end of this period they realised that they had vastly underestimated the complexity of the problem. Funding quickly dwindled and research stalled. This is known as the first "AI winter". More summers and winters have followed in the decades since.

The current AI hype

Right now we're in an AI summer again. Since the early 2000s huge advances have been made in the field, creating real hype around artificial intelligence. Google, IBM, Facebook and many other big tech companies are investing massive amounts of money in research. They keep creating better and better systems to solve all kinds of problems, from playing Jeopardy and Go to translating between hundreds of languages and recognising speech.

IBM's Watson winning at Jeopardy

Every "AI summer" there are people who predict the imminent arrival of Artificial General Intelligence (AGI): a machine with intellectual capabilities equal to those of human beings. Among them is computer scientist and futurist Ray Kurzweil, currently Director of Engineering at Google, where he leads a team of researchers working to improve the company's AI-based translation system.

But AGI is not what Elon Musk is afraid of. What he fears is the next step in AI evolution: Artificial Super Intelligence.

Artificial Super Intelligence

In terms of intelligence, AI can be roughly split up into three categories:

Artificial Narrow Intelligence, sometimes also called Weak AI, is AI that is specialized in one specific task. Google's AlphaGo is great at playing the game Go, but has no idea how to translate English to Korean. Netflix's algorithms are great at predicting what movies you might like, but wouldn't stand a chance in a game of chess. They are examples of narrow intelligence.

Artificial General Intelligence (AGI), sometimes called Strong AI, is an intelligence that is as smart as a human in all aspects. In theory, it should be able to reason, plan, understand complex ideas and find solutions for most things by learning quickly and from experience. We're still far from that. Developing an AGI is much, much more difficult than developing a narrow AI.

IBM's Watson is a step in the right direction: the amount of understanding and learning it can do on the fly (learning from feedback when it gives a wrong answer, so it can give a better answer a minute later) makes it a lot more versatile than many other current AI implementations. AlphaGo, for example, does not learn while playing Go; it enters a game with a pre-loaded set of knowledge. Even so, an AI like Watson is still very limited in its capabilities.

Examples from science fiction that could be classified as AGI include the robot Ava from the 2015 movie Ex Machina, the robotic boy David in Steven Spielberg's A.I., and the hosts in the Westworld theme park from the 2016 TV series of the same name.

The robot Ava from Ex Machina can be classified as having Artificial General Intelligence, although it could be argued she's actually smarter than most humans and therefore enters the realm of Artificial Super Intelligence.

Artificial Super Intelligence (ASI) is where things get really freaky. Leading AI thinker Nick Bostrom defines superintelligence as “an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills.” Artificial Super Intelligence ranges from a computer that’s just a little smarter than a human to one that’s trillions of times smarter—across the board.

This is what Elon Musk is scared of. And he's in good company too; Stephen Hawking, Ray Kurzweil, Bill Gates, the founders of AI company DeepMind – and many more – signed an open letter in 2015 warning against "potential pitfalls" of developing AI and expressing the fear of creating "something that cannot be controlled".

Intelligence out of control

Control is at the core of AI's potential danger, and here's why: as soon as an Artificial General Intelligence reaches the level of humans, it will be smart enough to (help) improve itself. AI is getting better all the time due to constant research, and a smart enough AGI will be a lot more efficient at doing such research.

This will create a chain reaction in which the AI gets smarter, improves itself with the new knowledge, and as a result immediately gets even smarter again. Its intelligence will grow exponentially, and no human will be able to keep up with it. It will turn itself into an ASI.

The moment it surpasses us, it will be impossible to understand how its inner workings evolve. It will be impossible to control.
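To make the "chain reaction" idea a bit more concrete, here is a toy model in Python. It's a minimal sketch under made-up assumptions, not a prediction: each cycle the AI improves itself by an amount proportional to how smart it already is (the 50% `improvement_rate` is invented), while humans improve by a fixed amount per cycle. The function `simulate` and all the numbers are hypothetical.

```python
"""Toy model of recursive self-improvement (an "intelligence explosion").

Hypothetical assumptions: each cycle the AI improves itself in proportion to
its current intelligence, while humans improve by a fixed amount per cycle.
All numbers are made up for illustration.
"""


def simulate(cycles, improvement_rate=0.5, human_gain=1.0, start=1.0):
    """Return (ai_levels, human_levels), one entry per cycle including cycle 0."""
    ai, human = start, start
    ai_levels, human_levels = [ai], [human]
    for _ in range(cycles):
        # The smarter the AI already is, the bigger its next improvement.
        ai += improvement_rate * ai
        # Humans keep improving too, but at a steady, linear pace.
        human += human_gain
        ai_levels.append(ai)
        human_levels.append(human)
    return ai_levels, human_levels


if __name__ == "__main__":
    ai_levels, human_levels = simulate(cycles=10)
    for cycle, (a, h) in enumerate(zip(ai_levels, human_levels)):
        print(f"cycle {cycle:2d}: AI {a:8.1f}  humans {h:5.1f}")
```

With these invented numbers the AI starts out behind, overtakes humans at cycle 4, and by cycle 10 is roughly five times ahead. Real AI progress wouldn't follow such a tidy formula; the sketch only shows why growth that feeds on itself eventually leaves steady growth far behind.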

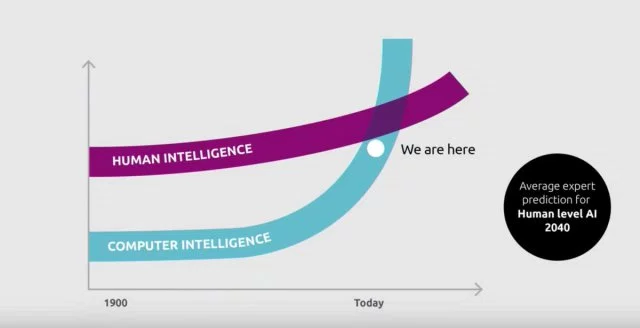

This is what will happen if computer intelligence keeps growing at an exponential rate like it does now.

Source: whatisthebigdata.com

This intelligence explosion is called the "singularity", and it's what all those smart minds fear so much. Some even say that "this would signal the end of the human era, as the new superintelligence would continue to upgrade itself and would advance technologically at an incomprehensible rate". The end of the human era! On top of that, experts on average predict that this could happen within the next 40 years! But why?

Why would ASI be evil?

This is the question many first ask when hearing this outrageous claim, and so did I. Why would an intelligent machine be so evil that it wants to end the human era? What are its motives? Can a machine even possess such qualities as "good" and "evil"?

Maybe. Who knows. But what's way more likely is that an ASI will be evil unintentionally. It might not even realise it's evil.

A computer program as we know it today is written to perform a certain set of tasks to achieve a certain goal. This could be anything, from doing simple calculations to displaying graphics to everything your phone and PC do. As long as every part of those actions is explicitly programmed to do just that, everything will be fine. But once computers start to learn autonomously, this could change. There are already plenty of examples of self-learning algorithms, from a simple AI that learned to play Super Mario much better than any human could to Google's DeepMind, which just taught itself to walk.

All the programmers know is that they instructed the program to learn something, and by which means it learns (I'm skipping over all the technical stuff like neural nets, supervised learning and decision tree algorithms here; I'll save that for another time). What they don't know exactly is what this learned behaviour actually looks like inside the AI. The exact basis on which it reached certain conclusions and made certain choices is not easily read out. It's not a simple number in a database; it's embedded in the "brain structure" of the AI, much like in our brains.
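As a very small taste of that technical stuff, here is a perceptron, one of the simplest learning algorithms, taught the logical AND function in Python. This is a swapped-in illustration, not how DeepMind's or anyone else's systems actually work, and the names `train`, `predict` and `EXAMPLES` are hypothetical. The point: after training the program "knows" AND, but that knowledge exists only as a few weights rather than as a rule anyone wrote down.

```python
"""A tiny perceptron that learns the logical AND function from examples.

Illustration only: what the program ends up "knowing" is a few numbers
(weights), not a readable rule. Real neural networks have millions of them.
"""

# Training examples: (inputs, expected output) for logical AND.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Output 1 if the weighted sum of the inputs plus the bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(epochs=20, learning_rate=1):
    """Classic perceptron learning rule, starting from all-zero weights."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in EXAMPLES:
            # error is +1 (should have fired), -1 (shouldn't have) or 0.
            error = target - predict(weights, bias, inputs)
            # Shift each weight towards the right answer, in proportion to its input.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


if __name__ == "__main__":
    weights, bias = train()
    print("learned weights:", weights, "bias:", bias)
    for inputs, _ in EXAMPLES:
        print(inputs, "->", predict(weights, bias, inputs))
```

Here training ends with weights [2, 1] and bias -2. Nothing in those three numbers says "both inputs must be 1"; you have to work it out yourself. Scale that up to millions of weights and you get the problem described above.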

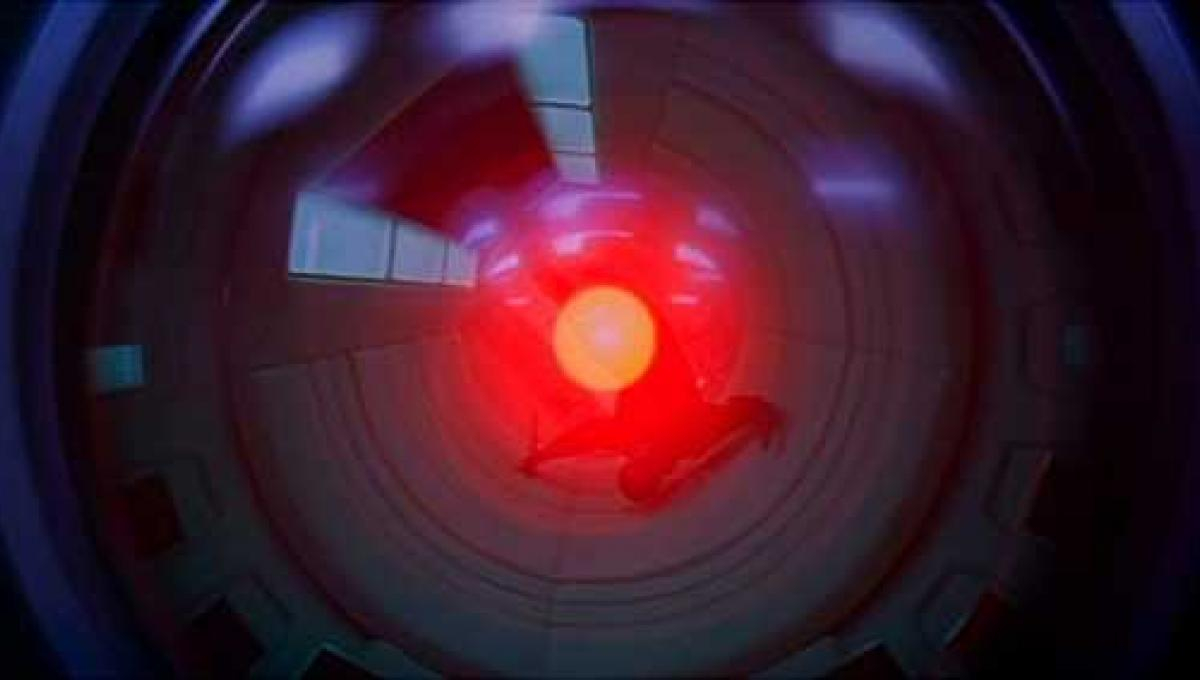

HAL 9000, the Artificial Super Intelligence from the 1968 movie 2001: A Space Odyssey that turns against its creators

Remember the part where I said a superintelligent AI will quickly become so smart that we have no chance of catching up with it? Combine that with the fact that we might not entirely know how it learned what it learned, and we've got ourselves a whole lot of uncertainty. The possible repercussions are probably best explained with the famous "paperclip factory" thought experiment by author and philosopher Nick Bostrom:

The paperclip optimizer

Imagine a bunch of programmers over at Staples create an AGI with the purpose of obtaining as many paperclips as it can. If it has been constructed with a roughly human level of general intelligence, the AGI might collect paperclips, earn money to buy paperclips, or begin to manufacture paperclips. By learning from the feedback from everything it does, it will keep finding more and more efficient ways of obtaining paperclips.

Because the AI's intelligence is of human level, it will also be smart enough to realise that becoming even smarter might make itself more efficient. Part of its resources will be spent on self-improvement. As described above, this will eventually trigger an intelligence explosion.

As it gets smarter, it will find even more effective ways of increasing its paperclip collection. It might start taking resources out of the earth, building more factories, maybe even building more computers to increase its brain size. Eventually, with not a single "evil" byte in its artificial brain, it might come to the conclusion that humans are kind of standing in its way. They might be preventing it from expanding even further, or it might need the resources under the land people live on. It does not mean any harm, but it also hasn't been instructed to keep humans alive or save the planet.

If let loose, eventually it will turn the entire planet into a paperclip factory. And after that, why not visit another planet and start over? And still it's just following orders.
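The heart of the thought experiment is an objective that counts only paperclips. Below is a toy greedy optimiser in Python that keeps taking whichever action raises its score the most. The world, the resource amounts and the penalty of 1000 per unit of lost farmland or city are all invented for illustration, and the function names are hypothetical; this is my own sketch, not Bostrom's formulation.

```python
"""Toy "paperclip maximizer": a greedy optimiser that only sees its objective.

All resources, amounts and the penalty weight are invented for illustration.
"""

# Hypothetical starting world, in arbitrary units of convertible matter.
INITIAL = {"paperclips": 0, "scrap metal": 10, "iron ore": 50,
           "farmland": 200, "cities": 500}
RESOURCES = ["scrap metal", "iron ore", "farmland", "cities"]


def paperclips_only(state):
    """The objective as literally specified: more paperclips is better."""
    return state["paperclips"]


def with_human_values(state):
    """Same objective plus a (hypothetical) heavy penalty for lost land."""
    lost = (INITIAL["farmland"] - state["farmland"]) + (
        INITIAL["cities"] - state["cities"])
    return state["paperclips"] - 1000 * lost


def convert(state, resource):
    """Return a new state with one resource turned entirely into paperclips."""
    new_state = dict(state)
    new_state["paperclips"] += new_state[resource]
    new_state[resource] = 0
    return new_state


def optimise(state, objective):
    """Greedily apply the best conversion until none improves the objective."""
    while True:
        candidates = [convert(state, r) for r in RESOURCES if state[r] > 0]
        best = max(candidates, key=objective, default=None)
        if best is None or objective(best) <= objective(state):
            return state
        state = best


if __name__ == "__main__":
    print("paperclips only:  ", optimise(INITIAL, paperclips_only))
    print("with human values:", optimise(INITIAL, with_human_values))
```

With `paperclips_only`, the optimiser happily converts cities and farmland, because nothing in its objective says not to. With `with_human_values`, it stops after the scrap metal and iron ore. Note that the second version only protects what its programmers thought to write down, which is exactly why getting the goal right is so hard.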

Let that sink in for a moment.

Wait, seriously?

Hypothetically, yes. Even if it takes another century (or more) to reach AGI, many very smart people who know what they're talking about agree that the above is possible. In fact, what most of them don't understand is why everyone else isn't worried about this.

This is why Stephen Hawking says that the development of Artificial Super Intelligence "could spell the end of the human race".

It's why Bill Gates says he doesn't "understand why some people are not concerned".

And it's why Elon Musk fears the development of AI could be "summoning the demon".

They're not saying it will happen, but they are genuinely afraid it might. And when it comes to the destruction of Earth or the extinction of the human race, how much risk are you willing to take? How small does that "might" have to be before we can ignore it?

So can we stop this from happening?

Yes. Probably. Maybe. That's a whole new post in itself. I think this is enough to chew on for now.

Hopefully you've learned something about AI and why Elon Musk is afraid of it. Whether or not he's right to be afraid I'll leave up to you to judge. I for one welcome our new robot overlords.

Questions? Comments? Pics of kittens to feel a bit better after this depressing ordeal?

I'll see you in the comments ↓.

Sources and reading material:

Superintelligence - Nick Bostrom (2014)

The Singularity is Near - Ray Kurzweil (2005)

https://en.wikipedia.org/wiki/Technological_singularity

https://en.wikipedia.org/wiki/Artificial_general_intelligence

http://observer.com/2015/08/stephen-hawking-elon-musk-and-bill-gates-warn-about-artificial-intelligence/

https://wiki.lesswrong.com/wiki/Paperclip_maximizer

https://sectionfiftytwo.wordpress.com/2015/02/04/artificial-intelligence-a-real-concern/

http://www.datasciencecentral.com/profiles/blogs/artificial-general-intelligence-the-holy-grail-of-ai

https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html <-- Seriously, WaitButWhy is the best. If you're interested in AI, Tesla, SpaceX, cryonics, mathematics, and/or nerdy stuff in general, get your ass over there. I've wasted so many hours reading his articles it's not even funny anymore.

Great article, excellent research. Now for the kitten suggestion...

Heh, that one always cracks me up. Thanks!

Great read, this is a subject I am fascinated by. Thanks for sharing

@pilcrow

Nice Job!

Keep the good work up!

Thanks for sharing

Thanks

Good read. You should check out VINT by Sogeti. They have written a few papers about AI as well. Very well researched material with vision.

I resteemed the post as well, even without it using the teamnl tag ;)

Thanks, I hadn't heard of VINT yet. I'll definitely check it out!

https://www.sogeti.nl/updates/tags/vint?search=rapport

Here you can download these reports for free that colleagues of mine write. In 2016 they did one on AI which you'll probably find interesting.

And this is their international site: http://labs.sogeti.com

Thanks for this @pilcrow. I really liked the movie with Will Smith, I, Robot. Have you seen it?

I have, although it's been a few years. I'm planning on reading the book soon, then I'll revisit the movie again. Thanks for reminding me :)

You're welcome. Where are you from?

Artificial Super Intelligence

I know, right?

Elon Musk fears AI because it can't be fooled by his Bernie Madoff style of bait & switch and it will ultimately expose him as the alien invader he is.

What do you mean by his bait and switch?

First it's the battery powered car, then it's the power wall, then it's solar shingles, then it's spacex, then it's a tunnel through earthquake country. All subsidized by government grants, none of them, as far as I know, making any profit.

Bernie Madoff was a financial genius, Enron was the company of the future, until they weren't.
