Using crappy data to help you spot & ignore bull-shit research.

Moving my old engineer.diet blog posts to steemit. I've moved all my blogs to steemit so I'm dismantling my paid-for WordPress hosting. This post is an old one from February 22nd 2017 that I thought was worth salvaging.


It is interesting to see how different groups, I’d call them tribes, are ready to embrace research that fits their preconceptions while rejecting other research. On social media people, myself included, often get criticized for ignoring evidence. As a data guy I feel strongly that data science is the science of removing noise, that is, the science of finding out what is better ignored. Looking at nutritional science, the problem doesn’t seem to be that (tribal) people choose to ignore compelling evidence; the main issue lies in accepting evidence that could well be spurious and thus is better ignored. There is a lot of crappy research out there when it comes to nutrition, and a whole lot of crappy underlying data sets that more often than not are closed to public scrutiny.

While epidemiological research, especially nutritional epidemiological research, is often frowned upon as substandard and grouped together with the likes of rodent studies, and while from a causal reasoning point of view it will often be close to useless for proving anything conclusively, in this post I want to look at using epi data as a bullshit filter for research that is supposed to be of a higher order.

To do this, we pick a piece of potential bullshit and an open epi data set that should include the variables that are important to us. Note that the research I look at here is just an example of research that is supposed to be stronger and more rigorous than the frowned-upon epi studies and animal studies: a systematic review and meta-analysis, in this case one investigating the link between dietary fibre intake and risk of cardiovascular disease. We take a good look at the conclusion of this study and see if we can apply our bullshit filter to it. I picked this study because the claim it makes is relatively easy to map onto an adjustment of epi data, which lets us use that epi data set as a bullshit filter, and because the supposedly massive health benefits of dietary fibre, especially the quantification of these benefits, seem to crumble most consistently when a bullshit filter like the one discussed in this blog post is applied. What I’ll be doing here is something the researchers could easily have done themselves, and something not uncommon when exploring scenarios in a forensic setting (much of my own background is in forensics); it is, however, a check that no one in nutrition seems inclined to apply.

So what does this paper claim? What effect, and how strong is this effect? The paper claims:

“Total dietary fibre intake was inversely associated with risk of cardiovascular disease (risk ratio 0.91 per 7 g/day”

Remember we are talking about a systematic review here, so we should expect the probability of this effect being real, and showing up in any epi set, to be rather high. So let’s bring in our bullshit filter. As our bullshit filter we use the 1989 data sets from the China Study.

I’m not talking about the book of the same name here. The book is, to state it bluntly, a smoking pile of rhetoric against (animal) protein and saturated fat that lacks the substantial rooting in the actual China Study data set to justify its name. That is, there is surprisingly little China Study in the China Study book. Don’t get me wrong, it is an amazing book that posed an interesting hypothesis, and I feel strongly we shouldn’t judge pop-science books as if they were scientific papers. It is just that this particular book has risen to the status of scripture in certain dietary communities, where it should be considered a well-written book about an originally compelling hypothesis that, like so many good hypotheses, has contributed to the scientific process but should no longer be considered viable. But enough about the China Study book for now; we are here to look at poorly done peer-reviewed science, not at pop-sci books advocating superseded theories.

Last November CTSU pulled the China Study data sets, data that had been publicly available for at least 13 years, from its website. Tribal HFLC conspiracy theorists might argue that the CTSU pulled the China Study data sets in some kind of evil vegan conspiracy. I’m not assuming any such malice, nor do I feel there is any real reason to assume so. The important thing is that archive.org still has the raw data available for us to use as a bullshit filter. The data set is by far not as impressive as it is made out to be. There are many holes, and the data has no info on any higher-order moments of variables from sub-populations. We can’t really be all that picky though. Many interesting data sets are completely unavailable to the public, so the people behind the China Study, no matter what one might think about their persistent stand behind an outdated hypothesis, must be lauded for making the data available to the public rather than hiding behind IRB firewalls. It must have been a hell of a job to compile this data set; yes, there are nits with its design, but we can still use it.

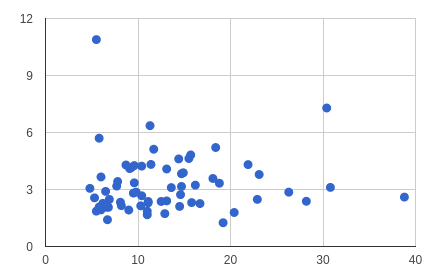

There are 66 data points that contain both fiber intake and cardiovascular mortality. Each data point represents a regional Chinese population in 1989. This is very interesting, as it will likely make the data set devoid of many of the western associational clusters that might dominate our systematic review. So let’s look at our data points.

Pretty much the starry-sky type of plot we have come to expect from epi data sets, so let’s look at the correlation numbers. Our correlation is +0.06 with an unimpressive p value of 0.64. Does this mean anything? Well, it means that based on this data we are unable to reject the null hypothesis that this is a random correlation, but that isn’t actually what we are interested in here, and if it was, not being able to reject a null hypothesis basically gives us zero info. We need a different null hypothesis to work with, and the paper we discussed at the beginning gave us one.
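For readers who want to run this kind of check themselves, here is a minimal sketch of computing a correlation and a p value using only the Python standard library. Some loud caveats: the post does not say which tool produced the numbers above, so the permutation test used here is my own stand-in and not necessarily the original method, the function names are made up for this illustration, and the six data points are placeholder values, not China Study data.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def permutation_p_value(xs, ys, n_perm=10_000, seed=0):
    """Two-sided p value: the share of random shuffles of ys whose
    |r| is at least as large as the observed |r|."""
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(pearson_r(xs, shuffled)) >= observed:
            hits += 1
    # The +1 terms keep the estimate away from an impossible p of exactly 0.
    return (hits + 1) / (n_perm + 1)


# Placeholder values for illustration only, NOT the China Study figures.
toy_fibre = [8.0, 10.5, 12.0, 13.5, 15.0, 19.0]
toy_mortality = [3.1, 2.7, 3.9, 3.0, 3.5, 3.2]

print("r =", pearson_r(toy_fibre, toy_mortality))
print("p =", permutation_p_value(toy_fibre, toy_mortality))
```

With the real data you would replace the two toy lists with the 66 regional fibre intake and cardiovascular mortality values.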

So how do we go about using this statement as our null hypothesis?

“Total dietary fibre intake was inversely associated with risk of cardiovascular disease (risk ratio 0.91 per 7 g/day”

Well, let us start off by assigning a standard risk ratio of 1.0 to the mean fibre intake in our data set (12.99 grams). We also take note of the mean of the cardiovascular mortality figures (3.24).

Now for each data point we have two values: F, the average number of grams of fiber, and V, the cardiovascular mortality figure for the region. So first we look at how many times 7 grams our F value is removed from the average of 12.99 grams.

n=(F-12.99)/7

The next thing we do is calculate an adjusted risk ratio based on n:

R=0.91ⁿ

Finally, we calculate the residual error for our fit using the adjusted risk ratio and the average mortality number:

E=V/(R×3.24)
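The three steps above can be sketched in a few lines of Python. The constants come straight from the text (mean fibre intake 12.99, mean mortality 3.24, risk ratio 0.91 per 7 g/day); the function and constant names are my own invention for illustration.

```python
MEAN_FIBRE = 12.99      # mean fibre intake in grams, from the data set
MEAN_MORTALITY = 3.24   # mean cardiovascular mortality figure, from the data set
RISK_RATIO = 0.91       # risk ratio claimed by the paper ...
STEP_GRAMS = 7.0        # ... per this many grams of fibre per day


def adjusted_risk(fibre):
    """R = 0.91^n, where n is the distance from the mean intake in 7 g steps."""
    n = (fibre - MEAN_FIBRE) / STEP_GRAMS
    return RISK_RATIO ** n


def residual(fibre, mortality):
    """E = V / (R * 3.24): observed mortality relative to what the paper's
    claimed effect would predict for this fibre intake."""
    return mortality / (adjusted_risk(fibre) * MEAN_MORTALITY)


# A region at exactly the mean intake and mean mortality.
print(residual(12.99, 3.24))
# A region eating 7 g/day more than the mean, still with mean mortality.
print(residual(19.99, 3.24))
```

A region sitting exactly on the means gets E = 1. A region eating 7 grams more than average but with exactly average mortality gets E = 1/0.91, or roughly 1.10: its mortality is about 10% higher than the paper's claim would predict. If the claimed effect were real, E should show no trend when plotted against fibre intake.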

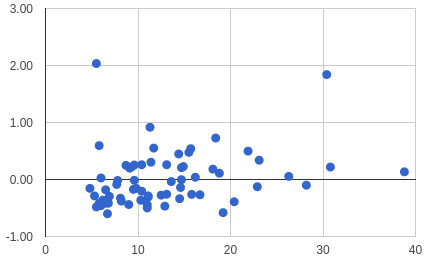

So now we look at how E looks when plotted against fibre intake:

Well, at first sight it is just as starry-sky as, and kind of similar to, our original plot, but notice it is kinda tilted. So how about the correlation and p value? Well, our correlation went from +0.06 to +0.27, not really massive, and we would expect something like this given that our model predicted a negative r to begin with, but how about the p value? Now here is where it gets interesting and possibly kinda controversial. Our p value for the correlation in our residual error is 0.026. I intentionally chose these two studies to search out an edge case such as this. Should we reject our null hypothesis, which is based on what is perceived to be higher-order evidence, purely because an unrelated epi data set that is perceived as substandard suggests we should? I’m saying suggests, because 0.026 isn’t all that strong to begin with; at least, it wouldn’t be if we were looking to reject a regular null hypothesis.

But we should look at what our null hypothesis actually is. In law enforcement, and thus by proxy in forensic science, the concept of asymmetry of proof is very important. The evidence needed to convict should be held to higher standards than exculpatory evidence, and I would argue, especially given the arguably high rate of spurious claims in nutritional science, that this should be a valid strategy here too. So how about epi evidence being substandard evidence? Well, one thing to consider is that while the data is arguably confounded, spawning higher amounts of spurious associations on one end, the data points that make up N (used in calculating the p values) represent whole sub-populations, thus leading to a conceptual inflation of the true p. If we balance these three aspects, I would argue that a p value threshold of 0.05 would be just about right for determining whether a piece of nutritional research should be ignored.

I know that using arguably confounded data sets to decide to ignore evidence that is widely considered superior will be a hard sell, but if we consider the asymmetry of proof, as used in law enforcement and forensics, to be essential in a field of science plagued by spurious associations and spurious causality claims, we must affirm there is a definite need for bullshit filters. If the epi data has minimal cultural overlap with the research looked at, there is definitely added value in using epi data. And if, as in this example, we clearly can’t reject the default null hypothesis while we can reject the null hypothesis stating that the effect and effect size defined in the paper are real, then ignoring the paper is an act that helps reduce noise. Reducing noise is the real reason to use data science, and nutritional science has a whole lot of residual noise as it stands.

As stated, the problem with nutritional science and bias doesn’t lie in bias driving people to ignore compelling evidence; it lies in biased and/or (data-sci wise) under-informed people failing to ignore likely spurious evidence. Most research in nutrition, even ‘gold standard’ RCT research, has some data science issues with it. Medical and nutritional education seem to touch only the very basics of data science, allowing a wide range of issues to sneak into nutritional research that should be of the highest standard. The use of epi data as outlined in this post is just a band-aid, and one with conceptual flaws, but it is a simple enough band-aid for people with any background, including medical or nutritional science, to apply, and a type of band-aid that is quite common in forensic science (though not completely uncontroversial). Use it as a band-aid for finding out if you can, or rather SHOULD, ignore a certain publication.