WU availability for Gridcoin whitelisted projects (20/Dec/17)

Here is another WU availability update for Gridcoin whitelisted projects.

Gridcoin is an open-source cryptocurrency (ticker: GRC) that securely rewards volunteer computing performed on the BOINC platform in a decentralized manner, on top of proof of stake (source: 1).

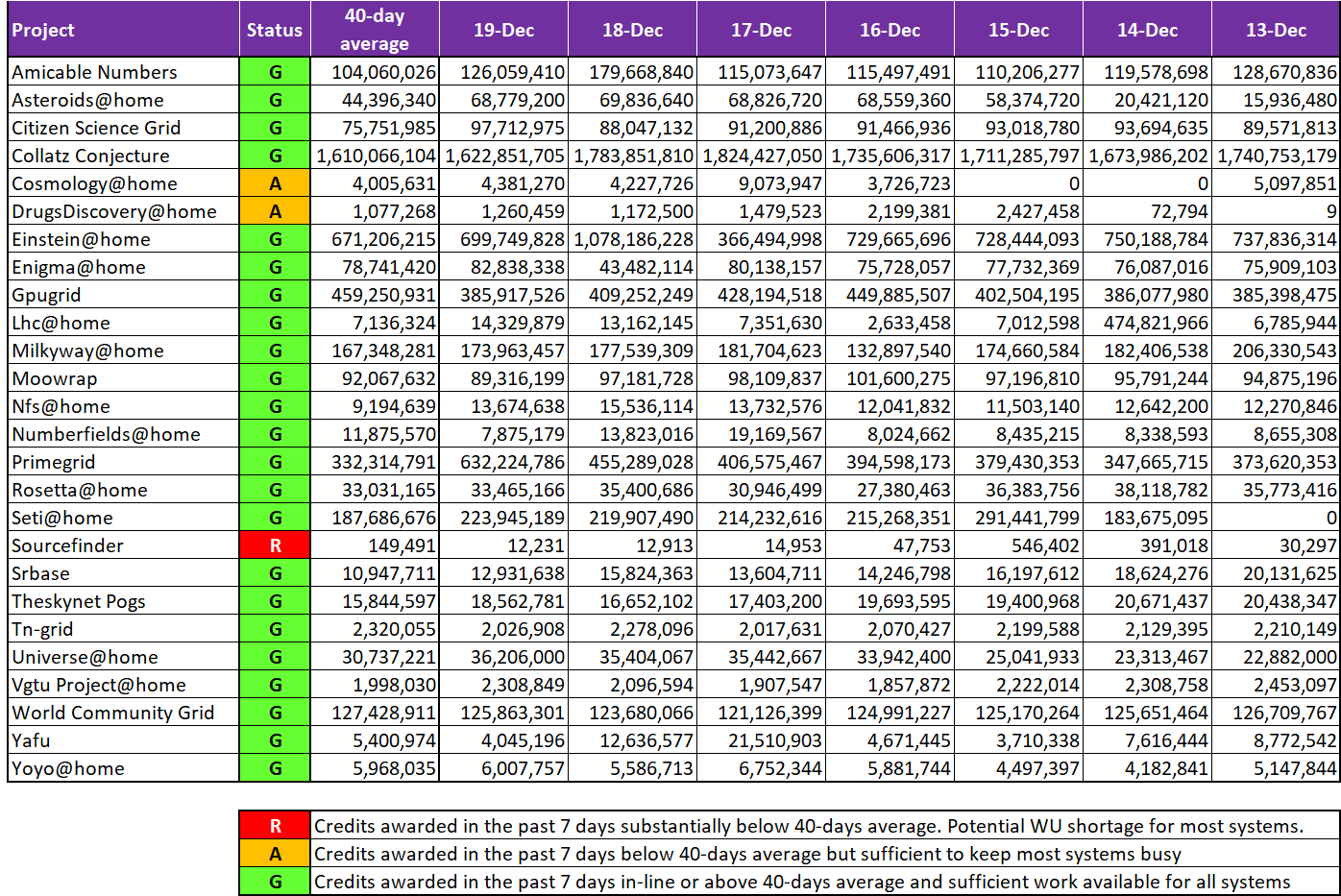

Compared to last week, DrugDiscovery@home has sufficient work-units again. On the other hand, Sourcefinder@home still suffers from insufficient WUs; its total output would be just enough to keep a few systems fully loaded.

And here is the table with all details.

(source: 2)

(1) Gridcoin

(2) BOINCStats

Thanks for reading. Follow me for more BOINC & Gridcoin related articles.

This is one thing I've thought about -- what if BOINC can't keep up with Gridcoin demand? It'd be a case of having too much of a good thing ;)

With this in mind, I wonder if eventually we could market to researchers within academia and substantially increase the number of BOINC projects in existence. That way we could also implement slightly stricter standards for whitelisted projects (esp. regarding workunit availability) while still maintaining plenty of work for GRC miners.

There will never, ever be a point where BOINC cannot keep up with Gridcoin demand, at least not until we have cured every disease by brute force, cracked every encryption scheme, mapped the entire known universe, etc. I'm dead serious.

As research moves into the modern age, bleeding-edge work in most fields requires exponentially more compute power. This will continue to be the case. If Gridcoin could ever truly provide a platform where any researcher can get any model run in a short timeframe, that would mark a new age in the history of humanity.

To put this in perspective, let's take a look at my honours project. In a group of 4, we developed a new insulin dosing regime for patients just out of open heart surgery. The model is very complex to develop, as it needs to take into account a lot of data about the current patient. The process goes like this:

Now imagine I was provided infinite compute through BOINC. We can skip step one altogether, and just make a BOINC project try every possible regime, every dosing frequency, every drug delivery method and combination thereof, and then test the outcome on thousands of virtual patients. At the end of that set of simulations, we would have the perfect insulin dosing regime for patients after open heart surgery.

This is why I am frustrated no end to see the amount of compute wasted on mining BTC. People want to improve the human condition, but don't want to help get there.

Oh, absolutely. It's true that science can generate nearly inexhaustible demand for computation. But we need infrastructure to connect that demand with Gridcoin miners. As it stands, BOINC caters best to computational projects with a consistent, long-term supply of workunits, and not all scientific projects work that way. For instance, research in my field typically consists of spates of ~10,000 simulations at a time, interrupted by "dead periods" of hours to weeks in which data is analyzed and theories are developed. Sometimes a researcher will only need computations for one particular project that occupies (let's say) one month out of their year. So there's a lot of variability.

The infrastructure for handling these kinds of computational needs is only partially assembled. However, I'm confident it's possible to put together in the not-so-distant future. The way it would work is by pooling projects together like in WCG or yoyo@home, just streamlined and on a much larger scale, i.e. something that would be readily available to thousands of scientists around the world.

On the flip side of the coin, there is already an IMMENSE amount of computation to be done that DOES generate a large and consistent stream of WUs and can continue to do so basically indefinitely. I'm thinking of projects like PrimeGrid, SETI@home, Collatz, etc. So long as those servers aren't overloaded, there's basically no limit to how many WUs they can send. Maybe that's what I was trying to get at -- wondering whether it would ever be possible to surpass the server capacity of a project.

Anyways, I think we agree on most points. The infrastructure we have now is pretty good. BOINC has been around forever. I'm just convinced that we can make it even better than it is now. You're right that the potential positive impact on science and society is immense.

Yes, scientific projects with intermittent work often choose to collaborate with World Community Grid. It's like an umbrella project covering many smaller projects. If one of them is out of work, there are plenty of others online to keep the workunits flowing. WCG is sponsored and supported by IBM; I guess it's not possible to outmatch their resources, even if WCG expands 100x next year.

Yeah, I should've done more research on WCG. IBM is a heavy-lifter, so yes you're right that we'd have to work pretty hard to overload their resources.

I'm always learning on here. Some of these thoughts might be worth condensing into a couple sentences and throwing into the whitepaper!

^^ THIS.

Computational science is the way of the future. PoW hashing is what has no real demand, apart from inflated prices on scammy, centralized exchanges. In 10 years, PoW hashing will look the way the first cars look to us today: clumsy and wasteful.

I don't think we have to worry about a shortage of projects. Take, for example, some of the WCG projects: they are taking years to complete with the computing power currently available.

I don't crunch on WCG, so that's good to know. Certainly projects like PrimeGrid can create new work indefinitely, and even SETI@home can keep running consistently as long as data keeps being generated. So it's good to have those "baseline" projects. I'm just wondering whether even those project servers have limited bandwidth that Gridcoin could potentially run up against. I don't know the technical details of how BOINC servers work, particularly how they scale with the number of users.