开始同步节点数据

在上午的文章中,我尝试了编译BitShares Core,但是编译完了总要用起来试试嘛,可惜我被从VPS踢下线了。原本计划等网络恢复正常不烦我了,我就登陆上去搞搞,结果等啊等,始终上不去。于是只好从另外一台VPS上跳上来玩了,真是烦。

witness_node

查看帮助

./witness_node --help

好多选项,看不懂,以后慢慢研究吧。

生成数据目录

./witness_node -d witness_node_data_dir

如果我们不手动指定数据目录,它会在witness_node 同级目录下生成witness_node_data_dir

数据目录中包含一个文件和两个目录

blockchain config.ini logs

看了一下config.ini,好多配置选项,昏迷。

生成数据目录的目的是生成config.ini配置文件,有了配置文件,我们就可以对witness_node进行诸多设置了。

减少内存、磁盘占用

关闭p2p日志

在config.ini中注释掉:filename=logs/p2p/p2p.log

--plugins

如果不指定--plugins参数,那么节点默认加载下列插件

- witness

- account_history

- market_history

我们可以用--plugins witness来只启用witness插件,或者用--plugins witness account_history启用witness和account_history两个插件,插件名称之前用空格分隔

account_history plugin

account_history插件可以设置如下选项

| 选项 | 值 | 说明 |

|---|---|---|

| --track-account | arg | Account ID to track history for (may specify multiple times) |

| --partial-operations | arg | Keep only those operations in memory that are related to account history tracking |

| --max-ops-per-account | arg | Maximum number of operations per account will be kept in memory |

market_history plugin

market_history 也有一些选项

| 选项 | 值 | 说明 |

|---|---|---|

| --bucket-size | arg (=[60,300,900,1800,3600,14400,86400]) | Track market history by grouping orders into buckets of equal size measured in seconds specified as a JSON array of numbers |

| --history-per-size | arg (=1000) | How far back in time to track history for each bucket size, measured in the number of buckets (default: 1000) |

| --max-order-his-records-per-market | arg (=1000) | Will only store this amount of matched orders for each market in order history for querying, or those meet the other option, which has more data (default: 1000) |

| --max-order-his-seconds-per-market | arg (=259200) | Will only store matched orders in last X seconds for each market in order history for querying, or those meet theother option, which has more data(default: 259200 (3 days)) |

听说这些选项不但影响运行时的内存占用,同样影响同步的速度,所以我计划只启用witness插件,这样就会有最小的内存占用以及同步效率。(待实践验证)

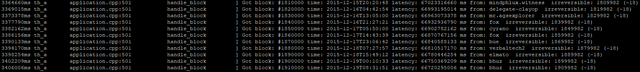

同步节点

试了半天,开始同步数据吧:

./witness_node --rpc-endpoint "127.0.0.1:8090" --plugins "witness" --replay-blockchain

其中:--rpc-endpoint "127.0.0.1:8090"开启节点 API 服务

好像似乎大概要很久。

测试

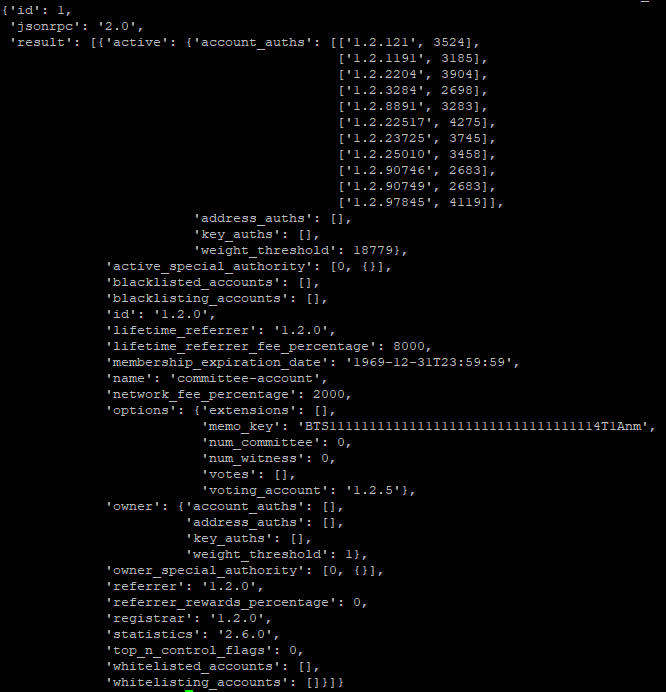

来试一下API服务

curl --data '{"jsonrpc": "2.0", "method": "call", "params": [0, "get_accounts", [["1.2.0"]]], "id": 1}' http://127.0.0.1:8090/rpc

晕,表示结果一团糟,一堆字符挤一起去了。

将结果格式化一下,这样看起来美美的:

再来

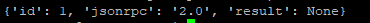

curl --data '{"jsonrpc": "2.0", "method": "call", "params": ["database", "get_block", [1000]], "id": 1}' http://127.0.0.1:8090/rpc

curl --data '{"jsonrpc": "2.0", "method": "call", "params": ["database", "get_block", [10000000]], "id": 1}' http://127.0.0.1:8090/rpc

额,急功冒进了吧,还没同步到10000000呢。

其它

测试这功夫看了一下,已经同步了300多万个块,现在一共呢接近2400万个块,照此计算貌似应该很快啊😍。不过好像前期的块中没啥数据,后期数据量大了,估计同步起来就慢了。

慢慢等吧,等它同步完,我再去深入了解一下。

之前在自己服务器上跑了一个全节点,真心吃资源。64G的内存根本不够,完全靠额外加的64G swap才跑起来。

目前来看我的需求是需要跑一个 witness ,另外提供rpc服务,供我后期跑机器人用。还需要再看看怎么优化一下参数。

额,我就8G😭

我也是好奇,你8G就能跑起来,你一定是使用了黑科技😂

还不算跑起来,等同步完,能用,才算跑起来

Nice post....

Do better again....

Thanks for sharing it should be better if you put In English thanks bro

Wow...what a concept..that was awesome.

First to last...i have read your post...@oflyhigh

I have 400.000 BTS in my wallet . Indonesia tranding ... i trust u BTS make it grow . Dont lose with NXT

Love

Nice post

thank you for sharing, good post.

好高深啊