R Programming: Text Analysis On Dr. Seuss - Fox In Socks

Hello there. In this post, I use the R programming language to do text analysis (text mining) on Dr. Seuss's Fox In Socks. The original version of this post is on my website.

Read-aloud YouTube videos of the book are also available, such as this one and this one.

Topics

- References

- Getting Started

- Wordcounts In Fox In Socks

- Bigram Counts In Fox In Socks

- A Look At Positive and Negative Words With Sentiment Analysis [Output Only]

References

- R Graphics Cookbook by Winston Chang

- Fox In Socks text file: http://ai.eecs.umich.edu/people/dreeves/Fox-In-Socks.txt

- ggplot2 multiple plots in one graph using gridExtra: http://lightonphiri.org/blog/ggplot2-multiple-plots-in-one-graph-using-gridextra

- Text Mining with R: A Tidy Approach by Julia Silge and David Robinson

- ggplot: how to change facet labels (Stack Overflow): https://stackoverflow.com/questions/3472980/ggplot-how-to-change-facet-labels

Getting Started

In R, you want to load the following libraries:

- dplyr for data manipulation

- ggplot2 for data visualization

- tidyr for data cleaning

- tidytext for word counts and sentiment analysis

To load libraries into R, use the library() function. To install a package, use install.packages("pkg_name").

```r
# Text Mining on Dr. Seuss's Fox In Socks
# Text version of the book source:
# http://ai.eecs.umich.edu/people/dreeves/Fox-In-Socks.txt
# 1) Word Counts In Fox In Socks
# 2) Bigrams in Fox In Socks
# 3) Sentiment Analysis - nrc, bing and AFINN Lexicons (Three In One Plot)
#----------------------------------

# Load libraries into R
# (install packages first with install.packages("pkg_name") if needed):
library(dplyr)
library(tidyr)
library(ggplot2)
library(tidytext)
```

Note that the online text of Fox In Socks is obtained from http://ai.eecs.umich.edu/people/dreeves/Fox-In-Socks.txt, as indicated in the comments in the code above.

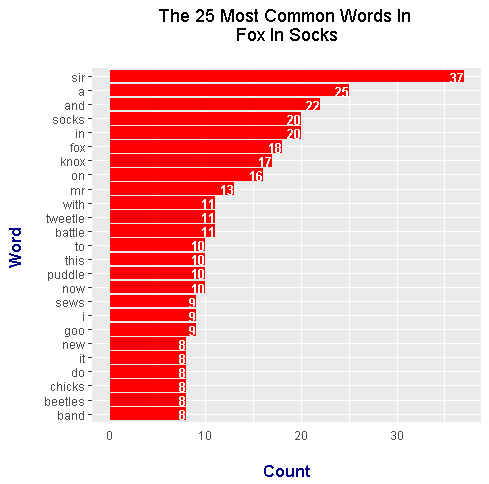

Wordcounts In Fox In Socks

With text mining/analysis, it is possible to obtain word counts from books or any other piece of text. Word counts give an idea of what a book is about and which words it features.

The text file is available online, so readLines() can read it directly from its URL. There is no need to set a working directory or to copy and paste the text.

```r
# Read the book directly from the URL, one element per line of text:
foxSocks_book <- readLines("http://ai.eecs.umich.edu/people/dreeves/Fox-In-Socks.txt")

# Preview the start of the book:
foxSocks_book_df <- tibble(Text = foxSocks_book) # tibble aka neater data frame

head(foxSocks_book_df, n = 15)
```

```
# A tibble: 15 x 1
   Text
   <chr>
 1 Fox in Socks by Dr. Seuss
 2 -------------------------
 3
 4 Fox
 5 Socks
 6 Box
 7 Knox
 8
 9 Knox in box.
10 Fox in socks.
11
12 Knox on fox in socks in box.
13
14 Socks on Knox and Knox in box.
```

Notice that the top of the text file contains the title, a line of dashes and a blank line. These are not part of the story, so they can be removed in R by keeping only the fourth line onwards.

```r
# Remove the first three lines: the title "Fox in Socks by Dr. Seuss",
# the line of dashes and a blank line:
foxSocks_book_df <- foxSocks_book_df[4:nrow(foxSocks_book_df), ]
```

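The unnest_tokens() function from the tidytext package is the first step to obtaining word counts. It splits each line of text into individual words (tokens), one word per row; by default it also converts the words to lowercase and strips punctuation.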

```r
# Unnest tokens: one word per row
foxSocks_words <- foxSocks_book_df %>%
  unnest_tokens(output = word, input = Text)

# Preview with the head() function:
head(foxSocks_words, n = 10)
```

```
# A tibble: 10 x 1
   word
   <chr>
 1 fox
 2 in
 3 socks
 4 by
 5 dr
 6 seuss
 7 fox
 8 socks
 9 box
10 knox
```
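To make the tokenization step concrete, here is a rough base-R approximation of what unnest_tokens() does with words. It is a sketch, not what tidytext actually runs internally (tidytext relies on the tokenizers package, which has more careful rules). The helper name simple_tokens() is hypothetical, and it assumes a "word" is a run of lowercase letters or apostrophes, so words like ben's stay whole.

```r
# Hypothetical helper approximating unnest_tokens() word tokenization in base R.
# Assumption: a word is a run of lowercase letters or apostrophes.
simple_tokens <- function(lines) {
  pieces <- strsplit(tolower(lines), "[^a-z']+")  # lowercase, then split on non-word characters
  words <- unlist(pieces)                          # flatten to one character vector
  words[nzchar(words)]                             # drop empty strings (blank lines, leading punctuation)
}

first_lines <- c("Fox in Socks by Dr. Seuss", "-------------------------", "",
                 "Fox", "Socks", "Box", "Knox")
simple_tokens(first_lines)
# Returns: fox in socks by dr seuss fox socks box knox
```

Note how "Dr." becomes dr and the dashed and blank lines produce no words at all.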

Common English words such as for, the, and, me and myself carry very little meaning on their own. These are called stop words. An anti join against the stop_words table from tidytext keeps only the words in Fox In Socks that are not stop words.

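```r
# Remove English stop words from Fox In Socks.
# Stop words include me, you, for, myself, he, she.
# by = "word" names the join column explicitly instead of relying on a guess:
foxSocks_words <- foxSocks_words %>%
  anti_join(stop_words, by = "word")
```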
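An anti join keeps the rows of the first table that have no match in the second. When the join is on a single column of words, the same filtering can be sketched in base R with %in%. The short stop-word vector below is a hypothetical stand-in for tidytext's stop_words table, which has more than a thousand entries.

```r
# Hypothetical mini stop-word list (a stand-in for tidytext's stop_words$word).
my_stop_words <- c("in", "on", "and", "by", "the", "me", "you")

words <- c("knox", "on", "fox", "in", "socks", "in", "box")

# Anti join on one column: keep the words that do NOT appear in the stop-word list.
kept <- words[!words %in% my_stop_words]
kept
# Returns: knox fox socks box
```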

Word counts can then be obtained from foxSocks_words with the count() function from the dplyr package.

```r
# Word counts in Fox In Socks:
foxSocks_wordcounts <- foxSocks_words %>% count(word, sort = TRUE)

# Print the top 15 words:
head(foxSocks_wordcounts, n = 15)
```

```
# A tibble: 15 x 2
   word        n
   <chr>   <int>
 1 sir        37
 2 socks      19
 3 fox        17
 4 knox       17
 5 battle     11
 6 tweetle    11
 7 puddle     10
 8 goo         9
 9 sews        9
10 band        8
11 beetles     8
12 chicks      8
13 beetle      7
14 box         7
15 broom       7
```
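Conceptually, count(word, sort = TRUE) groups identical words, tallies each group and sorts the tallies from most to least frequent. The same idea in base R is table() followed by sort(). It is sketched here on a small made-up word vector rather than on the book itself.

```r
# Small made-up vector of words (already tokenized, stop words removed).
words <- c("sir", "fox", "sir", "knox", "socks", "sir", "fox")

# table() tallies each distinct word; sort(decreasing = TRUE) puts the most frequent first.
word_counts <- sort(table(words), decreasing = TRUE)

# Convert to a data frame with the same columns as dplyr's count(): word and n.
word_counts_df <- data.frame(word = names(word_counts),
                             n = as.integer(word_counts))
word_counts_df
```

Here sir (3) comes first and fox (2) second. Ties such as knox and socks (1 each) are not guaranteed to come out in any particular order.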

Once the counts are obtained, the results can be displayed as a horizontal bar graph with ggplot2. Here is the code and output for the top twenty-five words in Fox In Socks (after filtering out the stop words).

```r
# Plot of word counts (top 25 words):
foxSocks_wordcounts[1:25, ] %>%
  mutate(word = reorder(word, n)) %>%
  ggplot(aes(word, n)) +
  geom_col(fill = "red") +
  coord_flip() +
  labs(x = "Word \n", y = "\n Count ", title = "The 25 Most Common Words In \n Fox In Socks \n") +
  geom_text(aes(label = n), hjust = 1, colour = "white", fontface = "bold", size = 3.5) +
  theme(plot.title = element_text(hjust = 0.5),
        axis.ticks.x = element_blank(),
        axis.title.x = element_text(face = "bold", colour = "darkblue", size = 12),
        axis.title.y = element_text(face = "bold", colour = "darkblue", size = 12))
```

Frequent words include sir, socks, knox, fox, tweetle and battle. Some of the top 25 words appear in both singular and plural forms, such as beetle and beetles.

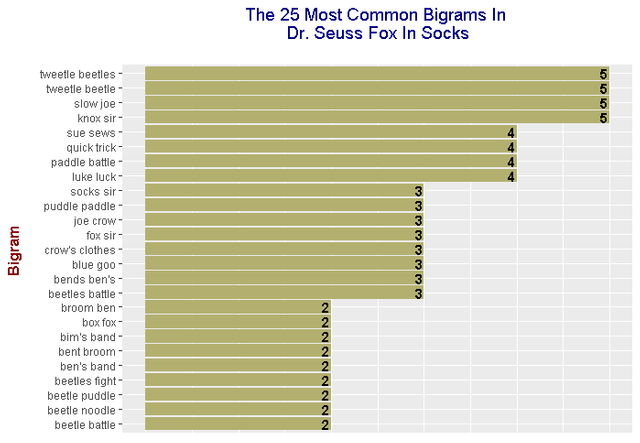
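In the plotting code, mutate(word = reorder(word, n)) is what sorts the bars by count. reorder() turns word into a factor whose levels are ordered by the mean of n within each level, smallest first. Because coord_flip() draws the first level at the bottom, the most frequent word ends up at the top. The small base-R check below uses made-up counts to show that ordering.

```r
# Made-up counts for three words.
words <- c("box", "sir", "fox")
counts <- c(7, 37, 17)

# reorder() (from base R's stats package) creates a factor whose levels
# are sorted by the mean of `counts` for each word, in increasing order.
ordered_words <- reorder(words, counts)
levels(ordered_words)
# "box" "fox" "sir"
```

Without reorder(), ggplot2 would use alphabetical order for the bars.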

Bigram Counts In Fox In Socks

Instead of counting single words, you can count two-word phrases (bigrams).

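```r
# 2) Bigrams (two-word phrases) in Fox In Socks
foxSocks_bigrams <- foxSocks_book_df %>%
  unnest_tokens(bigram, input = Text, token = "ngrams", n = 2) %>%
  # Newer tidytext versions return NA for lines with fewer than two words; drop those rows
  filter(!is.na(bigram))

# Look at the bigrams:
foxSocks_bigrams
```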

```
# A tibble: 847 x 1
   bigram
   <chr>
 1 fox in
 2 in socks
 3 socks by
 4 by dr
 5 dr seuss
 6 seuss fox
 7 fox socks
 8 socks box
 9 box knox
10 knox knox
# ... with 837 more rows
```
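A bigram is each word paired with the word that follows it. The output above includes pairs such as seuss fox and fox socks, which cross line breaks. That suggests the lines were treated as one continuous stream of words, which was how older tidytext versions tokenized n-grams. Newer versions appear to tokenize each row separately. Assuming the continuous-stream behaviour, here is a base-R sketch with a hypothetical helper, simple_bigrams(), that pastes each word to its successor.

```r
# Hypothetical helper: bigrams over one continuous stream of words.
# Assumption (matching the output above): line breaks do not split bigrams.
simple_bigrams <- function(lines) {
  words <- unlist(strsplit(tolower(lines), "[^a-z']+"))  # tokenize every line
  words <- words[nzchar(words)]                          # drop empty strings
  if (length(words) < 2) return(character(0))            # no pairs possible
  # Pair word i with word i + 1 (paste() separates them with a space).
  paste(words[-length(words)], words[-1])
}

simple_bigrams(c("Fox in Socks by Dr. Seuss", "", "Fox", "Socks"))
# Returns: "fox in" "in socks" "socks by" "by dr" "dr seuss" "seuss fox" "fox socks"
```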

Removing stop words from the bigrams requires a bit more work, using the tidyr and dplyr packages together. First, the separate() function from tidyr splits each bigram into its two words. Next, two filter() calls remove any bigram in which either word is a stop word. After filtering, the remaining bigrams are counted.

```r
# Remove stop words from the bigrams with tidyr's separate() function
# along with dplyr's filter() function:
foxSocks_bigrams_sep <- foxSocks_bigrams %>%
  separate(bigram, c("word1", "word2"), sep = " ")

foxSocks_bigrams_filt <- foxSocks_bigrams_sep %>%
  filter(!word1 %in% stop_words$word) %>%
  filter(!word2 %in% stop_words$word)

# Filtered bigram counts:
foxSocks_bigrams_counts <- foxSocks_bigrams_filt %>%
  count(word1, word2, sort = TRUE)

head(foxSocks_bigrams_counts, n = 15)
```

```
# A tibble: 15 x 3
   word1   word2       n
   <chr>   <chr>   <int>
 1 knox    sir         5
 2 slow    joe         5
 3 tweetle beetle      5
 4 tweetle beetles     5
 5 luke    luck        4
 6 paddle  battle      4
 7 quick   trick       4
 8 sue     sews        4
 9 beetles battle      3
10 bends   ben's       3
11 blue    goo         3
12 crow's  clothes     3
13 fox     sir         3
14 joe     crow        3
15 puddle  paddle      3
```
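The separate, filter and count steps can also be sketched in base R. Split each bigram at the space, drop any pair in which either word is in a stop-word list, then tally the pairs that remain. The bigrams and the short stop-word list below are hypothetical examples, not the book's data.

```r
# Hypothetical bigrams and a small stand-in stop-word list.
bigrams <- c("knox in", "in box", "slow joe", "tweetle beetle", "slow joe", "on knox")
my_stop_words <- c("in", "on", "and", "by")

# Like tidyr::separate(): split each bigram into word1 and word2 at the space.
parts <- do.call(rbind, strsplit(bigrams, " ", fixed = TRUE))
word1 <- parts[, 1]
word2 <- parts[, 2]

# Like the two filter() calls: keep pairs where neither word is a stop word.
keep <- !(word1 %in% my_stop_words) & !(word2 %in% my_stop_words)

# Like unite() + count(sort = TRUE): rebuild each bigram and tally it.
bigram_counts <- sort(table(paste(word1[keep], word2[keep])), decreasing = TRUE)
bigram_counts
```

Only slow joe (twice) and tweetle beetle (once) survive, because every other pair contains in or on.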

The separated words can be joined back together with tidyr's unite() function.

```r
# Unite the words with the unite() function:
foxSocks_bigrams_counts <- foxSocks_bigrams_counts %>%
  unite(bigram, word1, word2, sep = " ")

foxSocks_bigrams_counts
```

```
# A tibble: 201 x 2
   bigram              n
 * <chr>           <int>
 1 knox sir            5
 2 slow joe            5
 3 tweetle beetle      5
 4 tweetle beetles     5
 5 luke luck           4
 6 paddle battle       4
 7 quick trick         4
 8 sue sews            4
 9 beetles battle      3
10 bends ben's         3
# ... with 191 more rows
```

With the words reunited, the results can be displayed with ggplot2.

```r
# We can now make a plot of the bigram counts.
# ggplot2 plot of the top 25 bigrams from Fox In Socks:
foxSocks_bigrams_counts[1:25, ] %>%
  ggplot(aes(reorder(bigram, n), n)) +
  geom_col(fill = "#b3b06f") +
  coord_flip() +
  labs(x = "Bigram \n", y = "\n Count ", title = "The 25 Most Common Bigrams In \n Dr. Seuss Fox In Socks \n") +
  geom_text(aes(label = n), hjust = 1.2, colour = "black", fontface = "bold") +
  theme(plot.title = element_text(hjust = 0.5, colour = "darkblue", size = 14),
        axis.title.x = element_blank(),
        axis.ticks.x = element_blank(),
        axis.text.x = element_blank(),
        axis.title.y = element_text(face = "bold", colour = "darkred", size = 12))
```

The bigrams tied for first place at a count of 5 are:

- tweetle beetles

- tweetle beetle

- slow joe

- knox sir

Notice how many of these bigrams rhyme. The bigrams in the plot are a bit silly, which is appropriate for a children's book. Getting kids used to rhymes helps build their reading and listening skills.

A Look At Positive and Negative Words With Sentiment Analysis

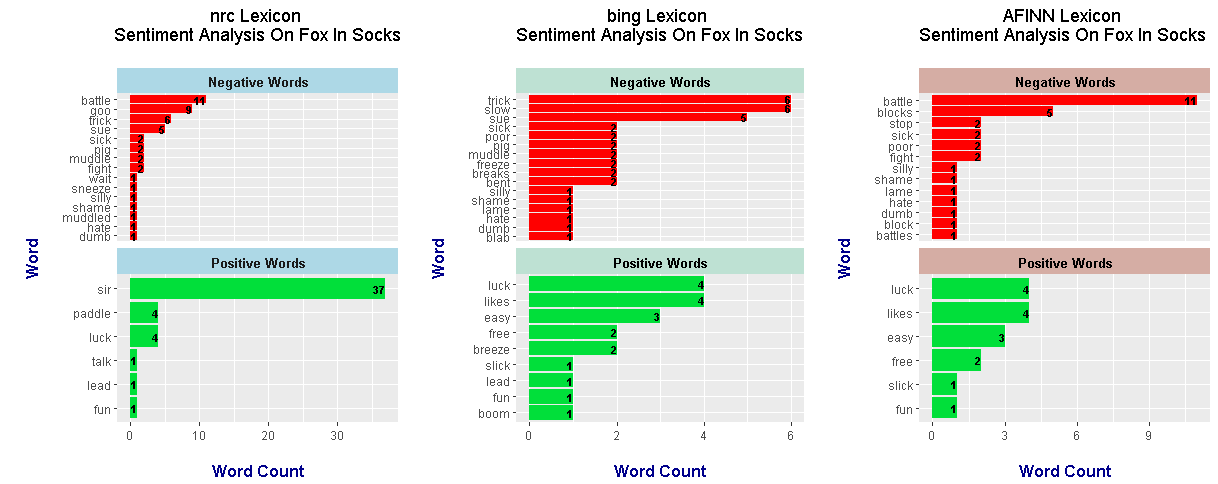

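A big part of sentiment analysis is identifying the positive and negative words in a text. The three main lexicons that (subjectively) score and/or categorize words are nrc, bing and AFINN. The nrc lexicon assigns words to categories (including positive and negative), bing labels words as either positive or negative, and AFINN gives each word a score from -5 to 5.

To save time and space, the code for this section is not shown; only the output is shown.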

In the nrc lexicon results, the top positive words are sir, paddle and luck. I think sir is considered positive because it is used as a sign of respect. I am not sure why paddle is positive. Negative words from the nrc lexicon include battle, goo, trick and sue. Note that lexicons do not handle context well. Sue can be a verb or a name. In Fox In Socks it is a name (as in the bigram sue sews), but nrc treats it as the negative verb.

The bing lexicon does not recognize battle as a negative word, but it does recognize slow, poor and freeze as negative. Positive words from the bing lexicon include luck, likes, easy, free and breeze. Sir is not among them.

In the AFINN lexicon results, negative words include battle, blocks, stop, sick, poor and fight. The positive words do not include sir, but they do include luck, likes, easy, free, slick and fun.

According to bing and AFINN, Fox In Socks is more negative than positive when looking at word counts (after filtering out stop words). Because nrc considers sir (the most frequent word) positive, nrc rates the book as more positive than negative. These lexicons are subjective and imperfect, but in this case some information is better than none.
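The code behind the results above is not shown. As a rough illustration of how a lexicon-based comparison works, here is a toy sketch. The word counts and both mini-lexicons are hypothetical, with labels and scores made up for illustration; they are not the real bing or AFINN entries or the book's real counts. A bing-style lexicon labels words positive or negative, so net sentiment is positive occurrences minus negative occurrences. An AFINN-style lexicon gives each word an integer score, and the score times the count is summed.

```r
# Made-up word counts (not the real Fox In Socks counts).
word_counts <- c(luck = 4, slow = 5, easy = 2, poor = 3, battle = 11)

# Hypothetical bing-style lexicon: each word is labelled positive or negative.
# Like bing in the results above, it does not contain battle.
bing_like <- c(luck = "positive", easy = "positive",
               slow = "negative", poor = "negative")

# Hypothetical AFINN-style lexicon: each word gets an integer score (made-up values).
afinn_like <- c(luck = 3, easy = 1, poor = -2, battle = -1)

# bing-style: net sentiment = (positive occurrences) - (negative occurrences).
matched <- intersect(names(word_counts), names(bing_like))
labels <- bing_like[matched]
bing_net <- sum(word_counts[matched][labels == "positive"]) -
  sum(word_counts[matched][labels == "negative"])

# AFINN-style: sum of score * count over words found in the lexicon.
scored <- intersect(names(word_counts), names(afinn_like))
afinn_total <- sum(word_counts[scored] * afinn_like[scored])

c(bing_net = bing_net, afinn_total = afinn_total)
# bing_net = -2, afinn_total = -3 for these made-up numbers
```

Words missing from a lexicon simply do not count. That is why the three lexicons can disagree about the same text.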