Node.js Tutorial: How to Create Read and Write Streams Manually and Automatically for Big Files

What Will I Learn?

You will learn how to create a read stream and a write stream, how to listen for incoming data with an event, and how to write a file chunk by chunk.

- How to create a read stream with the 'fs' module.

- How to write a file using a write stream.

- How to use pipe to write chunks automatically.

Requirements

- Node.js must be installed on your device.

- Knowledge of the JavaScript language.

- Basic knowledge of the command line.

- An editor, for example Notepad++.

Difficulty

- Basic

Tutorial Contents

In this tutorial I am going to talk about streams and buffers: how to use createReadStream to read a big file chunk by chunk, how to write that big file on the same principle, and how to send it to the client with pipe. So let's start.

Readable Streams

Streams are collections of data — just like arrays or strings. The difference is that streams might not be available all at once, and they don’t have to fit in memory. This makes streams really powerful when working with large amounts of data, or data that’s coming from an external source one chunk at a time.

A stream delivers its data in chunks, and the point is to reduce the load on memory. Reading a file with readFile loads the whole content at once, which takes a lot of memory for a big file. With a read stream the content arrives in pieces, so only a small part is held in memory at any time. First we will see how to use read streams, then I will give an example with a server to show how they help in practice.
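
The difference between the two approaches can be made visible with a small experiment. This is only a sketch: measureStream is a hypothetical helper name, the 1 MiB throwaway file in the temp directory stands in for the story file, and readFileSync is used as the synchronous form of readFile.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: reads a file through a stream and reports how many
// chunks arrived, how big the biggest one was, and the total size.
function measureStream(filePath) {
  return new Promise(function (resolve, reject) {
    let chunks = 0;
    let largest = 0;
    let total = 0;
    fs.createReadStream(filePath)
      .on('data', function (chunk) {
        chunks += 1;
        total += chunk.length;
        largest = Math.max(largest, chunk.length);
      })
      .on('error', reject)
      .on('end', function () {
        resolve({ chunks: chunks, largest: largest, total: total });
      });
  });
}

// Throwaway 1 MiB file so the sketch runs without story.txt (it is not deleted).
const demoFile = path.join(os.tmpdir(), 'stream-demo-' + process.pid + '.txt');
fs.writeFileSync(demoFile, 'a'.repeat(1024 * 1024));

// readFileSync returns the entire file as one Buffer.
const whole = fs.readFileSync(demoFile);
console.log('readFile held', whole.length, 'bytes at once');

// The stream never hands over more than one chunk at a time.
measureStream(demoFile).then(function (stats) {
  console.log('stream held at most', stats.largest, 'bytes at once');
  console.log('chunks:', stats.chunks, 'total bytes:', stats.total);
});
```

Both approaches see the same total number of bytes; what changes is how much of the file the program has to hold at any one moment.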

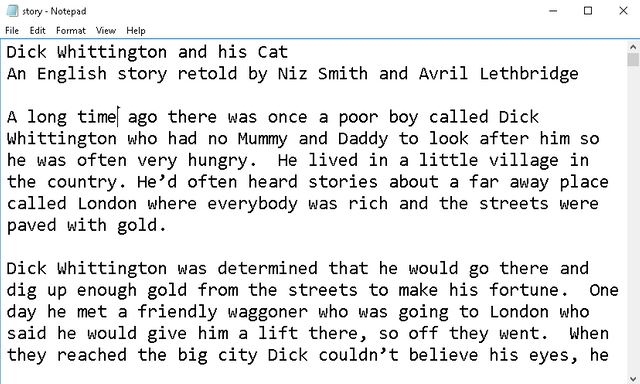

To use read streams we must load the 'fs' module, which provides an API for interacting with the file system. With the code var fs = require('fs') I get the library in a variable named 'fs'. I have a file to read named 'story.txt'. It is a big file containing many stories, to give you a realistic example of streams, and this is the file:

Now I want to define a variable to hold the read stream for this file. I will call it 'readStreamV', and this is the code:

var readStreamV = fs.createReadStream() This method is usually called with two parameters. The first is the file name with its extension, for example 'story.txt'. The second is either an options object or, as here, just the encoding as a string, which for my file is 'utf8'. As I mentioned in the previous tutorial, there are many encodings that you can read about on Wikipedia. Sometimes we need to specify the directory with '__dirname', or with './' to signify that the file is in the same directory. The call looks like this: var readStreamV = fs.createReadStream('story.txt', 'utf8')

Creating the stream starts reading the file. To do something with the chunks we need an event: the stream emits a 'data' event for each new chunk that arrives from the file, and to show it I will print a message each time:

readStreamV.on('data', function(chunk){

console.log('new chunk ' );

})

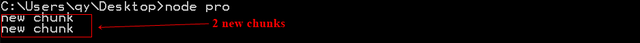

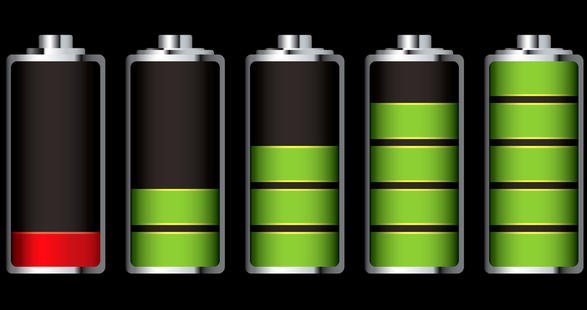

This code prints a message for every new chunk received. I will execute it to see the result:

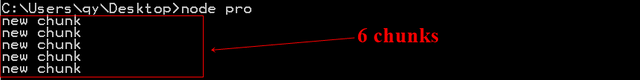

Here two chunks were received, which means the file was divided into 2 parts, each part called a chunk, so that, as I said, less memory is used at a time. I will copy and paste the content many times and see what happens.

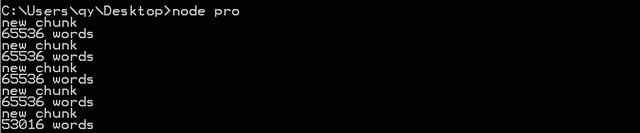

As you can see in the picture, 6 chunks were received. If the whole content were read as a single chunk it would take a lot of memory, but handling it piece by piece is quick and light. Now I will print the length of each chunk with console.log(chunk.length + ' words ' ); Despite the label in this message, length is a property of String that gives the number of characters in the string, not the number of words, and this is the result:

So we receive a new chunk each time 65536 characters have been read (65536 bytes, or 64 KiB, is the default buffer size of a file read stream), and the last chunk has just 53016 characters. To understand more, I now print the chunk itself on the console with console.log(chunk); and this is the result:
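
The chunk sizes can be explored with a short sketch. It assumes a throwaway ASCII file of 5 * 65536 + 53016 = 380696 characters, which matches the six chunks counted above. chunkLengths is a hypothetical helper, and highWaterMark is the stream option that sets the buffer size.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: resolves with the length of every chunk read from filePath.
function chunkLengths(filePath, options) {
  return new Promise(function (resolve, reject) {
    const lengths = [];
    fs.createReadStream(filePath, options)
      .on('data', function (chunk) { lengths.push(chunk.length); })
      .on('error', reject)
      .on('end', function () { resolve(lengths); });
  });
}

// Throwaway ASCII file with 5 * 65536 + 53016 = 380696 characters (not deleted).
const sample = path.join(os.tmpdir(), 'chunk-demo-' + process.pid + '.txt');
fs.writeFileSync(sample, 'x'.repeat(5 * 65536 + 53016));

// Default buffer size for file read streams: 64 KiB.
chunkLengths(sample, { encoding: 'utf8' }).then(function (lengths) {
  console.log('default buffer, chunk lengths:', lengths);
});

// A smaller buffer gives more, smaller chunks.
chunkLengths(sample, { encoding: 'utf8', highWaterMark: 16 * 1024 }).then(function (lengths) {
  console.log('16 KiB buffer, number of chunks:', lengths.length);
});
```

The character count equals the byte count here only because the sample is pure ASCII. With multi-byte UTF-8 text, a 65536-byte read can yield fewer characters.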

Writable Streams

Just as you can read a big file this way, you can also write a big file chunk by chunk, with the same benefit of using less memory and working quickly. For now I want to explain just the code and how to use it. After that I will build a real application that sends a big file from a server.

To give you a picture of how the operation works, I propose the idea of a battery:


The buffer fills up like a battery charging. Data read from the file is collected in the buffer, and when the buffer is full its content is sent on as a chunk, which is passed as the parameter to the handler registered with the 'on' method. To try it, I want to write a copy of story.txt, so let's do it.

var createStreamV = fs.createWriteStream('storyCopy.txt')

I have created a variable named 'createStreamV' using the 'createWriteStream' method of the 'fs' module, which takes the name of the file you want to write as its parameter. This code creates the file, but it is still empty. To fill it we must write to it inside the 'data' handler, and this is the code:

var fs = require('fs');

var readStreamV = fs.createReadStream('story.txt', 'utf8');

var createStreamV = fs.createWriteStream('storyCopy.txt');

readStreamV.on('data', function(chunk){

console.log('new chunk');

createStreamV.write(chunk);

});

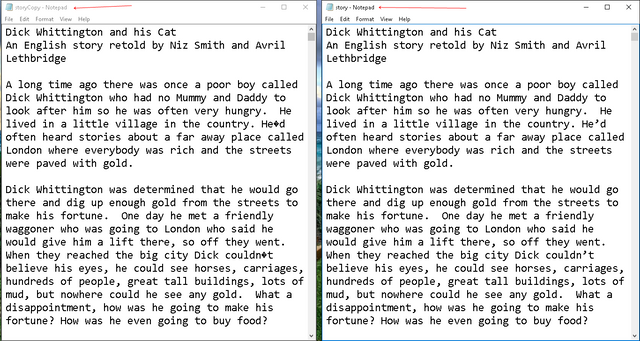

Let's go step by step. First I open the story as a read stream and keep it in the 'readStreamV' variable. Then I create another variable, 'createStreamV', a write stream for another file named storyCopy with the txt extension. When the 'data' event fires, the handler registered with the on method receives the chunk as its parameter. I print a message when the chunk arrives and write the chunk into the storyCopy file. If I execute it:

Then this is the result: the file and its copy.

So with the code var writeStr = fs.createWriteStream('name.ext'); you can create your file, and with writeStr.write('Here the file or the chunk as my example') you can write content into it.
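
The manual copy can be taken one step further. The following is a sketch rather than the tutorial's own code: copyByChunks is a hypothetical helper that additionally closes the copy with end() once reading is done, waits for the 'finish' event, and pauses reading whenever write() reports that the writer's buffer is full.

```javascript
const fs = require('fs');

// Hypothetical helper: copies src to dest chunk by chunk and resolves
// once the copy has been completely written.
function copyByChunks(src, dest) {
  return new Promise(function (resolve, reject) {
    const reader = fs.createReadStream(src);
    const writer = fs.createWriteStream(dest);

    reader.on('data', function (chunk) {
      // write() returns false when the writer's internal buffer is full.
      if (!writer.write(chunk)) {
        reader.pause();
        // 'drain' signals that the writer can accept more data again.
        writer.once('drain', function () { reader.resume(); });
      }
    });

    // No more data to read: tell the writer to flush and close the file.
    reader.on('end', function () { writer.end(); });

    // 'finish' fires after end() once all data has been handed to the file system.
    writer.on('finish', resolve);

    reader.on('error', reject);
    writer.on('error', reject);
  });
}
```

A call such as copyByChunks('story.txt', 'storyCopy.txt').then(function () { console.log('copy finished'); }) would then report when the copy is complete.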

The Pipe

So far the data is collected in a buffer and sent as a chunk. We listen for the 'data' event with the 'on' method, and when a chunk arrives we write it to the write stream. To do all of this automatically we use pipe: it reads from the read stream and writes to the write stream for us, and this is the code:

var readStreamV = fs.createReadStream('story.txt', 'utf8');

var createStreamV = fs.createWriteStream('storyCopy.txt', 'utf8');

readStreamV.pipe(createStreamV);

So first we open the story for reading, then we open the copy for writing, and then we pipe the read stream into the write stream. The result is the same as listening for the 'data' event and calling the 'write' method ourselves.
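
One thing pipe does not do is pass errors from one stream to the other. A possible alternative, assuming Node.js 10 or later, is the pipeline function of the 'stream' module, which connects the streams like pipe and calls a single callback on success or failure. copyWithPipeline is a hypothetical helper name used only for this sketch.

```javascript
const fs = require('fs');
const { pipeline } = require('stream');

// Hypothetical helper: copies src to dest and resolves when done,
// or rejects if either stream fails (for example, src does not exist).
function copyWithPipeline(src, dest) {
  return new Promise(function (resolve, reject) {
    pipeline(
      fs.createReadStream(src),
      fs.createWriteStream(dest),
      function (err) {
        if (err) {
          reject(err);
        } else {
          resolve();
        }
      }
    );
  });
}
```

It could be used as copyWithPipeline('story.txt', 'storyCopy.txt').then(function () { console.log('done'); }).catch(function (err) { console.error('copy failed:', err.message); }).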

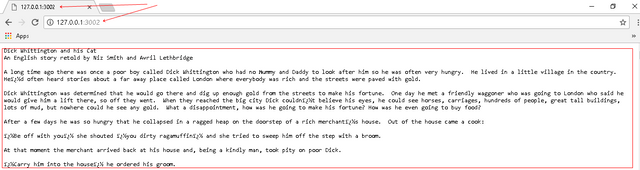

Now I want to build a real application. The idea is that the client makes a request to read the story, and since the story is a big file I want to use streams: I read the content and pipe it to the response instead of to storyCopy. For that I need to create a server (you can read how to create a server in the tutorial by @alfarisi94). We need the 'http' module, which we get with var http = require('http'), and then I create the server with var server = http.createServer(function(req, res){

res.writeHead(200,{'Content-Type': 'text/plain'});

});

The function receives the request and the response as parameters. I write the response head with the status code 200, which means 'OK', and set the content type to plain text. After that we read our file with the same code as before, var readStream = fs.createReadStream('story.txt', 'utf8'), and the final step is to pipe the stream into the response, to answer the client with the file requested, with readStream.pipe(res); These two lines go inside the request handler, before the closing }); so that res is available. Finally we make the server listen, for example on port 3002 and the local host IP 127.0.0.1, with server.listen(3002, '127.0.0.1'); Then we execute it and see the result:

And in this way we sent a big file chunk by chunk in a real application with the 'pipe' method.
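
Put together, the server pieces described above might look like the following sketch. The factory function createStoryServer and the error handling are additions for illustration, not part of the tutorial code: the head is written only once the file has been opened, so that a missing file can still be answered with an error status.

```javascript
const http = require('http');
const fs = require('fs');

// Hypothetical factory: returns a server that streams filePath to every client.
function createStoryServer(filePath) {
  return http.createServer(function (req, res) {
    const readStream = fs.createReadStream(filePath, 'utf8');

    // The file could be opened: send the head, then pipe the chunks to the client.
    readStream.on('open', function () {
      res.writeHead(200, { 'Content-Type': 'text/plain' });
      readStream.pipe(res);
    });

    readStream.on('error', function (err) {
      if (!res.headersSent) {
        // Nothing sent yet, so an error status can still be returned.
        res.writeHead(500, { 'Content-Type': 'text/plain' });
        res.end('Could not read the file');
      } else {
        // The failure happened mid-transfer: abort the response.
        res.destroy(err);
      }
    });
  });
}

// Usage with the values from the tutorial:
// createStoryServer('story.txt').listen(3002, '127.0.0.1');
```

With the usage line uncommented and the script running, opening http://127.0.0.1:3002 in a browser should show the story.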

Curriculum

- Node Js Tutorial Read , Write and Edit files and directories by fs module

- Node JS Tutorial How To Use Event Emitter By Events Module

Posted on Utopian.io - Rewarding Open Source Contributors
